The Intel 8086 microprocessor (1978) revolutionized computing by founding the x86 architecture that continues to this day. One of the lesser-known features of the 8086 is the "hold" functionality, which allows an external device to temporarily take control of the system's bus. This feature was most important for supporting the 8087 math coprocessor chip, which was an option on the IBM PC; the 8087 used the bus hold so it could interact with the system without conflicting with the 8086 processor.

This blog post explains in detail how the bus hold feature is implemented in the processor's logic. (Be warned that this post is a detailed look at a somewhat obscure feature.) I've also found some apparently undocumented characteristics of the 8086's hold acknowledge circuitry, designed to make signal transitions faster on the shared control lines.

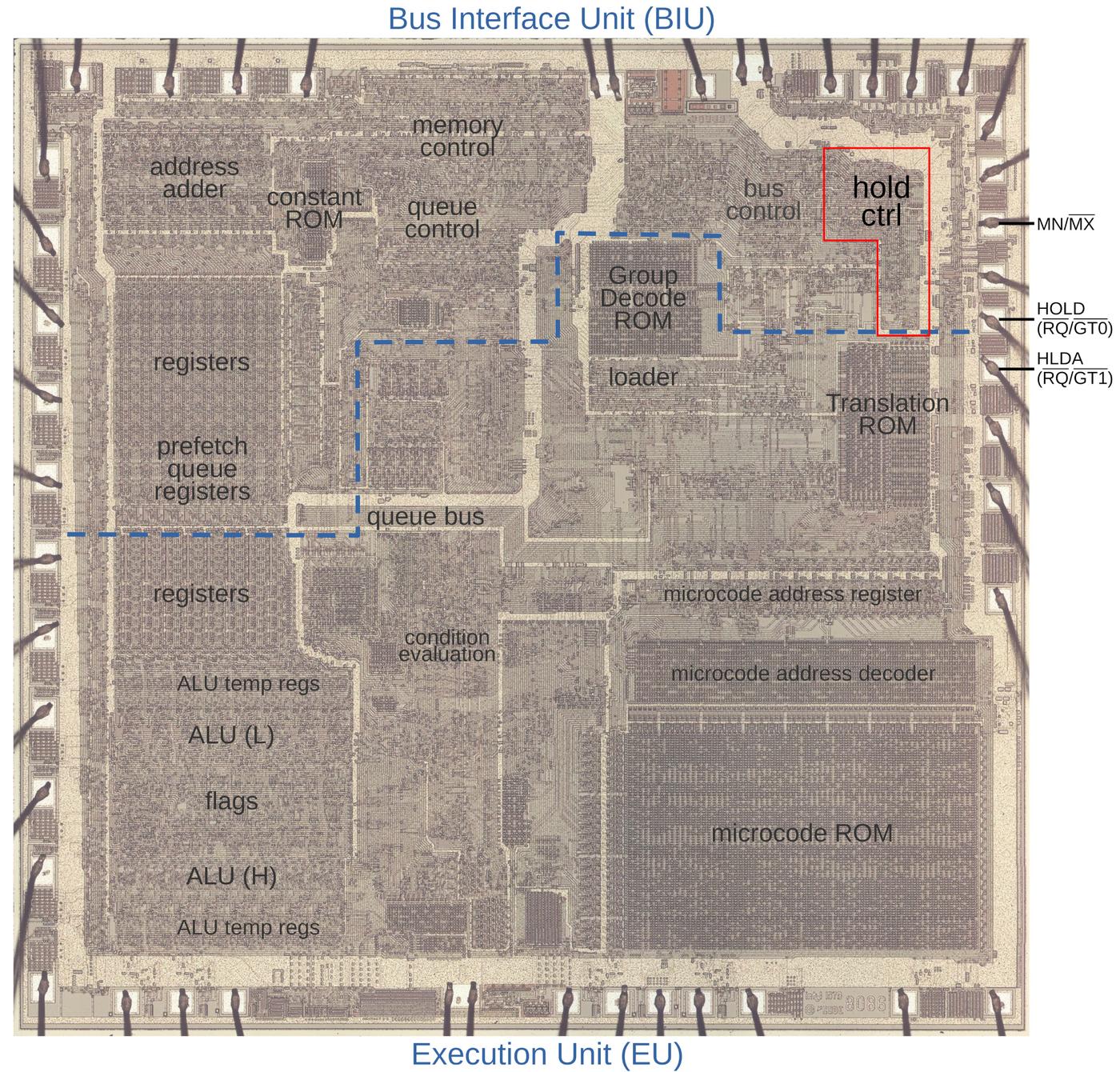

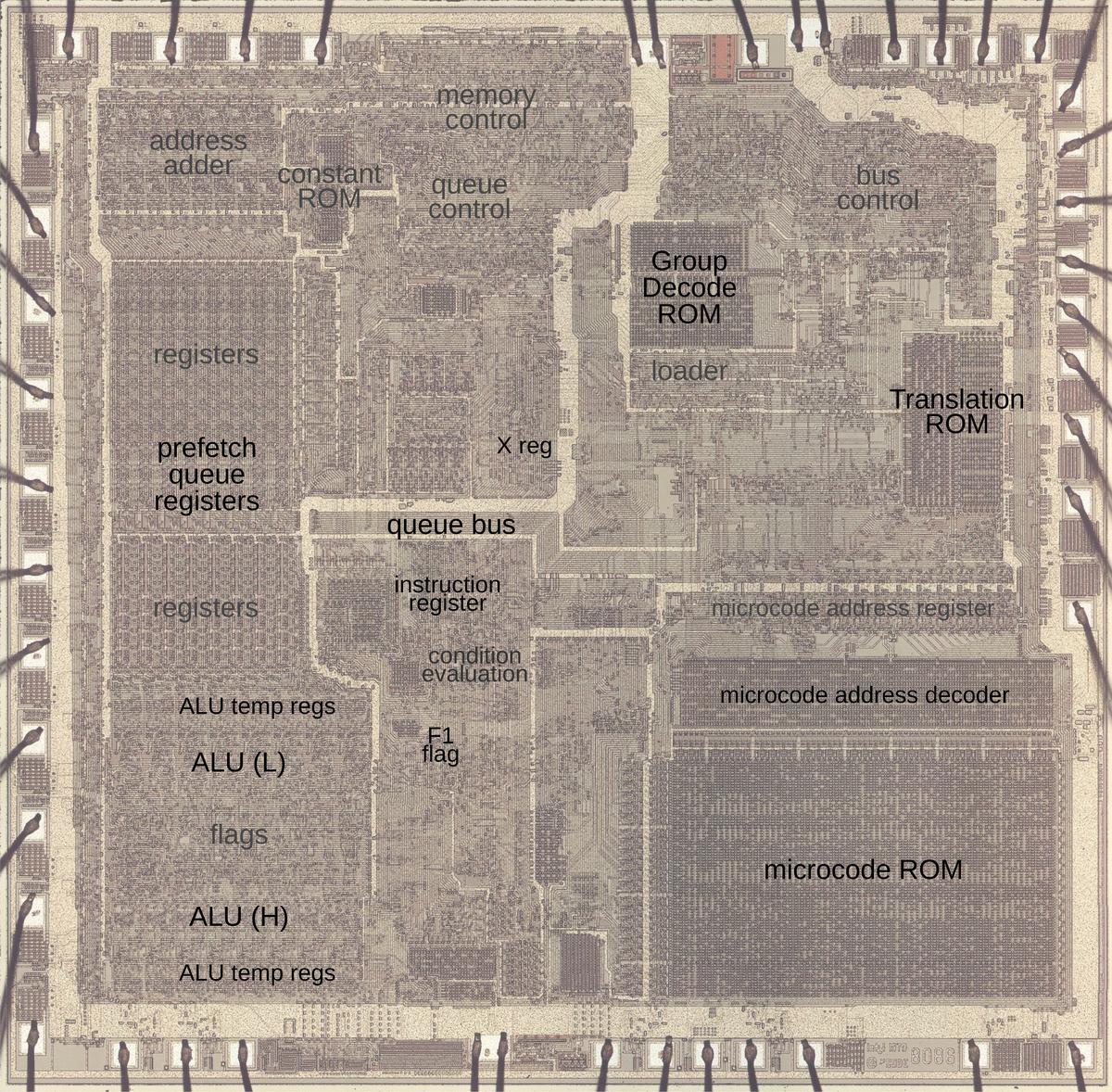

The die photo below shows the main functional blocks of the 8086 processor. In this image, the metal layer on top of the chip is visible, while the silicon and polysilicon underneath are obscured. The 8086 is partitioned into a Bus Interface Unit (upper) that handles bus traffic, and an Execution Unit (lower) that executes instructions. The two units operate mostly independently, which will turn out to be important. The Bus Interface Unit handles read and write operations as requested by the Execution Unit. The Bus Interface Unit also prefetches instructions that the Execution Unit uses when it needs them. The hold control circuitry is highlighted in the upper right; it takes a nontrivial amount of space on the chip. The square pads around the edge of the die are connected by tiny bond wires to the chip's 40 external pins. I've labeled the MN/MX, HOLD, and HLDA pads; these are the relevant signals for this post.

How bus hold works

In an 8086 system, the processor communicates with memory and I/O devices over a bus consisting of address and data lines along with various control signals. For high-speed data transfer, it is useful for an I/O device to send data directly to memory, bypassing the processor; this is called DMA (Direct Memory Access). Moreover, a co-processor such as the 8087 floating point unit may need to read data from memory. The bus hold feature supports these operations: it is a mechanism for the 8086 to give up control of the bus, letting another device use the bus to communicate with memory. Specifically, an external device requests a bus hold and the 8086 stops putting electrical signals on the bus and acknowledges the bus hold. The other device can now use the bus. When the other device is done, it signals the 8086, which then resumes its regular bus activity.

Most things in the 8086 are more complicated than you might expect, and the bus hold feature is no exception, largely due to the 8086's minimum and maximum modes. The 8086 can be designed into a system in one of two ways (minimum mode and maximum mode) that redefine the meanings of the 8086's external pins. Minimum mode is designed for simple systems and gives the control pins straightforward meanings such as indicating a read versus a write. Minimum mode provides bus signals that are similar to those of the earlier 8080 microprocessor, making migration to the 8086 easier. On the other hand, maximum mode is designed for sophisticated, multiprocessor systems and encodes the control signals to provide richer system information.

In more detail, minimum mode is selected if the MN/MX pin is wired high, while maximum mode is selected if the MN/MX pin is wired low. Nine of the chip's pins have different meanings depending on the mode, but only two pins are relevant to this discussion. In minimum mode, pin 31 has the function HOLD, while pin 30 has the function HLDA (Hold Acknowledge). In maximum mode, pin 31 has the function RQ/GT0, while pin 30 has the function RQ/GT1.

I'll start by explaining how a hold operation works in minimum mode.

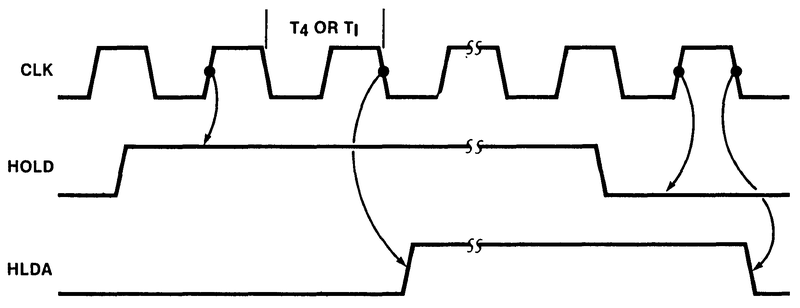

When an external device wants to use the bus, it pulls the HOLD pin high. At the end of the current bus cycle, the 8086 acknowledges the hold request by pulling HLDA high. The 8086 also puts its bus output pins into "tri-state" mode, in effect disconnecting them electrically from the bus. When the external device is done, it pulls HOLD low and the 8086 regains control of the bus. Don't worry about the details of the timing below; the key point is that a device pulls HOLD high and the 8086 responds by pulling HLDA high.

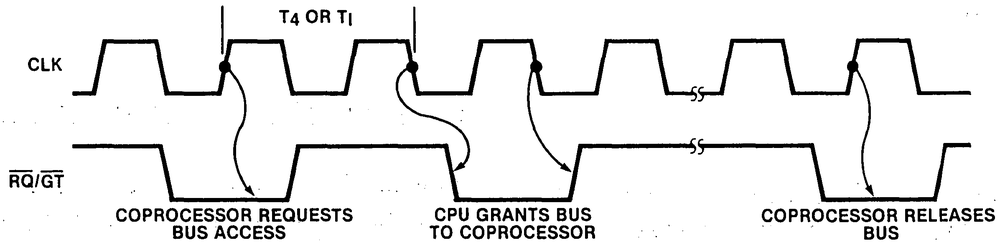

The 8086's maximum mode is more complex, allowing two other devices to share the bus by using a priority-based scheme. Maximum mode uses two bidirectional signals, RQ/GT0 and RQ/GT1.[2] When a device wants to use the bus, it issues a pulse on one of the signal lines, pulling it low. The 8086 responds by pulsing the same line. When the device is done with the bus, it issues a third pulse to inform the 8086. The RQ/GT0 line has higher priority than RQ/GT1, so if two devices request the bus at the same time, the RQ/GT0 device wins and the RQ/GT1 device needs to wait.[1] Keep in mind that the RQ/GT lines are bidirectional: the 8086 and the external device both use the same line for signaling.

The bus hold does not completely stop the 8086. The hold operation stops the Bus Interface Unit, but the Execution Unit will continue executing instructions until it needs to perform a read or write, or it empties the prefetch queue. Specifically, the hold signal blocks the Bus Interface Unit from starting a memory cycle and blocks an instruction prefetch from starting.
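A toy model of this behavior helps show why a hold doesn't freeze the processor immediately. The sketch assumes the 8086's six-byte prefetch queue, no prefetching during the hold, and that an instruction runs only if all of its bytes are already queued; the `Instr` type and the instruction mix are hypothetical.

```python
from dataclasses import dataclass

QUEUE_SIZE = 6  # the 8086's prefetch queue holds six bytes


@dataclass
class Instr:
    length: int           # bytes consumed from the prefetch queue (hypothetical)
    touches_memory: bool  # whether execution needs a bus read or write


def run_during_hold(queue_bytes: int, program: list) -> int:
    """Count instructions the Execution Unit finishes while the BIU is held.

    Simplified model: no prefetching happens during the hold, and an
    instruction runs only if all of its bytes are already in the queue.
    """
    executed = 0
    for instr in program:
        if instr.length > queue_bytes:
            break  # queue ran dry: the held BIU can't fetch more bytes
        if instr.touches_memory:
            break  # stalls at its memory access until the hold ends
        queue_bytes -= instr.length
        executed += 1
    return executed


program = [Instr(2, False), Instr(2, False), Instr(3, False)]
print(run_during_hold(QUEUE_SIZE, program))  # 2: the third instruction needs 3 bytes, only 2 remain
```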

Bus sharing and the 8087 coprocessor

Probably the most common use of the bus hold feature was to support the Intel 8087 math coprocessor. The 8087 coprocessor greatly improved the performance of floating-point operations, making them up to 100 times faster. As well as floating-point arithmetic, the 8087 supported trigonometric operations, logarithms and powers. The 8087's architecture became part of later Intel processors, and the 8087's instructions are still a part of today's x86 computers.[3]

The 8087 had its own registers and didn't have access to the 8086's registers. Instead, the 8087 could transfer values to and from the system's main memory. Specifically, the 8087 used the RQ/GT mechanism (maximum mode) to take control of the bus if the 8087 needed to transfer operands to or from memory.[4] The 8087 could be installed as an option on the original IBM PC, which is why the IBM PC used maximum mode.

The enable flip-flop

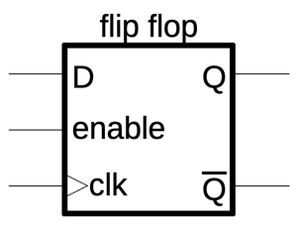

The circuit is built from six flip-flops. The flip-flops are a bit different from typical D flip-flops, so I'll discuss the flip-flop behavior before explaining the circuit.

A flip-flop can store a single bit, 0 or 1. Flip-flops are very important in the 8086 because they hold information (state) in a stable way, and they synchronize the circuitry with the processor's clock. A common type of flip-flop is the D flip-flop, which takes a data input (D) and stores that value. In an edge-triggered flip-flop, this storage happens on the edge when the clock changes state from low to high.[5] (Except at this transition, the input can change without affecting the output.) The output is called Q, while the inverted output is called Q-bar.

Many of the 8086's flip-flops, including the ones in the hold circuit, have an "enable" input. When the enable input is high, the flip-flop records the D input, but when the enable input is low, the flip-flop keeps its previous value. Thus, the enable input allows the flip-flop to hold its value for more than one clock cycle. The enable input is very important to the functioning of the hold circuit, as it is used to control when the circuit moves to the next state.
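Behaviorally, an enable flip-flop can be modeled in a few lines of Python. This is a software sketch, not the transistor circuit: each call to the hypothetical `clock_edge` method stands for one rising clock edge, and the mixed clock phases and active-low storage mentioned in the footnotes are ignored.

```python
class EnableFlipFlop:
    """Edge-triggered D flip-flop with an enable input (behavioral sketch)."""

    def __init__(self, q: int = 0):
        self.q = q

    @property
    def q_bar(self) -> int:
        return 1 - self.q  # inverted output

    def clock_edge(self, d: int, enable: int) -> int:
        """Apply one rising clock edge; D is captured only when enable is 1."""
        if enable:
            self.q = d
        return self.q


ff = EnableFlipFlop()
print(ff.clock_edge(d=1, enable=0))  # 0: enable low, old value kept
print(ff.clock_edge(d=1, enable=1))  # 1: D captured
print(ff.clock_edge(d=0, enable=0))  # 1: held for another cycle
```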

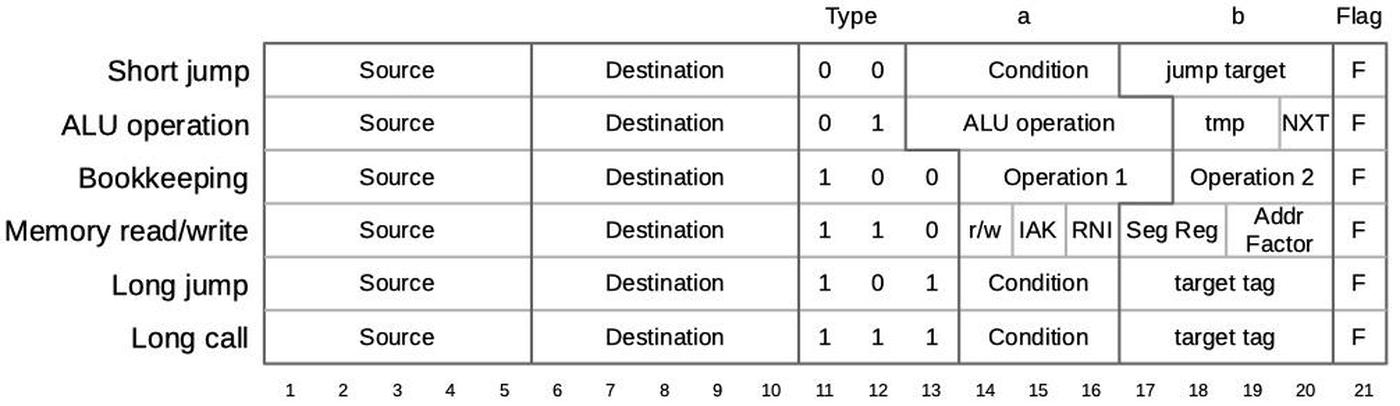

How bus hold is implemented (minimum mode)

I'll start by explaining how the hold circuitry works in minimum mode. To review, in minimum mode the external device requests a hold through the HOLD input, keeping the input high for the duration of the request. The 8086 responds by pulling the hold acknowledge HLDA output high for the duration of the hold.

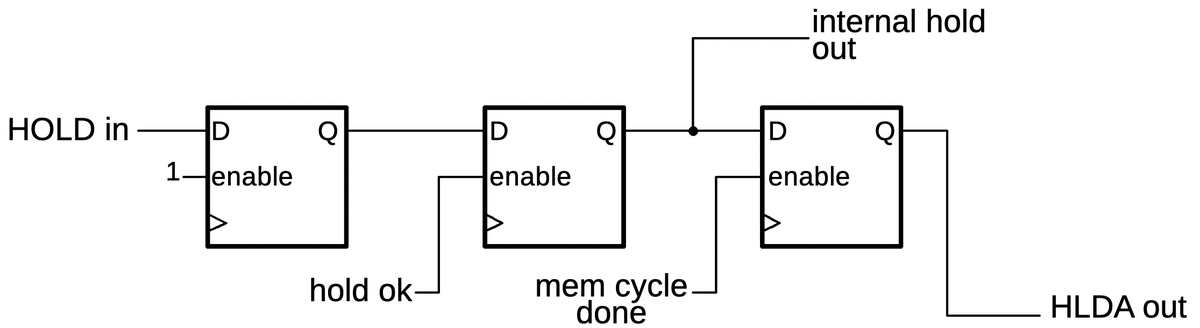

In minimum mode, only three of the six flip-flops are relevant. The diagram below is highly simplified to show the essential behavior. (The full schematic is in the footnotes.[6])

At the left is the HOLD signal, the request from the external device. When a HOLD request comes in, the first flip-flop is activated, and remains activated for the duration of the request. The second flip-flop waits if any condition is blocking the hold request: a LOCK-prefixed instruction, an unaligned memory access, and so forth. When the hold can proceed, the second flip-flop is enabled and it latches the request. The second flip-flop controls the internal hold signal, causing the 8086 to stop further bus activity. The third flip-flop is then activated when the current bus cycle (if any) completes; when it latches the request, the hold is "official". The third flip-flop drives the external HLDA (Hold Acknowledge) pin, indicating that the bus is free. This signal also clears the bus-enabled latch (elsewhere in the 8086), putting the appropriate pins into floating tri-state mode.

The key point is that the flip-flops control the timing of the internal hold and the external HLDA, moving to the next step as appropriate.

When the external device signals an end to the hold by pulling the HOLD pin low, the process reverses. The three flip-flops return to their idle state in sequence. The second flip-flop clears the internal hold signal, restarting bus activity. The third flip-flop clears the HLDA pin.[7]
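The three-stage sequencing can be illustrated with a clock-by-clock Python sketch. It is a behavioral model under simplifying assumptions: all three flip-flops update on the same clock edge (the real circuit mixes clock phases), the first flip-flop simply follows HOLD, and `hold_ok` and `bus_idle` are hypothetical per-clock inputs standing in for "nothing is blocking the hold" and "the current bus cycle has completed".

```python
def simulate_min_mode(hold_pin, hold_ok, bus_idle):
    """Return per-clock (internal_hold, hlda) for a simplified 3-stage pipeline."""
    ff1 = ff2 = ff3 = 0
    trace = []
    for hold, ok, idle in zip(hold_pin, hold_ok, bus_idle):
        # Update the last stage first so each stage samples the previous
        # stage's old value, as in flip-flops clocked by the same edge.
        if idle:
            ff3 = ff2  # third flip-flop: HLDA, once the bus cycle is done
        if ok:
            ff2 = ff1  # second flip-flop: internal hold, once nothing blocks it
        ff1 = hold     # first flip-flop: tracks the HOLD request
        trace.append((ff2, ff3))
    return trace


# HOLD is high for four clocks, with no blocking conditions and an idle bus.
print(simulate_min_mode([1, 1, 1, 1, 0, 0, 0], [1] * 7, [1] * 7))
# [(0, 0), (1, 0), (1, 1), (1, 1), (1, 1), (0, 1), (0, 0)]
```

The trace shows the internal hold rising before HLDA, and both returning to idle in sequence once HOLD drops. Holding `bus_idle` low on some clocks delays HLDA without affecting the internal hold.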

How bus hold is implemented (maximum mode)

The implementation of maximum mode is tricky because it uses the same circuitry as minimum mode, but the behavior is different in several ways. First, minimum mode and maximum mode operate on opposite polarity: a hold is requested by pulling HOLD high in minimum mode versus pulling a request line low in maximum mode. Moreover, in minimum mode, a request on the HOLD pin triggers a response on the opposite pin (HLDA), while in maximum mode, a request and response are on the same pin. Finally, using the same pin for the request and grant signals requires the pin to act as both an input and an output, with tricky electrical properties.

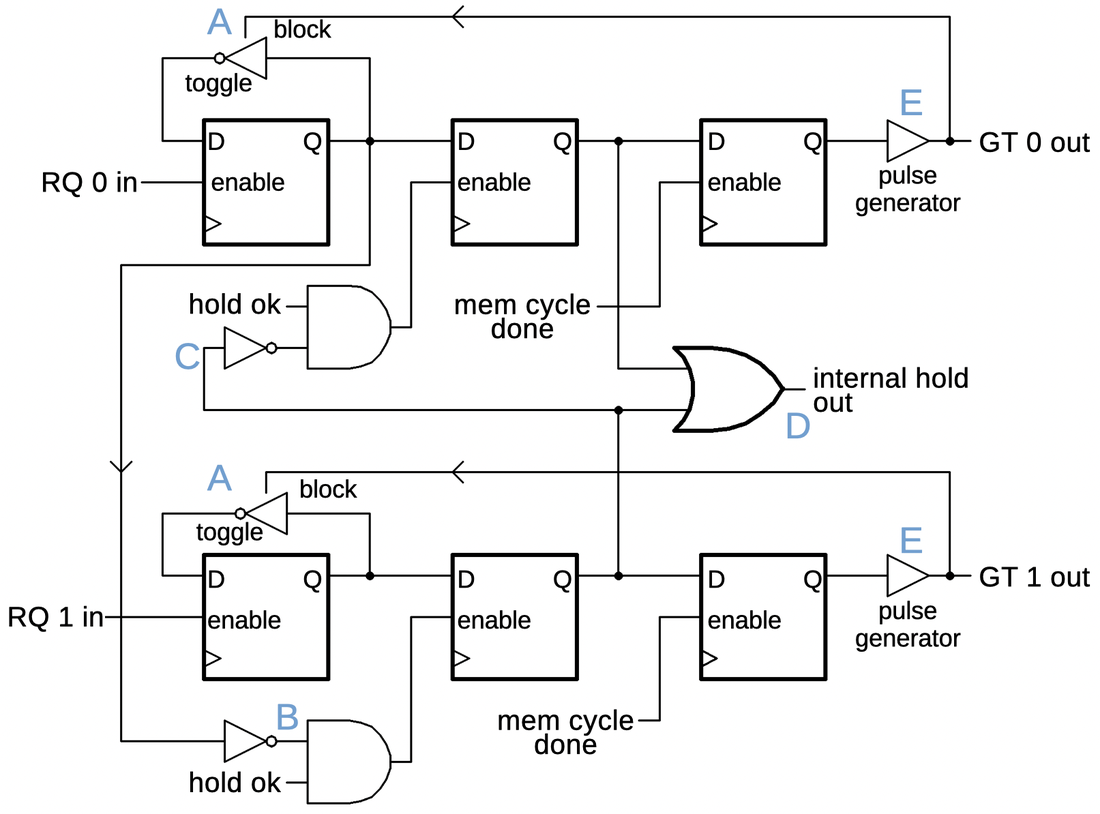

In maximum mode, the top three flip-flops handle the request and grant on line 0, while the bottom three flip-flops handle line 1. At a high level, these flip-flops behave roughly the same as in the minimum mode case, with the first flip-flop tracking the hold request, the second flip-flop activated when the hold is "approved", and the third flip-flop activated when the bus cycle completes. An RQ0 input will generate a GT0 output, while an RQ1 input will generate a GT1 output. The diagram below is highly simplified, but illustrates the overall behavior. Keep in mind that RQ0, GT0, and HOLD use the same physical pin, as do RQ1, GT1, and HLDA.

In more detail, the first flip-flop converts the pulse request input into a steady signal. This is accomplished by configuring the first flip-flop to toggle on when the request pulse is received and toggle off when the end-of-request pulse is received.[10] The toggle action is implemented by feeding the flip-flop's output back to its input, inverted (A); since the flip-flop is enabled by the RQ input, the flip-flop holds its value until an input pulse arrives. One tricky part is that the acknowledge pulse must not toggle the flip-flop. This is accomplished by using the output signal to block the toggle. (To keep the diagram simple, I've just noted the "block" action rather than showing the logic.)
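The toggle behavior can be sketched per clock in Python. Assumptions: each list entry is one clock sample, `line_low` means the shared RQ/GT line is low on that clock, and `cpu_granting` marks clocks where the low level is the 8086's own grant pulse; both names are hypothetical, and the real gating logic is not reproduced.

```python
def track_request(line_low, cpu_granting):
    """Convert RQ pulses into a steady 'request pending' level.

    line_low[i]:     1 if the RQ/GT line is low on clock i
    cpu_granting[i]: 1 if that low level is the 8086's own grant pulse
    """
    q = 0
    levels = []
    for low, granting in zip(line_low, cpu_granting):
        enable = low and not granting  # the grant pulse must not toggle the flip-flop
        if enable:
            q = 1 - q  # D is the inverted output, so an enabled clock toggles
        levels.append(q)
    return levels


# Request pulse, grant pulse from the 8086, then the release pulse.
print(track_request([0, 1, 0, 0, 1, 0, 0, 1, 0, 0], [0, 0, 0, 0, 1, 0, 0, 0, 0, 0]))
# [0, 1, 1, 1, 1, 1, 1, 0, 0, 0]

# A request held low for two clocks toggles twice and is lost.
print(track_request([0, 1, 1, 0], [0, 0, 0, 0]))  # [0, 1, 0, 0]
```

The second example shows why, in this model, the request pulse has to be exactly one clock wide.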

As before, the second flip-flop is blocked until the hold is "authorized" to proceed. However, the circuitry is more complicated since it must prioritize the two request lines and ensure that only one hold is granted at a time. If RQ0's first flip-flop is active, it blocks the enable of RQ1's second flip-flop (B). Conversely, if RQ1's second flip-flop is active, it blocks the enable of RQ0's second flip-flop (C). Note the asymmetry: the blocking uses RQ0's first flip-flop but RQ1's second flip-flop. This enforces the priority of RQ0 over RQ1, since an RQ0 request blocks RQ1 but only an RQ1 "approval" blocks RQ0.
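The asymmetric blocking can be expressed as two enable equations and simulated clock by clock. In this hypothetical Python sketch, `req0` and `req1` stand for the outputs of the two first (request) flip-flops, the returned tuples are the outputs of the two second (accepted) flip-flops, and `ok_trace` is an optional per-clock "hold ok" enable that defaults to always on.

```python
def arbitrate(req0, req1, ok_trace=None):
    """Return per-clock (accepted0, accepted1) for the two second flip-flops."""
    acc0 = acc1 = 0
    out = []
    for i, (r0, r1) in enumerate(zip(req0, req1)):
        ok = 1 if ok_trace is None else ok_trace[i]
        # Asymmetric blocking: a line-0 *request* blocks line 1,
        # but only a line-1 *acceptance* blocks line 0.
        en0 = ok and not acc1
        en1 = ok and not r0
        new0 = r0 if en0 else acc0
        new1 = r1 if en1 else acc1
        acc0, acc1 = new0, new1  # both flip-flops update on the same edge
        out.append((acc0, acc1))
    return out


# Simultaneous requests: line 0 wins.
print(arbitrate([1, 1, 1], [1, 1, 1]))  # [(1, 0), (1, 0), (1, 0)]

# Line 1 accepted first: a later line-0 request has to wait.
print(arbitrate([0, 0, 1, 1], [1, 1, 1, 1]))  # [(0, 1), (0, 1), (0, 1), (0, 1)]
```

Running it with `ok_trace` low for the first few clocks shows the preemption described in the first footnote: a line-0 request that arrives before line 1 is accepted still wins.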

When the second flip-flop is activated in either path, it triggers the internal hold signal (D).[8] As before, the hold request is latched into the third flip-flop when any existing memory cycle completes. When the hold request is granted, a pulse is generated (E) on the corresponding GT pin.[9]

The same circuitry is used for minimum mode and maximum mode, although the above diagrams show differences between the two modes. How does this work? Essentially, logic gates are used to change the behavior between minimum mode and maximum mode as required. For the most part, the circuitry works the same, so only a moderate amount of logic is required to make the same circuitry work for both. On the schematic, the signal MN is active during minimum mode, while MX is active during maximum mode, and these signals control the behavior.

The "hold ok" circuit

As usually happens with the 8086, there are a bunch of special cases when different features interact. One special case is if a bus hold request comes in while the 8086 is acknowledging an interrupt. In this case, the interrupt takes priority and the bus hold is not processed until the interrupt acknowledgment is completed. A second special case is if the bus hold occurs while the 8086 is halted. In this case, the 8086 issues a second HALT indicator at the end of the bus hold. Yet another special case is the 8086's LOCK prefix, which locks the use of the bus for the following instruction, so a bus hold request is not honored until the locked instruction has completed. Finally, the 8086 performs an unaligned word access to memory by breaking it into two 8-bit bus cycles; these two cycles can't be interrupted by a bus hold.

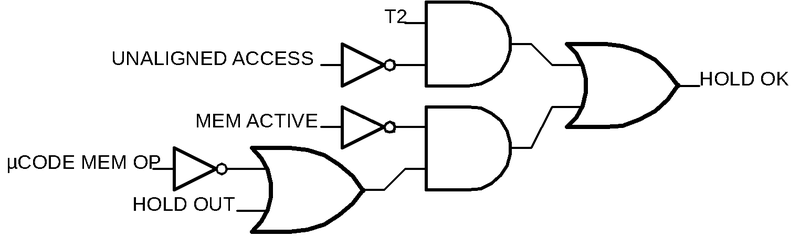

In more detail, the "hold ok" circuit determines at each cycle if a hold could proceed. There are several conditions under which the hold can proceed:

- The bus cycle is `T2`, except if an unaligned bus operation is taking place (i.e. a word split into two byte operations), or

- A memory cycle is not active and a microcode memory operation is not taking place, or

- A memory cycle is not active and a hold is currently active.

The first case occurs during bus (memory) activity, where a hold request before cycle T2 will be handled at the end of that cycle. The second case allows a hold if the bus is inactive. But if microcode is performing a memory operation, the hold will be delayed, even if the request is just starting. The third case is the opposite of the other two: it enables the flip-flop so a hold request can be dropped. (This ensures that the hold request can still be dropped in the corner case where a hold starts and then the microcode makes a memory request, which will be blocked by the hold.)
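The three conditions translate directly into a Boolean expression. This Python sketch uses hypothetical parameter names for the internal signals and covers only the three listed cases; the interrupt-acknowledge and halt special cases are not modeled.

```python
def hold_ok(t_state: str, unaligned: bool, memory_cycle_active: bool,
            microcode_mem_op: bool, hold_active: bool) -> bool:
    """Whether the second (accepted) flip-flop is enabled on this clock."""
    return (
        # Case 1: T2 of a bus cycle, unless it is half of an unaligned word access.
        (t_state == "T2" and not unaligned)
        # Case 2: the bus is idle and microcode isn't doing a memory operation.
        or (not memory_cycle_active and not microcode_mem_op)
        # Case 3: the bus is idle and a hold is active, so the request can be dropped.
        or (not memory_cycle_active and hold_active)
    )


print(hold_ok("T2", unaligned=False, memory_cycle_active=True,
              microcode_mem_op=False, hold_active=False))  # True
```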

An instruction with the LOCK prefix causes the bus to be locked against other devices for the duration of the instruction. Thus, a hold cannot be granted while the instruction is running. This is implemented through a separate path: logic between the output of the first (request) flip-flop and the second (accepted) flip-flop, tied into the LOCK signal. Conceptually, it seems that the LOCK signal should block hold-ok and thus block the second (accepted) flip-flop from being enabled. But instead, the LOCK signal blocks the data path, unless the request has already been granted. I think the motivation is to allow dropping of a hold request to proceed uninterrupted. In other words, LOCK prevents a hold from being accepted, but it doesn't prevent a hold from being dropped, and it was easier to implement this in the data path.
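One way to express "LOCK gates the data path, not the enable" is as the next-state function of the second flip-flop. The Boolean form below is a hypothetical reconstruction of the described behavior, not a transcription of the gates on the die; the parameter names are illustrative.

```python
def accepted_next(request: bool, accepted: bool, lock: bool, enabled: bool) -> bool:
    """Next value of the second (accepted) flip-flop after one clock edge.

    request:  output of the first (request) flip-flop
    accepted: current output of the second flip-flop
    lock:     a LOCK-prefixed instruction is in progress
    enabled:  the hold-ok enable for this flip-flop
    """
    # LOCK forces the data input to 0 for a new request, but once a hold
    # has been accepted the input follows the request, so it can still drop.
    d = request and (accepted or not lock)
    return bool(d) if enabled else accepted


print(accepted_next(request=True, accepted=False, lock=True, enabled=True))   # False
print(accepted_next(request=False, accepted=True, lock=True, enabled=True))   # False (dropped)
```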

The pin drive circuitry

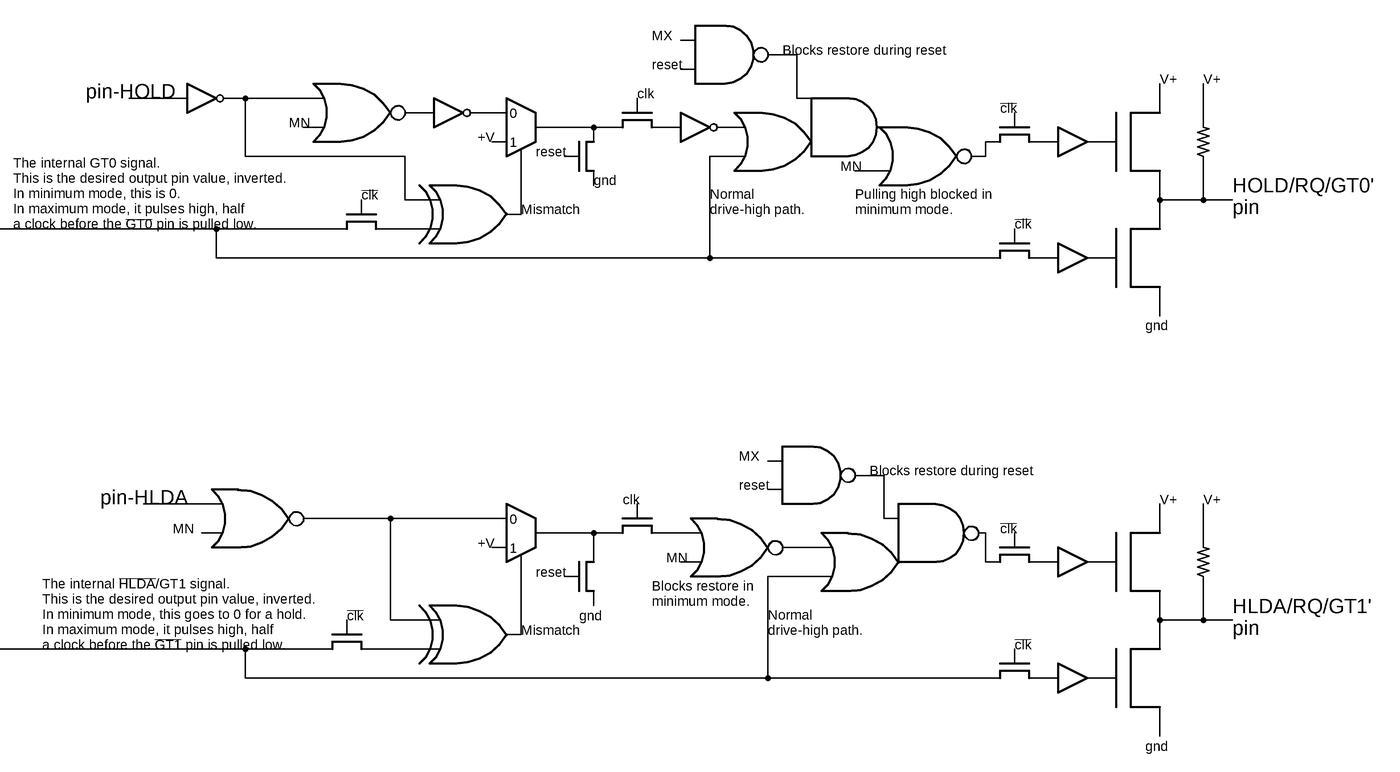

The circuitry for the HOLD/RQ0/GT0 and HLDA/RQ1/GT1 pins is somewhat complicated, since they are used for both input and output. In minimum mode, the HOLD pin is an input, while the HLDA pin is an output. In maximum mode, both pins act as inputs, receiving a low-going pulse from an external device to start or stop the hold, but the 8086 also drives them as outputs to issue grant pulses. Pull-up resistors inside the 8086 ensure that the pins remain high (idle) when unused. Finally, an undocumented active pull-up system restores a pin to a high state after it is pulled low, providing faster response than the resistor.

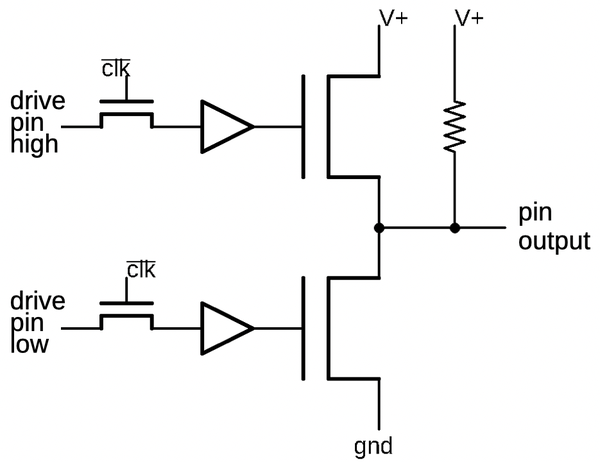

The schematic below shows the heart of the tri-state output circuit. Each pin is connected to two large output MOSFETs, one to drive the pin high and one to drive the pin low. The transistors have separate control lines; if both control lines are low, both transistors are off and the pin floats in the "tri-state" condition. This permits the pin to be used as an input, driven by an external device. The pull-up resistor keeps the pin in a high state.
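The resulting pin behavior can be summarized as a small resolution function. This is a logic-level Python sketch with hypothetical names: it treats the pull-up as always winning when nothing drives the pin, and it ignores electrical contention between the 8086 and an external driver, which the protocol is meant to avoid.

```python
from typing import Optional


def pin_level(drive_high: bool, drive_low: bool, external: Optional[int] = None) -> int:
    """Resolve the logic level on a pin with a two-transistor output stage.

    drive_high / drive_low: control lines for the pull-high and pull-low MOSFETs.
    external: level forced by another device, or None if nothing else drives it.
    """
    if drive_high and drive_low:
        # Both transistors on would short the supply to ground; the control
        # logic must never do this.
        raise ValueError("both output transistors enabled")
    if drive_high:
        return 1
    if drive_low:
        return 0
    # Both control lines low: tri-state, so the 8086 is effectively disconnected.
    if external is not None:
        return external
    return 1  # the internal pull-up holds an undriven pin high


print(pin_level(False, False))     # 1: floating, pulled up
print(pin_level(False, False, 0))  # 0: an external device pulls it low
```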

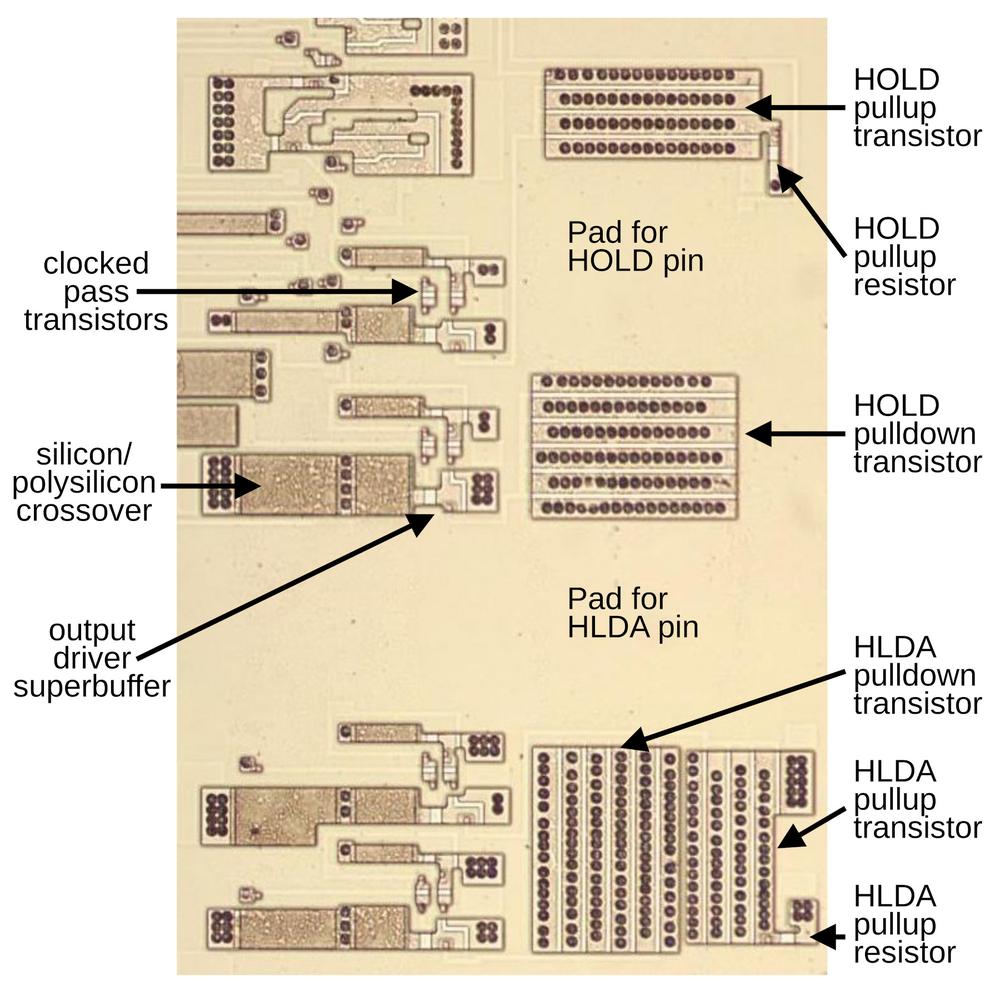

The diagram below shows how this circuitry looks on the die. In this image, the metal and polysilicon layers have been removed with acid to show the underlying doped silicon regions. The thin white stripes are transistor gates where polysilicon wiring crossed the silicon. The black circles are vias that connected the silicon to the metal on top. The empty regions at the right are where the metal pads for HOLD and HLDA were. Next to the pads are the large transistors to pull the outputs high or low. Because the outputs require much higher current than internal signals, these transistors are much larger than logic transistors. They are composed of several transistors placed in parallel, resulting in the parallel stripes. The small pull-up resistors are also visible. For efficiency, these resistors are actually depletion-mode transistors, specially doped to act as constant-current sources.

At the left, some of the circuitry is visible. The large output transistors are driven by "superbuffers" that provide more current than regular NMOS buffers. (A superbuffer uses separate transistors to pull the signal high and low, rather than using a pull-up to pull the signal high as in a standard NMOS gate.) The small transistors are the pass transistors that gate output signals according to the clock. The thick rectangles are crossovers, allowing the vertical metal wiring (no longer visible) to cross over a horizontal signal in the silicon layer. The 8086 has only a single metal layer, so the layout requires a crossover if signals would otherwise intersect. Because silicon's resistance is much higher than metal's resistance, the crossover is relatively wide to reduce the resistance.

The problem with a pull-up resistor is that it is relatively slow when connected to a line with high capacitance. You essentially end up with a resistor-capacitor delay circuit, as the resistor slowly charges the line and brings it up to a high level. To get around this, the 8086 has an active drive circuit to pulse the RQ/GT lines high to pull them back from a low level. This circuit pulls the line high one cycle after the 8086 drops it low for a grant acknowledge. This circuit also pulls the line high after the external device pulls it low.[11] (The schematic for this circuit is in the footnotes.) The curious thing is that I couldn't find this behavior documented in the datasheets. The datasheets describe the internal pull-up resistor, but don't mention that the 8086 actively pulls the lines high.[12]
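To get a feel for the RC delay, the standard charging formula $t = RC\,\ln\!\big(V_{DD}/(V_{DD} - V_{th})\big)$ gives the time for a line starting at 0 V to reach a logic threshold $V_{th}$. The component values below are hypothetical round numbers, not measured 8086 parameters; the 2.0 V threshold is the usual TTL input-high level and the 5 MHz clock is one of the 8086's rated speeds. Since the pull-up is really a depletion-mode transistor, this ideal-resistor model is only an approximation.

```python
import math


def rc_rise_time(r_ohms: float, c_farads: float, v_supply: float, v_threshold: float) -> float:
    """Seconds for an RC-charged line to rise from 0 V to v_threshold."""
    if not 0 <= v_threshold < v_supply:
        raise ValueError("threshold must be between 0 and the supply voltage")
    return r_ohms * c_farads * math.log(v_supply / (v_supply - v_threshold))


# Hypothetical values for illustration only.
R_PULLUP = 20e3        # ohms
C_LINE = 50e-12        # farads: pin, wiring, and other chips on the line
CLOCK_PERIOD = 200e-9  # seconds: a 5 MHz clock

t = rc_rise_time(R_PULLUP, C_LINE, v_supply=5.0, v_threshold=2.0)
print(f"rise time {t * 1e9:.0f} ns = {t / CLOCK_PERIOD:.1f} clock periods")
```

With these assumed values the passive pull-up needs more than two clock periods to bring the line back high, which illustrates why an active pull-up pulse could speed up the handshake.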

Conclusions

The hold circuitry was a key feature of the 8086, largely because it was necessary for the 8087 math coprocessor chip. The hold circuitry seems like it should be straightforward, but there are many corner cases in this circuitry: it interacts with unaligned memory accesses, the LOCK prefix, and minimum vs. maximum modes. As a result, it is fairly complicated.

Personally, I find the hold circuitry somewhat unsatisfying to study, with few fundamental concepts but a lot of special-case logic. The circuitry seems overly complicated for what it does. Much of the complexity is probably due to the wildly different behavior of the pins between minimum and maximum mode. Intel should have simply used a larger package (like the Motorola 68000) rather than re-using pins to support different modes, as well as using the same pin for a request and a grant. It's impressive, however, that the same circuitry was made to work for both minimum and maximum modes, despite using completely different signals to request and grant holds. This circuitry must have been a nightmare for Intel's test engineers, trying to ensure that the circuitry performed properly when there were so many corner cases and potential race conditions.

I plan to write more on the 8086, so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @[email protected] and Bluesky as @righto.com so you can follow me there too.

Notes and references

1. The timing of priority between RQ0 and RQ1 is left vague in the documentation. In practice, even if RQ1 is requested first, a later RQ0 can still preempt it until the point that RQ1 is internally granted (i.e. the second flip-flop is activated). This happens before the hold is externally acknowledged, so it's not obvious to the user at what point priority no longer applies.

2. The RQ/GT0 and RQ/GT1 signals are active-low. These signals should have an overbar to indicate this, but it makes the page formatting ugly :-)

3. Modern x86 processors still support the 8087 (x87) instruction set. Starting with the 80486DX, the floating point unit was included on the CPU die, rather than as an external coprocessor. The x87 instruction set used a stack-based model, which made it harder to parallelize. To mitigate this, Intel introduced SSE in 1999, a different set of floating point instructions that worked on an independent register set. The x87 instructions are now considered mostly obsolete and are deprecated in 64-bit Windows.

4. The 8087 provides another RQ/GT input line for an external device. Thus, two external devices can still be used in a system with an 8087. That is, although the 8087 uses up one of the 8086's two RQ/GT lines, the 8087 provides another one, so there are still two lines available. The 8087 has logic to combine its bus requests and external bus requests into a single RQ/GT line to the 8086.

5. Confusingly, some of the flip-flops in the hold circuit transition when the clock goes high, while others use the inverted clock signal and transition when the clock goes low. Moreover, the flip-flops are inconsistent about how they treat the data. In each group of three flip-flops, the first flip-flop is active-high, while the remaining flip-flops are active-low. For the most part, I'll ignore this in the discussion. You can look at the schematic if you want to know the details.

-

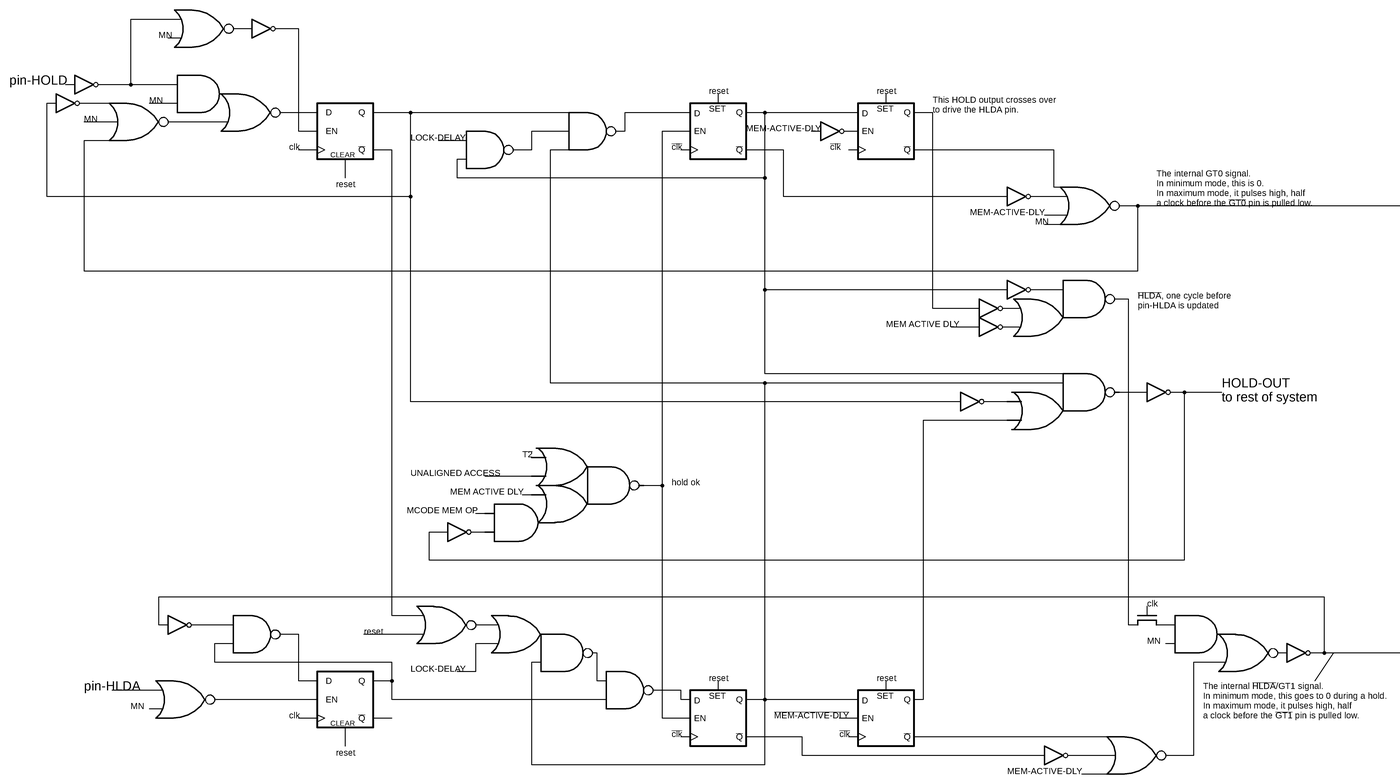

The schematics below shows my reverse-engineered schematic for the hold circuitry. I have partitioned the schematic into the hold logic and the output driver circuitry. This split matches the physical partitioning of the circuitry on the die.

In the first schematic, the upper part handles

HOLDandrequest0, while the lower part handlesrequest1. There is some circuitry in the middle to handle the common enabling and to generate the internal hold signal. I won't explain the circuitry in much detail, but there are a few things I want to point out. First, even though the hold circuit seems like it should be simple, there are a lot of gates connected in complicated ways. Second, although there are many inverters, NAND, and NOR gates, there are also complex gates such as AND-NOR, OR-NAND, AND-OR-NAND, and so forth. These are implemented as single gates. Due to how gates are constructed from NMOS transistors, it is just as easy to build a hierarchical gate as a single gate. (The last step must be inversion, though.) The XOR gates are more complex; they are constructed from a NOR gate and an AND-NOR gate.Schematic of the hold circuitry. Click this image (or any other) for a larger version.The schematic below shows the output circuits for the two pins. These circuits are similar, but have a few differences because only the bottom one is used as an output (

HLDA) in minimum mode. Each circuit has two inputs: what the current value of the pin is, and what the desired value of the pin is.Schematic of the pin output circuits. -

7. Interestingly, the external pins aren't taken out of tri-state mode immediately when the HLDA signal is dropped. Instead, the 8086's bus drivers are re-enabled when a bus cycle starts, which is slightly later. The bus circuitry has a separate flip-flop to manage the enable/disable state, and the start of a bus cycle is what re-enables the bus. This is another example of behavior that the documentation leaves ambiguous.

8. There's one more complication for the hold-out signal. If a hold is granted on one line, a request comes in on the other line, and then the hold is released on the first line, the desired behavior is for the bus to remain in the hold state as the hold switches to the second line. However, because of the way a hold on line 1 blocks a hold on line 0, the GT1 second flip-flop will drop a cycle before the GT0 second flip-flop is activated. This would cause hold-out to drop for a cycle and the 8086 could start unwanted bus activity. To prevent this case, the hold-out line is also activated if there is an RQ0 request and RQ1 is granted. This condition seems a bit random but it covers the "gap". I have to wonder if Intel planned the circuit this way, or they added the extra test as a bug fix. (The asymmetry of the priority circuit causes this problem to occur only going from a hold on line 1 to line 0, not the other direction.)

9. The pulse-generating circuit is a bit tricky. A pulse signal is generated if the request has been accepted, has not been granted, and will be granted on the next clock (i.e. no memory request is active so the flip-flop is enabled). (Note that the pulse will be one cycle wide, since granting the request on the next cycle will cause the conditions to be no longer satisfied.) This provides the pulse one clock cycle before the flip-flop makes it "official". Moreover, the signals come from the inverted Q outputs from the flip-flops, which are updated half a clock cycle earlier. The result is that the pulse is generated 1.5 clock cycles before the flip-flop output. Presumably the point of this is to respond to hold requests faster, but it seems overly complicated.

10. The request pulse is required to be one clock cycle wide. The feedback loop shows why: if the request is longer than one clock cycle, the first flip-flop will repeatedly toggle on and off, resulting in unexpected behavior.

11. The details of the active pull-up circuitry don't make sense to me. First it XORs the state of the pin with the desired state of the pin and uses this signal to control a multiplexer, which generates the pull-up action based on other gates. The result of all this ends up being simply NAND, implemented with excessive gates. Another issue is that minimum mode blocks the active pull-up, which makes sense. But there are additional logic gates so minimum mode can affect the value going into the multiplexer, which gets blocked in minimum mode, so that logic seems wasted. There are also two separate circuits to block pull-up during reset. My suspicion is that the original logic accumulated bug fixes and redundant logic wasn't removed. But it's possible that the implementation is doing something clever that I'm just missing.

12. My analysis of the RQ/GT lines being pulled high is based on simulation. It would be interesting to verify this behavior on a physical 8086 chip. By measuring the current out of the pin, the pull-up pulses should be visible.