One interesting aspect of a computer's instruction set is its addressing modes, how the computer determines the address for a memory access. The Intel 8086 (1978) used the ModR/M byte, a special byte following the opcode, to select the addressing mode.[1] The ModR/M byte has persisted into the modern x86 architecture, so it's interesting to look at its roots and original implementation.

In this post, I look at the hardware and microcode in the 8086 that implements ModR/M[2] and how the 8086 designers fit multiple addressing modes into the 8086's limited microcode ROM. One technique was a hybrid approach that combined generic microcode with hardware logic that filled in the details for a particular instruction. A second technique was modular microcode, with subroutines for various parts of the task.

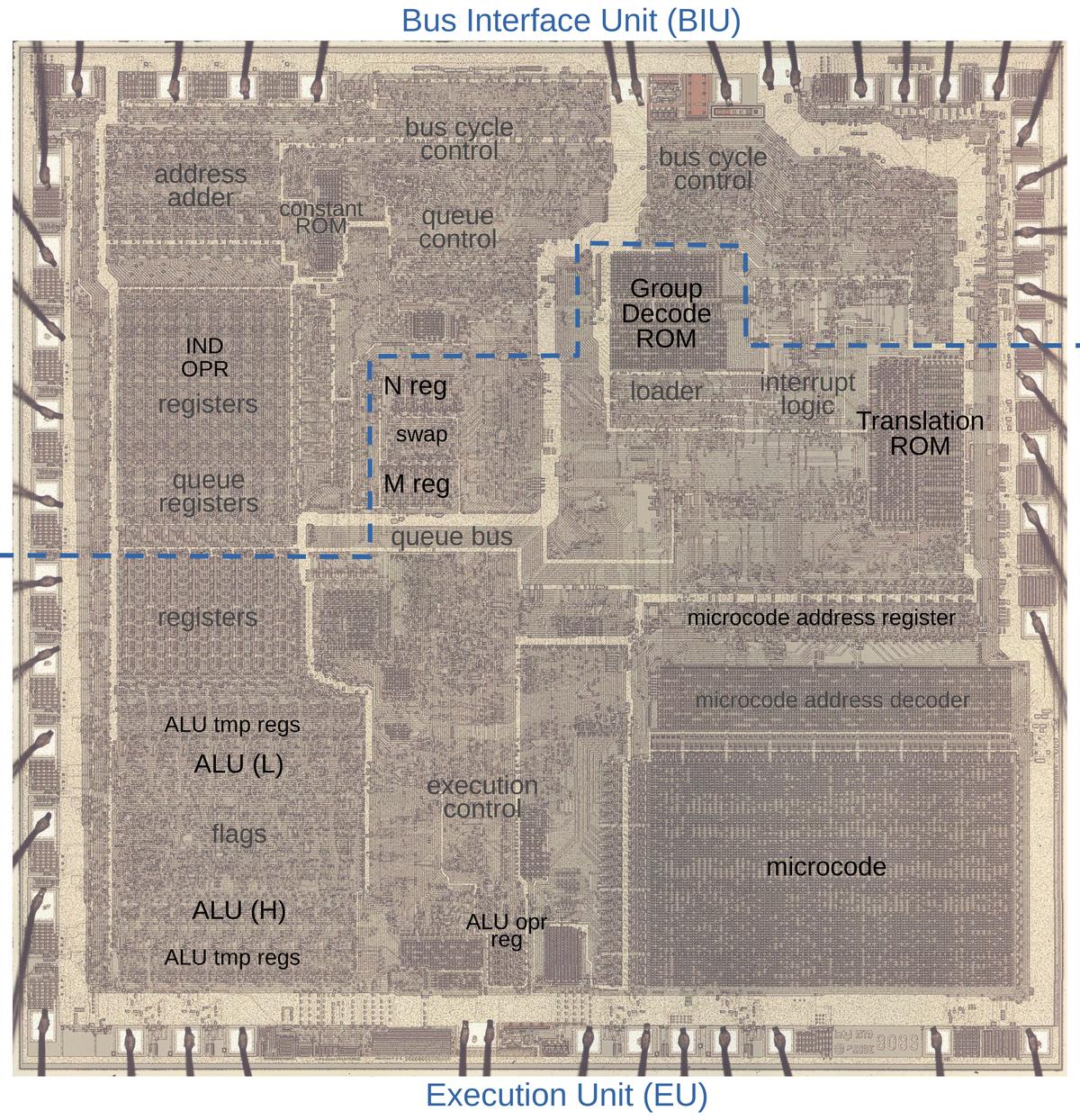

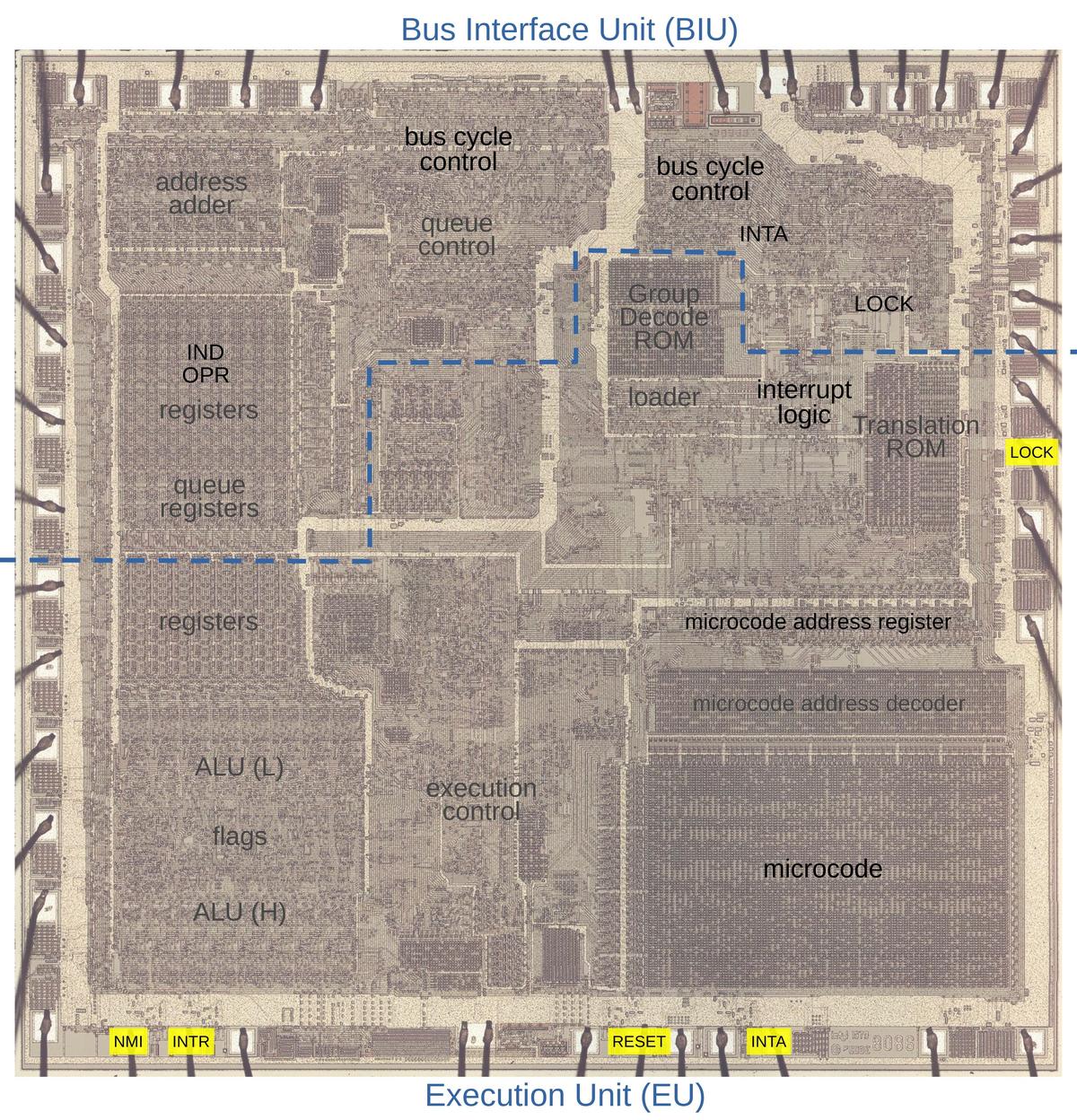

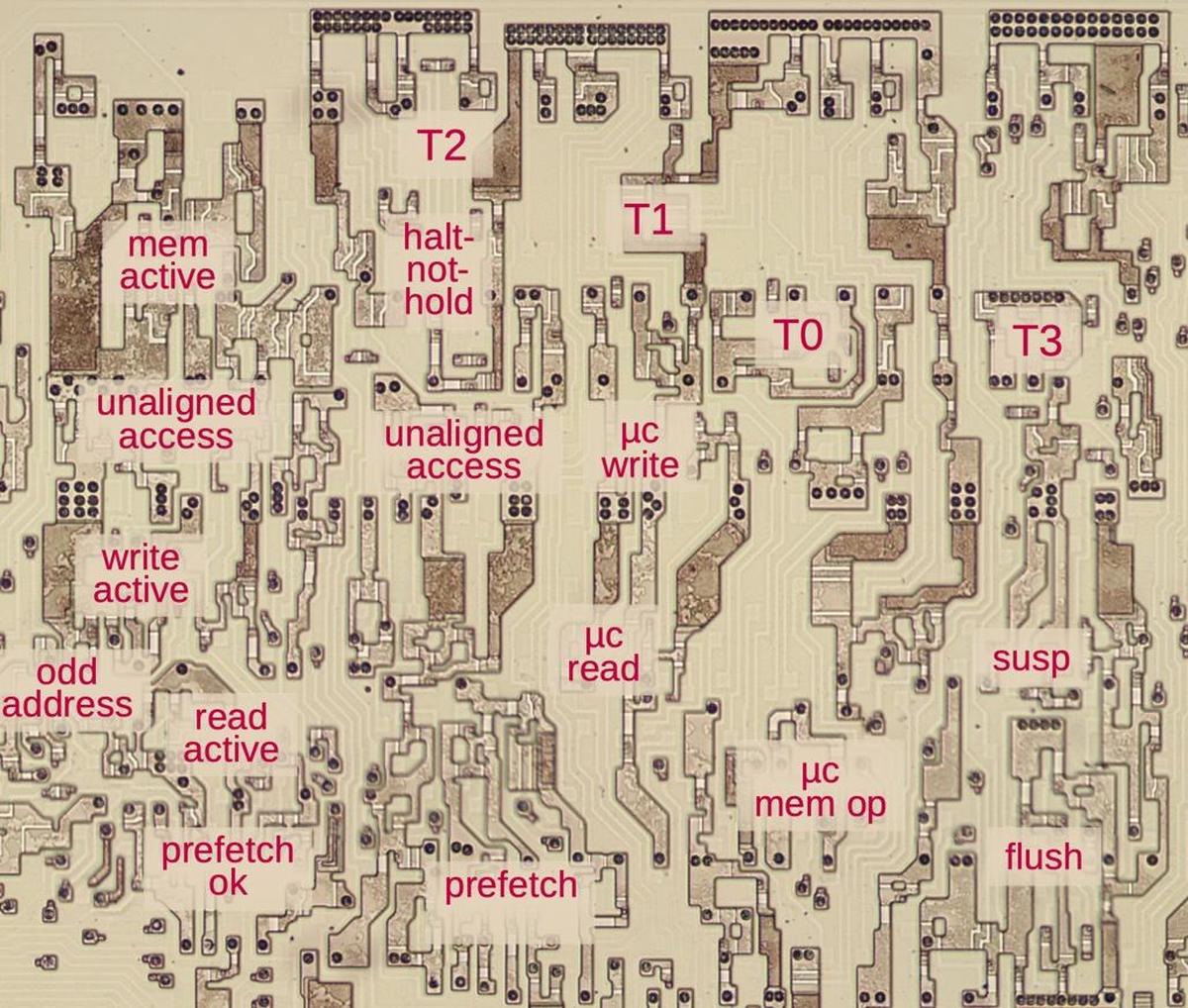

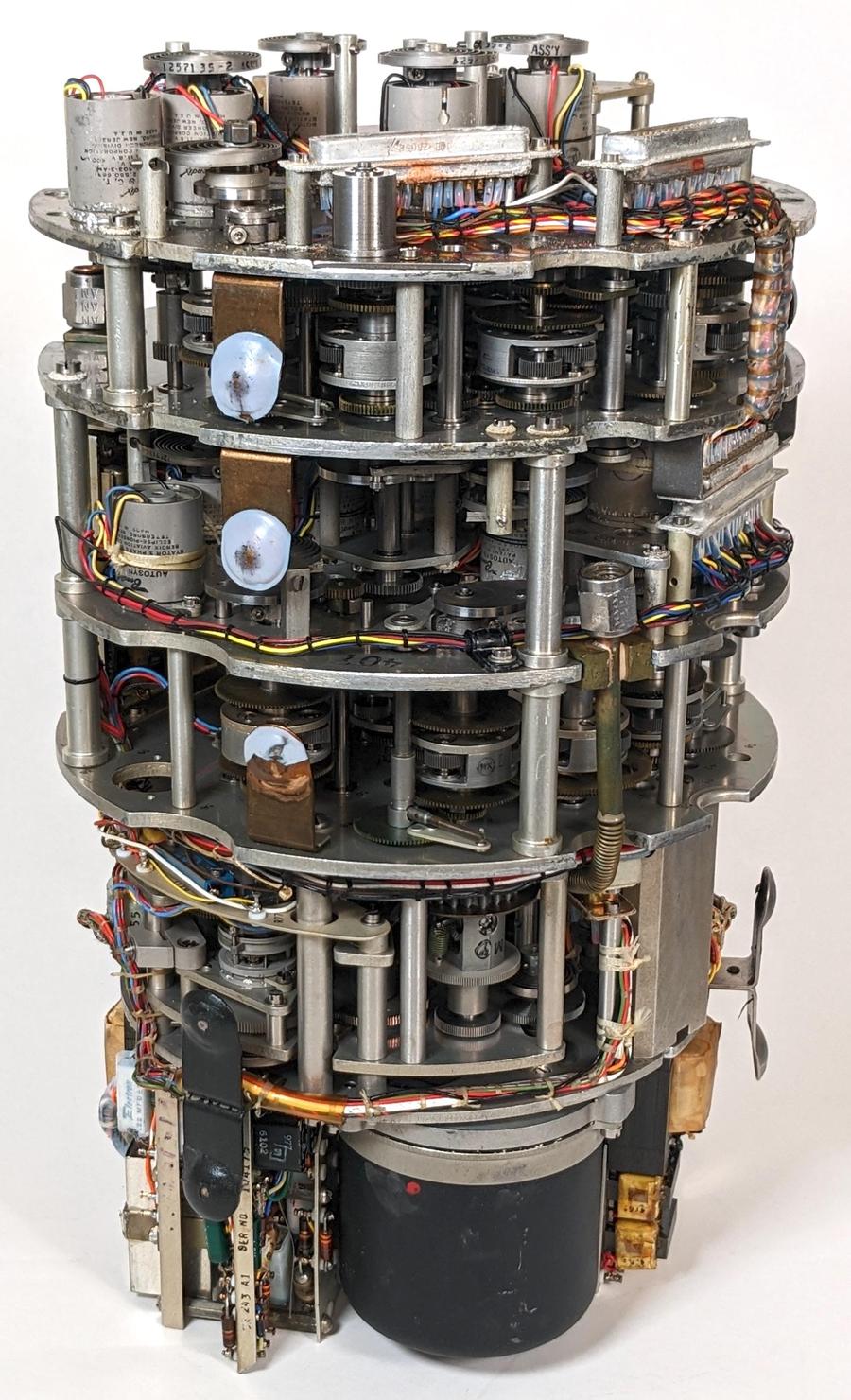

I've been reverse-engineering the 8086 starting with the silicon die. The die photo below shows the chip under a microscope. The metal layer on top of the chip is visible, with the silicon and polysilicon mostly hidden underneath. Around the edges of the die, bond wires connect pads to the chip's 40 external pins. I've labeled the key functional blocks; the ones that are important to this discussion are darker and will be discussed in detail below. Architecturally, the chip is partitioned into a Bus Interface Unit (BIU) at the top and an Execution Unit (EU) below. The BIU handles bus and memory activity as well as instruction prefetching, while the Execution Unit (EU) executes instructions and microcode. Both units play important roles in memory addressing.

8086 addressing modes

Let's start with an addition instruction, ADD dst,src, which adds a source value to a destination value and stores the result in the destination.[3]

What are the source and destination? Memory? Registers? The addressing mode answers this question.

You can use a register as the source and another register as the destination.

The instruction below uses the AX register as the destination and the BX register as the source. Thus, it adds BX to AX and puts the result in AX.

| Instruction | Meaning |
|---|---|
| ADD AX, BX | Add the contents of the BX register to the AX register |

A memory access is indicated with square brackets around the "effective address"[4] to access. For instance, [1234] means the memory location with address 1234, while [BP] means the memory location that the BP register points to. For a more complicated addressing mode, [BP+SI+1234] means the memory location is determined by adding the BP and SI registers to the constant 1234 (known as the displacement).

On the 8086, you can use memory for the source or the destination, but not both.

Here are some examples of using memory as a source:

| Instruction | Meaning |
|---|---|
| ADD AX, [1234] | Add the contents of memory location 1234 to AX register |
| ADD CX, [BP] | Add memory pointed to by BP register to CX register |
| ADD DX, [BX+SI+1234] | Source memory address is BX + SI + constant 1234 |

Here are examples with memory as the destination:

| Instruction | Meaning |
|---|---|
| ADD [1234], AX | Add AX to the contents of memory location 1234 |
| ADD [BP], CX | Add CX to memory pointed to by BP register |
| ADD [BX+SI+1234], DX | Destination memory address is BX + SI + constant 1234 |

You can also operate on bytes instead of words, using a byte register and accessing a memory byte:

| Instruction | Meaning |
|---|---|
| ADD AL, [SI+1234] | Add to the low byte of AX register |
| ADD AH, [BP+DI+1234] | Add to the high byte of AX register |

As you can see, the 8086 supports many different addressing schemes. To understand how they are implemented, we must first look at how instructions encode the addressing schemes in the ModR/M byte.

The ModR/M byte

The ModR/M byte follows many opcodes to specify the addressing mode. This byte is fairly complicated but I'll try to explain it in this section. The diagram below shows how the byte is split into three fields:[5]

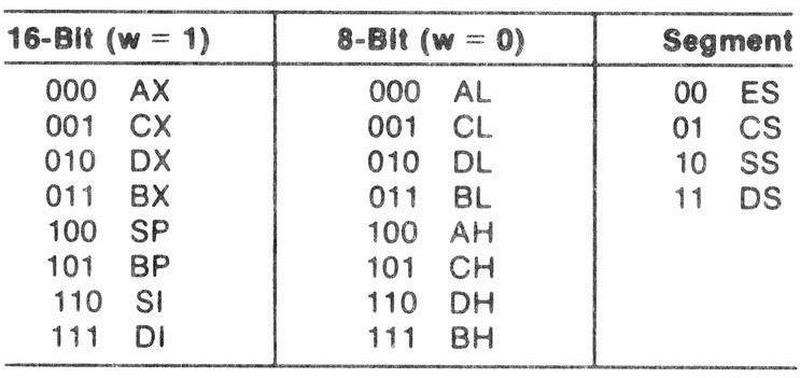

mod selects the overall mode, reg selects a register, and r/m selects either a register or memory mode.

| 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|
| mod | mod | reg | reg | reg | r/m | r/m | r/m |

I'll start with the register-register mode, where the mod bits are 11 and the reg and r/m fields each select one of eight registers, as shown below. The instruction ADD AX,BX would use reg=011 to select BX and r/m=000 to select AX, so the ModR/M byte would be 11011000. (The register assignment depends on whether the instruction operates on words, bytes, or segment registers. For instance, in a word instruction, 001 selects the CX register, while in a byte instruction, 001 selects the CL register, the low byte of CX.)
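The field split described above can be sketched in a few lines of Python. This is only an illustration: the function names `split_modrm` and `register_name` are made up for this example, and the register tables use the standard 8086 register numbering (only a few of these codes are spelled out in the text above).

```python
# Standard 8086 register numbering for word and byte instructions.
WORD_REGS = ["AX", "CX", "DX", "BX", "SP", "BP", "SI", "DI"]
BYTE_REGS = ["AL", "CL", "DL", "BL", "AH", "CH", "DH", "BH"]

def split_modrm(byte):
    """Split a ModR/M byte into its (mod, reg, r/m) fields."""
    mod = (byte >> 6) & 0b11    # bits 7-6
    reg = (byte >> 3) & 0b111   # bits 5-3
    rm = byte & 0b111           # bits 2-0
    return mod, reg, rm

def register_name(code, word=True):
    """Map a 3-bit register code to a name (hypothetical helper)."""
    return WORD_REGS[code] if word else BYTE_REGS[code]

# The ModR/M byte for ADD AX,BX from the text: mod=11, reg=BX, r/m=AX.
mod, reg, rm = split_modrm(0b11011000)
print(mod, register_name(reg), register_name(rm))
```

Segment-register instructions use a different mapping, which this sketch leaves out.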

The next addressing mode specifies a memory argument and a register argument. In this case, the mod bits are 00, the reg field specifies a register as described above, and the r/m field specifies a memory address according to the table below. For example, the instruction ADD [SI],CX would use reg=001 to select CX and r/m=100 to select [SI], so the ModR/M byte would be 00001100.

| r/m | Operand Address |
|---|---|
| 000 | [BX+SI] |
| 001 | [BX+DI] |
| 010 | [BP+SI] |
| 011 | [BP+DI] |
| 100 | [SI] |
| 101 | [DI] |
| 110 | [BP] |
| 111 | [BX] |

The next mode, 01, adds an 8-bit signed displacement to the address. This displacement consists of one byte following the ModR/M byte. This supports addressing modes such as [BP+5]. The mode 10 is similar except the displacement is two bytes long, for addressing modes such as [BP+DI+0x1234].
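To make the three memory modes concrete, here is a rough Python model of the effective address computation. It is a sketch, not a description of the hardware: the function name `effective_address` and the dictionary of register values are assumptions for this example. It also includes the fixed-address case (mod=00, r/m=110) that appears as "disp16" in the ModR/M table.

```python
# Registers summed for each r/m code, following the r/m table above.
RM_REGISTERS = [
    ("BX", "SI"), ("BX", "DI"), ("BP", "SI"), ("BP", "DI"),
    ("SI",), ("DI",), ("BP",), ("BX",),
]

def effective_address(mod, rm, regs, disp=0):
    """Return the 16-bit effective address for a memory-mode ModR/M byte.

    regs maps register names to 16-bit values; disp is the raw
    displacement taken from the instruction bytes (if any).
    """
    if mod == 0b11:
        raise ValueError("mod=11 selects a register, not memory")
    if mod == 0b00 and rm == 0b110:
        return disp & 0xFFFF              # fixed 16-bit address
    ea = sum(regs[name] for name in RM_REGISTERS[rm])
    if mod == 0b01:
        disp &= 0xFF
        ea += disp - 0x100 if disp & 0x80 else disp   # signed 8-bit
    elif mod == 0b10:
        ea += disp                        # 16-bit displacement
    return ea & 0xFFFF                    # wrap to 16 bits

regs = {"BX": 0x1000, "BP": 0x2000, "SI": 0x0010, "DI": 0x0020}
print(hex(effective_address(0b01, 0b110, regs, 0x05)))    # [BP+5]
print(hex(effective_address(0b10, 0b011, regs, 0x1234)))  # [BP+DI+0x1234]
```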

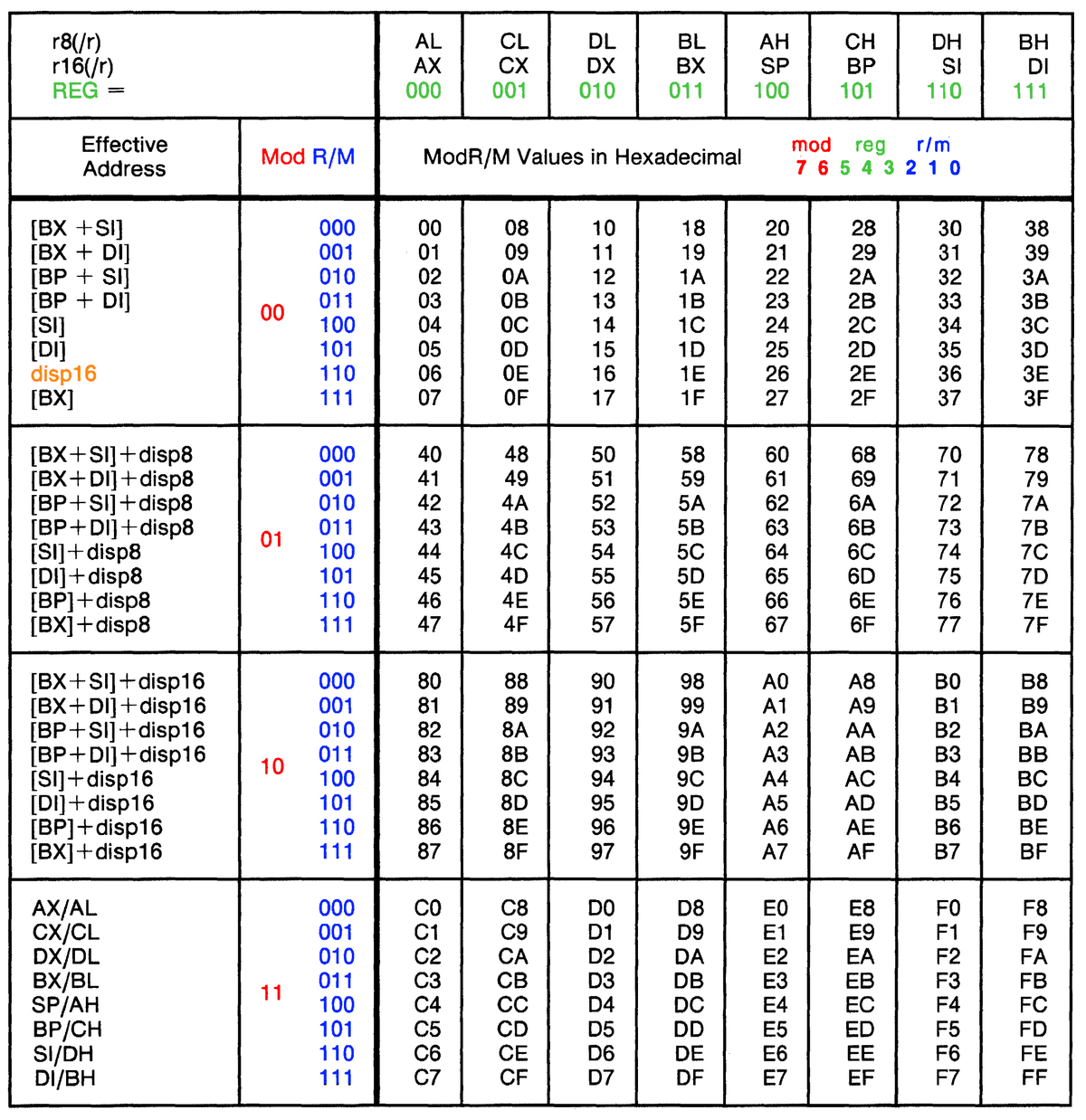

The table below shows the meaning of all 256 values for the ModR/M byte. The mod bits are colored red, the reg bits green, and the r/m bits blue. Note the special case "disp16" to support a 16-bit fixed address.

The register combinations for memory accesses may seem random but they were designed to support the needs of high-level languages, such as arrays and data structures. The idea is to add a base register, an index register, and/or a fixed displacement to determine the address.[6] The base register can indicate the start of an array, the index register holds the offset in the array, and the displacement provides the offset of a field in the array entry. The base register is BX for data or BP for information on the stack. The index registers are SI (Source Index) and DI (Destination Index).[7]

Some addressing features are handled by the opcode, not the ModR/M byte. For instance, the ModR/M byte doesn't distinguish between ADD AX,[SI] and ADD [SI],AX. Instead, the two variants are distinguished by bit 1 of the opcode, the D or "direction" bit.[8] Moreover, many instructions have one opcode that operates on words and another that operates on bytes, distinguished by bit 0 of the opcode, the W or word bit.

The D and W bits are an example of orthogonality in the 8086 instruction set, allowing features to be freely combined. For instance, the addressing modes combine 8 types of offset computation with three sizes of displacements and 8 target registers. Arithmetic instructions combine these addressing modes with eight ALU operations, each of which can act on a byte or a word, with two possible memory directions. All of these combinations are implemented with one block of microcode, implementing a large instruction set with a small amount of microcode. (The orthogonality of the 8086 shouldn't be overstated, though; it has many special cases and things that don't quite fit.)
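The following Python sketch shows how the D and W bits combine with the ModR/M fields for the register-register case. The names `decode_alu_opcode` and `describe` are invented for this example. The placement of the ALU operation in bits 5-3 of the opcode, and the order of the eight operations, come from the standard 8086 opcode map rather than from the text above.

```python
# The eight ALU operations, indexed by bits 5-3 of the opcode
# (standard 8086 opcode map; opcodes have the form 00xxx0dw).
ALU_OPS = ["ADD", "OR", "ADC", "SBB", "AND", "SUB", "XOR", "CMP"]
WORD_REGS = ["AX", "CX", "DX", "BX", "SP", "BP", "SI", "DI"]
BYTE_REGS = ["AL", "CL", "DL", "BL", "AH", "CH", "DH", "BH"]

def decode_alu_opcode(opcode):
    """Return (operation, D bit, W bit) for an opcode of form 00xxx0dw."""
    if opcode & 0b11000100:
        raise ValueError("not an ALU reg/mem opcode")
    return ALU_OPS[(opcode >> 3) & 0b111], bool(opcode & 0b10), bool(opcode & 0b01)

def describe(opcode, modrm):
    """Render a register-register ALU instruction as assembly text."""
    op, d, w = decode_alu_opcode(opcode)
    mod, reg, rm = modrm >> 6, (modrm >> 3) & 0b111, modrm & 0b111
    if mod != 0b11:
        raise ValueError("this sketch handles register-register only")
    names = WORD_REGS if w else BYTE_REGS
    # D=0: r/m is the destination; D=1: reg is the destination.
    dst, src = (reg, rm) if d else (rm, reg)
    return f"{op} {names[dst]},{names[src]}"

print(describe(0x01, 0xD8))  # D=0: destination is r/m (AX), source is reg (BX)
print(describe(0x03, 0xC3))  # D=1: destination is reg (AX), source is r/m (BX)
```

Both calls produce ADD AX,BX, which illustrates that a register-register instruction has two possible encodings depending on the D bit.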

An overview of 8086 microcode

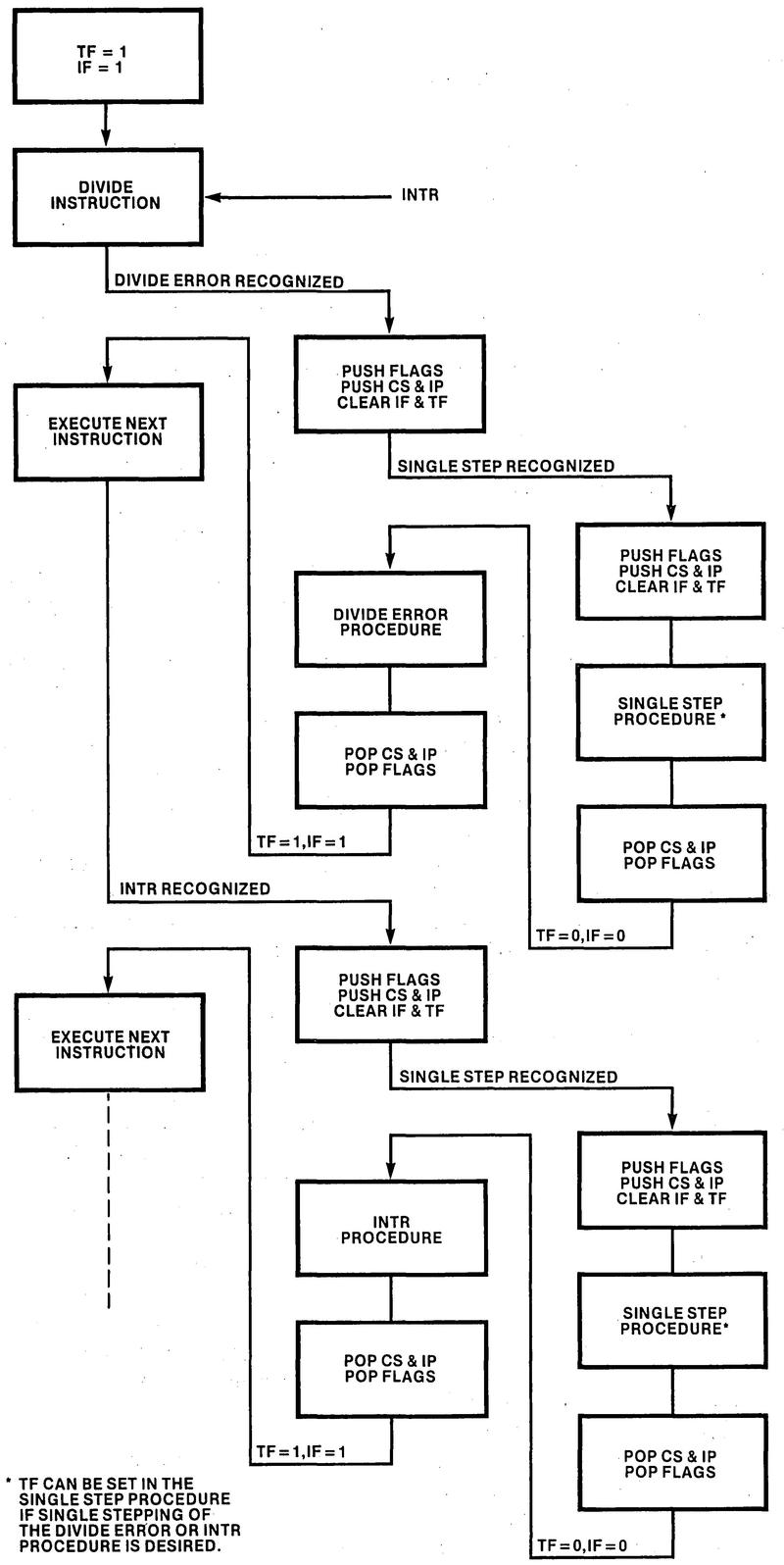

Most people think of machine instructions as the basic steps that a computer performs. However, many processors (including the 8086) have another layer of software underneath: microcode. With microcode, instead of building the control circuitry from complex logic gates, the control logic is largely replaced with code. To execute a machine instruction, the computer internally executes several simpler micro-instructions, specified by the microcode.

The 8086 uses a hybrid approach: although it uses microcode, much of the instruction functionality is implemented with gate logic. This approach removed duplication from the microcode and kept the microcode small enough for 1978 technology. In a sense, the microcode is parameterized. For instance, the microcode can specify a generic Arithmetic/Logic Unit (ALU) operation and a generic register. The gate logic examines the instruction to determine which specific operation to perform and the appropriate register.

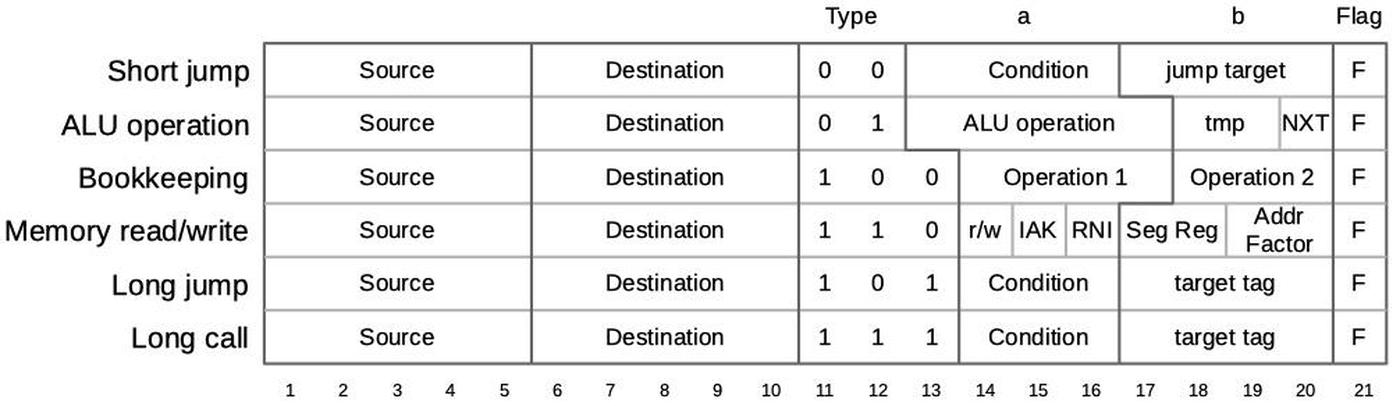

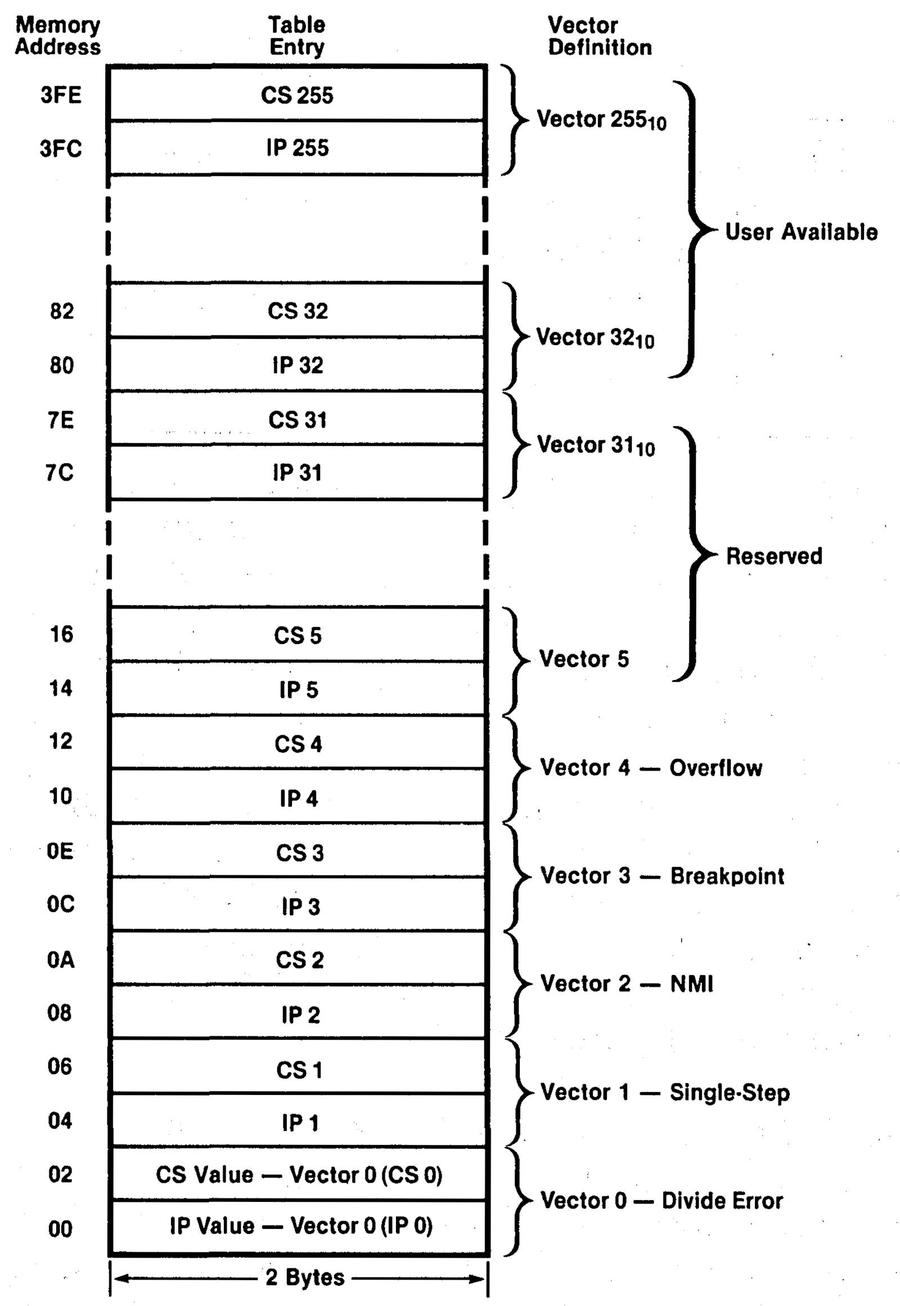

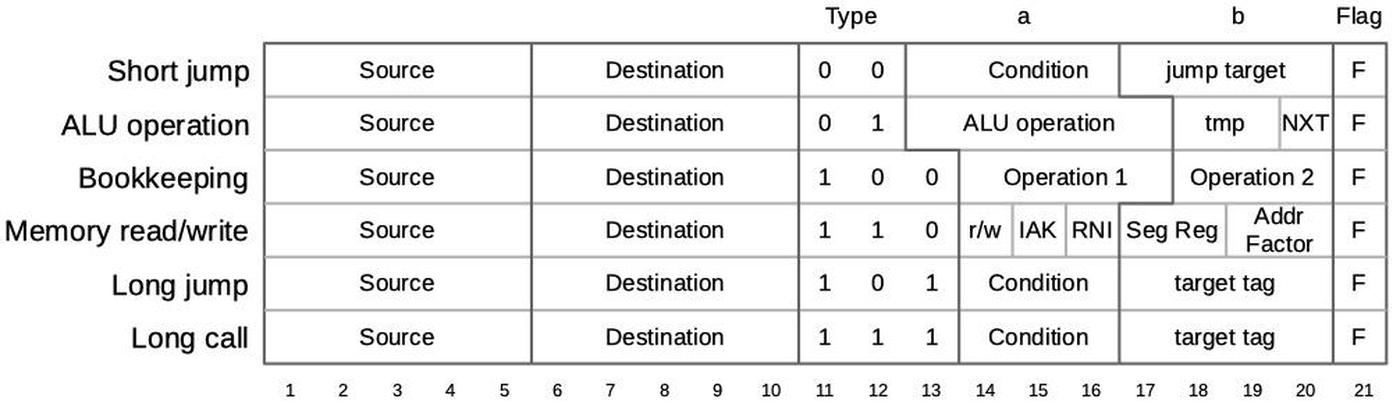

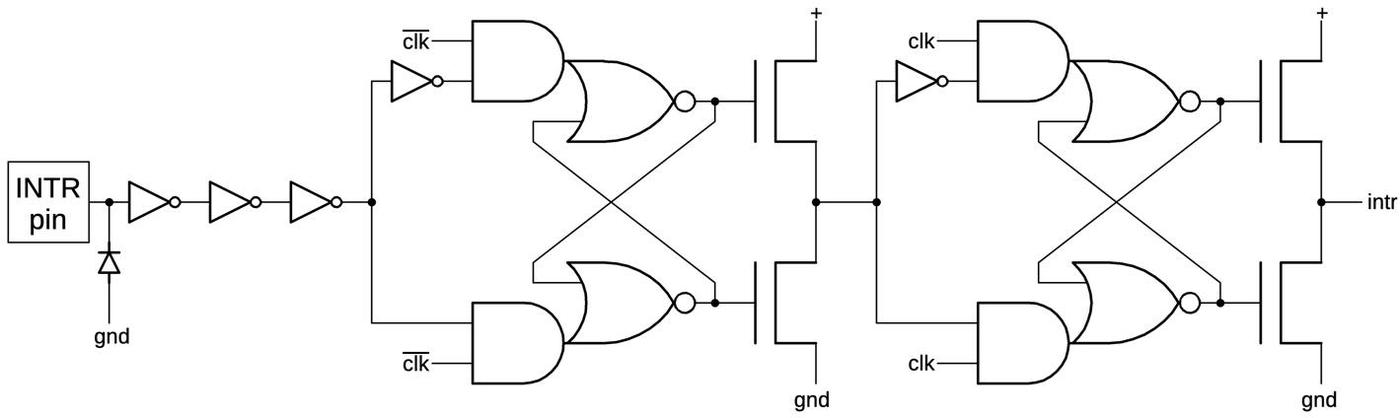

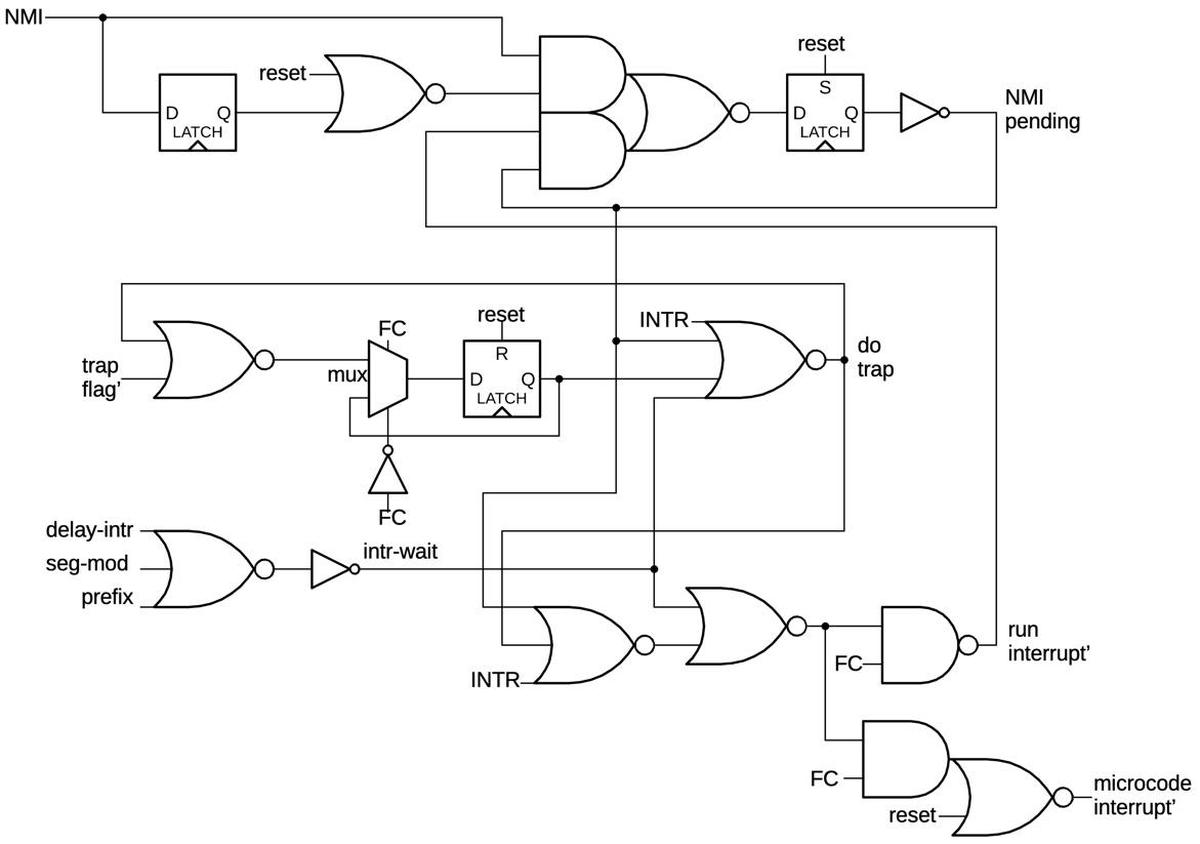

A micro-instruction in the 8086 is encoded into 21 bits as shown below. Every micro-instruction has a move from a source register to a destination register, each specified with 5 bits. The meaning of the remaining bits depends on the type field. A "short jump" is a conditional jump within the current block of 16 micro-instructions. An ALU operation sets up the arithmetic-logic unit to perform an operation. Bookkeeping operations are anything from flushing the prefetch queue to ending the current instruction. A memory operation triggers a bus cycle to read or write memory. A "long jump" is a conditional jump to any of 16 fixed microcode locations (specified in an external table called the Translation ROM). Finally, a "long call" is a conditional subroutine call to one of 16 locations. For more about 8086 microcode, see my microcode blog post.

Some examples of microcode for addressing

In this section, I'll take a close look at a few addressing modes and how they are implemented in microcode. In the next section, I'll summarize all the microcode for addressing modes.

A register-register operation

Let's start by looking at a register-to-register instruction, before we get into the complications of memory accesses: ADD BX,AX which adds AX to BX, storing the result in BX. This instruction has the opcode value 01 and ModR/M value C3 (hex).

Before the microcode starts, the hardware performs some decoding of the opcode. The Group Decode ROM (below) classifies an instruction into multiple categories: this instruction contains a D bit, a W bit, and an ALU operation, and has a ModR/M byte. Fields from the opcode and ModR/M bytes are extracted and stored in various internal registers. The ALU operation type (ADD) is stored in the ALU opr register. From the ModR/M byte, the reg register code (AX) is stored in the N register, and the r/m register code (BX) is stored in the M register. (The M and N registers are internal registers that are invisible to the programmer; each holds a 5-bit register code that specifies a register.[9])

Once the preliminary decoding is done, the microcode below for this ALU instruction is executed.[10] (There are three micro-instructions, so the instruction takes three clock cycles.) Each micro-instruction contains a move and an action. First, the register specified by M (i.e. BX) is moved to the ALU's temporary A register (tmpA). Meanwhile, the ALU is configured to perform the appropriate operation on tmpA (XI indicates that the ALU operation is specified by the instruction bits, i.e. ADD). The second micro-instruction moves the register specified by N (i.e. AX) to the ALU's tmpB register. The action NX indicates that this is the next-to-last micro-instruction so the microcode engine can start processing the next machine instruction. The last micro-instruction stores the ALU's result (Σ) in the register indicated by M (i.e. BX). The status flags are updated because of the F. WB,RNI (Run Next Instruction) indicates that this is the end and the microcode engine can process the next machine instruction. The WB prefix would skip the actions if a memory writeback were pending (which is not the case).

| move | action | |
|---|---|---|
| M → tmpA | XI tmpA | ALU rm↔r: BX to tmpA |
| N → tmpB | WB,NX | AX to tmpB |
| Σ → M | WB,RNI F | result to BX, run next instruction. |

This microcode packs a lot into three micro-instructions. Note that it is very generic: the microcode doesn't know what ALU operation is being performed or which registers are being used. Instead, the microcode deals with abstract registers and operations, while the hardware fills in the details using bits from the instructions. The same microcode is used for eight different ALU operations. And as we'll see, it supports multiple addressing modes.
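One way to see how generic this routine is: model it in Python with the M and N register names and the ALU operation passed in as parameters, standing in for the values the hardware extracts from the instruction. This is a simplified sketch. The function name `alu_rm_r` is made up, flags are not modeled, and values are treated as 16-bit words.

```python
import operator

def alu_rm_r(regs, m, n, alu_op):
    """Model the three micro-instructions for register operands.

    m and n play the role of the M and N registers (register names),
    and alu_op stands in for the operation selected by XI.
    """
    tmp_a = regs[m]                        # M -> tmpA, XI tmpA
    tmp_b = regs[n]                        # N -> tmpB, WB,NX
    sigma = alu_op(tmp_a, tmp_b) & 0xFFFF  # ALU result, wrapped to 16 bits
    regs[m] = sigma                        # Σ -> M, WB,RNI F (flags omitted)
    return regs

# ADD BX,AX: M selects BX, N selects AX.
regs = alu_rm_r({"AX": 2, "BX": 3}, m="BX", n="AX", alu_op=operator.add)
print(regs["BX"])
```

The same function handles subtraction or XOR by passing `operator.sub` or `operator.xor`, much as the same microcode serves all eight ALU operations.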

Using memory as the destination

Memory operations on the 8086 involve both microcode and hardware. A memory operation uses two internal registers: IND (Indirect) holds the memory address, while OPR (Operand) holds the word that is read or written. A typical memory micro-instruction is R DS,P0, which starts a read from the Data Segment with a "Plus 0" on the IND register afterward. The Bus Interface Unit carries out this operation by adding the segment register to compute the physical address, and then running the memory bus cycles.
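As a small illustration of the address addition performed by the Bus Interface Unit, the sketch below uses the standard 8086 rule that the segment register is shifted left by four bits before being added to the 16-bit offset, giving a 20-bit physical address. The shift and the 20-bit wraparound are general 8086 facts rather than something stated above, and the function name `physical_address` is made up for the example.

```python
def physical_address(segment, offset):
    """Combine a 16-bit segment and 16-bit offset into a 20-bit address."""
    return ((segment << 4) + offset) & 0xFFFFF  # wraps at 1 megabyte

# A read through DS=0x1234 with IND=0x0010.
print(hex(physical_address(0x1234, 0x0010)))
```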

With that background, let's look at the instruction ADD [SI],AX, which adds AX to the memory location indexed by SI. As before, the hardware performs some analysis of the instruction (hex 01 04). In the ModR/M byte, mod=00 (memory, no displacement), reg=000 (AX), and r/m=100 ([SI]). The N register is loaded with the code for AX as before. The M register, however, is loaded with OPR (the memory data register) since the Group Decode ROM determines that the instruction has a memory addressing mode.

The microcode below starts in an effective address microcode subroutine for the [SI] mode. The first line of the microcode subroutine computes the effective address simply by loading the tmpA register with SI. It jumps to the micro-routine EAOFFSET which ends up at EALOAD (for reasons that will be described below), which loads the value from memory. Specifically, EALOAD puts the address in IND, reads the value from memory, puts the value into tmpB, and returns from the subroutine.

| move | action | |
|---|---|---|
| SI → tmpA | JMP EAOFFSET | [SI]: put SI in tmpA |
| tmpA → IND | R DS,P0 | EALOAD: read memory |
| OPR → tmpB | RTN | |
| M → tmpA | XI tmpA | ALU rm↔r: OPR to tmpA |
| N → tmpB | WB,NX | AX to tmpB |
| Σ → M | WB,RNI F | result to OPR |
| | W DS,P0 RNI | writes result to memory |

Microcode execution continues with the ALU rm↔r routine described above, but with a few differences. The M register indicates OPR, so the value read from memory is put into tmpA. As before, the N register specifies AX, so that register is put into tmpB. In this case, the WB,NX determines that the result will be written back to memory so it skips the NX operation. The ALU's result (Σ) is stored in OPR as directed by M. The WB,RNI is skipped so microcode execution continues. The W DS,P0 micro-instruction writes the result (in OPR) to the memory address in IND. At this point, RNI terminates the microcode sequence.

A lot is going on here to add two numbers! The main point is that the same microcode runs as in the register case, but the results are different due to the M register and the conditional WB code. By running different subroutines, different effective address computations can be performed.

Using memory as the source

Now let's look at how the microcode uses memory as a source, as in the instruction ADD AX,[SI]. This instruction (hex 03 04) has the same ModR/M byte as before, so the N register holds AX and the M register holds OPR. However, because the opcode has the D bit set, the M and N registers are swapped when accessed. Thus, when the microcode uses M, it gets the value AX from N, and vice versa. (Yes, this is confusing.)

The microcode starts the same as the previous example, reading [SI] into tmpB and returning to the ALU code. However, since the meanings of M and N are reversed, the AX value goes into tmpA while the memory value goes into tmpB. (This switch doesn't matter for addition, but would matter for subtraction.) An important difference is that there is no writeback to memory, so WB,NX starts processing the next machine instruction. In the last micro-instruction, the result is written to M, indicating the AX register. Finally, WB,RNI runs the next machine instruction.

| move | action | |
|---|---|---|
| SI → tmpA | JMP EAOFFSET | [SI]: put SI in tmpA |
| tmpA → IND | R DS,P0 | EALOAD: read memory |
| OPR → tmpB | RTN | |
| M → tmpA | XI tmpA | ALU rm↔r: AX to tmpA |
| N → tmpB | WB,NX | OPR to tmpB |
| Σ → M | WB,RNI F | result to AX, run next instruction. |

The main point is that the same microcode handles memory as a source and a destination, simply by setting the D bit. First, the D bit reverses the operands by swapping M and N. Second, the WB conditionals prevent the writeback to memory that happened in the previous case.
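The two memory cases can be modeled with a single Python function, in the same spirit as the shared microcode. This is a loose sketch under several assumptions: memory is a dictionary of 16-bit words keyed by effective address, the function name `alu_mem_reg` is invented, flags and segments are ignored, and the "writeback pending" condition is approximated by checking whether M resolves to OPR (in the real chip this is determined by hardware from the instruction).

```python
import operator

def alu_mem_reg(regs, memory, ea, reg_name, d_bit, alu_op=operator.add):
    """Model ADD [ea],reg (D=0) and ADD reg,[ea] (D=1) with one routine."""
    ind = ea                           # EALOAD: tmpA -> IND
    opr = memory[ind]                  # R DS,P0 reads the word into OPR
    values = {"OPR": opr, reg_name: regs[reg_name]}
    m, n = "OPR", reg_name             # M and N as loaded by the decoder
    if d_bit:
        m, n = n, m                    # D bit swaps M and N when accessed
    tmp_a = values[m]                  # M -> tmpA, XI tmpA
    tmp_b = values[n]                  # N -> tmpB, WB,NX
    sigma = alu_op(tmp_a, tmp_b) & 0xFFFF
    if m == "OPR":                     # writeback pending (simplified test)
        memory[ind] = sigma            # Σ -> OPR, then W DS,P0 RNI
        return "memory"
    regs[m] = sigma                    # Σ -> M, WB,RNI F
    return "register"

regs, memory = {"AX": 5}, {0x100: 7}
print(alu_mem_reg(regs, memory, 0x100, "AX", d_bit=False), memory[0x100])
print(alu_mem_reg(regs, memory, 0x100, "AX", d_bit=True), regs["AX"])
```

Passing `operator.sub` shows why the operand swap matters: with D=0 the result is memory minus AX, while with D=1 it is AX minus memory.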

Using a displacement

The memory addressing modes optionally support a signed displacement of one or two bytes. Let's look at the instruction ADD AX,[SI+0x1234]. In hex, this instruction is 03 84 34 12, where the last two bytes are the displacement, reversed because the 8086 uses little-endian numbers. The mod bits are 10, indicating a 16-bit displacement, but the other bits are the same as in the previous example.

Microcode execution again starts with the [SI] subroutine. However, the jump to EAOFFSET goes to [i] this time, to handle the displacement offset. (I'll explain how, shortly.) This code loads the offset as two bytes from the instruction prefetch queue (Q) into the tmpB register. It adds the offset to the previous address in tmpA and puts the sum Σ in tmpA, computing the effective address. Then it jumps to EAFINISH (EALOAD). From there, the code continues as earlier, reading an argument from memory and computing the sum.

| move | action | |
|---|---|---|
| SI → tmpA | JMP EAOFFSET | [SI]: put SI in tmpA |
| Q → tmpBL | JMPS MOD1 12 | [i]: load from queue, conditional jump |
| Q → tmpBH | | |
| Σ → tmpA | JMP EAFINISH | 12: |
| tmpA → IND | R DS,P0 | EALOAD: read memory |
| OPR → tmpB | RTN | |
| M → tmpA | XI tmpA | ALU rm↔r: AX to tmpA |
| N → tmpB | WB,NX | OPR to tmpB |
| Σ → M | WB,RNI F | result to AX, run next instruction. |

For the one-byte displacement case, the conditional MOD1 will jump over the fetch of the second displacement byte. When the first byte is loaded into the low byte of tmpB, it is sign-extended into the high byte.[14] Thus, the one-byte displacement case uses the same microcode but ends up with a sign-extended 1-byte displacement in tmpB.
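The displacement fetch can be sketched in Python as follows. The function name `load_displacement` and the use of a list for the prefetch queue are assumptions for this example; the logic follows the description above, where loading the low byte sign-extends into the high byte and the second fetch is skipped when mod is 01.

```python
def load_displacement(queue, mod):
    """Model the [i] routine's loading of tmpB from the prefetch queue.

    queue is a list of instruction bytes following the ModR/M byte.
    """
    low = queue[0]
    # Q -> tmpBL: the low byte is sign-extended into the high byte.
    tmp_b = low | (0xFF00 if low & 0x80 else 0x0000)
    if mod != 0b01:
        # JMPS MOD1 not taken: Q -> tmpBH overwrites the high byte.
        tmp_b = (queue[1] << 8) | low
    return tmp_b

print(hex(load_displacement([0x34, 0x12], mod=0b10)))  # 16-bit displacement
print(hex(load_displacement([0x9C], mod=0b01)))        # 8-bit displacement of -100
```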

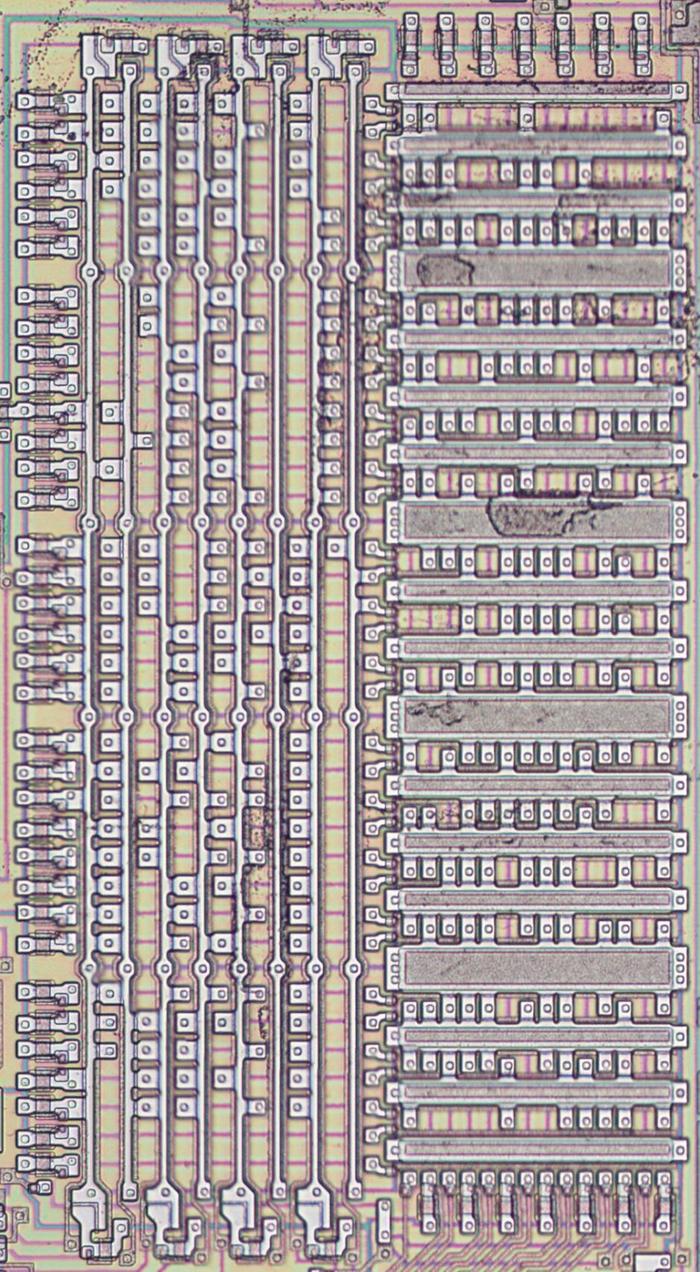

The Translation ROM

Now let's take a closer look at the jumps to EAOFFSET, EAFINISH, and the effective address subroutines, which use something called the Translation ROM. The Translation ROM converts the 5-bit jump tag in a micro-instruction into a 13-bit microcode address. It also provides the addresses of the effective address subroutines. As will be seen below, there are some complications.[11]

The effective address micro-routines

Register calculations

The Translation ROM has an entry for the addressing mode calculations such as [SI] and [BP+DI], generally indicated by the r/m bits, the three low bits of the ModR/M byte. Each routine computes the effective address and puts it into the ALU's temporary A register and jumps to EAOFFSET, which adds any displacement offset. The microcode below shows the four simplest effective address calculations, which just load the appropriate register into tmpA.

| move | action | |
|---|---|---|
| SI → tmpA | JMP EAOFFSET | [SI]: load SI into tmpA |
| DI → tmpA | JMP EAOFFSET | [DI]: load DI into tmpA |
| BP → tmpA | JMP EAOFFSET | [BP]: load BP into tmpA |
| BX → tmpA | JMP EAOFFSET | [BX]: load BX into tmpA |

For the cases below, an addition is required, so the registers are loaded into the ALU's temporary A and temporary B registers. The effective address is the sum (indicated by Σ), which is moved to temporary A.[12] These routines are carefully arranged in memory so [BX+DI] and [BP+SI] each execute one micro-instruction and then jump into the middle of the other routines, saving code.[13]

| move | action | |
|---|---|---|
| BX → tmpA | | [BX+SI]: get regs |
| SI → tmpB | | 1: |
| Σ → tmpA | JMP EAOFFSET | |
| BP → tmpA | | [BP+DI]: get regs |
| DI → tmpB | | 4: |
| Σ → tmpA | JMP EAOFFSET | |
| BX → tmpA | JMPS 4 | [BX+DI]: short jump to 4 |
| BP → tmpA | JMPS 1 | [BP+SI]: short jump to 1 |

The EAOFFSET and EAFINISH targets

After computing the register portion of the effective address, the routines above jump to EAOFFSET, but this is not a fixed target. Instead, the Translation ROM selects one of three target microcode addresses based on the instruction and the ModR/M byte:

- If there's a displacement, the microcode jumps to [i] to add the displacement value.
- If there is no displacement but a memory read is needed, the microcode jumps to EALOAD to load the memory contents.
- If there is no displacement and no memory read should take place, the microcode jumps to EADONE.

In other words, the microcode jump is a three-way branch that is implemented by the Translation ROM and is transparent to the microcode.

For a displacement, the [i] immediate code below loads a 1-byte or 2-byte displacement into the tmpB register and adds it to the tmpA register, as described earlier. At the end of a displacement calculation, the microcode jumps to the EAFINISH tag, which is another branching target. Based on the instruction, the Translation ROM selects one of two microcode targets: EALOAD to load from memory, or EADONE to skip the load.

| move | action | |
|---|---|---|
| Q → tmpBL | JMPS MOD1 12 | [i]: get byte(s) |
| Q → tmpBH | | |
| Σ → tmpA | JMP EAFINISH | 12: add displacement |

The EALOAD microcode below reads the value from memory, using the effective address in tmpA. It puts the result in tmpB. The RTN micro-instruction returns to the microcode that implements the original machine instruction.

| move | action | |
|---|---|---|
| tmpA → IND | R DS,P0 | EALOAD: read from tmpA address |
| OPR → tmpB | RTN | store result in tmpB, return |

The EADONE routine puts the effective address in IND, but it doesn't read from the memory location. This supports machine instructions such as MOV (some moves) and LEA (Load Effective Address) that don't read from memory.

| move | action | |
|---|---|---|
| tmpA → IND | RTN | EADONE: store effective address in IND |

To summarize, the microcode runs different subroutines and different paths, depending on the addressing mode, executing the appropriate code. The Translation ROM selects the appropriate control flow path.
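The control flow chosen by the Translation ROM can be summarized as a small Python sketch. The function names here are invented, and the model is deliberately simplified: it covers only the standard memory modes (not mod=11 or the fixed-address case), and it lists the named routines visited without modeling the short jumps between the two-register routines.

```python
# Entry routine for each r/m code, following the r/m table.
RM_ROUTINES = ["[BX+SI]", "[BX+DI]", "[BP+SI]", "[BP+DI]",
               "[SI]", "[DI]", "[BP]", "[BX]"]

def resolve_eafinish(reads_memory):
    """EAFINISH: two-way choice between loading and skipping the load."""
    return "EALOAD" if reads_memory else "EADONE"

def resolve_eaoffset(mod, reads_memory):
    """EAOFFSET: three-way choice made by the Translation ROM."""
    if mod in (0b01, 0b10):
        return "[i]"                    # a displacement follows
    return resolve_eafinish(reads_memory)

def ea_path(mod, rm, reads_memory):
    """List the named microcode routines visited for one addressing mode."""
    path = [RM_ROUTINES[rm]]
    target = resolve_eaoffset(mod, reads_memory)
    path.append(target)
    if target == "[i]":
        path.append(resolve_eafinish(reads_memory))
    return path

print(ea_path(0b00, 0b100, reads_memory=True))   # ADD AX,[SI]
print(ea_path(0b10, 0b100, reads_memory=True))   # ADD AX,[SI+0x1234]
print(ea_path(0b00, 0b111, reads_memory=False))  # e.g. LEA with [BX]
```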

Special cases

There are a couple of special cases in addressing that I will discuss in this section.

Supporting a fixed address

It is common to access a fixed memory address, but the standard addressing modes use a base or index register. The 8086 replaces the mode of [BP] with no displacement with 16-bit fixed addressing. In other words, a ModR/M byte with the pattern 00xxx110 is treated specially. (This special case is the orange disp16 line in the ModR/M table earlier.) This is implemented in the Translation ROM which has additional rows to detect this pattern and execute the immediate word [iw] microcode below instead. This microcode fetches a word from the instruction prefetch queue (Q) into the tmpA register, a byte at a time. It jumps to EAFINISH instead of EAOFFSET because it doesn't make sense to add another displacement.

| move | action | |
|---|---|---|
| Q → tmpAL | | [iw]: get bytes |
| Q → tmpAH | JMP EAFINISH | |
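The 00xxx110 pattern match is easy to express with a bit mask. The Python sketch below is only an illustration of the pattern and of the byte-at-a-time word fetch; the function names `is_fixed_address` and `fetch_word` are made up, and the prefetch queue is represented as a plain list.

```python
def is_fixed_address(modrm):
    """True if the ModR/M byte matches 00xxx110 (ignore the reg field)."""
    return (modrm & 0b11000111) == 0b00000110

def fetch_word(queue):
    """Model [iw]: low byte first (Q -> tmpAL), then high byte (Q -> tmpAH)."""
    tmp_a_low = queue[0]
    tmp_a_high = queue[1]
    return (tmp_a_high << 8) | tmp_a_low

# ADD AX,[1234h] is encoded as 03 06 34 12.
modrm, rest = 0x06, [0x34, 0x12]
if is_fixed_address(modrm):
    print(hex(fetch_word(rest)))
```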

Selecting the segment

Memory accesses in the 8086 are relative to one of the 64-kilobyte segments: Data Segment, Code Segment, Stack Segment, or Extra Segment. Most addressing modes use the Data Segment by default. However, addressing modes that use the BP register use the Stack Segment by default. This is a sensible choice since the BP (Base Pointer) register is intended for accessing values on the stack.

This special case is implemented in the Translation ROM. It has an extra output bit that indicates that the addressing mode should use the Stack Segment. Since the Translation ROM is already decoding the addressing mode to select the right microcode routine, adding one more output bit is straightforward. This bit goes to the segment register selection circuitry, changing the default segment. This circuitry also handles prefixes that change the segment. Thus, segment register selection is handled in hardware without any action by the microcode.
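The default-segment rule can be written as a short Python function. This is a sketch of the behavior, not of the circuitry: the function names are invented, and the treatment of the fixed-address mode (which reuses the [BP] encoding but defaults to the Data Segment) reflects standard 8086 behavior rather than something spelled out above.

```python
def default_segment(mod, rm):
    """Return the default segment for a memory-mode ModR/M byte."""
    if mod == 0b11:
        return None                     # register operand, no segment
    if mod == 0b00 and rm == 0b110:
        return "DS"                     # fixed address: BP is not used
    # r/m codes 010, 011 and 110 are the modes that involve BP.
    return "SS" if rm in (0b010, 0b011, 0b110) else "DS"

def select_segment(mod, rm, prefix=None):
    """A segment override prefix, if present, replaces the default."""
    return prefix or default_segment(mod, rm)

print(default_segment(0b01, 0b110))       # [BP+disp8]
print(default_segment(0b00, 0b100))       # [SI]
print(select_segment(0b01, 0b110, "ES"))  # ES: [BP+disp8]
```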

Conclusions

I hope you have enjoyed this tour through the depths of 8086 microcode. The effective address calculation in the 8086 uses a combination of microcode and logic circuitry to implement a variety of addressing methods. Special cases make the addressing modes more useful, but make the circuitry more complicated. This shows the CISC (Complex Instruction Set Computer) philosophy of x86, making the instructions complicated but highly functional. In contrast, the RISC (Reduced Instruction Set Computer) philosophy takes the opposite approach, making the instructions simpler but allowing the processor to run faster. RISC vs. CISC was a big debate of the 1980s, but isn't as relevant nowadays.

People often ask if microcode could be updated on the 8086. Microcode was hardcoded into the ROM, so it could not be changed. This became a big problem for Intel with the famous Pentium floating-point division bug. The Pentium chip turned out to have a bug that resulted in rare but serious errors when dividing. Intel recalled the defective processors in 1994 and replaced them at a cost of $475 million. Starting with the Pentium Pro (1995), microcode could be patched at boot time, a useful feature that persists in modern CPUs.

I've written multiple posts on the 8086 so far and plan to continue reverse-engineering the 8086 die so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @oldbytes.space@kenshirriff.

Notes and references

-

There are additional addressing modes that don't use a ModR/M byte. For instance, immediate instructions use a constant in the instruction. For instance

ADD AX,42adds 42 to the AX register. Other instructions implicitly define the addressing mode. I'm ignoring these instructions for now. ↩ -

The 8086 supports more addressing modes than the ModR/M byte provides, by using separate opcodes. For instance, arithmetic instructions can take an "immediate" value, an 8- or 16-bit value specified as part of the instruction. Other instructions operate on specific registers rather than memory or access memory through the stack. For this blog post, I'm focusing on the ModR/M modes and ignoring the other instructions. Also, although I'm discussing the 8086, this blog post applies to the Intel 8088 processor as well. The 8088 has an 8-bit bus, a smaller prefetch queue, and some minor internal changes, but for this post you can consider them to be the same. ↩

-

My assembly code examples are based on Intel ASM86 assembly syntax. There's a completely different format of x86 assembly language known as AT&T syntax. Confusingly, it reverses the source and destination. For example, in AT&T syntax,

addw %bx, %cx%stores the result in CX. AT&T syntax is widely used, for instance in Linux code. The AT&T syntax is based on earlier PDP-11 assembly code. ↩ -

The term "effective address" dates back to the 1950s, when computers moved beyond fixed memory addresses and started using index registers. The earliest uses that I could find are from 1955 for the IBM 650 data processing machine and the IBM 704 mainframe. The "Load Effective Address" instruction, which provides the effective address as a value instead of performing the memory access, was perhaps introduced in the IBM System/360 (1964) under the name "Load Address". It has been a part of many subsequent processors including the 8086. ↩

-

Note that the ModR/M byte has the bits grouped in threes (as do many instructions). This is due to the octal heritage of the 8086, dating back through the 8080 and the 8008 to the Datapoint 2200 (which used TTL chips to decode groups of three bits). Although the 8086 instruction set is invariably described in hexadecimal, it makes much more sense when viewed in octal. See x86 is an octal machine for details. ↩

-

The 8086's addressing schemes are reminiscent of the IBM System/360 (1964). In particular, System/360 had a "RX" instruction format that accessed memory through a base register plus an index register plus a displacement, using another register for the other argument. This is very similar to the 8086's base + index + displacement method. The System/360's "RR" (register-register) instruction format accessed two registers, much like the register mode of the ModR/M byte. The details are very different, though, between the two systems. See the IBM System/360 Principles of Operation for more details. ↩

-

The motivation behind the ModR/M options is discussed in The 8086/8088 Primer by 8086 designer Steve Morse, pages 23-33. ↩

-

The D bit is usually called the register direction bit, but the designer of the 8086 instruction set calls it the destination field; see The 8086/8088 Primer, Steve Morse, page 28. To summarize:

If the bit is 0, the result is stored into the location indicated by themodandr/mfields while the register specified byregis the source.

If the bit is 1, the result is stored into the register indicated by theregfield.For the

Wword bit, 0 indicates a byte operation and 1 indicates a word operation.One curious side-effect of the D bit is that an instruction like

ADD AX,BXcan be implemented in two ways since both arguments are registers. Theregfield can specifyAXwhile ther/mfield specifiesBXor vice versa, depending on the D bit. Different 8086 assemblers can be "fingerprinted" based on their decisions in these ambiguous cases. ↩ -

The M and N registers hold a 5-bit code. This code indicates a 16-bit register (e.g.

AXorIND), an 8-bit register (e.g.AL), or a special value (e.g.Σ, the ALU result;ZEROS, all zero bits; orF, the flags). The 3-bit register specification is mapped onto the 5-bit code depending on whether the W bit is set (byte or word register), or if the operation is specifying a segment register. ↩ -

The microcode listings are based on Andrew Jenner's disassembly. I have made some modifications to (hopefully) make it easier to understand. ↩

-

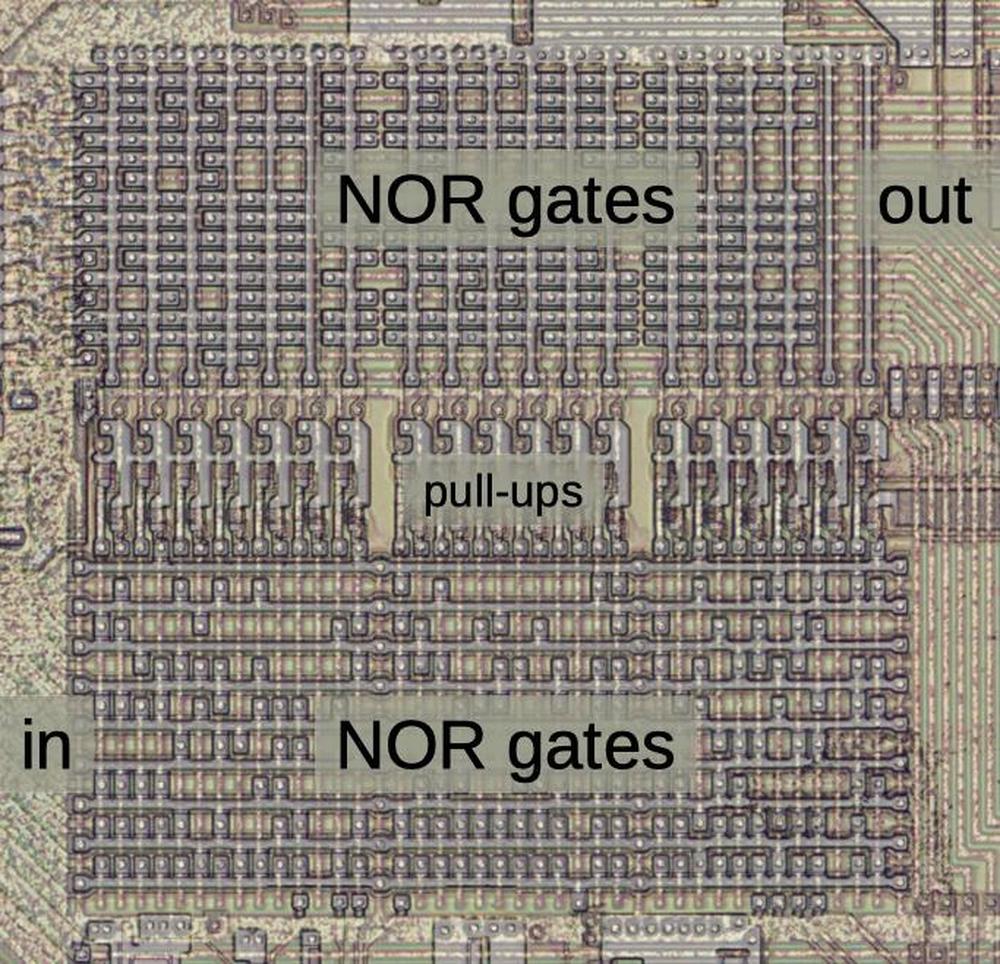

You can also view the Translation ROM as a PLA (Programmable Logic Array) constructed from two layers of NOR gates. The conditional entries make it seem more like a PLA than a ROM. Technically, it can be considered a ROM since a single row is active at a time. I'm using the name "Translation ROM" because that's what Intel calls it in the patents. ↩

-

Normally, an ALU operation requires a micro-instruction to specify the desired ALU operation and temporary register. For the address addition, the ALU operation is not explicitly specified because it uses the ALU's default, of adding

tmpAandtmpB. The ALU is reset to this default at the beginning of each machine instruction. ↩ -

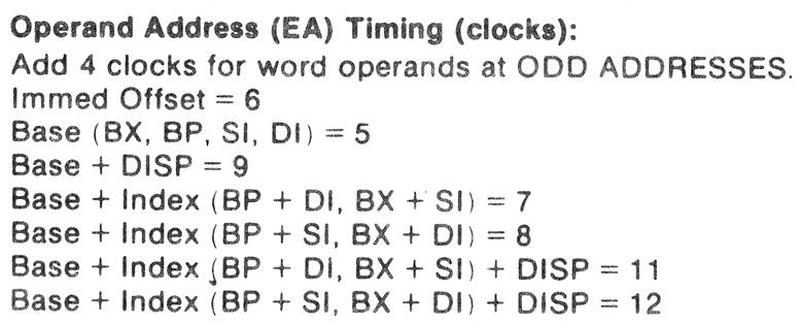

A microcode jump takes an extra clock cycle for the microcode address register to get updated. This is why, for instance,

[BP+DI]takes 7 clock cycles but[BX+DI]takes 8 clock cycles. Thus, the 8086 implementers took the tradeoff of slowing down some addressing modes by a clock cycle in order to save a few micro-instructions in the small microcode ROM.This table shows the clock cycles required for effective address calculations. From MCS-86 Assembly Language Reference Guide. -

A one-byte signed number can be sign-extended into a two-byte signed number. This is done by copying the top bit (the sign) from the low byte and filling the top byte with that bit. For example, 0x64 is sign-extended to 0x0064 (+100), while 0x9c is sign-extended to 0xff9c (-100). ↩