The Soviet space program used completely different controls and instruments from American spacecraft. One of the most interesting navigation instruments onboard Soyuz spacecraft was the Globus, which used a rotating globe to indicate the spacecraft's position above the Earth. This navigation instrument was an electromechanical analog computer that used an elaborate system of gears, cams, and differentials to compute the spacecraft's position. Officially, the unit was called a "space navigation indicator" with the Russian acronym ИНК (INK),1 but I'll use the more descriptive nickname "Globus".

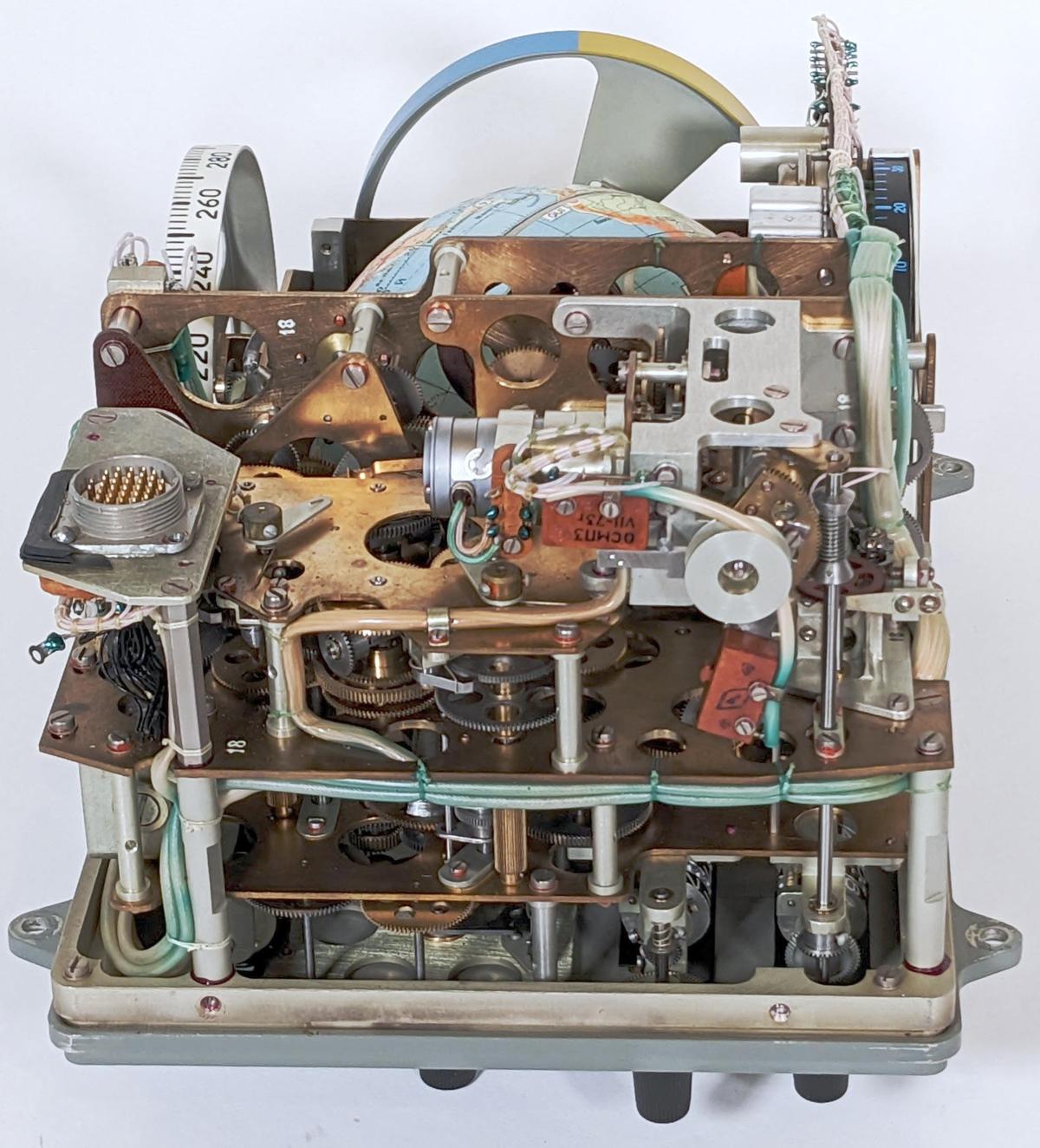

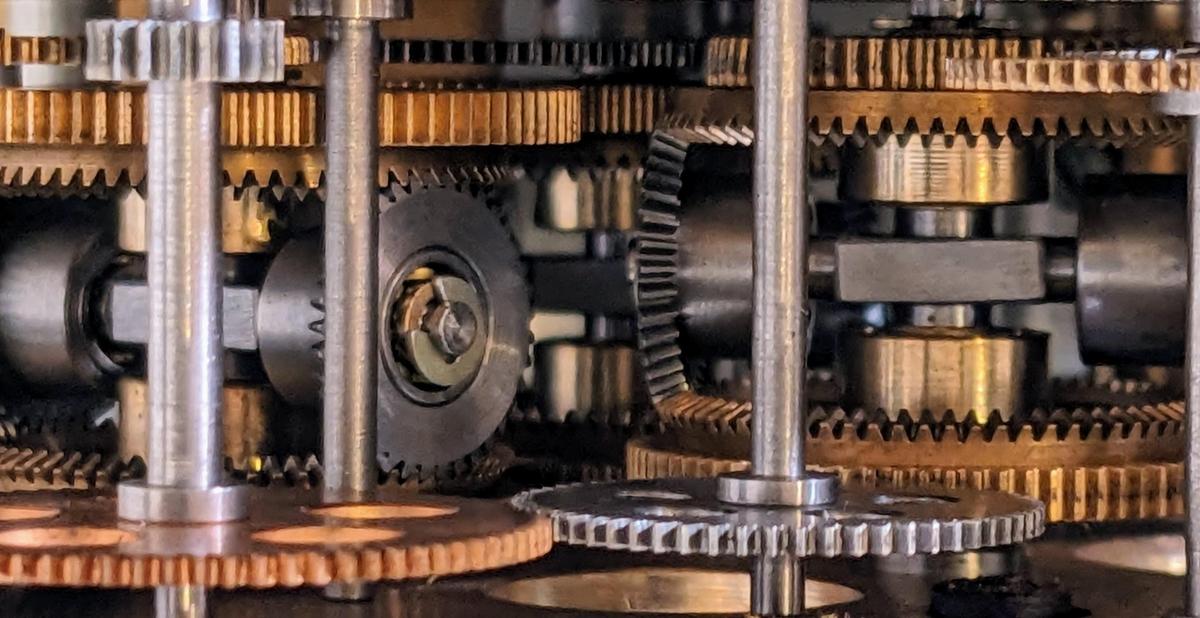

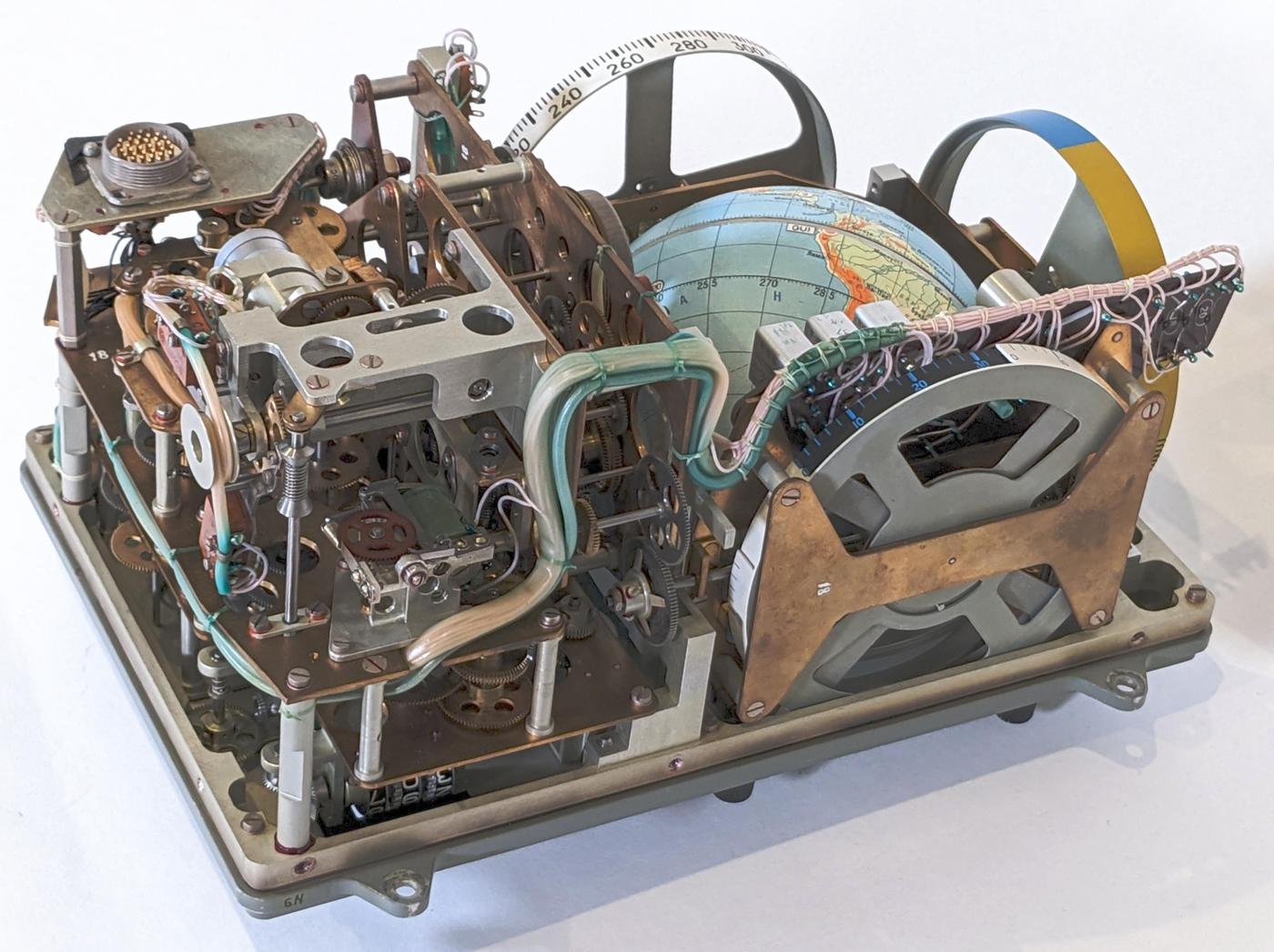

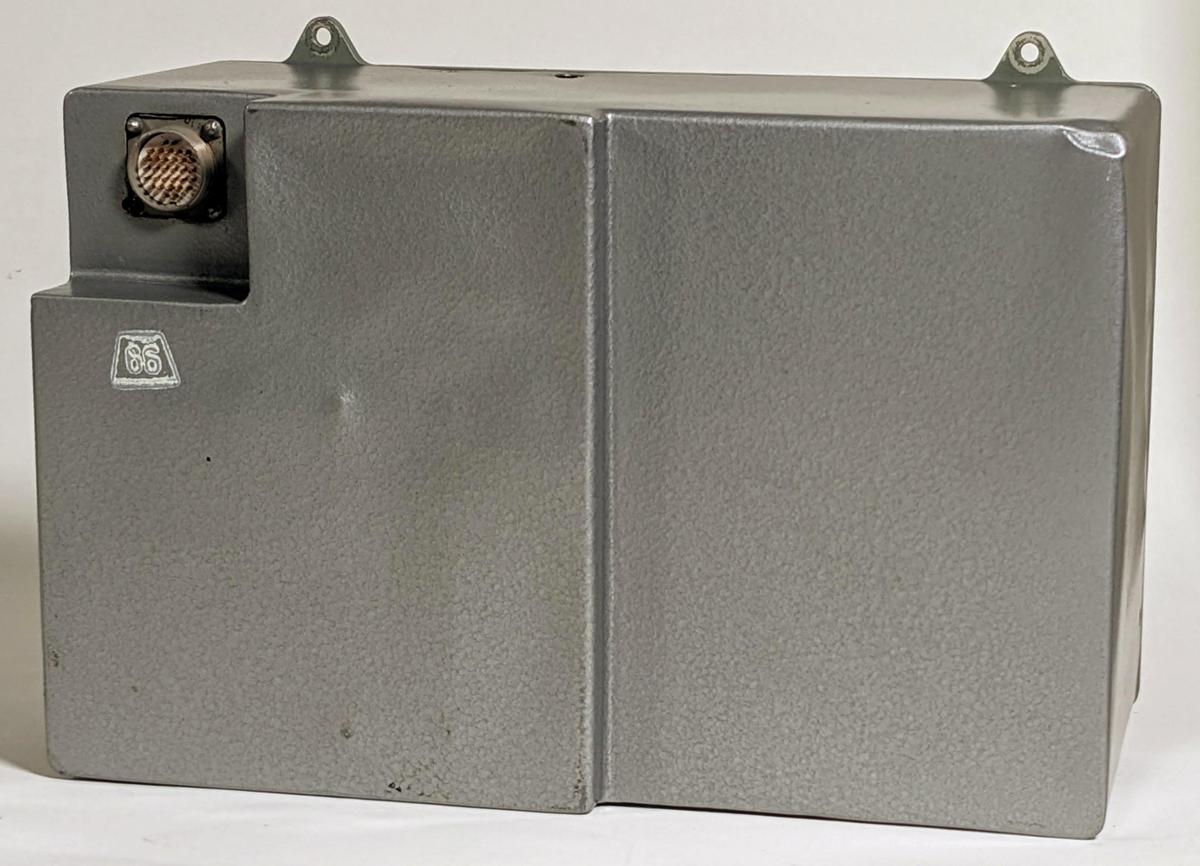

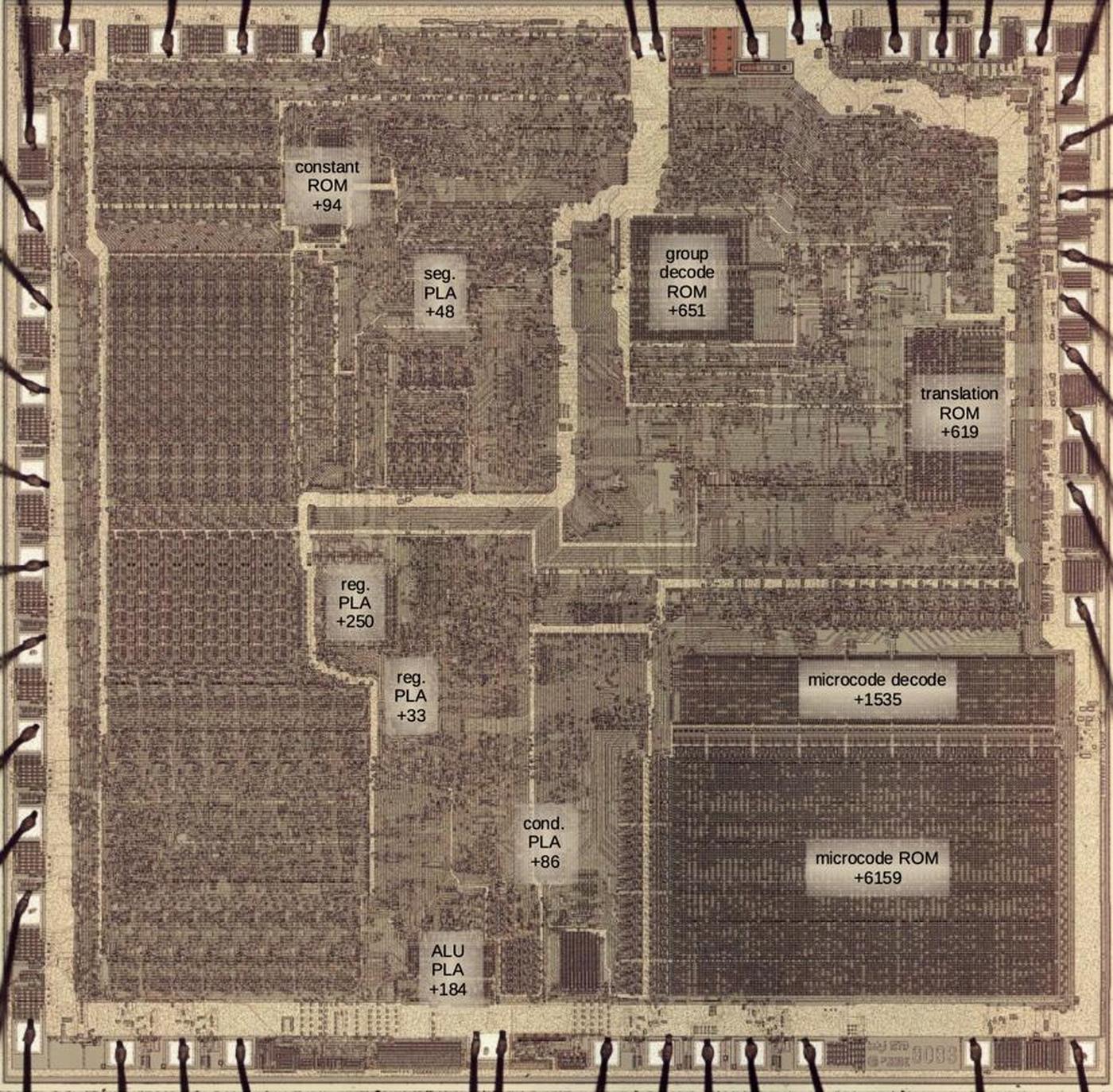

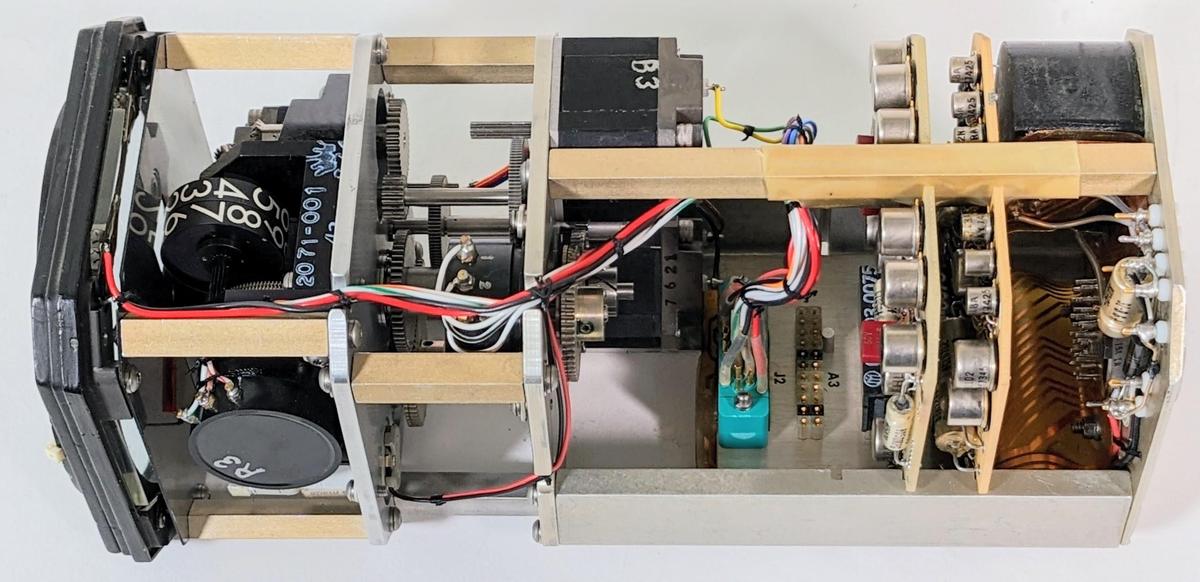

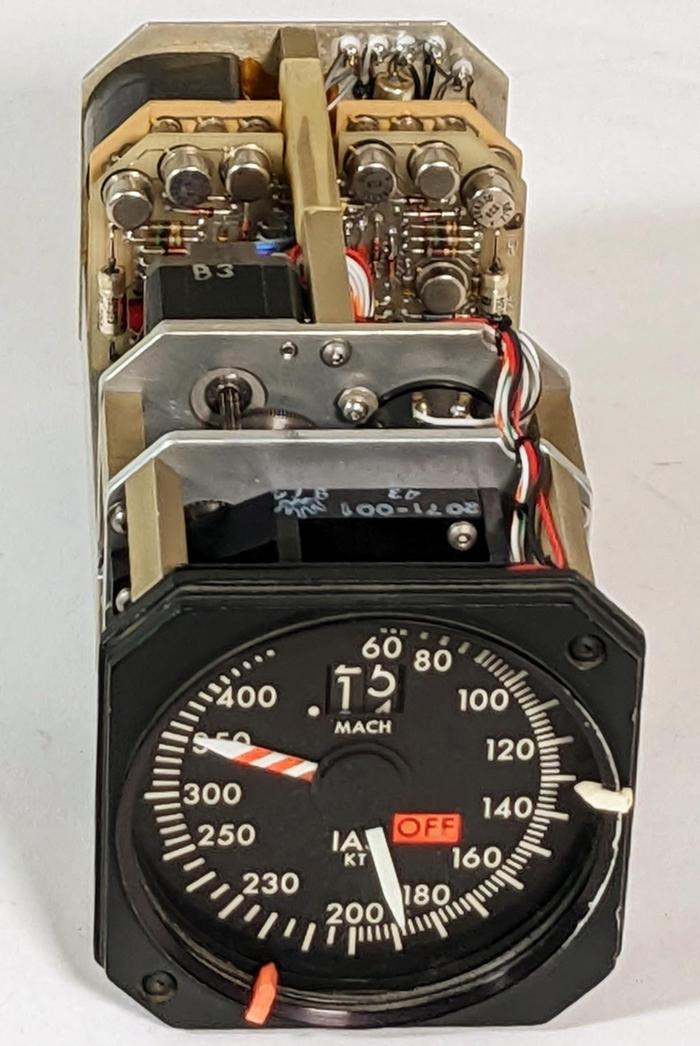

We recently received a Globus from a collector and opened it up for repair and reverse engineering. In this blog post, I explain how it operated, show its internal mechanisms, and describe what I've learned so far from reverse engineering. The photo below gives an idea of the mechanical complexity of this device, which also has a few relays, solenoids, and other electrical components.

Functionality

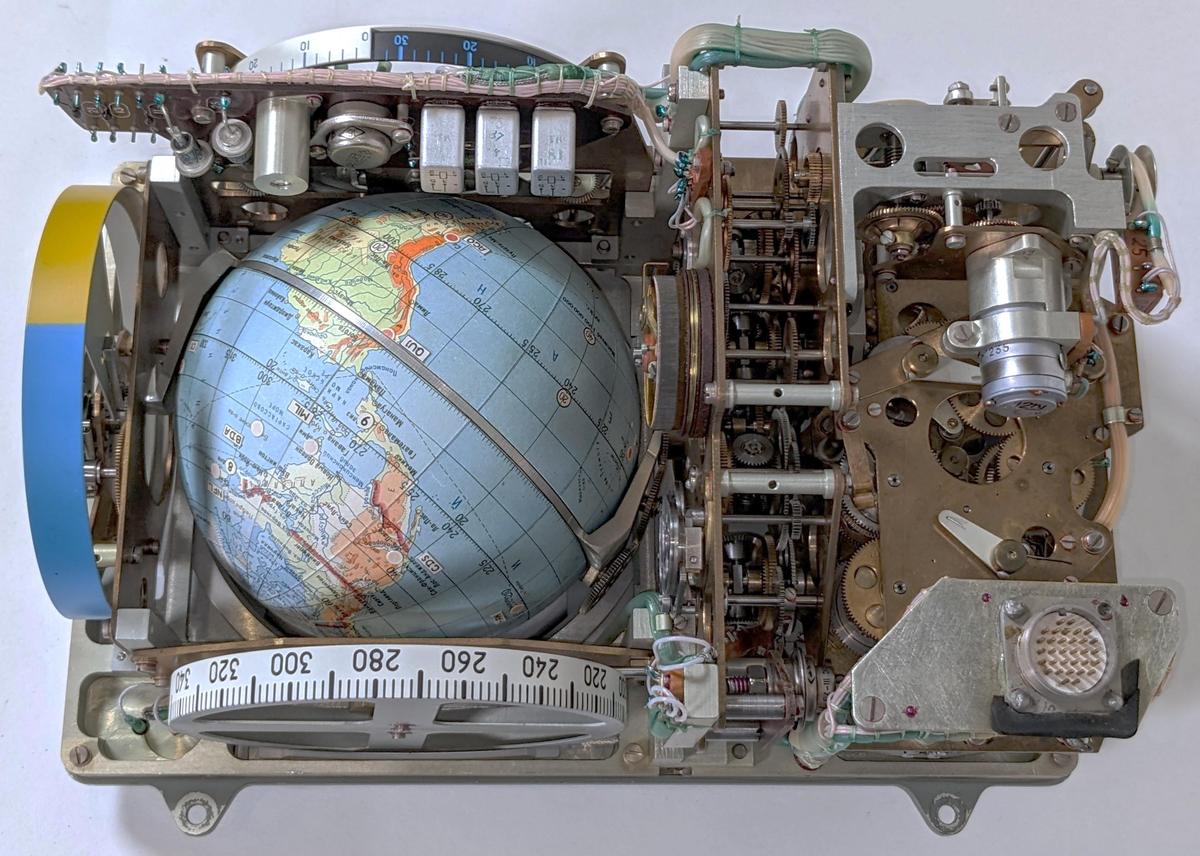

The primary purpose of the Globus was to indicate the spacecraft's position. The globe rotated while fixed crosshairs on the plastic dome indicated the spacecraft's position. Thus, the globe matched the cosmonauts' view of the Earth, allowing them to confirm their location. Latitude and longitude dials next to the globe provided a numerical indication of location. Meanwhile, a light/shadow dial at the bottom showed when the spacecraft would be illuminated by the sun or in shadow, important information for docking. The Globus also had an orbit counter, indicating the number of orbits.

The Globus had a second mode, indicating where the spacecraft would land if the cosmonauts fired the retrorockets to initiate a landing. Flipping a switch caused the globe to rotate until the landing position was under the crosshairs, so the cosmonauts could evaluate the suitability of the landing site.

The cosmonauts configured the Globus by turning knobs to set the spacecraft's initial position and orbital period. From there, the Globus electromechanically tracked the orbit. Unlike the Apollo Guidance Computer, the Globus did not receive navigational information from an inertial measurement unit (IMU) or other sources, so it did not know the spacecraft's real position. It was purely a display of the predicted position.

The globe

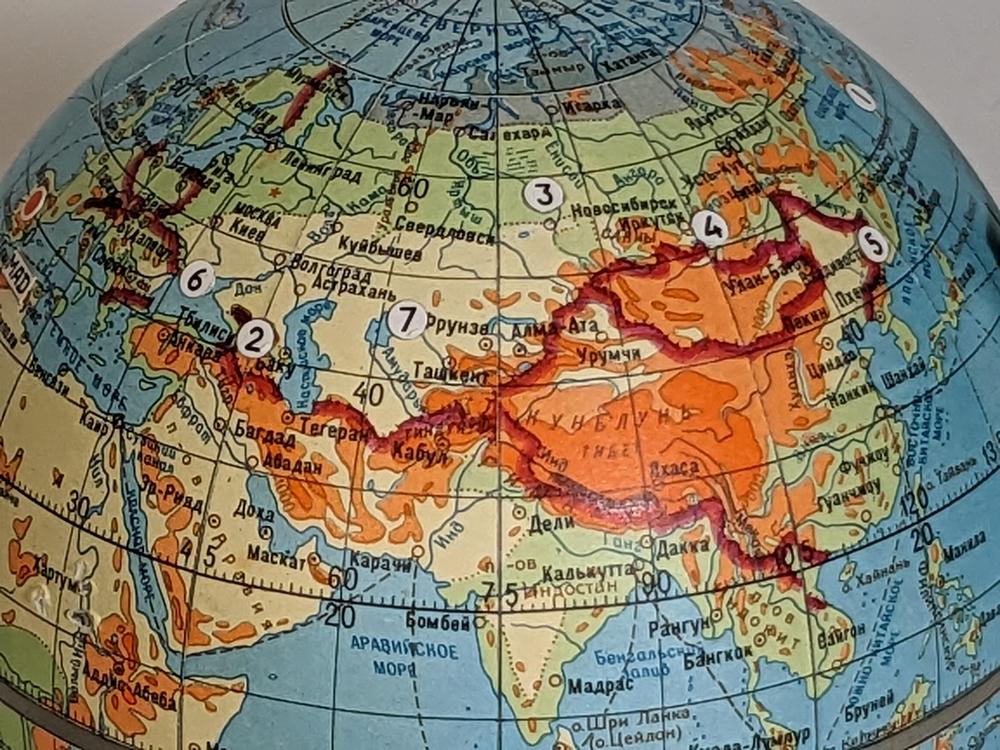

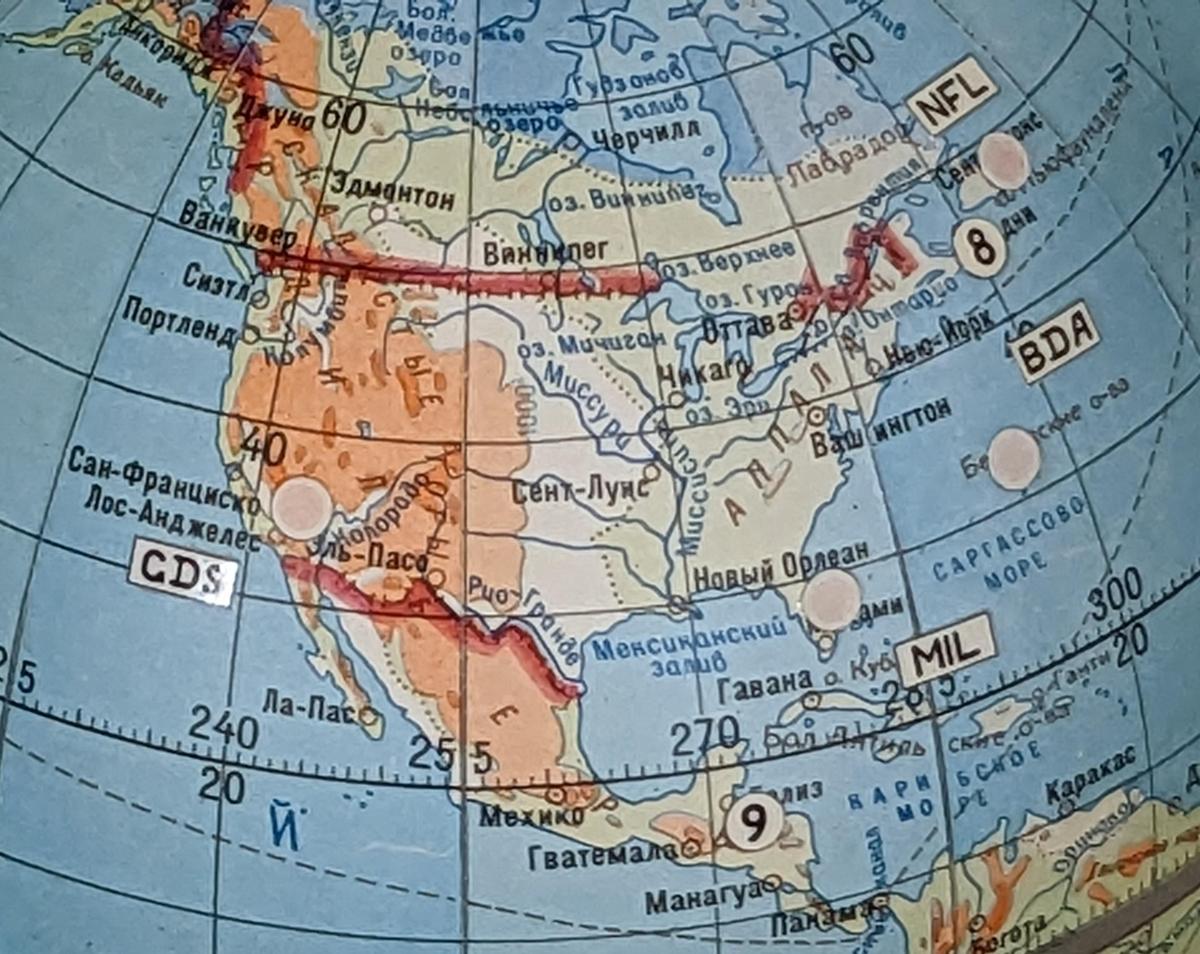

The globe itself is detailed for its small size, showing terrain features such as mountains, lakes, and rivers. These features on the map helped cosmonauts compare their position with the geographic features they could see on Earth. These features were also important for selecting a landing site, so they could see what kind of terrain they would be landing on. For the most part, the map doesn't show political boundaries, except for thick red and purple lines. These lines show the borders of the USSR, as well as the boundaries between communist and non-communist countries, also important for selecting a landing site. The globe also has numbered circles 1 through 8 that indicate radio sites for communication with the spacecraft, allowing the cosmonauts to determine what ground stations could be contacted.

Controlling the globe

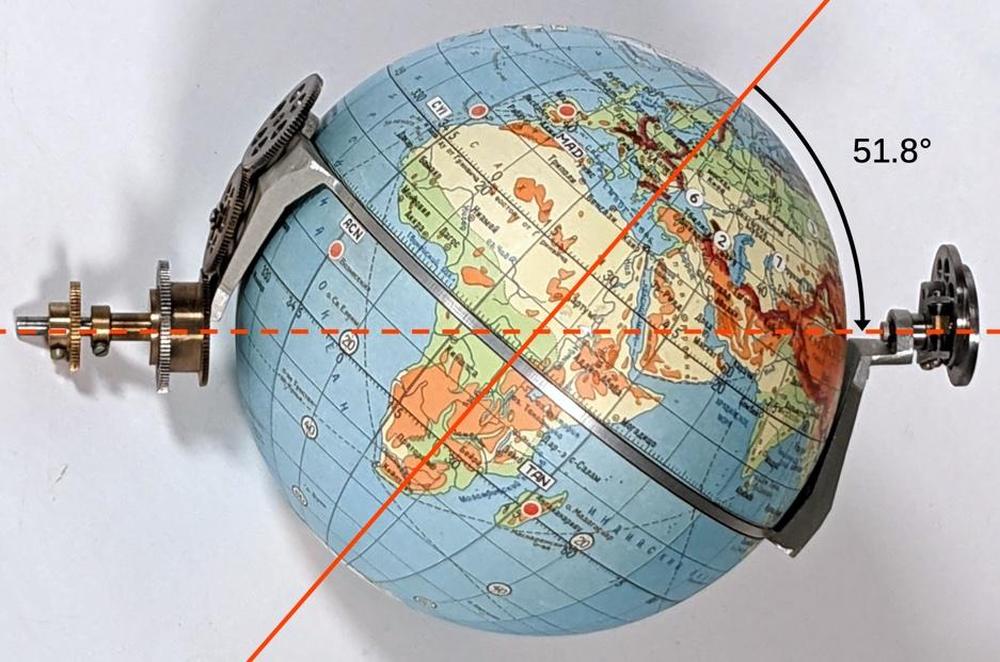

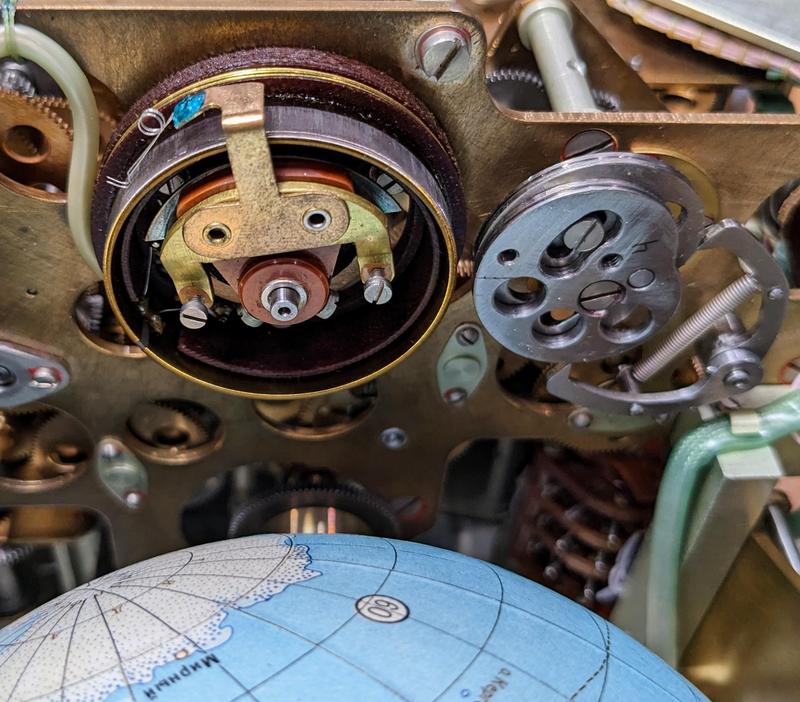

On seeing the Globus, one might wonder how the globe is rotated. It may seem that the globe must be free-floating so it can rotate in two axes. Instead, a clever mechanism attaches the globe to the unit. The key is that the globe's equator is a solid piece of metal that rotates around the horizontal axis of the unit. A second gear mechanism inside the globe rotates the globe around the North-South axis. The two rotations are controlled by concentric shafts that are fixed to the unit, allowing two rotational degrees of freedom through fixed shafts.

The photo below shows the frame that holds and controls the globe. The dotted axis is fixed horizontally in the unit and rotations are fed through the two gears at the left. One gear rotates the globe and frame around the dotted axis, while the gear train causes the globe to rotate around the vertical polar axis (while the equator remains fixed).

The angle above is 51.8° which is very important: this is the inclination of the standard Soyuz orbit. As a result, simply rotating the globe around the dotted line causes the crosshair to trace the standard orbit.2 Rotating the two halves of the globe around the poles yields the different 51.8° orbits over the Earth's surface as the Earth rotates. (Why 51.8 degrees? The Baikonur Cosmodrome, launching point for Soyuz, is at 45.97° N latitude, so 45.97° would be the most efficient inclination. However, to prevent the launch from passing over western China, the rocket must be angled towards the north, resulting in 51.8°.)
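The link between the tilt angle and the orbital inclination can be checked with a few lines of vector arithmetic. The sketch below is only an illustration: it assumes the crosshair sits on the great circle perpendicular to the horizontal rotation axis, and the function names and coordinate frame are made up for this example rather than taken from any Globus documentation.

```python
import math

def crosshair_latitude(tilt_deg, spin_deg):
    """Latitude under the crosshair after spinning the globe about the horizontal axis.

    Assumed frame (hypothetical): the rotation axis is x, the crosshair points
    along y (perpendicular to the axis), and the polar axis is tilted tilt_deg
    away from the rotation axis.
    """
    tilt = math.radians(tilt_deg)
    spin = math.radians(spin_deg)
    # Direction of the north pole after spinning the globe about the x axis.
    pole = (math.cos(tilt),
            math.sin(tilt) * math.cos(spin),
            math.sin(tilt) * math.sin(spin))
    crosshair = (0.0, 1.0, 0.0)
    # Latitude is 90 degrees minus the angle between the crosshair and the pole.
    dot = sum(p * c for p, c in zip(pole, crosshair))
    return math.degrees(math.asin(dot))

def max_latitude(tilt_deg):
    """Highest latitude the crosshair reaches over one full spin (1-degree steps)."""
    return max(crosshair_latitude(tilt_deg, spin) for spin in range(360))

print(max_latitude(51.8))  # highest latitude reached with the Soyuz tilt
print(max_latitude(90.0))  # polar-orbit limit case
print(max_latitude(0.0))   # equatorial limit case
```

In this model the highest latitude reached equals the tilt angle, which matches the two limit cases described in the notes: a 90° angle gives a polar track and a 0° angle keeps the crosshair on the equator.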

One important consequence of this design is that the orbital inclination is fixed by the angle of the globe mechanism. Different Globus units needed to be built for different orbits. Moreover, this design only handles circular orbits, making it useless during orbit changes such as rendezvous and docking. These were such significant limitations that some cosmonauts wanted the Globus removed from the control panel, but it remained until it was replaced by a computer display in Soyuz-TMA (2002).3

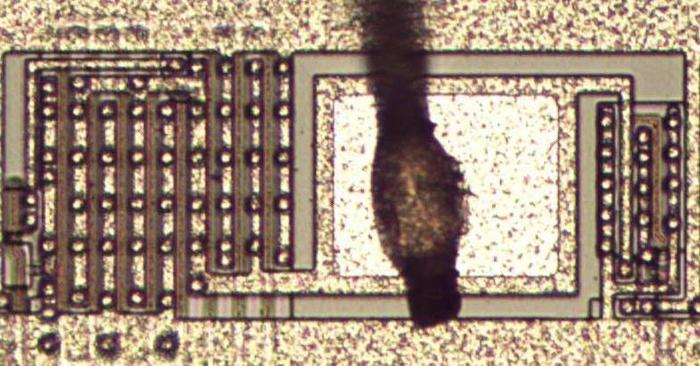

This Globus had clearly suffered some damage. The back of the case had some large dents.7 More importantly, the globe's shaft had been knocked loose from its proper position and no longer meshed with the gears. This also put a gouge into Africa, where the globe hit internal components. Fortunately, CuriousMarc was able to get the globe back into position while ensuring that the gears had the right timing. (Putting the globe back arbitrarily would mess up the latitude and longitude.)

Orbital speed and the "cone"

An orbit of Soyuz takes approximately 90 minutes, but the time varies according to altitude.4 The Globus has an adjustment knob (below) to adjust the orbital period in minutes, tenths of minutes, and hundredths of minutes. The outer knob has three positions and points to the digit that changes when the inner knob is turned. The mechanism provides an adjustment of ±5 minutes from the nominal period of 91.85 minutes.3

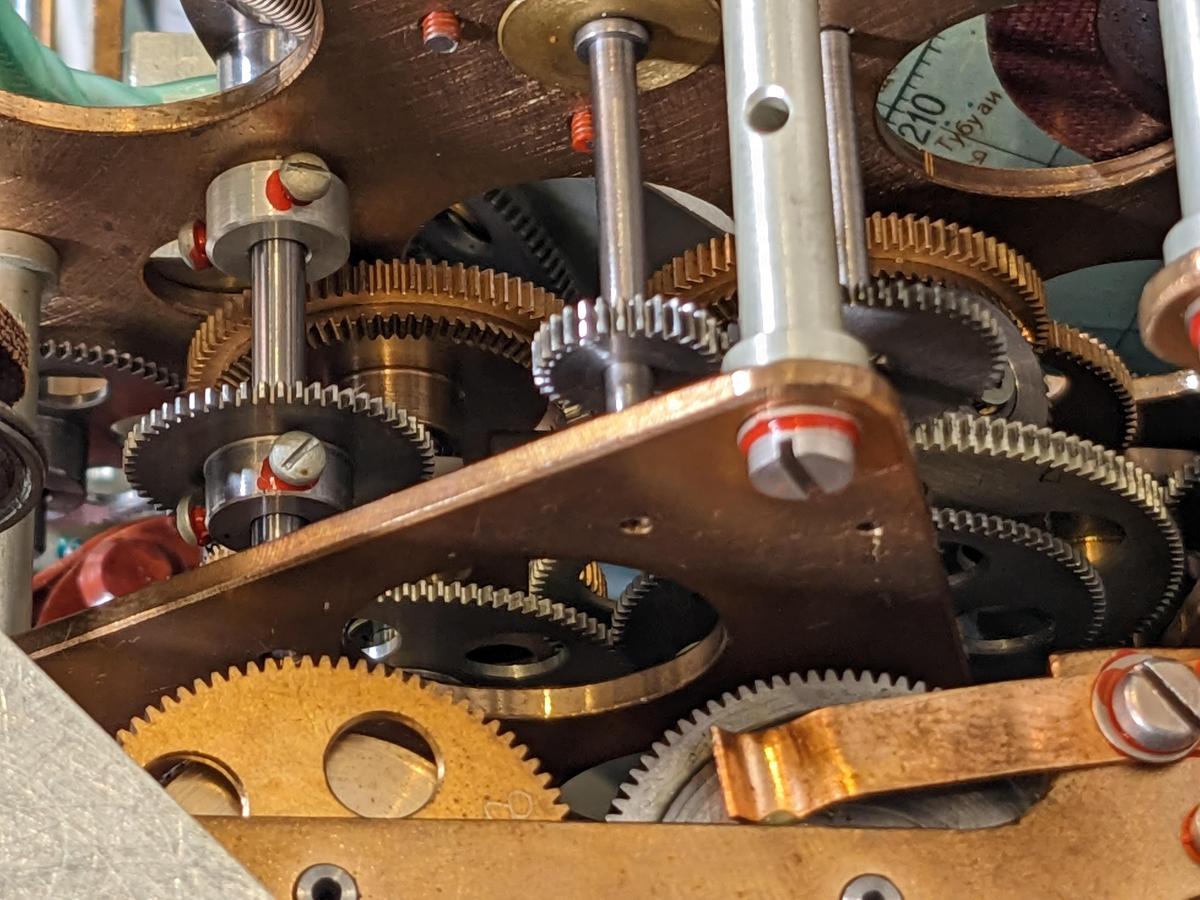

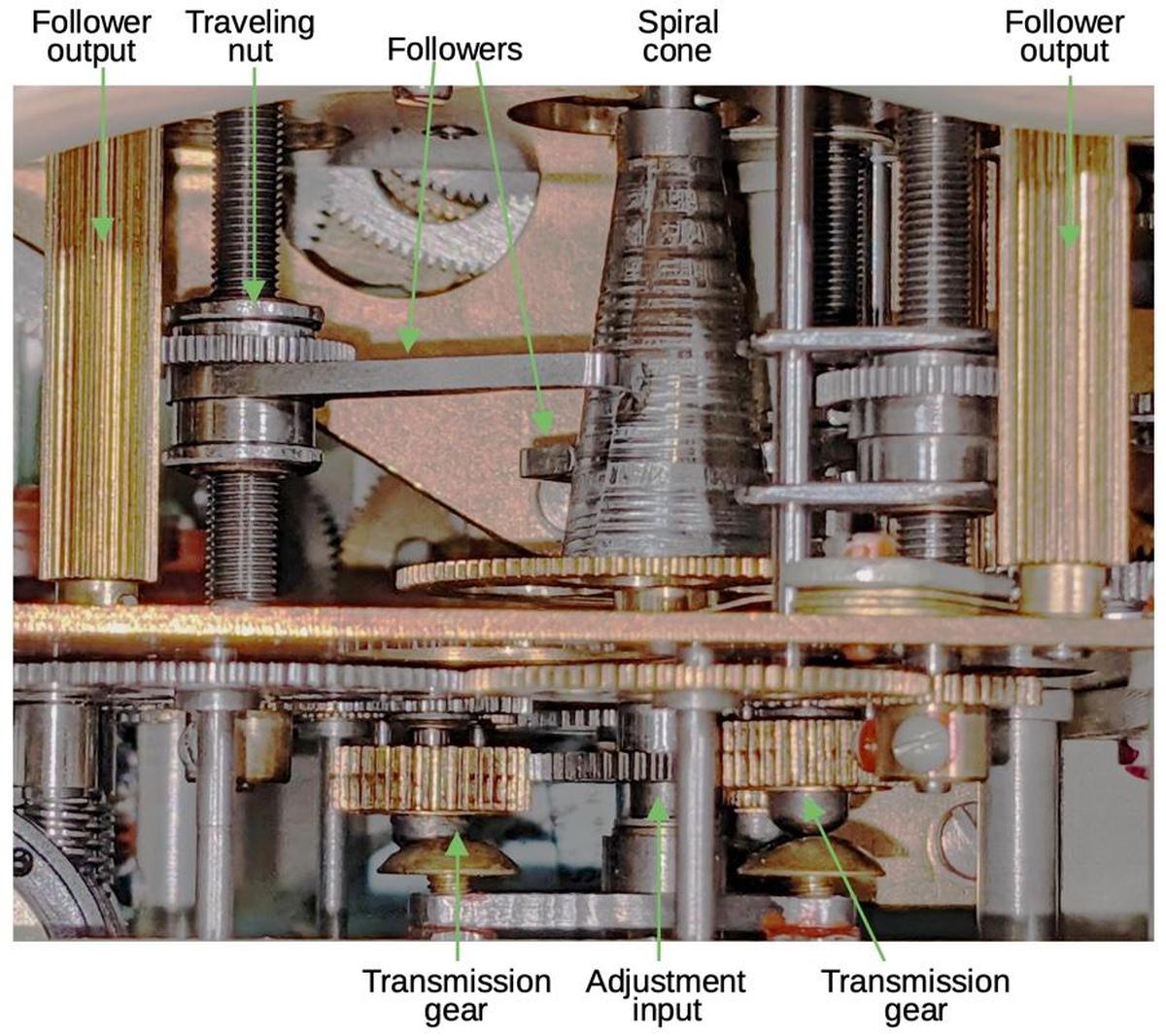

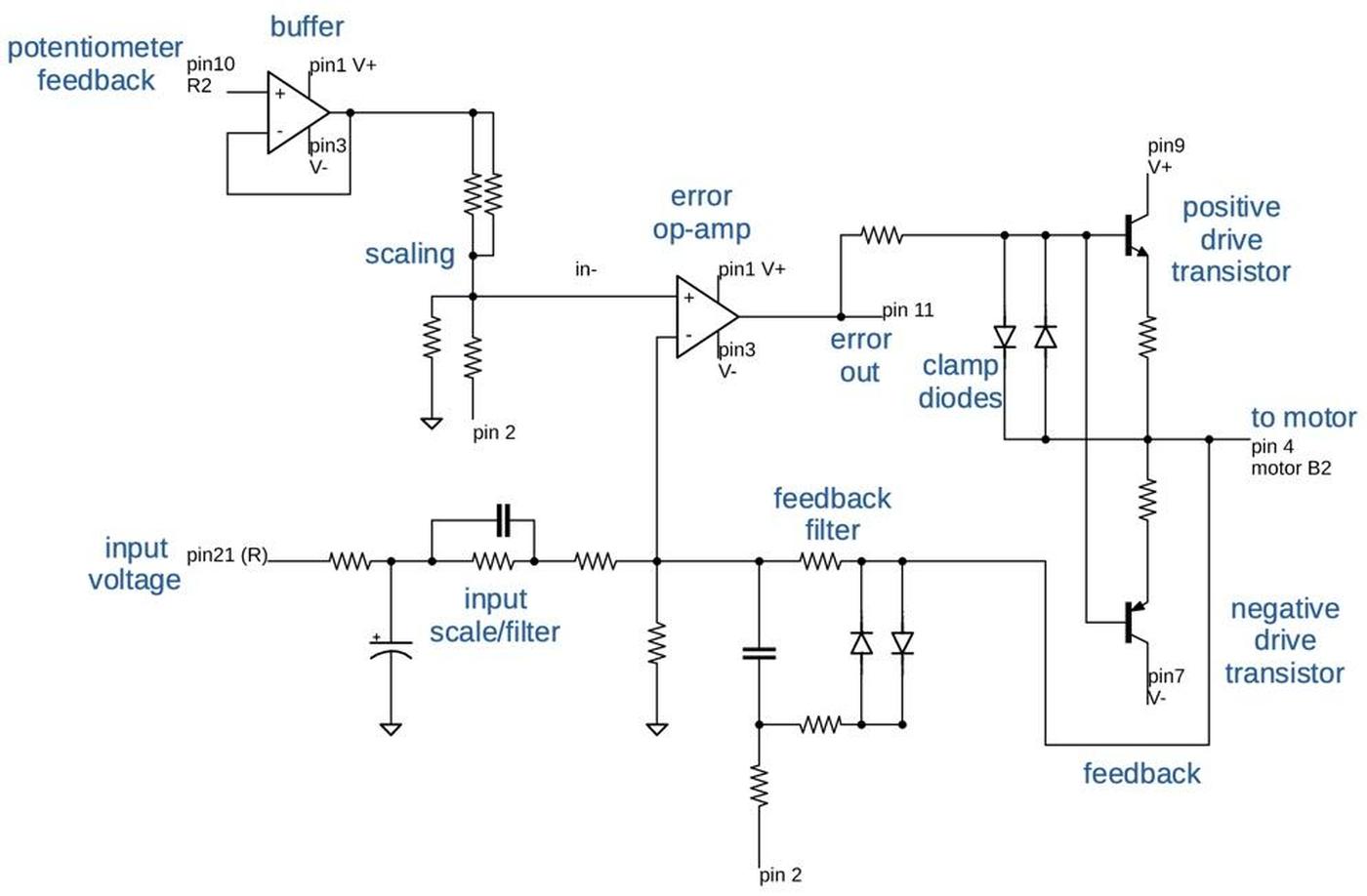

The orbital speed feature is implemented by increasing or decreasing the speed at which the globe rotates around the orbital (horizontal) axis. Generating a variable speed is tricky, since the Globus runs on fixed 1-hertz pulses. The solution is to start with a base speed, and then add three increments: one for the minutes setting, one for the tenths-of-minutes setting, and one for the hundredths-of-minutes setting.5 These four speeds are added (as shaft rotation speeds) using differential gears to obtain the overall rotation speed.
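As a rough numerical picture of "base speed plus three increments", here is a small sketch. It assumes a tangent-line (linear) model of speed versus period around the nominal 91.85 minutes, with one additive term per digit of the period setting. The real base speed and increment sizes inside the Globus are not known here, so every constant and function name below is hypothetical.

```python
NOMINAL_PERIOD_MIN = 91.85                  # nominal period from the text
NOMINAL_RATE = 360.0 / NOMINAL_PERIOD_MIN   # degrees of orbit per minute
# Slope of rate versus period at the nominal point (assumed linear model).
SLOPE = -NOMINAL_RATE / NOMINAL_PERIOD_MIN

def exact_rate(period_min):
    """True angular rate for a circular orbit with the given period."""
    return 360.0 / period_min

def summed_rate(minutes, tenths, hundredths):
    """Base speed plus three increments, one per digit group of the period setting."""
    base = NOMINAL_RATE - SLOPE * NOMINAL_PERIOD_MIN   # hypothetical base speed
    return (base
            + SLOPE * minutes            # minutes increment
            + SLOPE * tenths / 10        # tenths-of-minutes increment
            + SLOPE * hundredths / 100)  # hundredths-of-minutes increment

def relative_error(minutes, tenths, hundredths):
    """Mismatch between the summed (linear) rate and the exact rate."""
    period = minutes + tenths / 10 + hundredths / 100
    exact = exact_rate(period)
    return abs(summed_rate(minutes, tenths, hundredths) - exact) / exact

for setting in [(86, 8, 5), (91, 8, 5), (96, 8, 5)]:
    print(setting, summed_rate(*setting), relative_error(*setting))
```

With this particular linearization the mismatch is zero at the nominal setting and roughly 0.3% at the ±5 minute extremes; a different linear fit would shift those numbers somewhat.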

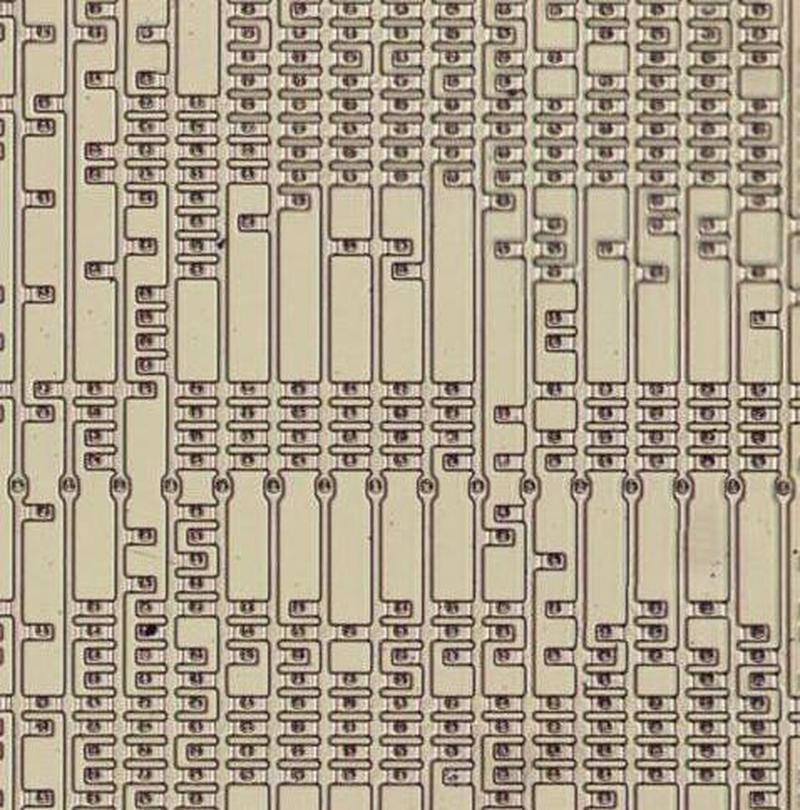

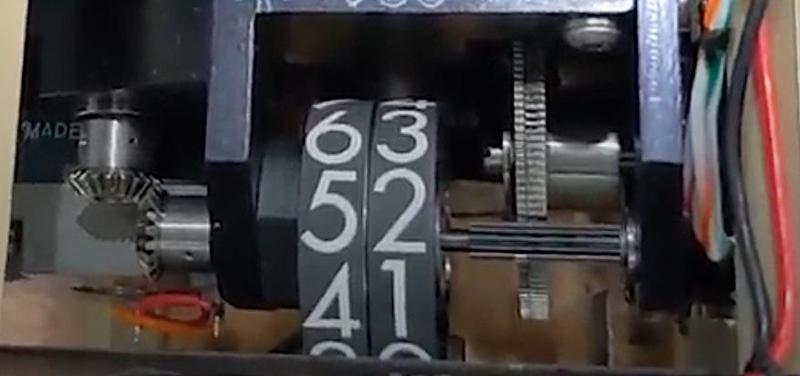

The Globus uses numerous differential gears to add or subtract rotations. The photo below shows two sets of differential gears, side-by-side.

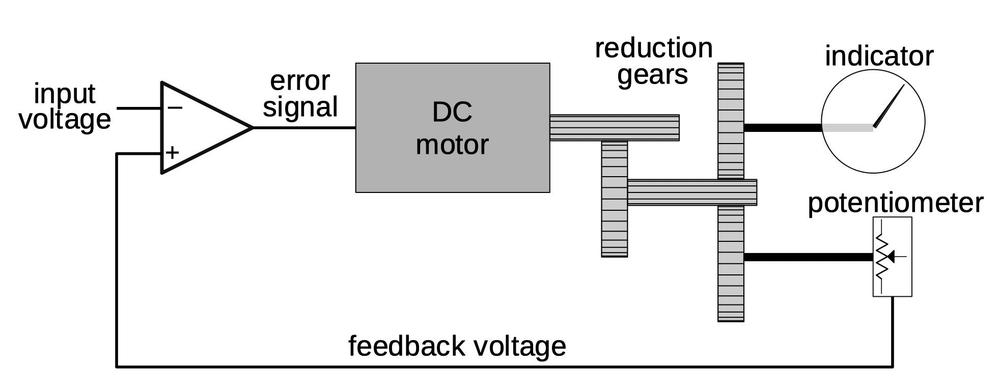

The problem is how to generate these three variable rotation speeds from the fixed input. The solution is a special cam, shaped like a cone with a spiral cross-section. Three followers ride on the cam, so as the cam rotates, each follower is pushed outward and rotates on its shaft. If a follower is near the narrow part of the cam, it moves over a small distance and has a small rotation. But if the follower is near the wide part of the cam, it moves a larger distance and has a larger rotation. Thus, by moving a follower to a particular point on the cam, the rotational speed of that follower is selected.

Obviously, the cam can't spiral out forever. Instead, at the end of one revolution, its cross-section drops back sharply to the starting diameter. This causes the follower to snap back to its original position. To prevent this from jerking the globe backward, the follower is connected to the differential gearing via a slip clutch and ratchet. Thus, when the follower snaps back, the ratchet holds the drive shaft stationary. The drive shaft then continues its rotation as the follower starts cycling out again. Thus, the output is a (mostly) smooth rotation at a speed that depends on the position of the follower.
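The cam-and-ratchet idea can be mimicked with a toy simulation. This is only a sketch under invented geometry: the cam radius is assumed to rise linearly through each revolution, the rise is assumed to scale linearly with the follower's position along the cone (0 at the narrow end, 1 at the wide end), and the dimensions are arbitrary.

```python
def cam_radius(step, steps_per_rev, position, base_radius=10.0, max_rise=5.0):
    """Radius seen by a follower at `position` (0..1 along the cone).

    Hypothetical profile: the radius grows linearly during each revolution and
    drops back to base_radius when the revolution completes.
    """
    fraction = (step % steps_per_rev) / steps_per_rev
    return base_radius + position * max_rise * fraction

def ratchet_output(position, revolutions=3, steps_per_rev=360):
    """Total forward motion passed on by the ratchet over several cam revolutions."""
    total = 0.0
    previous = cam_radius(0, steps_per_rev, position)
    for step in range(1, revolutions * steps_per_rev + 1):
        current = cam_radius(step, steps_per_rev, position)
        delta = current - previous
        if delta > 0:
            # Outward motion drives the output shaft.
            total += delta
        # Otherwise this is the snap-back: the ratchet holds the shaft still.
        previous = current
    return total

for position in (0.0, 0.25, 0.5, 1.0):
    print(position, ratchet_output(position))
```

In this model the accumulated output is proportional to the follower position, so sliding the follower along the cone selects the speed, and the snap-back at the end of each revolution never moves the output backward.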

Latitude and longitude

The indicators at the left and the top of the globe indicate the spacecraft's latitude and longitude respectively. These are defined by surprisingly complex functions, generated by the orbit's projection onto the globe.6

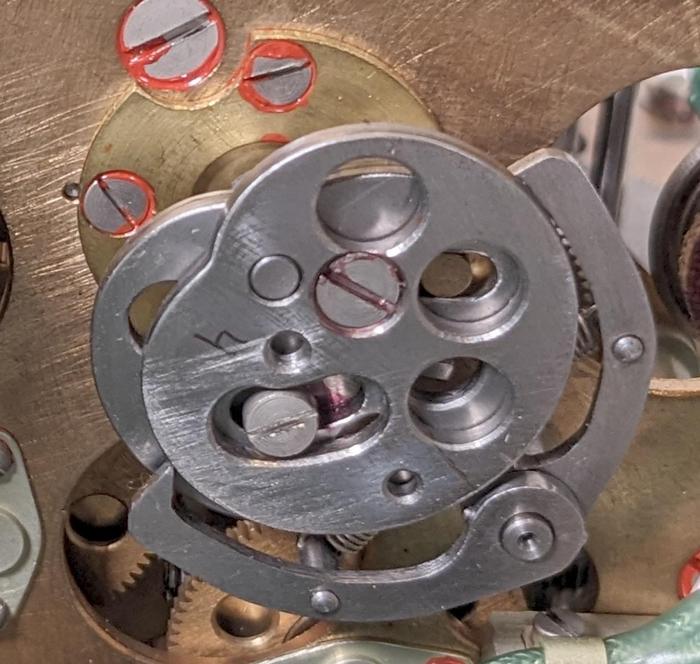

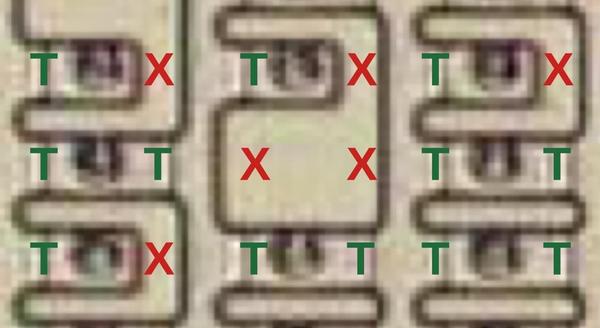

The latitude and longitude functions are implemented through the shape of metal cams; the photo below shows the longitude mechanism. Each function has two cams: one cam implements the desired function, while the other cam has the "opposite" shape to maintain tension on the jaw-like tracking mechanism.

The latitude cam drives the latitude dial, causing it to oscillate between 51.8° N and 51.8° S. Longitude is more complicated because the Earth's rotation causes it to constantly vary. The longitude output on the dial is produced by adding the cam's value to the Earth's rotation through a differential gear.
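The latitude and longitude formulas given in the notes can be evaluated directly, which shows what the cams and the differential have to reproduce. The sketch below is an illustration, not a model of the actual gearing. It uses `atan2` in place of `arctan(cos i * tan(...))` to stay in the correct quadrant, treats the Earth-rotation rate as a signed quantity (negative here, since the ground track drifts westward when longitude is measured positive east), assumes a sidereal day of about 1436.07 minutes, and ignores precession of the orbit. All names are made up for the example.

```python
import math

INCLINATION_DEG = 51.8
PERIOD_MIN = 91.85
# Assumed sidereal day of ~1436.07 minutes; negative means westward drift.
EARTH_RATE_DEG_PER_MIN = -360.0 / 1436.07

def latitude_deg(t_min, inclination_deg=INCLINATION_DEG, period_min=PERIOD_MIN):
    """Latitude: arcsin(sin i * sin(2*pi*t/T))."""
    u = 2 * math.pi * t_min / period_min
    return math.degrees(math.asin(math.sin(math.radians(inclination_deg)) * math.sin(u)))

def longitude_deg(t_min, lon0_deg=0.0, inclination_deg=INCLINATION_DEG,
                  period_min=PERIOD_MIN, earth_rate=EARTH_RATE_DEG_PER_MIN):
    """Longitude: cam term + Earth-rotation term + ascending-node longitude."""
    u = 2 * math.pi * t_min / period_min
    i = math.radians(inclination_deg)
    # Quadrant-safe form of arctan(cos i * tan u): the part the cam provides.
    cam_term = math.degrees(math.atan2(math.cos(i) * math.sin(u), math.cos(u)))
    # The differential adds the Earth's rotation to the cam value.
    lon = cam_term + earth_rate * t_min + lon0_deg
    return (lon + 180.0) % 360.0 - 180.0   # wrap to [-180, 180)

for t in (0.0, PERIOD_MIN / 4, PERIOD_MIN / 2, PERIOD_MIN):
    print(round(t, 2), latitude_deg(t), longitude_deg(t))
```

The latitude peaks at the inclination a quarter of the way around the orbit, while the longitude at the end of one orbit is offset by the amount the Earth turned in the meantime.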

Light and shadow

The Globus has an indicator to show when the spacecraft will enter light or shadow. The dial consists of two concentric dials, configured by the two knobs. These dials move with the spacecraft's orbit, while the red legend remains fixed. I think these dials are geared to the longitude dial, but I'm still investigating.

The landing location mechanism

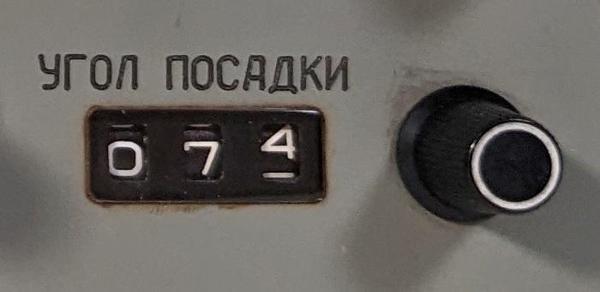

The Globus can display where the spacecraft would land if you started a re-entry burn now, with an accuracy of 150 km. This is computed by projecting the current orbit forward by a partial orbit, depending on how long it would take to land. The cosmonaut specifies this value by the "landing angle", which indicates this fraction of an orbit as an angle. An electroluminescent indicator in the upper-left corner of the unit shows "Место посадки" (Landing place) to indicate this mode.

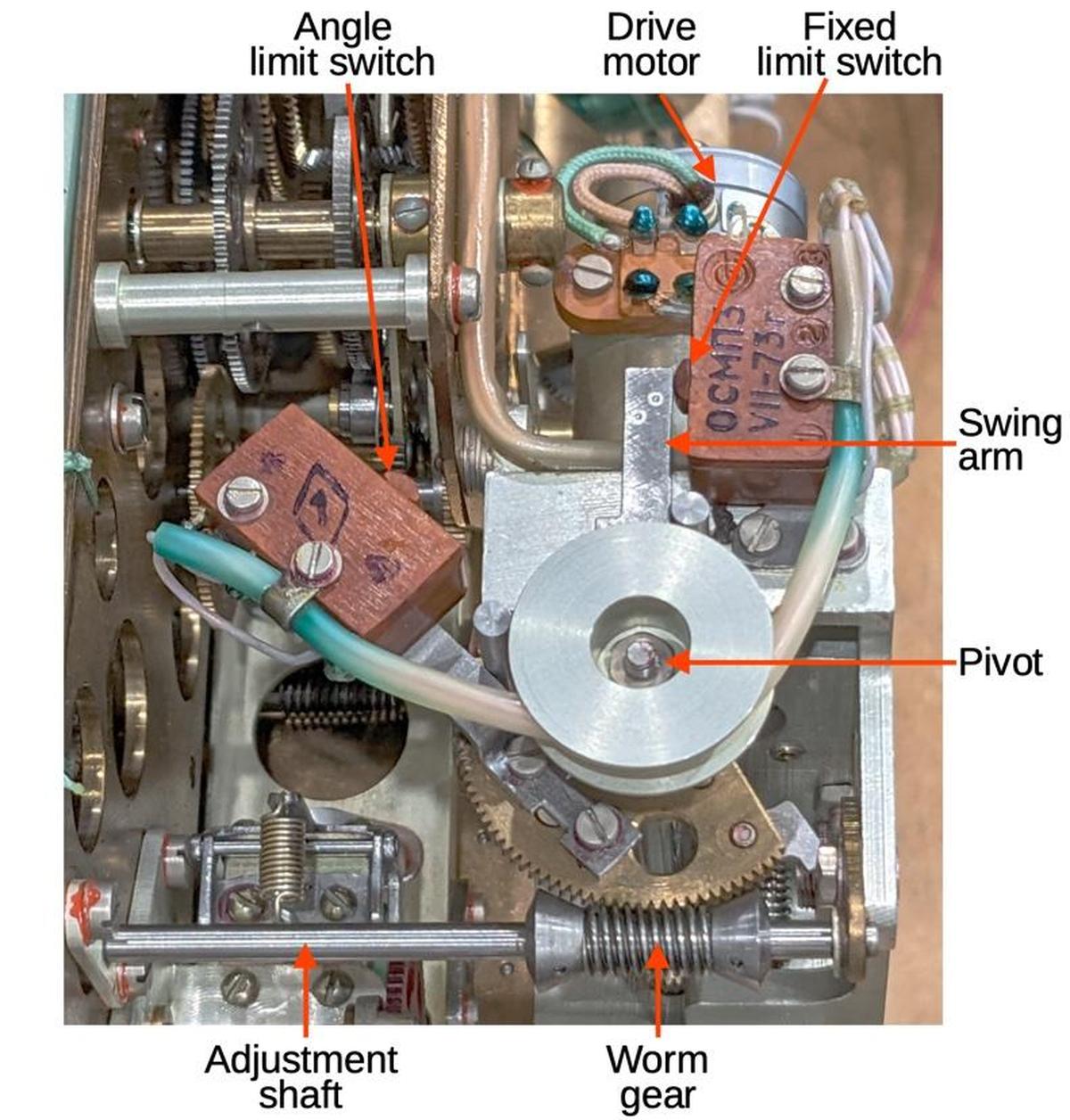

To obtain the landing position, a motor spins the globe until it is stopped after rotating through the specified angle. The mechanism to implement this is shown below. The adjustment knob on the panel turns the adjustment shaft which moves the limit switch to the desired angle via the worm gear. The wiring is wrapped around a wheel so the wiring stays controlled during this movement. When the drive motor is activated, it rotates the globe and the swing arm at the same time. Since the motor stops when the swing arm hits the angle limit switch, the globe rotates through the desired angle. The fixed limit switch is used when returning the globe's position to its regular, orbital position.
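The limit-switch logic described above can be summarized as a tiny simulation. This is a hedged sketch of the behavior as described, not of the actual relay wiring: the step size, function names, and the simplification that only the landing-angle rotation moves the globe during the maneuver are all assumptions.

```python
def rotate_to_landing(globe_deg, landing_angle_deg, step_deg=0.5):
    """Run the motor until the swing arm reaches the adjustable limit switch."""
    arm_deg = 0.0   # swing arm starts at the fixed limit switch
    while arm_deg < landing_angle_deg:   # adjustable limit switch not yet hit
        arm_deg += step_deg              # motor moves the swing arm...
        globe_deg += step_deg            # ...and the globe by the same amount
    return globe_deg % 360.0, arm_deg

def return_to_orbit(globe_deg, arm_deg, step_deg=0.5):
    """Run the motor backward until the swing arm reaches the fixed limit switch."""
    while arm_deg > 0.0:
        arm_deg -= step_deg
        globe_deg -= step_deg
    return globe_deg % 360.0

landing_view, arm = rotate_to_landing(100.0, 45.0)
print(landing_view)                        # globe advanced by the landing angle
print(return_to_orbit(landing_view, arm))  # back at the orbital position
```

Because the globe and the swing arm turn together, the globe's extra rotation always equals the angle set by the knob, and reversing to the fixed switch undoes exactly that rotation.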

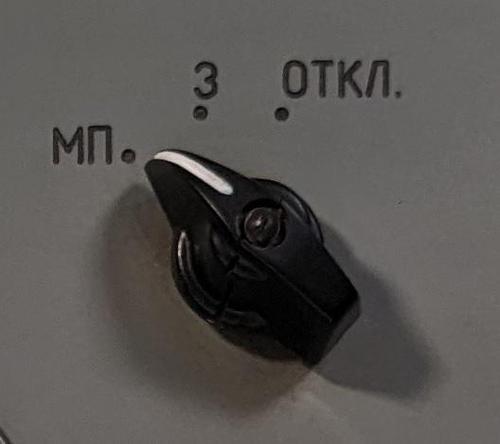

The landing location mode is activated by a three-position rotary switch. The first position "МП" (место посадки, landing site) selects the landing site, the second position "З" (Земля, Earth) shows the position over the Earth, and the third position "Откл" (off) undoes the landing angle rotation and turns off the mechanism.

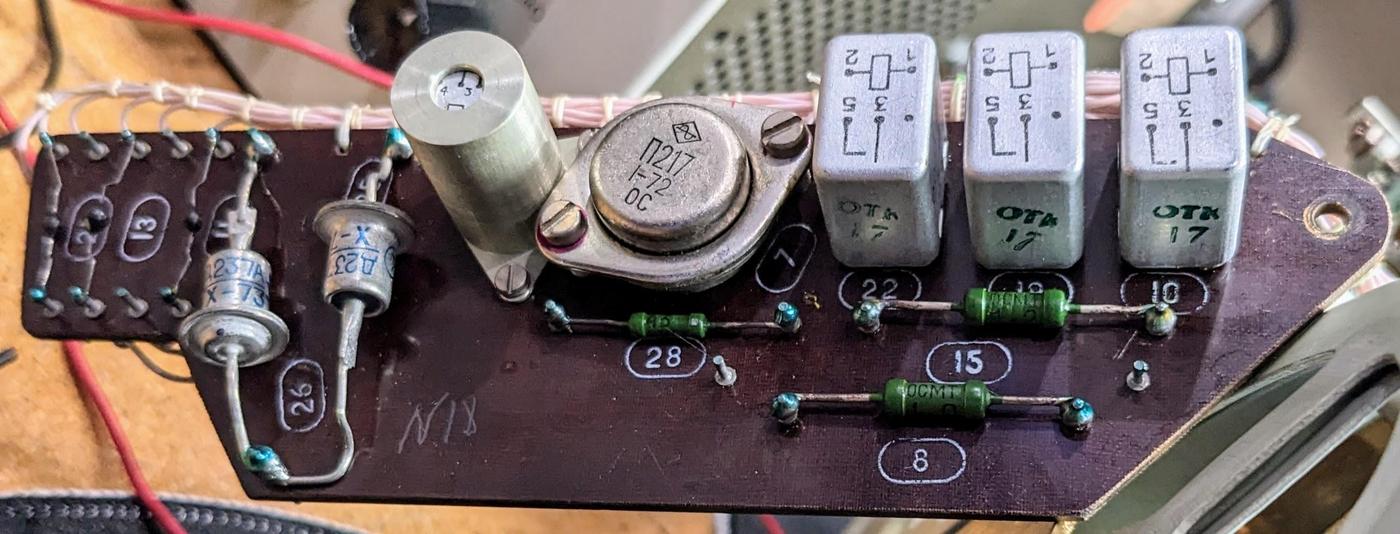

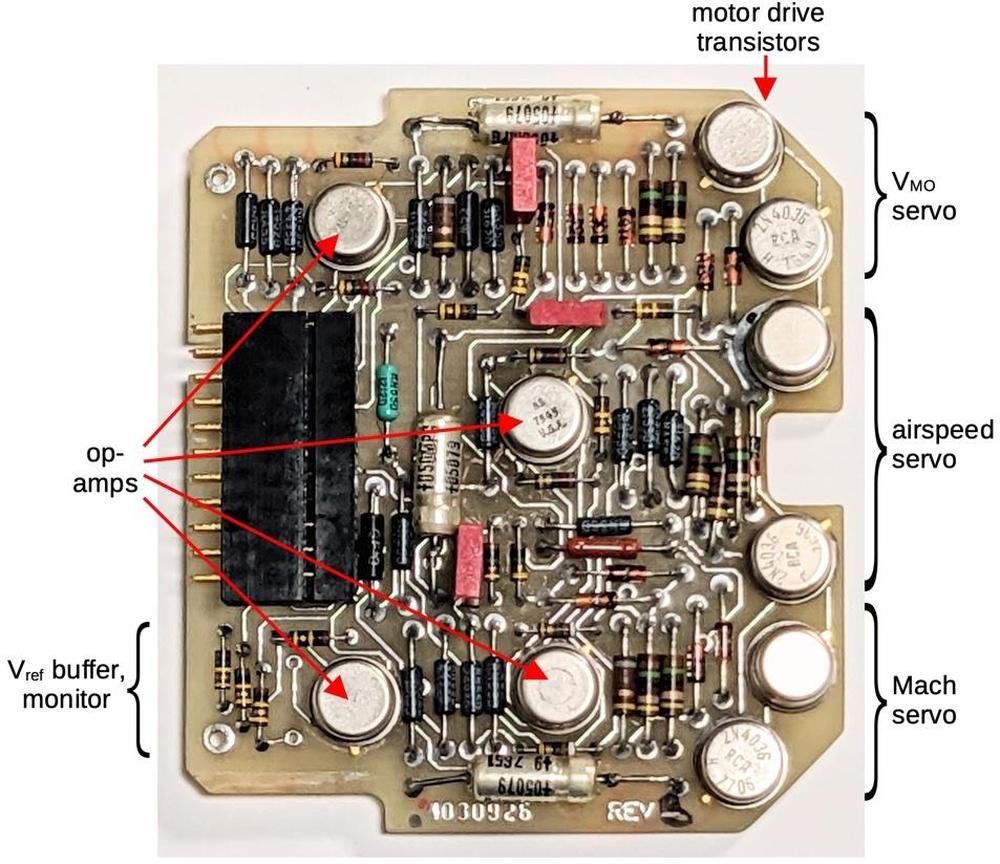

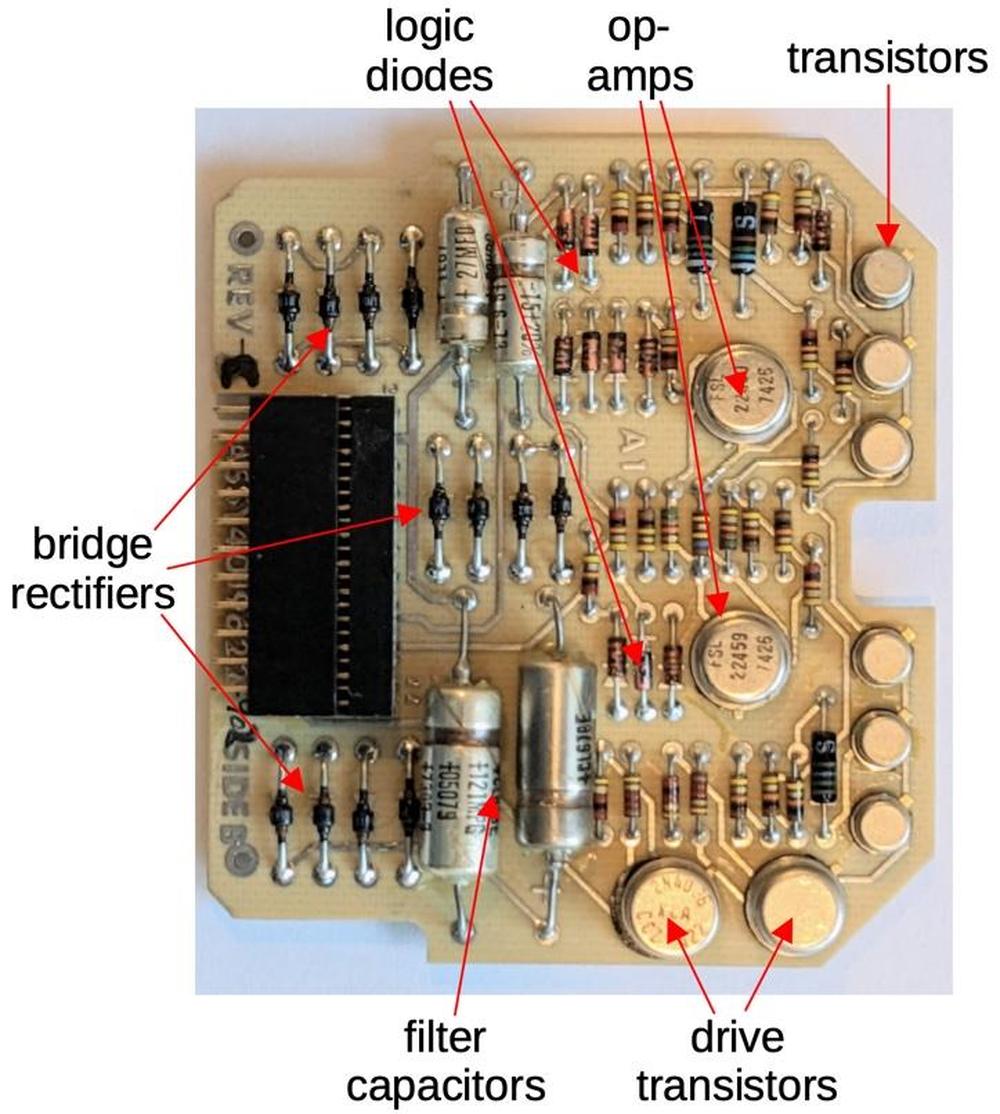

Electronics

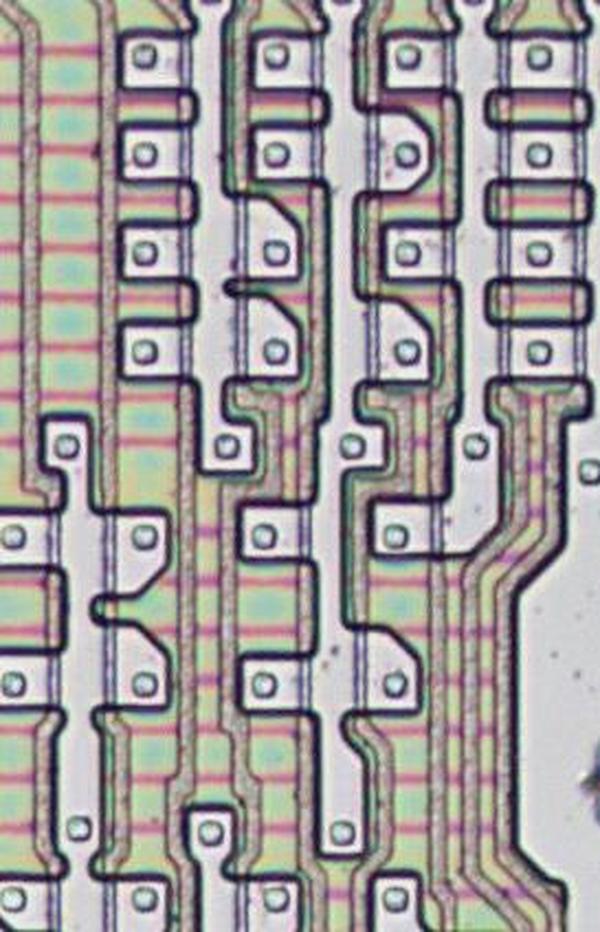

Although the Globus is mostly mechanical, it has an electronics board with four relays and a transistor, as well as resistors and diodes. I think that most of these relays control the landing location mechanism, driving the motor forward or backward and stopping at the limit switch. The diodes are flyback diodes, two diodes in series across each relay coil to eliminate the inductive kick when the coil is disconnected.

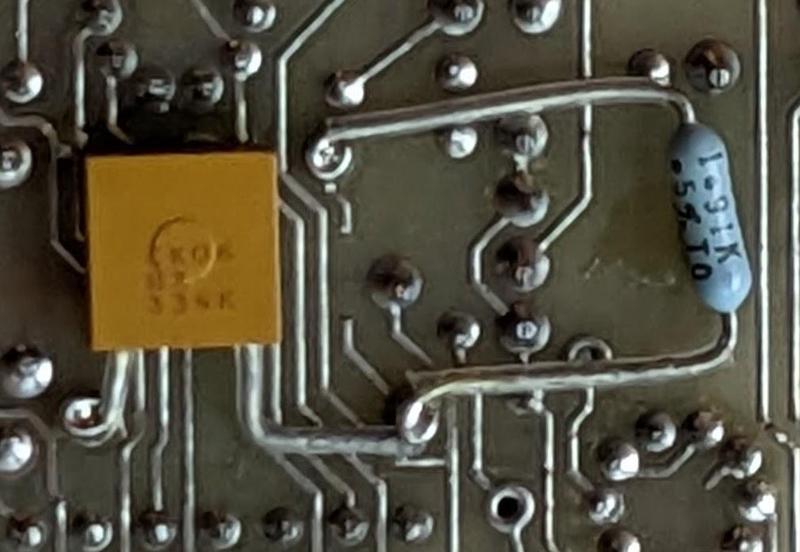

A 360° potentiometer (below) converts the spacecraft's orbital position into a voltage. Sources indicate that the Globus provides this voltage signal to other units on the spacecraft. My theory is that the transistor on the electronics board amplifies this voltage, but I am still investigating.

The photo below shows the multiple wiring bundles in the Globus, at the front and the left. The electronics board is at the front right. The Globus contains a surprising amount of wiring for a device that is mostly mechanical. Inconveniently, all the wires to the box's external connector (upper left) were cut.7 Perhaps this was part of decommissioning the unit. However, one of the screws on the case is covered with a tamper-resistant wax seal with insignia, and this wax seal was intact. This indicates that the unit was officially re-sealed after cutting the wires, which doesn't make sense for a decommissioned unit.

The drive solenoids

The unit is driven by two ratchet solenoids: one for the orbital rotation and one for the Earth's rotation. These solenoids take 27-volt pulses at 1 hertz.3 Each pulse causes the solenoid to advance the gear by one tooth; a pawl keeps the gear from slipping back. These small rotations drive the gears throughout the Globus and result in a tiny movement of the globe.
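To get a feel for how tiny each movement is, the average globe motion per pulse can be estimated from the numbers in the text. This is back-of-the-envelope arithmetic rather than a description of the gear train: it assumes exactly one pulse per second, the nominal 91.85-minute period, and a sidereal day of about 86164.1 seconds for the Earth-rotation axis.

```python
PULSE_HZ = 1.0                     # pulse rate from the text
ORBIT_PERIOD_S = 91.85 * 60        # nominal orbital period in seconds
SIDEREAL_DAY_S = 86164.1           # assumed length of one Earth rotation

def degrees_per_pulse(period_s, pulse_hz=PULSE_HZ):
    """Average rotation per pulse for an axis that turns 360 degrees in period_s."""
    return 360.0 / (period_s * pulse_hz)

orbital_step = degrees_per_pulse(ORBIT_PERIOD_S)
earth_step = degrees_per_pulse(SIDEREAL_DAY_S)
print(f"orbital axis: {orbital_step:.4f} degrees per pulse")
print(f"Earth axis:   {earth_step:.5f} degrees per pulse")
```

The orbital axis averages roughly 0.065° per pulse and the Earth-rotation axis roughly 0.004° per pulse, so the gearing between each solenoid and the globe must provide a large reduction.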

Apollo-Soyuz

If you look closely at the globe, it has a bunch of pink dots added, along with three-letter labels in Latin (not Cyrillic) characters.8 In the photo below, you can see GDS (Goldstone), MIL (Merritt Island), BDA (Bermuda), and NFL (Newfoundland). These are NASA tracking sites, which implies that this Globus was built for the Apollo-Soyuz Test Project, a 1975 mission where an Apollo spacecraft docked with a Soyuz capsule.

Further confirmation of the Apollo-Soyuz connection is the VAN sticker in the middle of the Pacific Ocean (not visible above). The USNS Vanguard was a NASA tracking ship that was used in the Apollo program to fill in gaps in radio coverage. It was an oil tanker from World War II, converted postwar to a missile tracking ship and then used for Apollo. In the photo below, you can see the large tracking antennas on its deck. During the Apollo-Soyuz mission, Vanguard was stationed at 25 S 155 W, exactly matching the location of the VAN dot on the globe.

History

The Globus has a long history, back to the beginnings of Soviet crewed spaceflight. The first version was simpler and had the Russian acronym ИМП (IMP).9 Development of the IMP started in 1960 for the Vostok (1961) and Voskhod (1964) spaceflights.

The basic functions of the earlier Globus IMP are similar to the INK, showing the spacecraft's position and the landing position. It has an orbit counter in the lower right. The latitude and longitude displays at the top were added for the Voskhod flights. The large correction knob allows the orbital period to be adjusted. The main differences are that the IMP doesn't have a display at the bottom for sun and shade and doesn't have a control to set the landing angle.9 Unlike the INK, the mode (orbit vs landing position) was selected by external switches, rather than a switch on the unit.

The more complex INK model (described in this blog post) was created for the Soyuz flights, starting in 1967. It was part of the "Sirius" information display system (IDS). The Neptun IDS used on Soyuz-T (1976) and the Neptun-M for Soyuz-TM (1986) modernized much of the console but kept the Globus INK. The photo below shows the Globus mounted in the upper-right of a Soyuz-TM console.

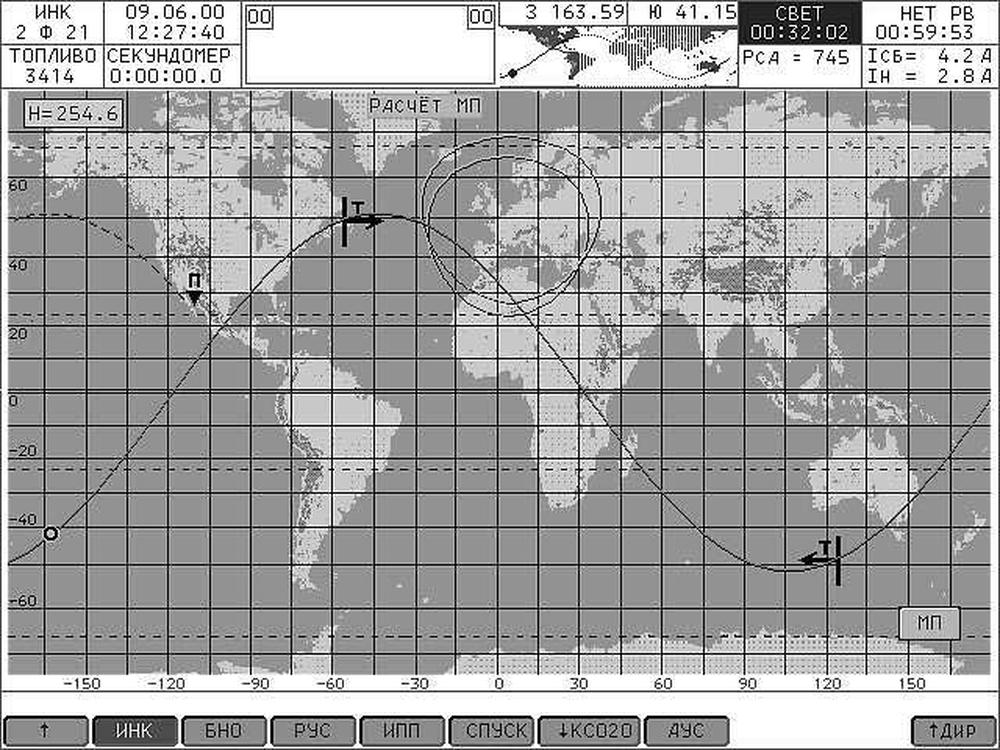

The Soyuz-TMA (2002) upgraded to the Neptun-ME system3 which used digital display screens. In particular, the Globus was replaced with the graphical display below.

Conclusions

The Globus INK is a remarkable piece of machinery, an analog computer that calculates orbits through an intricate system of gears, cams, and differentials. It provided cosmonauts with a high-resolution, full-color display of the spacecraft's position, way beyond what an electronic space computer could provide in the 1960s.

Although the Globus is an amazing piece of mechanical computation, its functionality is limited. Its parameters must be manually configured: the spacecraft's starting position, the orbital speed, the light/shadow regions, and the landing angle. It doesn't take any external guidance inputs, such as an IMU (inertial measurement unit), so it's not particularly accurate. Finally, it only supports a circular orbit at a fixed angle. While the more modern digital display lacks the physical charm of a rotating globe, the digital solution provides much more capability.

I plan to continue reverse-engineering the Globus and hope to get it operational, so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @oldbytes.space@kenshirriff. Many thanks to Marcel for providing the Globus. Thanks to Stack Overflow for orbit information and my Twitter followers for translation assistance.

I should give a disclaimer that I am still reverse-engineering the Globus, so what I described is subject to change. Also, I don't read Russian, so any errors are the fault of Google Translate. :-)

Notes and references

- In Russian, the name for the device is "Индикатор Навигационный Космический", abbreviated as ИНК (INK). This translates to "space navigation indicator." The name Globus (Глобус) seems to be a nickname, and I suspect it's more commonly used in English than Russian.

- To see how the angle between the poles and the globe's rotation results in the desired orbital inclination, consider two limit cases. First, suppose the angle is 90°. In this case, the globe is "straight" with the equator horizontal. Rotating the globe along the horizontal axis, flipping the poles end-over-end, will cause the crosshair to trace a polar orbit, giving the expected inclination of 90°. On the other hand, suppose the angle is 0°. In this case, the globe is "sideways" with the equator vertical. Rotating the globe will cause the crosshair to remain over the equator, corresponding to an equatorial orbit with 0° inclination.

- A detailed description of Globus in Russian is in this document, in Section 5.

- Or conversely, the altitude varies according to the speed.

- Note that the panel control adjusts the period of the orbit, while the implementation adjusts the speed of the orbit. These are reciprocals, so linear changes in the period result in hyperbolic changes in the speed. The mechanism, however, changes the speed linearly, which seems like it wouldn't work. However, since the period is large relative to the change in the period, this linear approximation works and the error is small, about 1%. It's possible that the cone has a nonlinear shape to correct this, but I couldn't detect any nonlinearity in photographs.

- The latitude is given by arcsin(sin i * sin(2πt/T)), while the longitude is given by λ = arctan(cos i * tan(2πt/T)) + Ωt + λ0, where t is the spaceship's flight time starting at the equator, i is the angle of inclination (51.8°), T is the orbital period, Ω is the angular velocity of the Earth's rotation, and λ0 is the longitude of the ascending node.3

  The formula for latitude is simpler than longitude because the latitude repeats every orbit. The longitude, however, continually changes as the Earth rotates under the spacecraft.

-

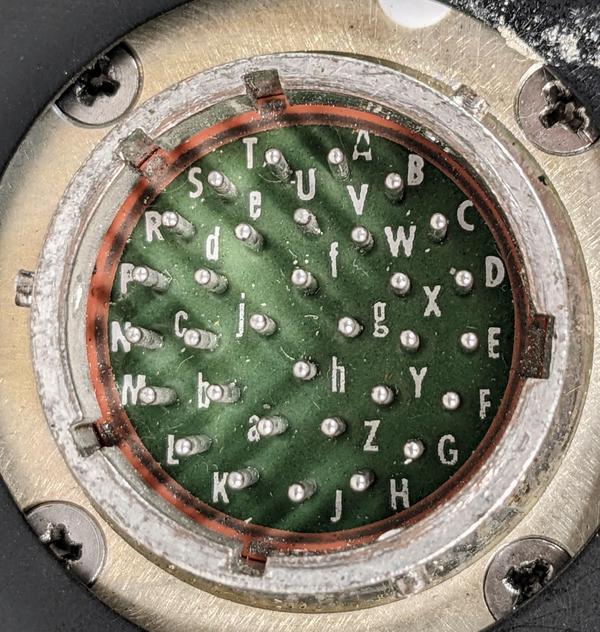

The back of the Globus has a 32-pin connector, a standard RS32TV Soviet military design. The case also has some dents visible; the dents were much larger before CuriousMarc smoothed them out.

The back of the Globus. -

The NASA tracking sites marked with dots are CYI (Grand Canary Island), ACN (Ascension), MAD (Madrid, Spain), TAN (Tananarive, Madagascar), GWM (Guam), ORR (Orroral, Australia), HAW (Hawaii), GDS (Goldstone, California), MIL (Merritt Island, Florida), QUI (Quito, Ecuador), AGO (Santiago, Chile), BDA (Bermuda), NFL (Newfoundland, Canada), and VAN (Vanguard tracking ship). Most of these sites were part of the Spacecraft Tracking and Data Network. The numbers 1-7 are apparently USSR communication sites, although I'm puzzled by 8 in Nova Scotia and 9 in Honduras. ↩

- Details on the earlier Globus IMP are at this site, including a discussion of the four different versions IMP-1 through IMP-4. Wikipedia also has information.