The groundbreaking 8086 microprocessor was introduced by Intel in 1978 and led to the x86 architecture that still dominates desktop and server computing. One way that the 8086 increased performance was by prefetching: the processor fetches instructions from memory before they are needed, so the processor can execute them without waiting on the (relatively slow) memory. I've been reverse-engineering the 8086 from die photos and this blog post discusses what I've uncovered about the prefetch circuitry.

The 8086 was introduced at an interesting point in microprocessor history, where memory was becoming slower than the CPU. For the first microprocessors, the speed of the CPU and the speed of memory were comparable.1 However, as processors became faster, the speed of memory failed to keep up. The 8086 was probably the first microprocessor to prefetch instructions to improve performance. While modern microprocessors have megabytes of fast cache2 to act as a buffer between the CPU and much-slower main memory, the 8086 has just 6 bytes of prefetch queue. However, this was enough to increase performance by about 50%.3

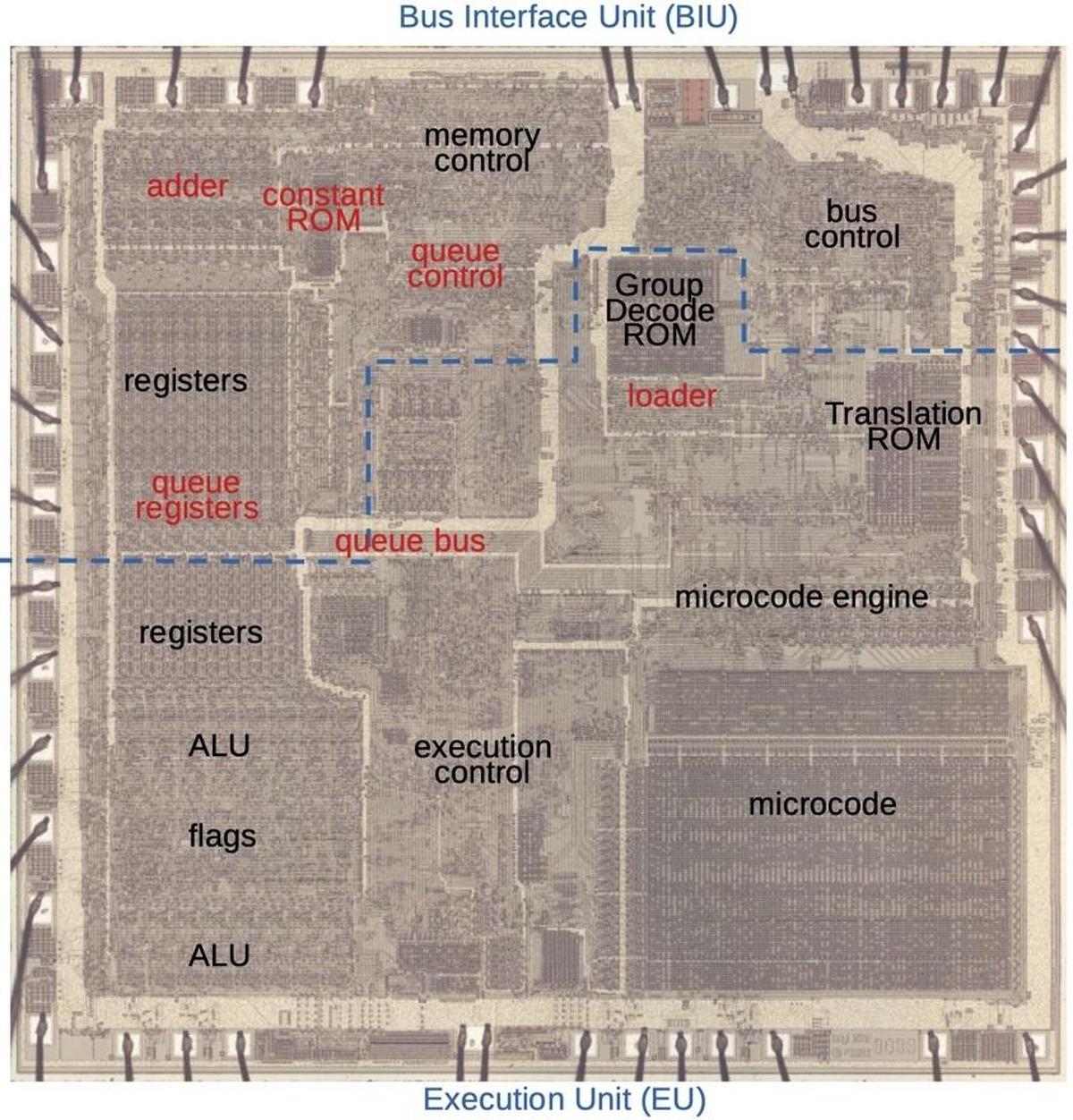

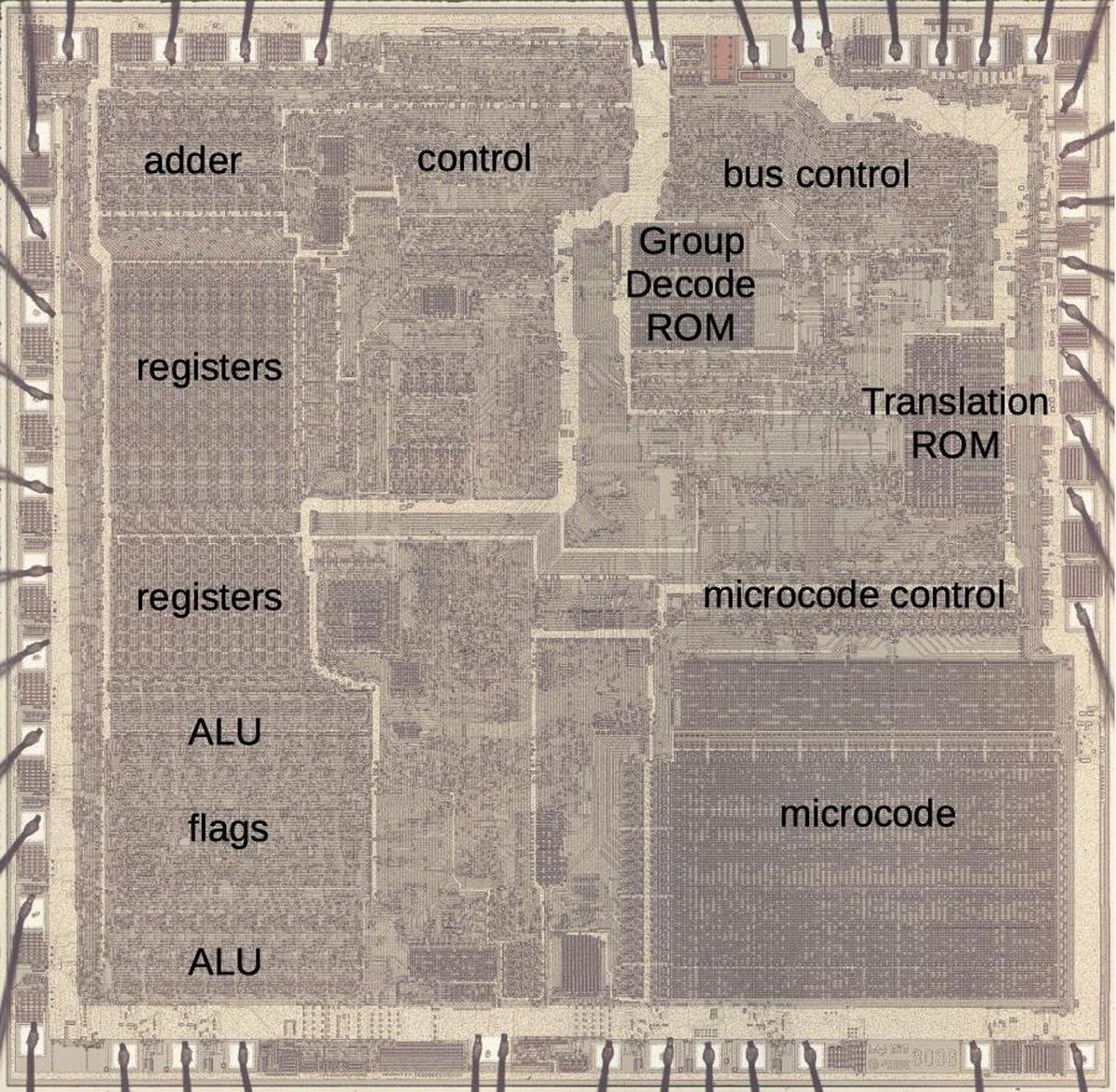

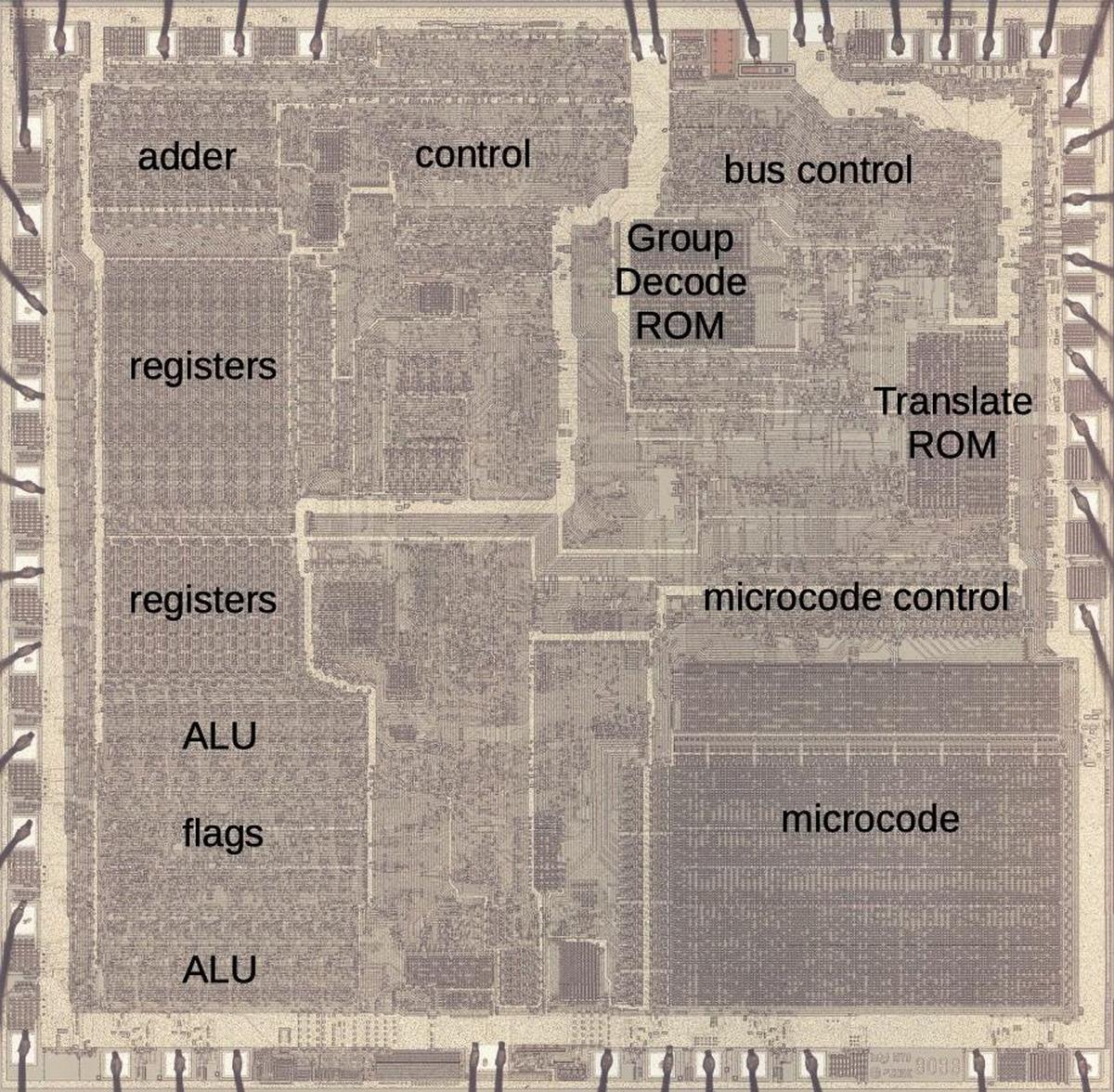

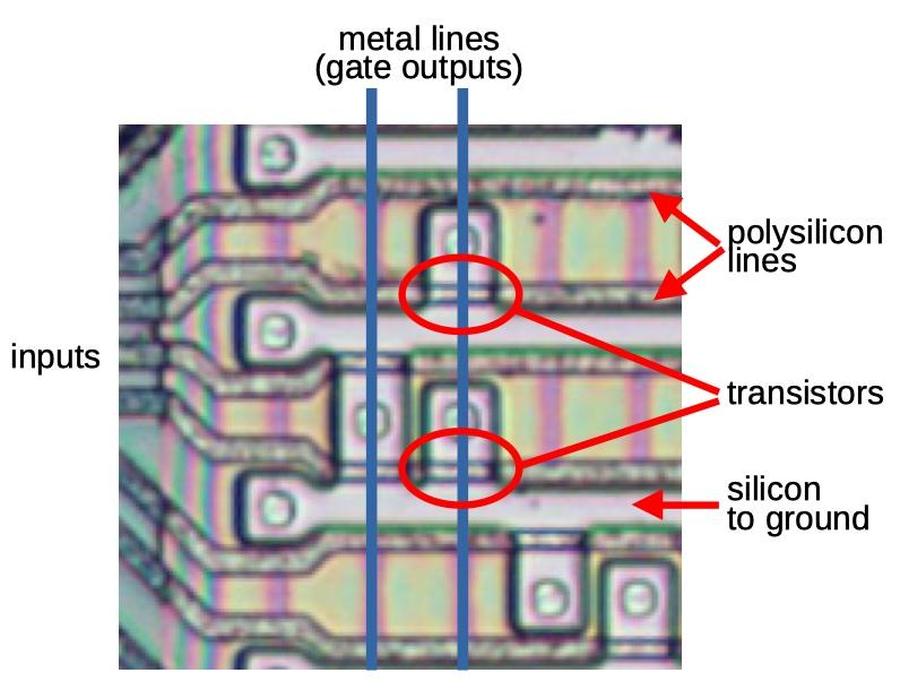

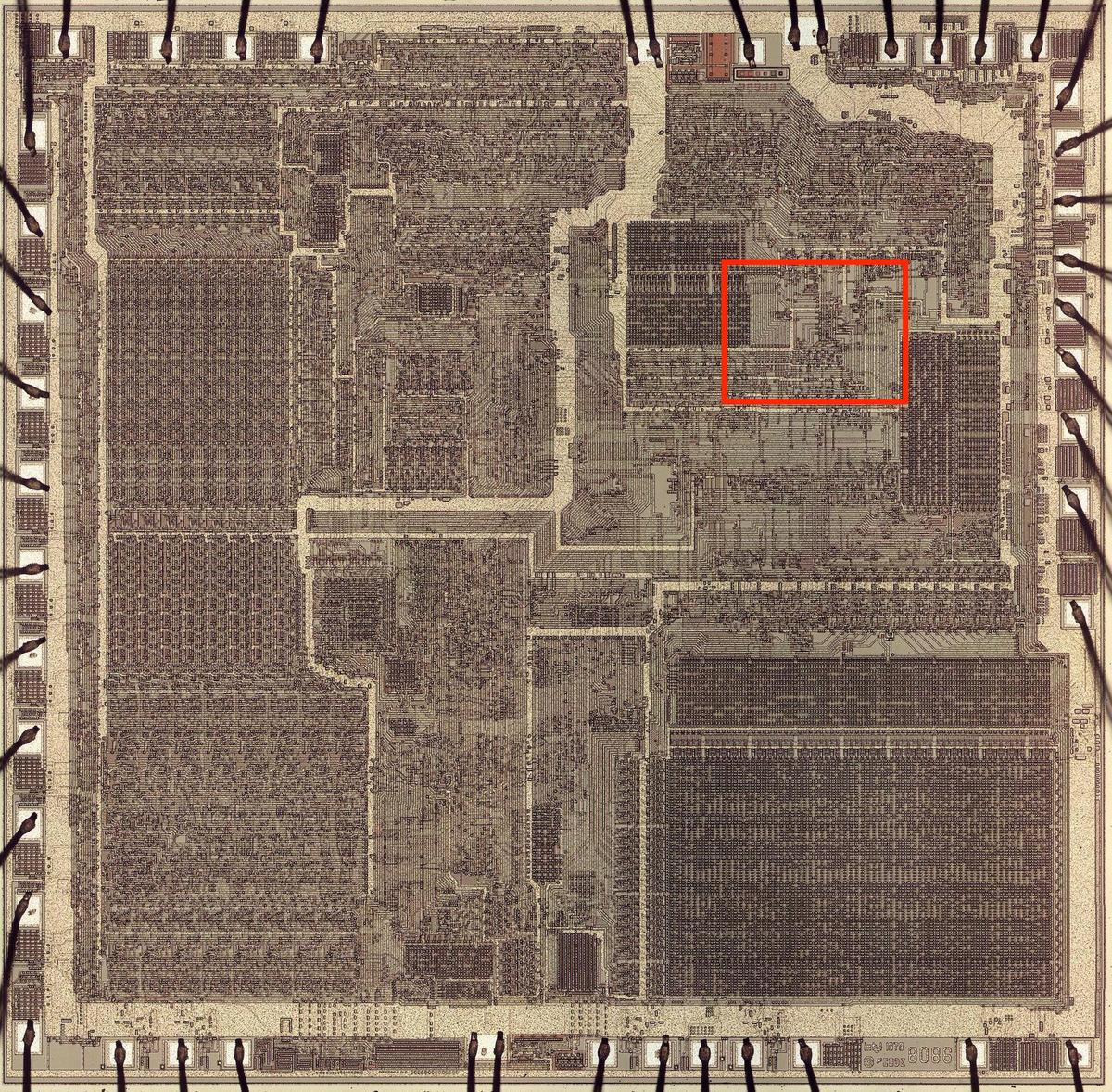

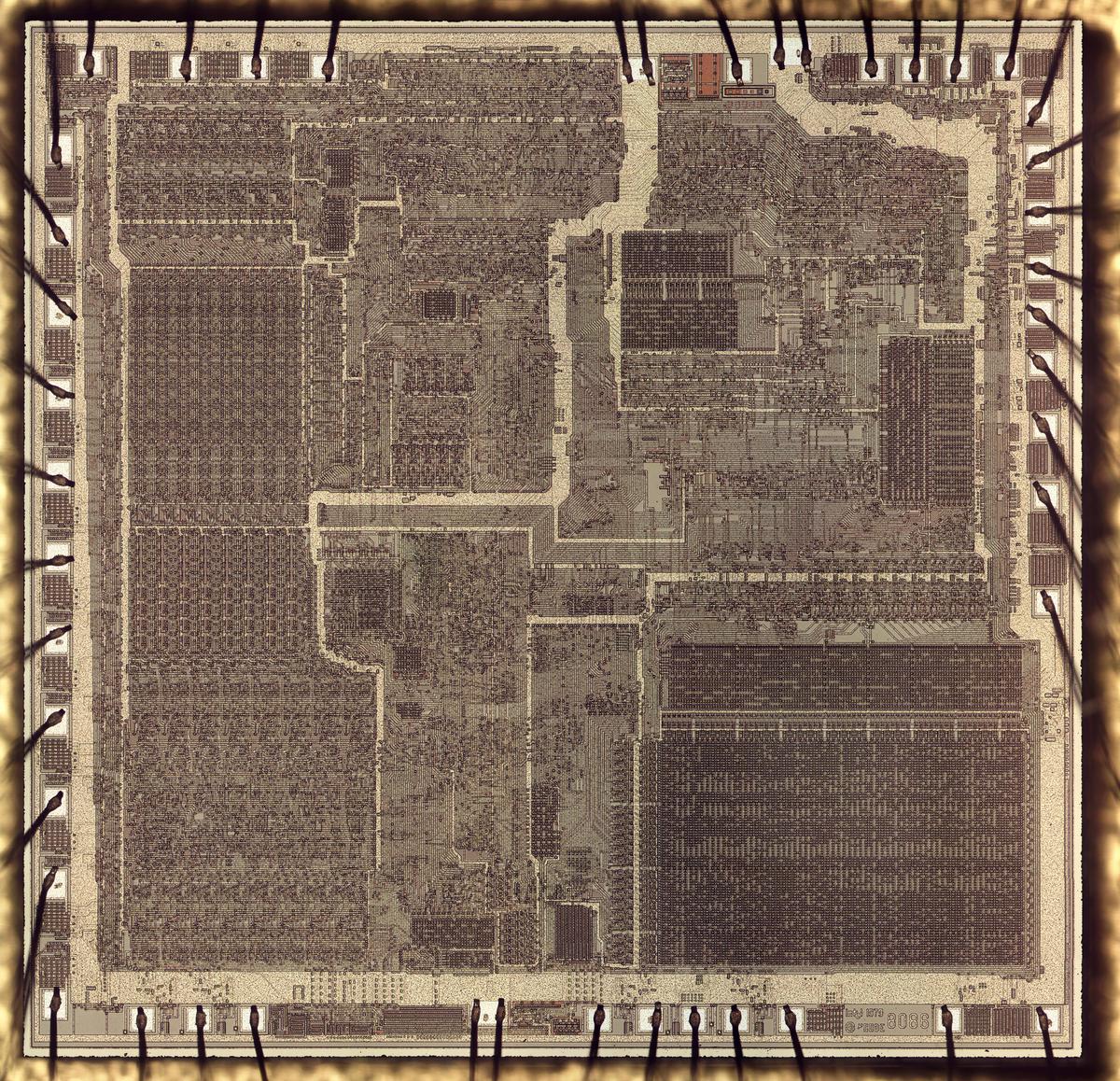

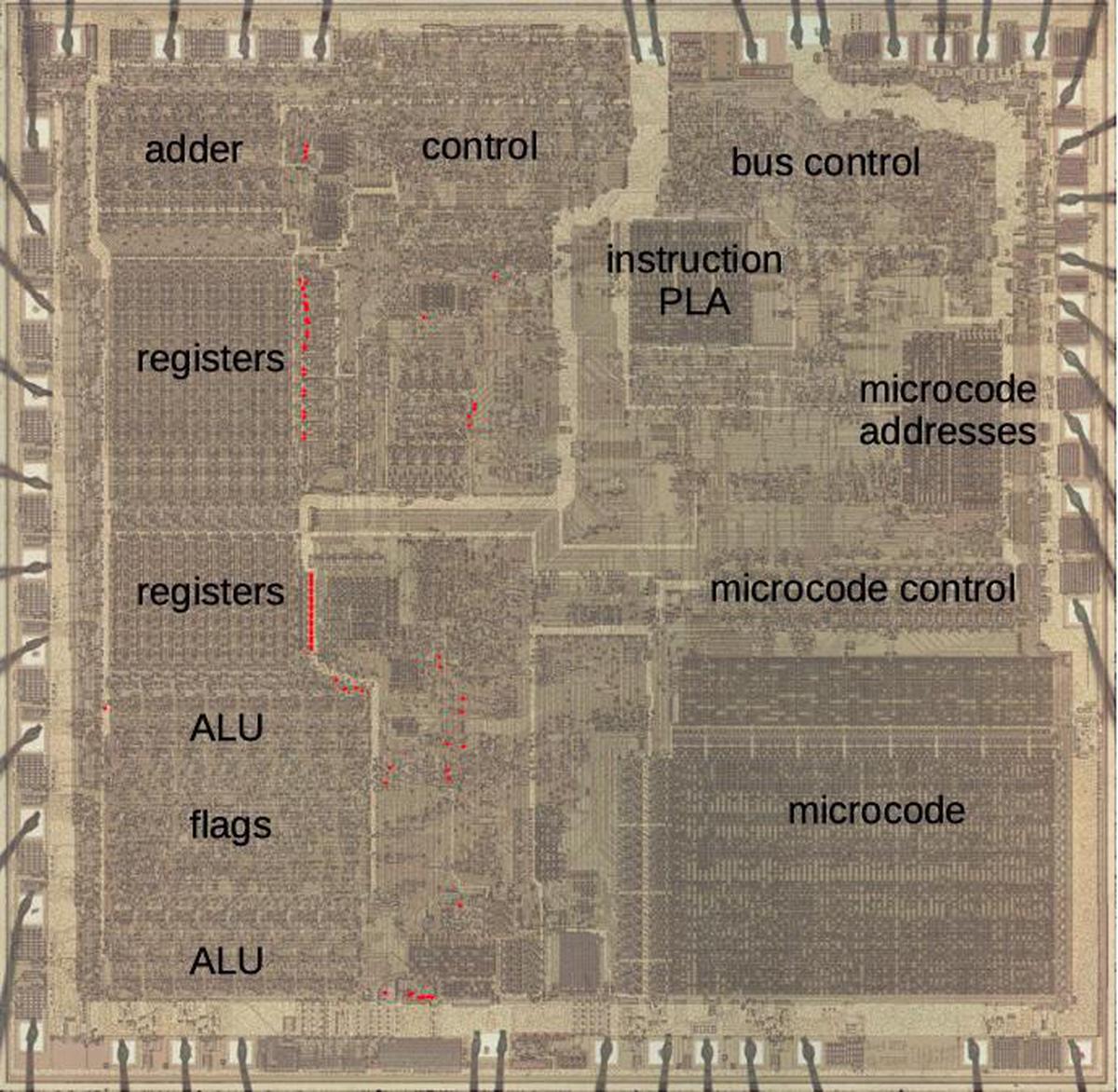

The die photo below shows the 8086 microprocessor under a microscope. The metal layer on top of the chip is visible, with the silicon and polysilicon mostly hidden underneath. Around the edges of the die, bond wires connect pads to the chip's 40 external pins. I've labeled the key functional blocks; ones that are important to the prefetch queue are highlighted in red and will be discussed in detail below. Architecturally, the chip is partitioned into a Bus Interface Unit (BIU) at the top and an Execution Unit (EU) below. The BIU handles memory accesses, while the Execution Unit (EU) executes instructions.

Prefetching and the architecture of the 8086

Prefetching had a major impact on the design of the 8086. Earlier processors such as the 6502, 8080, or Z80 were deterministic. The processor fetched an instruction, executed the instruction, fetched the next instruction, and so forth. Memory accesses corresponded directly to instruction fetching and execution and instructions took a predictable number of clock cycles. This all changed with the introduction of the prefetch queue. Memory operations became unlinked from instruction execution since prefetches happen as needed and when the memory bus is available.

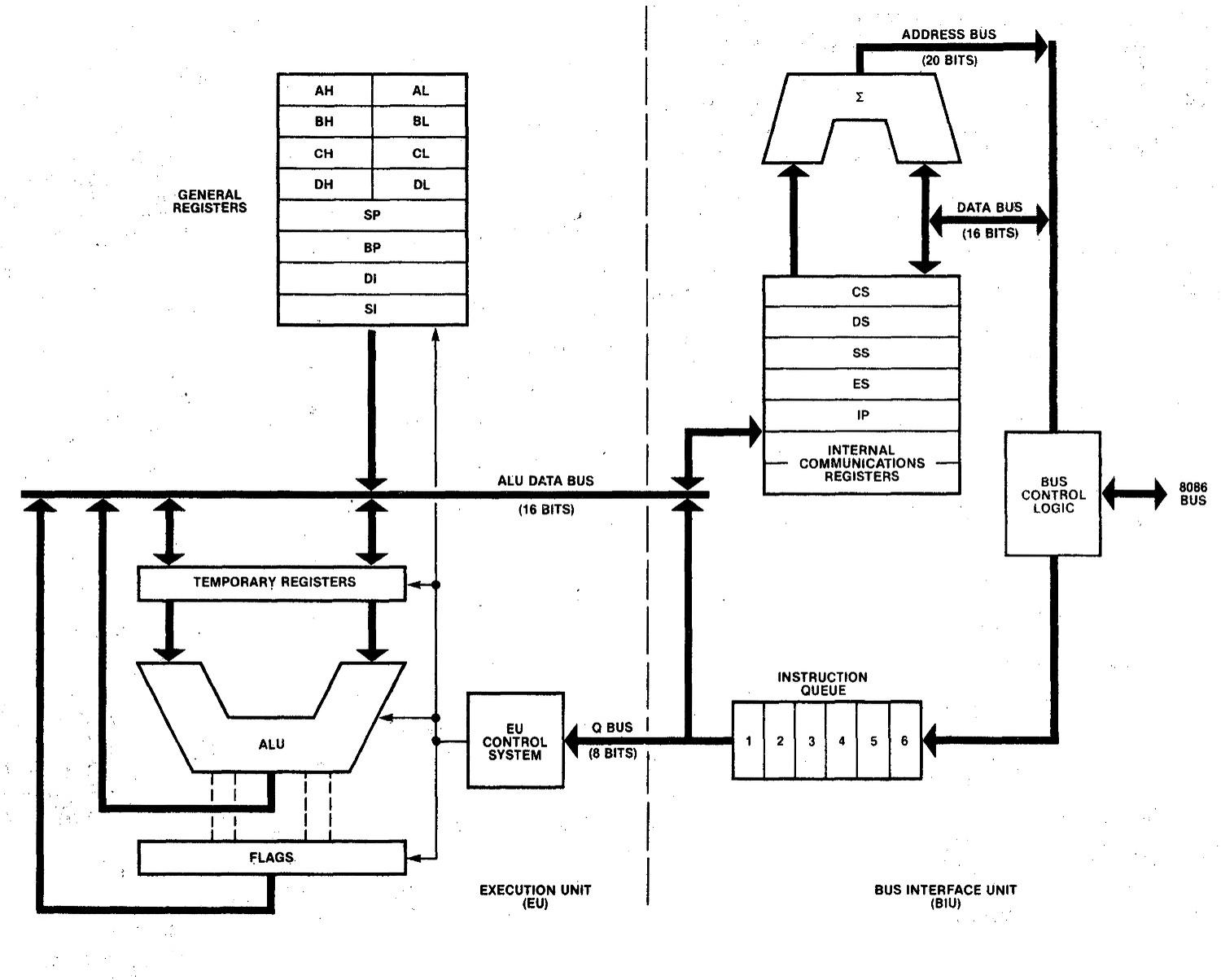

Since memory operations and instruction execution happen independently, the implementors of the 8086 split the chip into two processing units: the Bus Interface Unit (BIU) that handles memory accesses, and the Execution Unit (EU) that executes instructions, as shown below.4 The Bus Interface Unit contains the 6-byte instruction prefetch queue; it supplies instructions to the Execution Unit via the Q (queue) bus. The adder (Σ) performs address calculation, adding the segment register base to an address offset, among other things. The Execution Unit is what comes to mind when you think of a processor: it has most of the registers, the arithmetic/logic unit (ALU), and the microcode that implements instructions. The address adder and the ALU are independent arithmetic units. The segment registers (CS, DS, SS, ES) and the Instruction Pointer (IP) are in the Bus Interface Unit since they are directly involved in memory accesses, while the general-purpose registers are in the Execution Unit.

The 8086's segment registers play an important part in this architecture, so I'll review them quickly. One of the challenges of the 8086 was how to support more than 64K of memory with 16-bit registers. The much-reviled solution was to create a 1-megabyte (20-bit) address space consisting of 64K segments, with segment registers indicating the start of each segment. Specifically, a memory address was specified by a 16-bit offset address along with a particular segment register selecting a segment (Code Segment, Data Segment, Stack Segment, or Extra Segment). The segment register's value was shifted by 4 bits to give the segment's 20-bit base address. The 16-bit offset address was added, yielding a 20-bit memory address. This gave the processor a 1-megabyte address space, although only 64K could be accessed without changing a segment register.

It may seem inefficient for the Bus Interface Unit to have its own adder instead of using the ALU, but there are a couple of reasons for the separate adder. First, every memory access uses the adder at least once to add the segment base and offset. The adder is also used to increment the PC or index registers. Since these operations are so frequent, they would create a bottleneck if they used the ALU. Second, since the Execution Unit and the Bus Interface Unit run asynchronously with respect to each other, it would be complicated to share the ALU without causing delays and conflicts.

Prefetching had another major but little-known effect on the 8086 architecture: the designers were considering making the 8086 a two-chip microprocessor. Prefetching, however, required a one-chip design because the number of control signals required to synchronize prefetching across two chips exceeded the package pins available. This became a compelling argument for the one-chip design that was used for the 8086.3 (The unsuccessful Intel iAPX 432, which was under development at the same time, ended up being a two-chip processor: one to fetch and decode instructions, and one to execute them.)

Implementing the queue

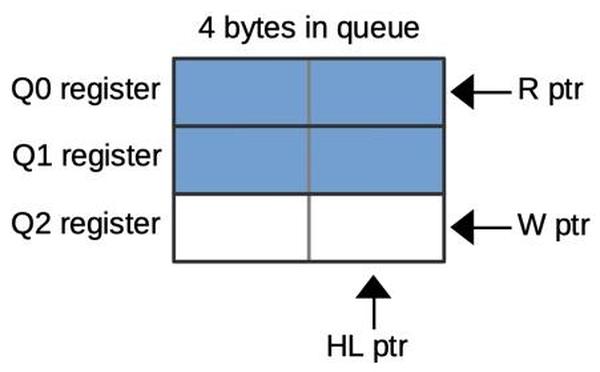

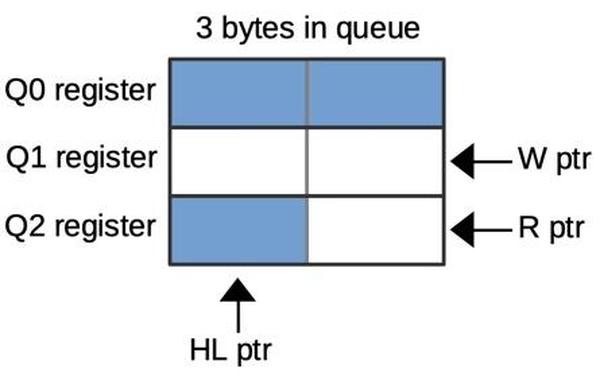

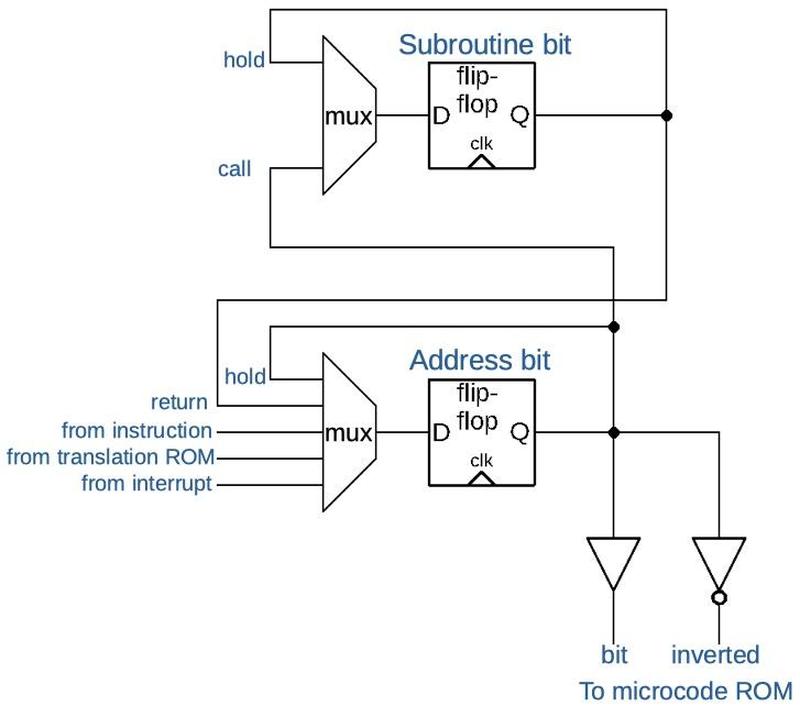

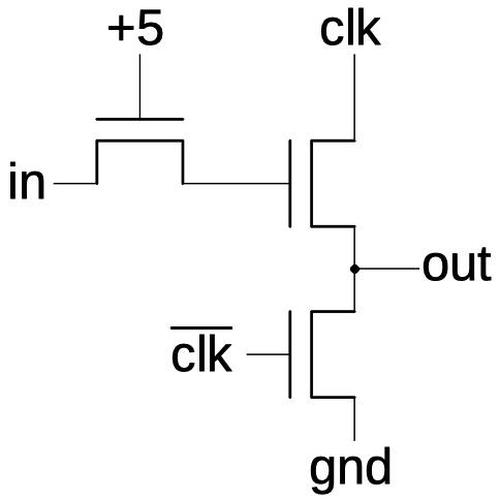

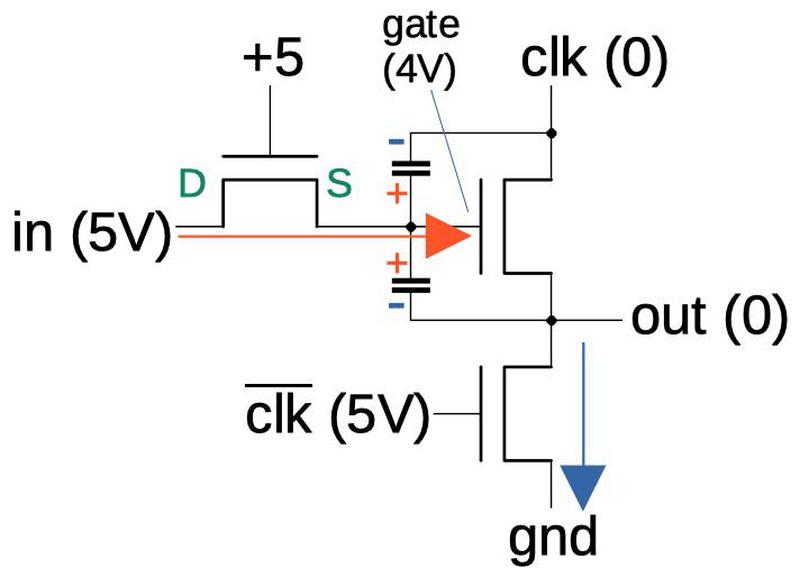

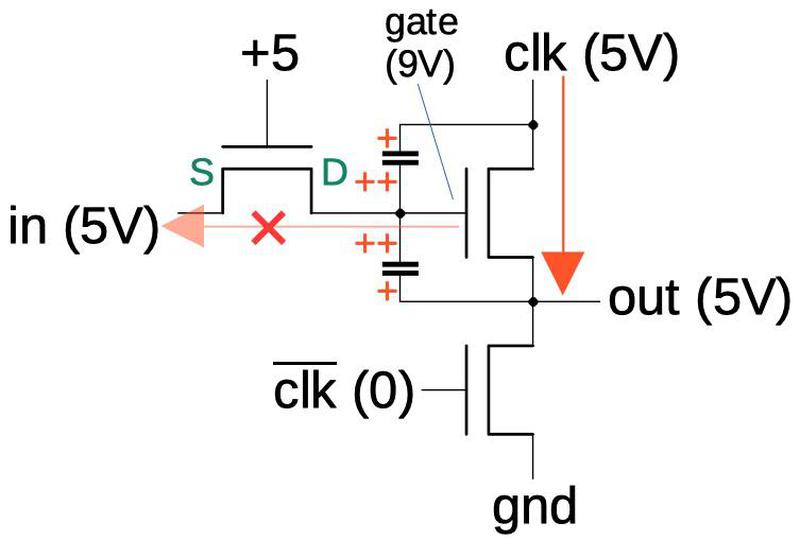

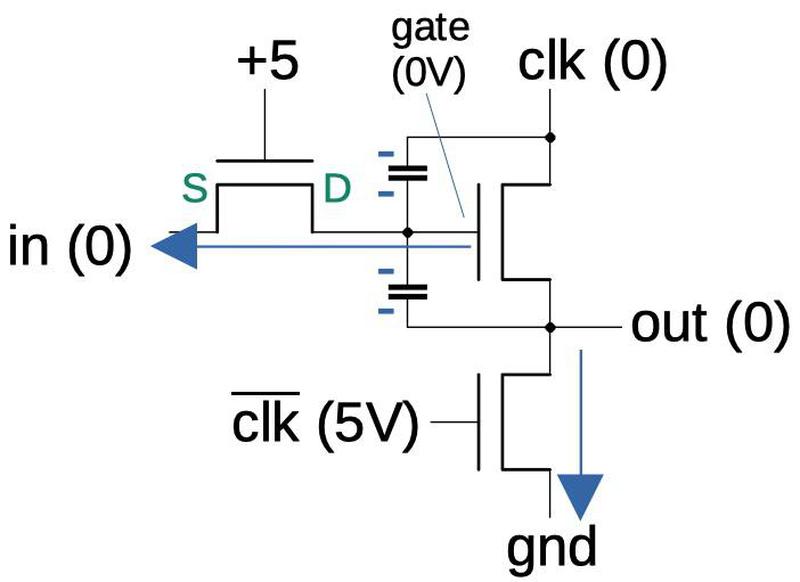

The instruction prefetch queue is implemented with three 16-bit queue registers along with two hardware pointers that keep track of the current position in the queue. One two-bit counter keeps track of the current read position from 0 to 2, i.e. the queue register that will provide the next instruction. The second counter keeps track of the current write position, i.e. the queue register that will receive the next instruction from memory. As words are fetched from the queue, the read pointer advances. As words are added to the queue, the write pointer advances. Because the queue registers hold words, while the prefetch circuitry provides bytes, another flip-flop keeps track of whether the high byte or the low byte of the word is being used. I call this the HL flip-flop. This causes the low byte to be provided first and then the high byte (since the 8086 is little-endian).

The diagram below shows an example queue configuration with four bytes. The first two queue registers (Q0 and Q1) hold data. The read pointer and HL pointer indicate that the next prefetched byte will come from the low byte of Q0. The write pointer indicates that the next prefetched word will go into Q2.

The diagram below shows how the queue pointers can wrap around. In this configuration, one byte has been used from Q2 so the next byte will be Q2's high byte. Q0 holds the next prefetched word. The next word to be prefetched will be stored in Q1, as indicated by the write pointer.

The relative positions of the write and read pointers indicate how much data is in the queue. If the write pointer is one position before the read pointer (modulo 3), the queue holds 3 or 4 bytes. If the write pointer is one position after the read pointer (modulo 3), the queue holds 1 or 2 bytes. But what about when the read pointer and write pointer indicate the same register? This can either indicate that the queue is empty or that the queue is full (5 or 6 bytes). To distinguish these cases, a flip-flop is set if the queue enters the empty state. This flip-flop generates a signal that Intel called MT (empty).

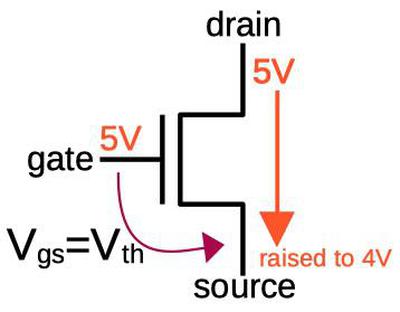

Another complication occurs if you jump to an odd address. Because of its 16-bit bus, the 8086 will prefetch a word from the even address one less. This loads one usable byte and one byte that needs to be discarded. The 8086 handles this by setting the HL flip-flop high, using a handful of gates to detect this case. As in the diagram above, the unwanted low byte will be skipped.

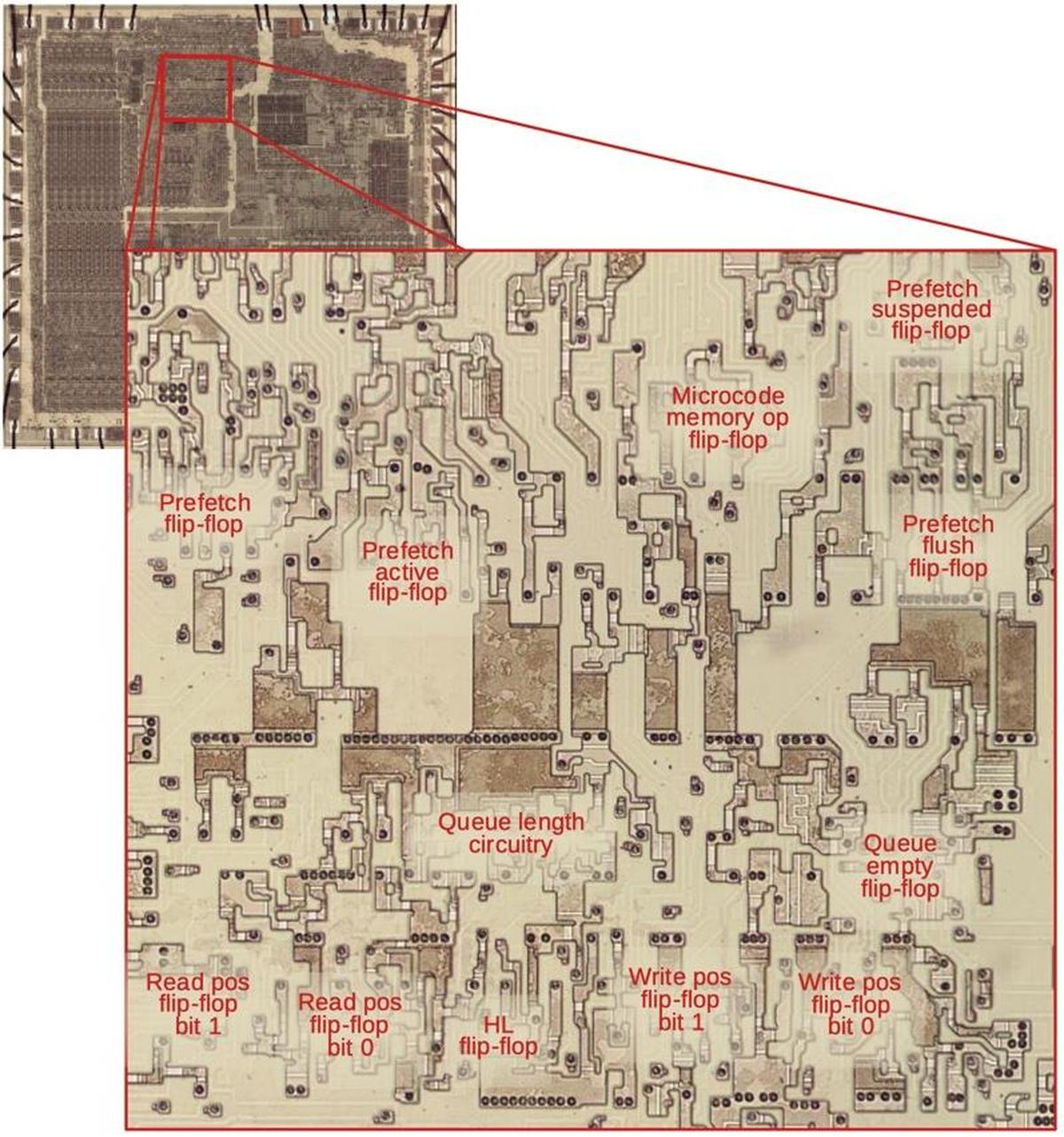

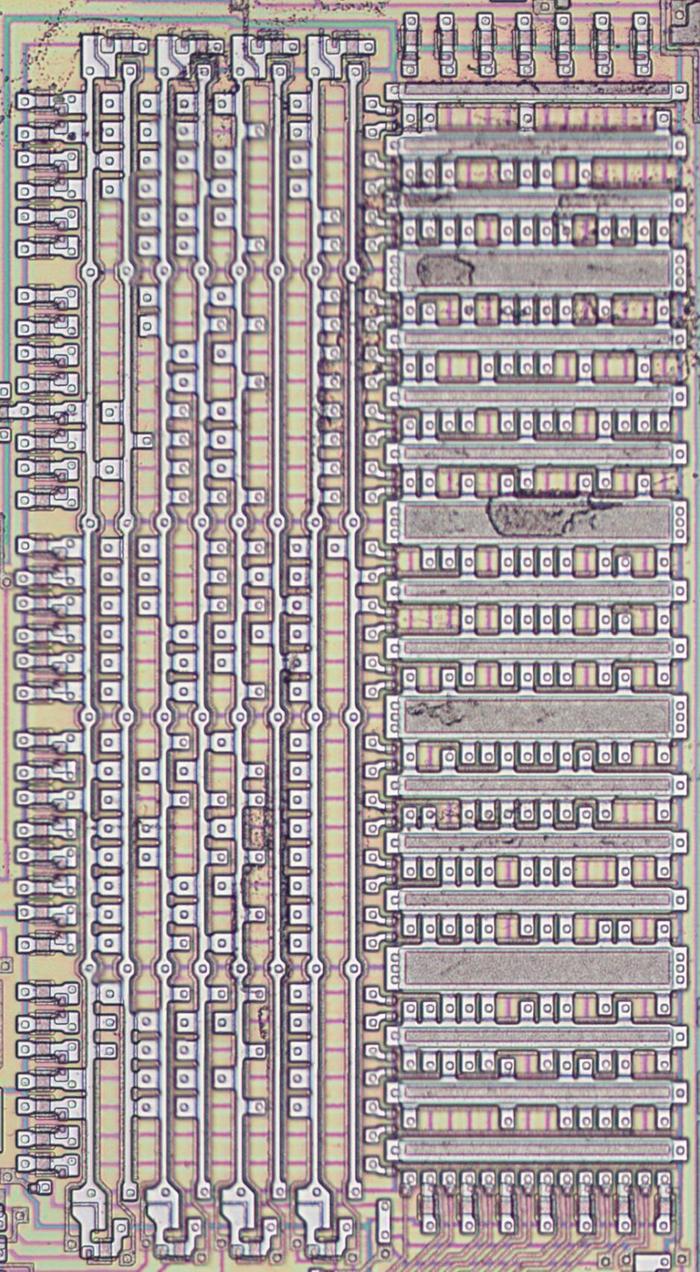

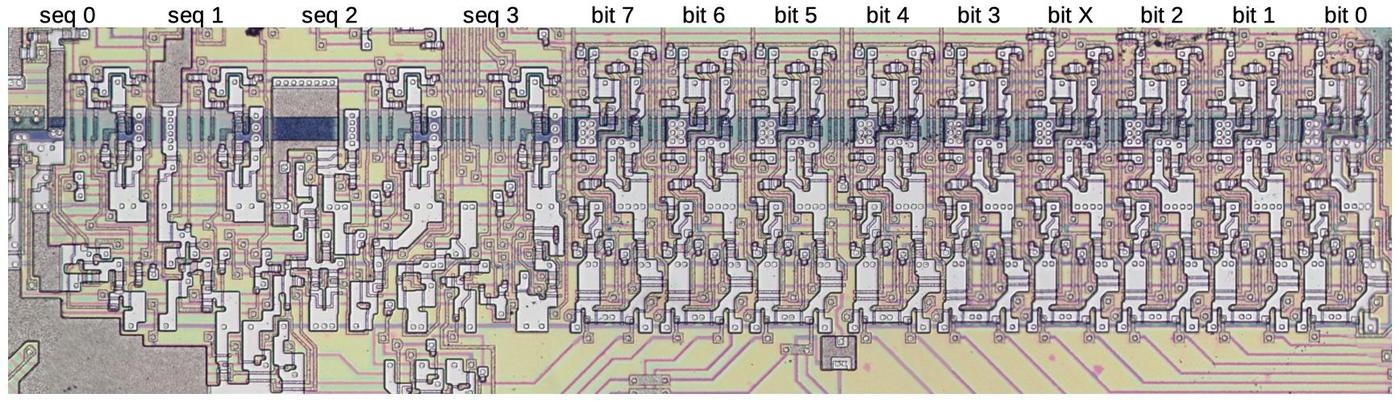

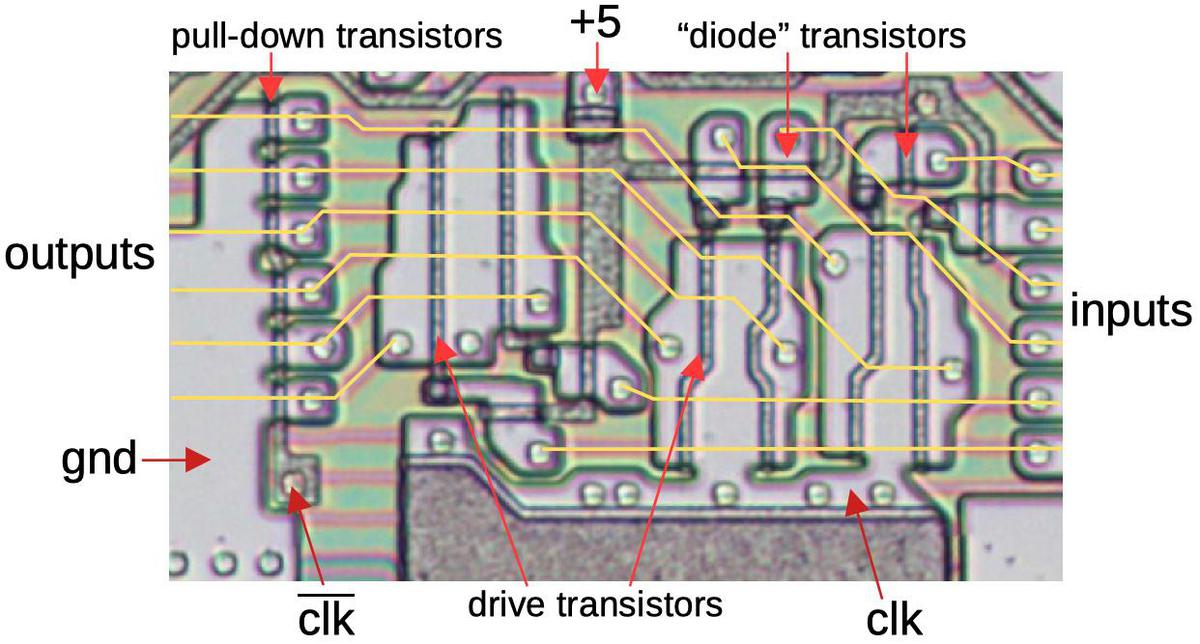

The diagram below zooms in on the prefetch and queue control circuitry on the die, with the main flip-flops and circuitry labeled. The lower half manages the queue, keeping track of the read and write positions and computing the queue length. The upper circuitry controls prefetch operations and interacts with the rest of the memory cycle circuitry.

Even though there is not a lot of circuitry involved (about a dozen flip-flops and associated logic gates), this circuitry occupies a substantial part of the 8086 die. (The relatively small amount of circuitry did not make this easy to reverse-engineer, however!) Compared to modern chips, the density of the 8086 is very low; you can almost see the flip-flops with the naked eye. This diagram only shows the circuitry directly involved in prefetching. Additional circuitry is scattered through the memory cycle control circuitry to deal with prefetching, and the queue registers take up a substantial part of the register file. Thus, prefetching was a moderately expensive feature for the 8086, as far as die area.

The loader

To decode and execute an instruction, the Execution Unit must get instruction bytes from the Bus Interface Unit, but this is not entirely straightforward. The main problem is that the queue can be empty, in which case instruction decoding must block until a byte is available from the queue. The second problem is that instruction decoding is relatively slow, so for maximum performance, the decoder needs a new byte before the current instruction is finished. A circuit called the "loader" solves these problems by providing synchronization between the prefetch queue and the instruction decoder. The loader uses a small state machine to efficiently fetch bytes from the queue at the right time and to provide timing signals to the decoder and microcode engine.

In more detail, as the loader requests the first two instruction bytes from the prefetch queue, it generates two timing signals that control the microcode execution. The FC (First Clock) indicates that the first instruction byte is available, while the SC (Second Clock) indicates the second instruction byte. Note that the First Clock and Second Clock are not necessarily consecutive clock cycles because the prefetch queue could be empty or contain just one byte, in which case the First Clock and/or Second Clock would be delayed. The instruction decoding circuitry and the microcode engine are controlled by the First Clock and Second Clock signals, so they remain synchronized with the bytes supplied by the prefetch queue.

At the end of a microcode sequence, the Run Next Instruction (RNI) micro-operation causes the loader to fetch the next machine instruction. However, fetching and decoding the next instruction is a bit slow so microcode execution would be blocked for a cycle. In many cases, this slowdown can be avoided: if the microcode knows that it is one micro-instruction away from finishing, it issues a Next-to-last (NXT) micro-operation so the loader can start loading the next instruction. This achieves a degree of pipelining in most cases; fetching the next instruction is overlapped with finishing the execution of the previous instruction.

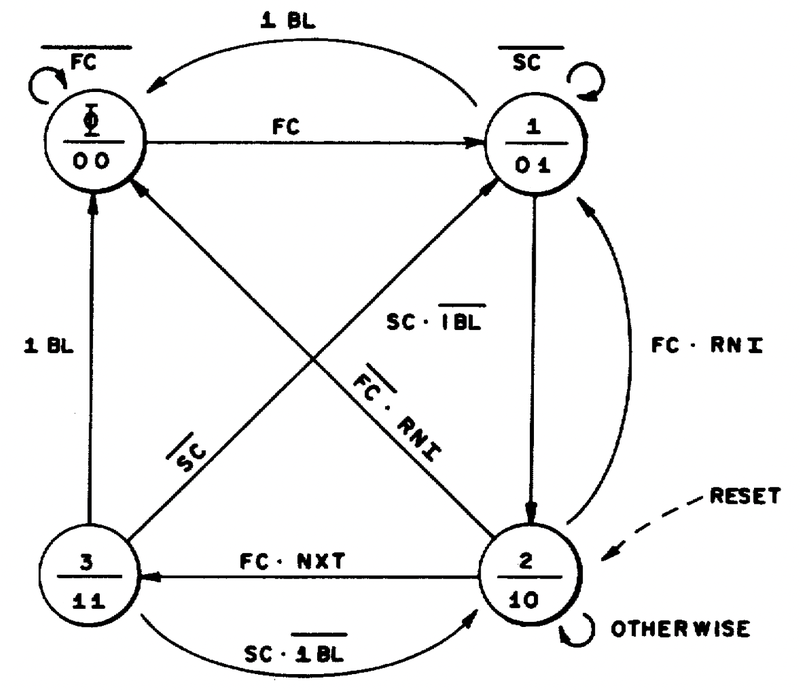

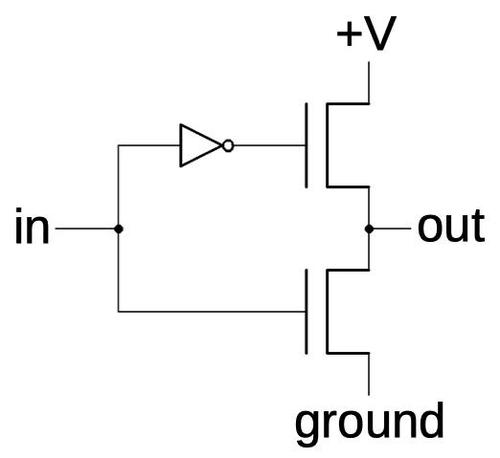

The diagram above shows the state machine for the loader. I won't explain it in detail, but essentially it keeps track of whether it is waiting for a First Clock byte or a Second Clock byte, and if it is performing a fetch in advance (NXT) or at the end of an instruction (RNI). The state machine is implemented with two flip-flops to support its four states.

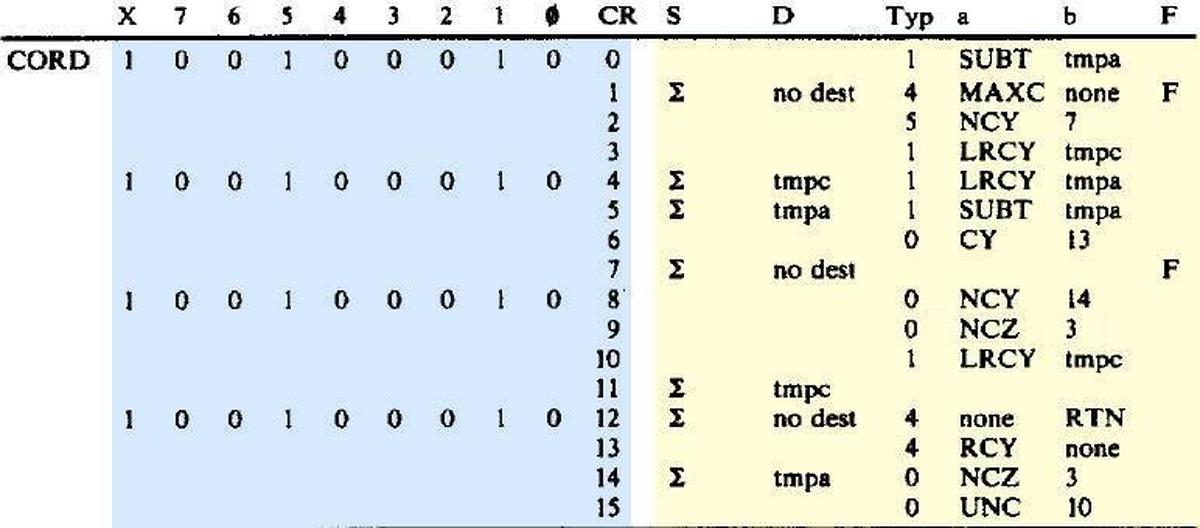

Other memory accesses

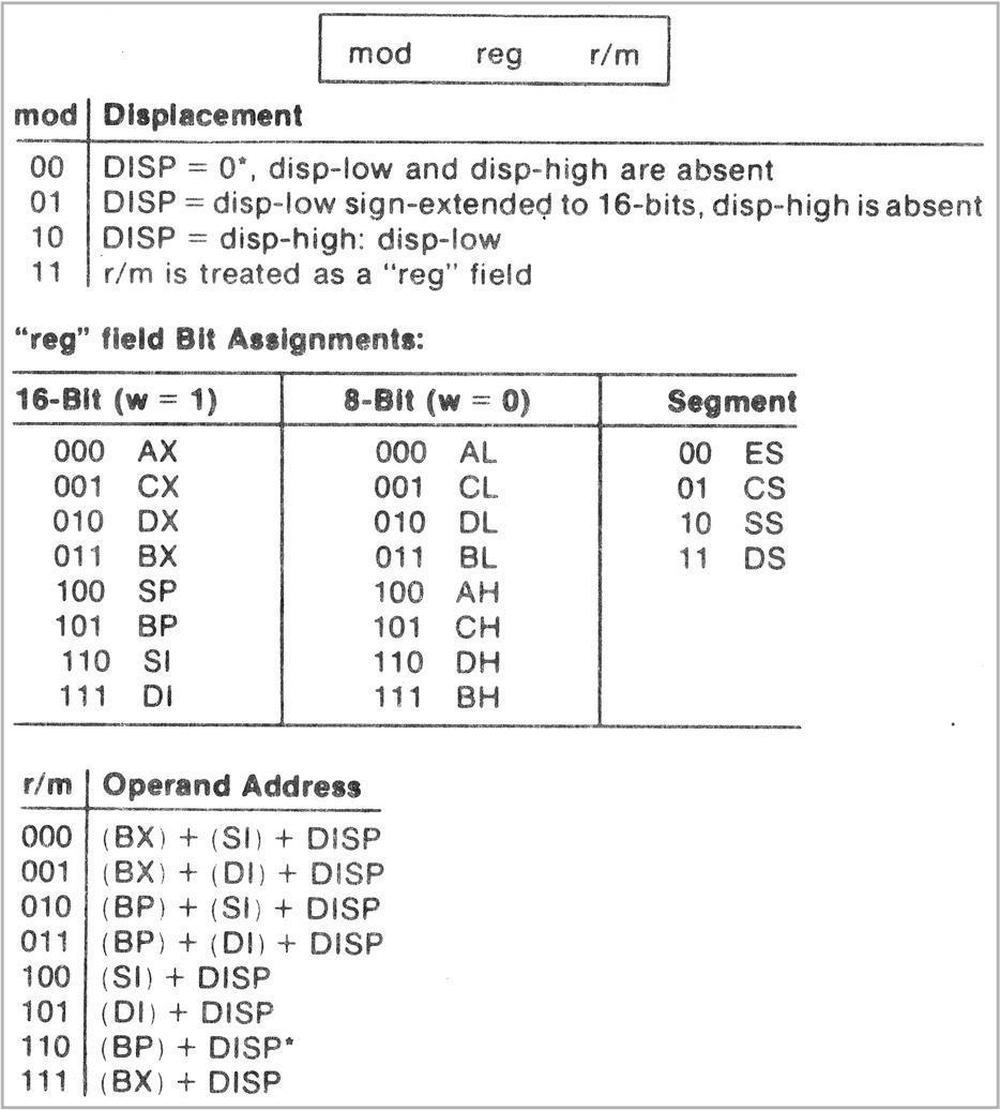

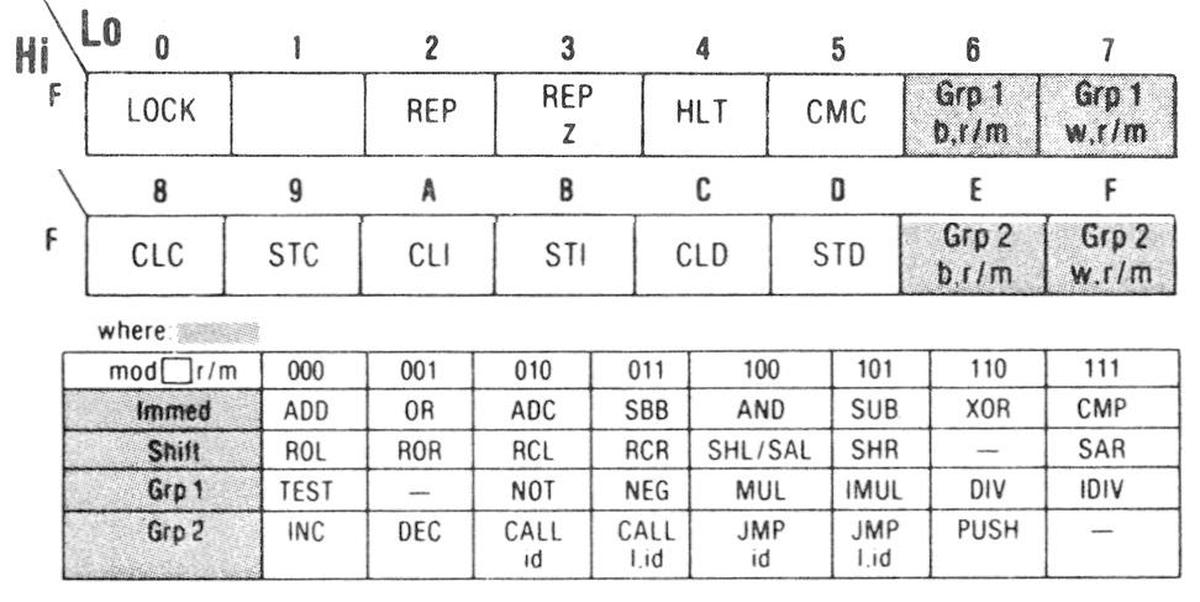

The loader takes care of fetching an instruction that consists of an opcode byte and a Mod R/M (addressing mode) byte. However, many instructions have additional bytes or don't follow this format For example, an opcode such as "ADD AX" can be followed by an 8- or 16-bit immediate value, adding that value to the AX register. Or a "move memory to AX" instruction can be followed by a 16-bit memory address The microcode uses a separate mechanism for fetching these instruction bytes from the queue. Specifically, each micro-instruction contains a source register and a destination register that specify a data move. By specifying "Q" (the queue) as the source, a byte is fetched from the prefetch queue.

A third path is used for arbitrary memory reads and writes, such as when an instruction stores a register's contents to memory. In this case, the microcode puts the memory address in the IND (indirect) register. The microcode then issues a read or write micro-operation which causes the memory contents to be read into the OPR (operand register) or written from the OPR. (The IND and OPR registers are internal 8086 registers that are not visible to the programmer.) In the 8086, a memory cycle takes at least four clock cycles (called T1 through T4), including adding the segment register to compute the memory address. An "unaligned" memory access takes twice as long, though, because the 8086 has a word-based 16-bit data bus. Thus, if you try to access a word from an odd address, two memory accesses are required, one for the first byte and one for the second byte.5

As you can see, a memory access is a fairly complex operation. In the 8086, all these steps are done by hardware in the Bus Interface Unit, rather than being performed by microcode. (I'll discuss the complex memory control circuitry in detail in a future post.) After issuing a memory read or write, the microcode engine is blocked until the memory request completes.

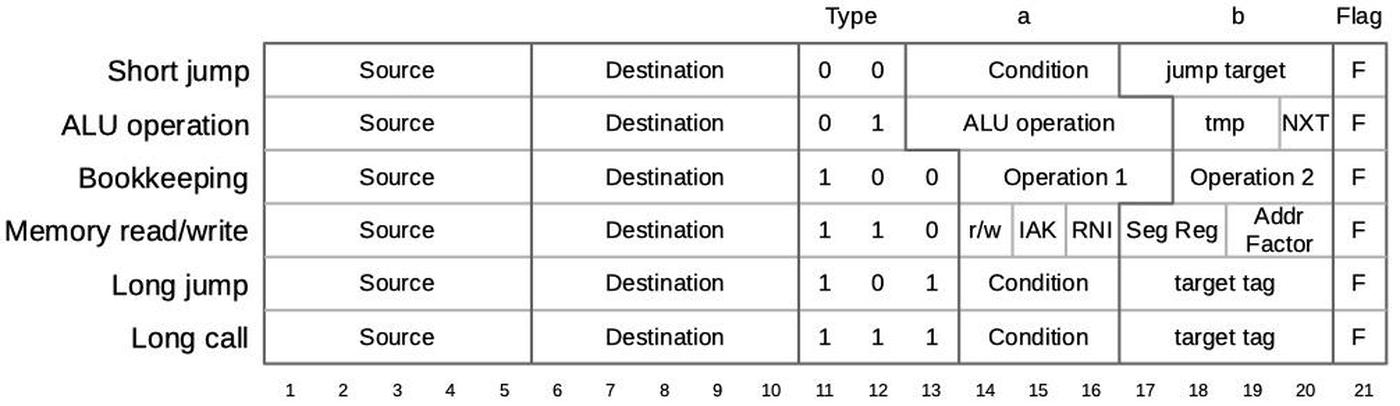

Microcode instructions and the correction circuitry

The microcode interacts with prefetching in several ways. In addition to requesting a byte from the queue (as discussed above), microcode can perform three micro-instructions that involve prefetching: SUSP, FLUSH, and CORR. The SUSP (suspend) micro-instruction stops prefetching, typically before a change to execution flow. The FLUSH micro-instruction flushes the prefetch queue and resumes prefetching. To implement these, the prefetching circuitry has a flip-flop to keep track of the suspended state, and logic to reset the queue pointer counters to flush the queue.

The CORR (correct) micro-instruction corrects the Instruction Pointer to point to the next execution position. This is an interesting and more complicated micro-instruction. Like most processors, the 8086 has a program counter (PC) to keep track of what instruction to execute; the 8086 calls this the Instruction Pointer (IP). In the programmer's view, the Instruction Pointer points to the memory address of the next instruction to execute. However, in the hardware, the Instruction Pointer points to the next instruction to be fetched, which is generally several bytes after the next instruction to be executed.6

For the most part, this doesn't matter; the queue provides instructions in the order they were fetched and it doesn't matter if the Instruction Pointer runs ahead. However, there are a few cases where the "real" Instruction Pointer address is needed. For example, a relative jump instruction causes execution to jump to an address relative to the current instruction. When performing a subroutine call, the return address must be pushed on the stack. The correct Instruction Pointer value is also needed for an interrupt. Thus, the 8086 needs a mechanism to compute the real Instruction Pointer value from the value in the Instruction Pointer register.

The solution is the CORR micro-instruction, which corrects the Instruction Pointer value by subtracting the prefetch queue length, so the Instruction Pointer holds the "true" value. For instance, if there are 4 bytes in the queue, then the address in the Instruction Pointer register is four more than the desired Instruction Pointer address. The Bus Interface Unit performs this subtraction by using the addressing adder and a small table of constants called the Constant ROM.7

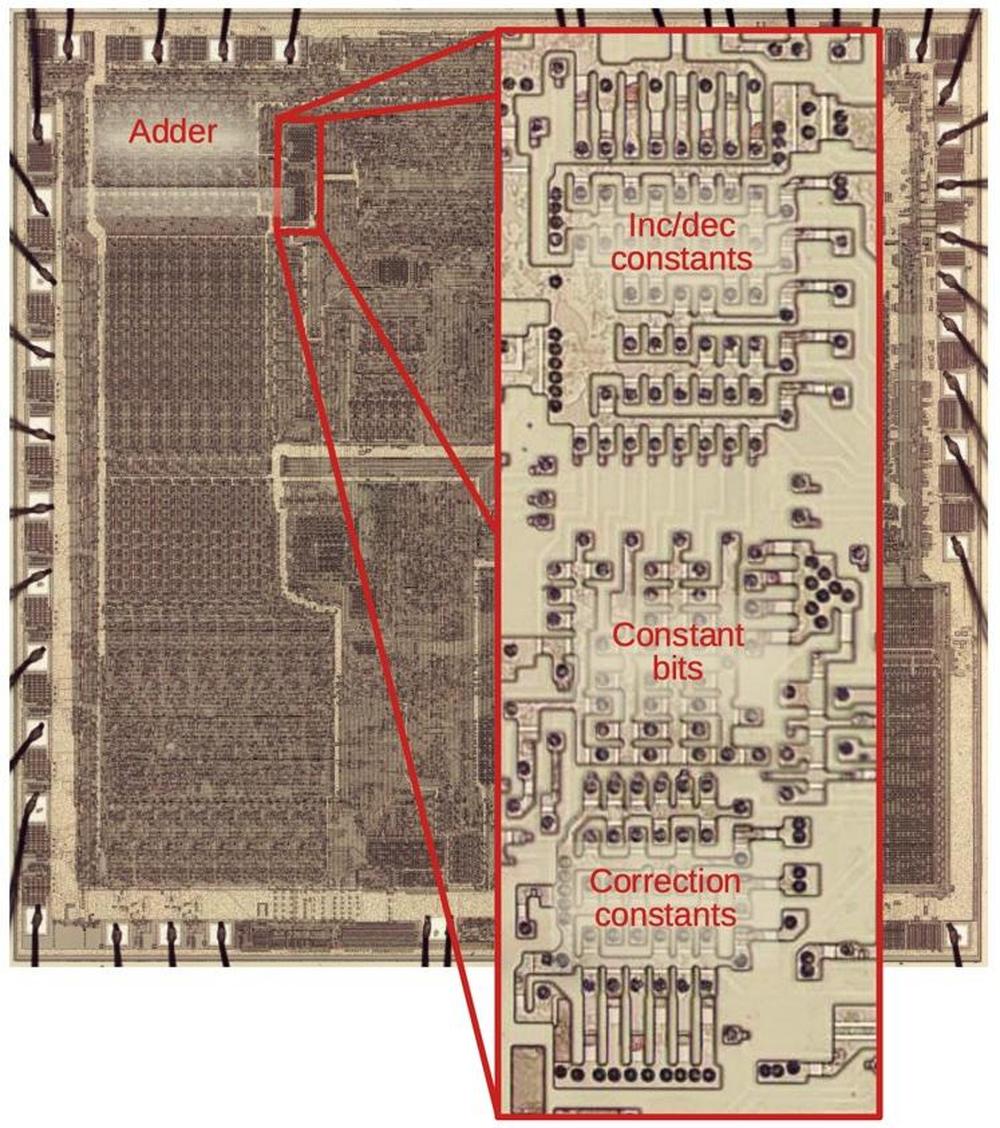

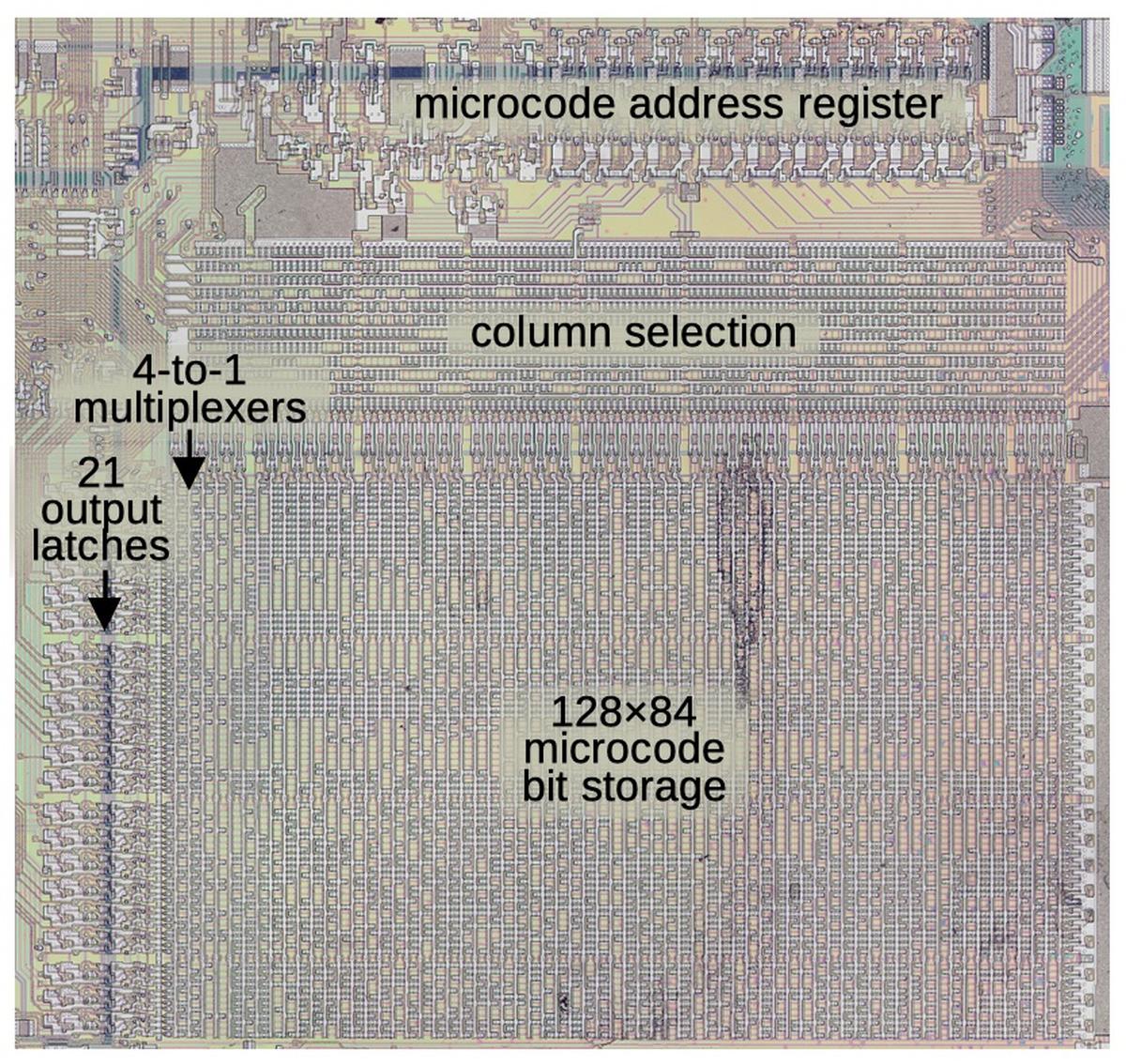

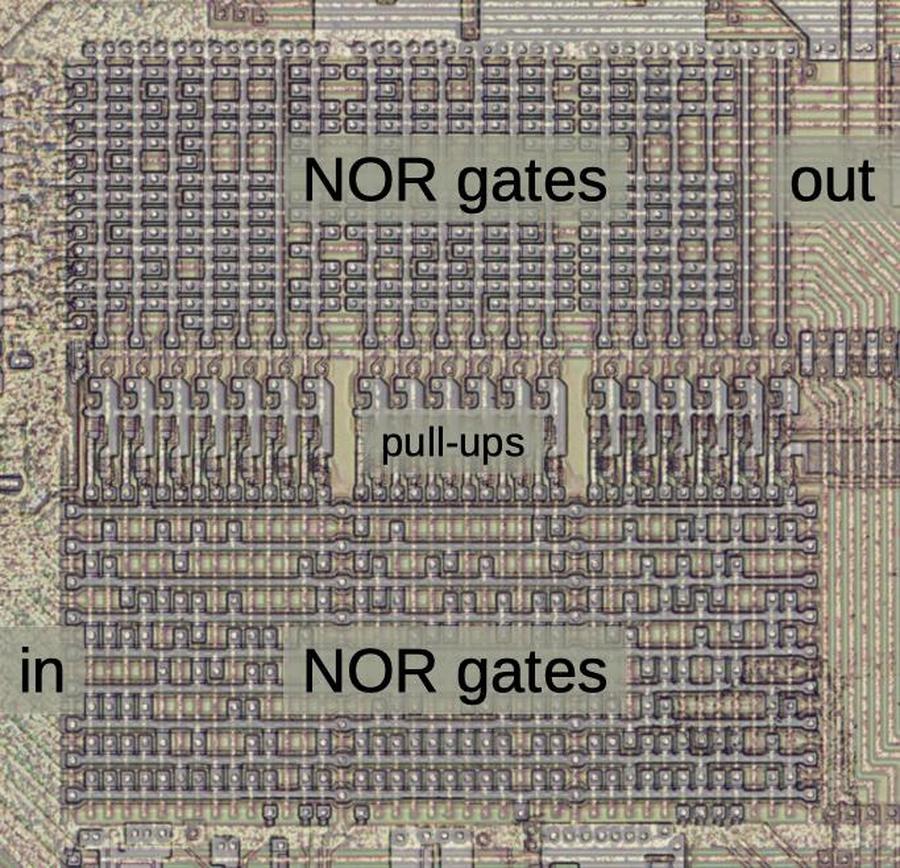

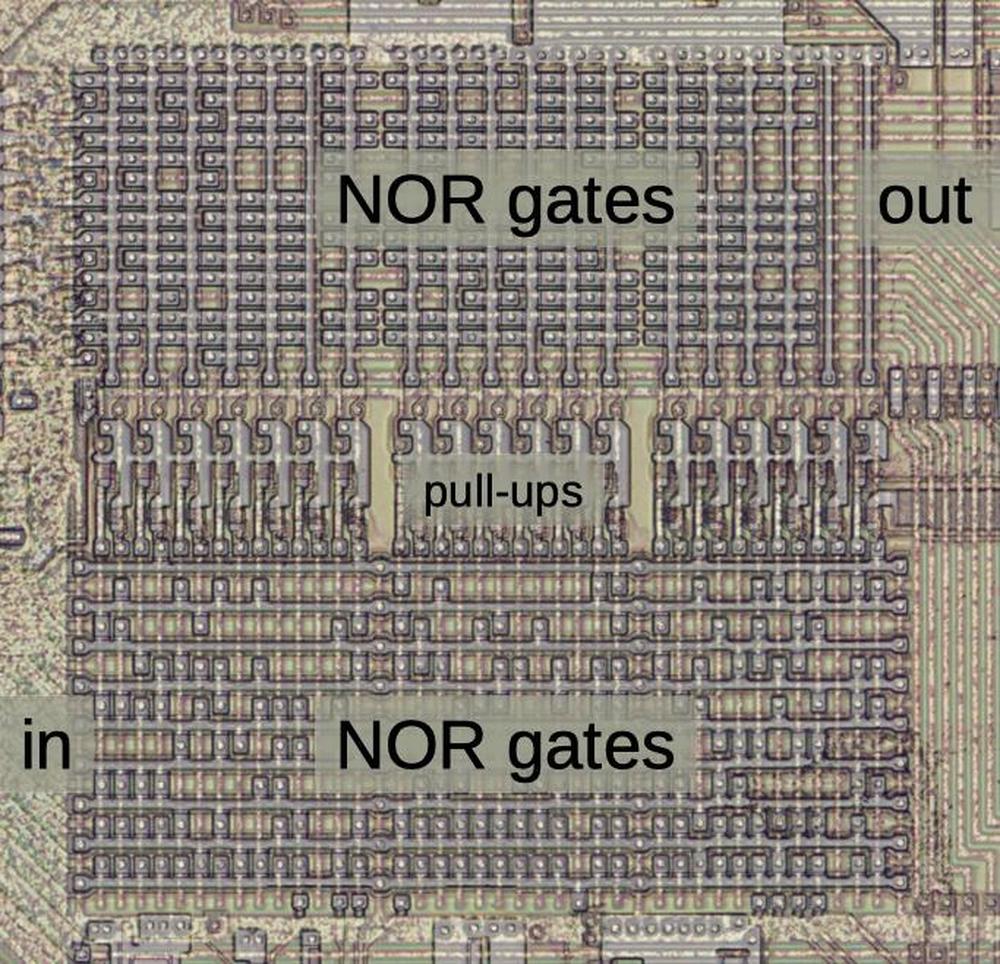

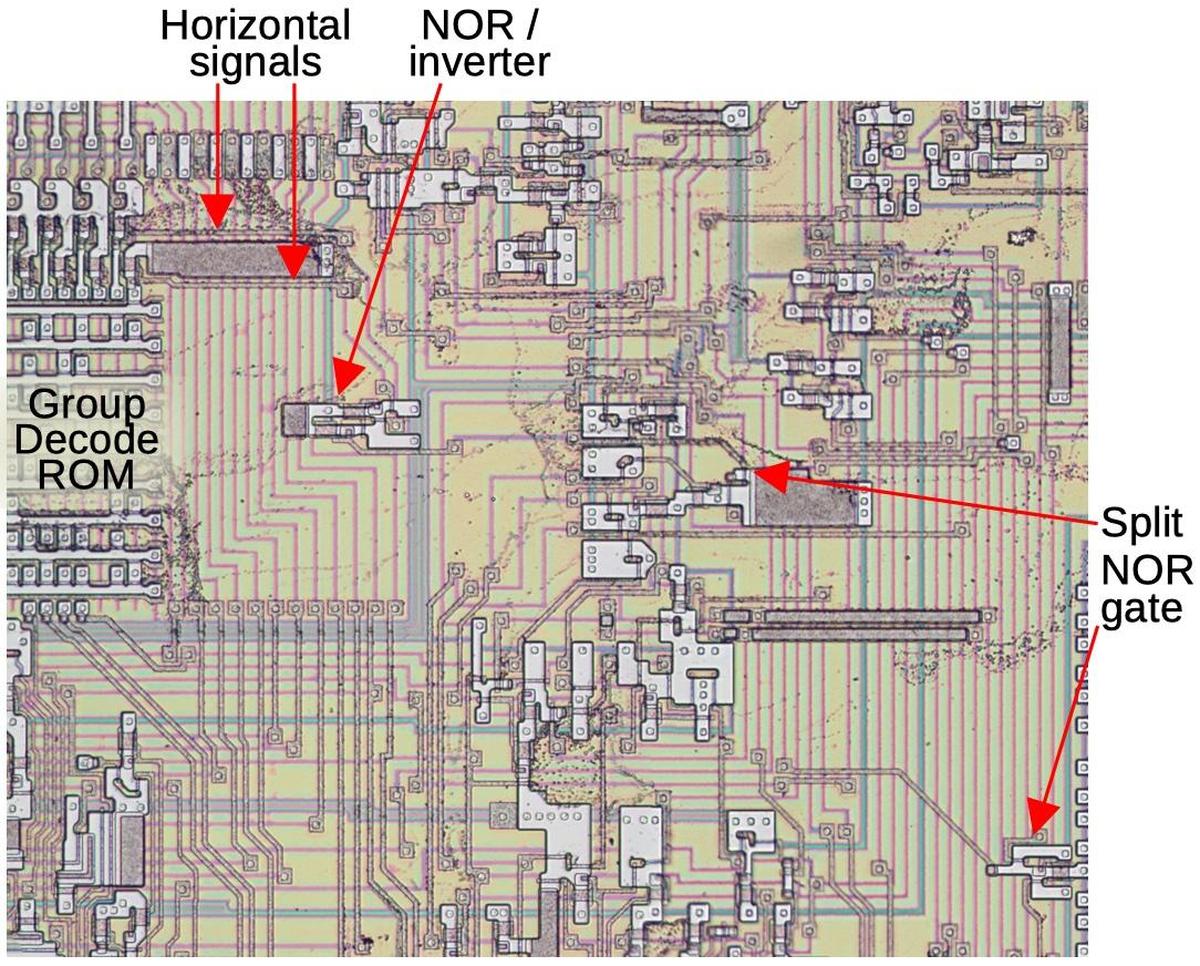

The diagram below zooms in on the Constant ROM, located next to the adder. The Constant ROM is implemented as a PLA (programmable logic array), a two-level structured arrangement of gates. The first level (bottom) selects the desired correction constant, while the second level (middle) generates the bits of the constant: three bits plus a sign bit. The necessary correction constant is selected based on the length of the queue in words, the HL pointer, and the empty (MT) flag.

The Constant ROM is used for more than just address correction. For example, it is also used to increment the Instruction Pointer by 2 after a prefetch. Other constants are used for the 8086's string operations, which act on a block of memory. The index registers are incremented or decremented by 1 for bytes or 2 for words. When popping a value from the stack, the stack pointer is decremented, which uses the constant -2. Additional constants are required to increment and decrement the IND register when accessing words from unaligned (odd) addresses. These increment/decrement values are selected in the upper part of the Constant ROM. In total, the Constant ROM holds values from -6 to +2.

Policy

There are some "policy" decisions on prefetching, and it's interesting to see how the 8086 implements them. Prefetching is not free: there is a tradeoff when performing a prefetch between saving time later versus delaying memory accesses from an executing instruction. Moreover, if a jump operation takes place, the prefetch queue is discarded and the memory cycles were wasted. Thus, the length of the queue is an "extremely tricky design problem, because performance can deteriorate if the queue is too long as well as if it is too short."3

Intel performed simulations to determine the best queue length. A 4-byte queue provided a large benefit, while a 6-byte queue (which they chose) was slightly better. The designers were surprised to find that performance flattened out after that; they expected a much longer queue would be necessary. The 8088 process has only a 4-byte prefetch queue because its 8-bit bus changes the tradeoffs.8

The basic prefetch policy is that if a memory access and a prefetch are requested at the same time, the memory access "wins", since it is guaranteed to be useful while the prefetch is just speculative. If the queue holds 0 to 2 bytes, prefetch happens during the next free memory cycle. If the queue holds 5 or 6 bytes, no prefetch can happen, since prefetch happens a word at a time. However, if the queue holds 3 or 4 bytes, prefetch is delayed for two clock cycles, which is an interesting choice. This gives an instruction more opportunities to perform a memory operation without being delayed by a prefetch. There is a tradeoff because maybe delaying the prefetch will waste two cycles of memory bandwidth, but performing the prefetch might waste four cycles of memory bandwidth. The motivation for this delay is that the last two bytes in the queue are less valuable because they are more likely to be discarded.

Another policy decision is how to handle a change in execution flow, such as a jump or subroutine call. The 8086 simply discards the prefetch queue and starts fetching from the new address. The 8086 designers considered better ways of handling jumps, but it wasn't practical to implement at the time. There is no intelligence if the instructions are already in the queue (e.g. jumping forward a couple of bytes). There is also no branch prediction; prefetching proceeds linearly regardless of branch instructions.

The 8086 does nothing to ensure consistency between the prefetch queue and memory if a prefetched instruction is modified in memory.9 In this case, the "stale" instruction in the queue is executed. This situation may seem contrived, but self-modifying code used to be fairly popular, where a program would change its own instructions.10

Prefetching and the 8087 coprocessor

One feature of the 8086 microprocessor is that it supports coprocessors such as the 8087 floating point chip.11 The 8087 implements high-performance floating-point computation, performing arithmetic and transcendental computations up to 100 times faster than the 8086. The 8087 gets instructions in an interesting fashion, executing floating-point instructions from the 8086's instruction stream. Specifically, an "ESCAPE" opcode indicates an instruction that is performed by the 8087 rather than the 8086. However, prefetching adds a lot of complexity to the coprocessor because the 8087 monitors the bus to determine when it should execute an instruction. With prefetching, the instruction on the bus doesn't match the instruction being executed. An instruction may be executed many cycles after it was fetched over the bus. A prefetched instruction may even be discarded and never executed.

To solve this problem, the 8087 manages its own copy of the prefetch queue to determine when the 8086 would be executing a floating-point instruction. The 8087 watches the bus to see when instructions are prefetched. The 8086 provides queue status signals (QS0 and QS1) to indicate when it takes bytes from the queue or flushes the queue. These signals allow the 8087 coprocessor to keep track of the 8086's queue state so it can tell what instruction the 8086 is executing. In other words, the 8086 doesn't tell the 8087 coprocessor what to do; instead, the two chips process the instruction stream in parallel. Another complication is the 8087 coprocessor can be used with the 8088 processor chip, which has a smaller 4-byte queue. Thus, the 8087 coprocessor must detect whether it is connected to an 8086 or an 8088 and maintain its queue appropriately.

Brief history

Caching and prefetching were used in mainframe computers dating back to the 1960s. For instance, the IBM System/360 Model 91 (1966) had a cache with prefetching. Minicomputers such as the VAX 11/780 (1977) later used caching and prefetching. However, these features took a while to trickle down to microprocessors. The Motorola 68000 (1980) had a 4-byte prefetch queue. As far as I can tell, the 8086 was the first microprocessor with a prefetch queue.

We can view the 8086 as a stepping-stone towards the large caches first used externally in the 80386 and internally in the 486. The 80186 and 80286 kept the 6-byte prefetch buffer size of the 8086. The 80386 has a 16-byte prefetch buffer, although apparently due to a bug it was shrunk to 12 bytes in later revisions. As well as the prefetch queue, the 80386 supported an external cache.

Early microprocessors such as the 6502 or Z80 could fetch the next instruction while they were finishing the previous instruction. This minimal two-stage pipelining improved performance, but was much more limited than 8086-style prefetching. An Intel study found that this simple overlap provides a 35% performance increase with 15% more hardware, while implementing prefetching provided an additional 11% gain with 14% more hardware.3 This illustrates how the increasing transistor counts from Moore's law opened up new opportunities to improve performance. But it also shows diminishing returns as performance increases become smaller and more expensive.

Conclusions

Well, this was supposed to be a quick post about the prefetch queue, but the topic turned out to have a lot more complexity than I expected. A six-byte prefetch queue may seem like a simple feature to add to a processor, but it affects many parts of the system. Prefetching is tied closely to the memory access circuitry, of course, but it also required a Constant ROM to handle the difference between the execution address and the prefetch address. Prefetching also impacted the microcode, with three micro-instructions to support prefetching.

Prefetching also illustrates some of the ways that each feature and corner case of a processor like the 8086 leads to more complexity. For instance, byte-aligned (rather than word-aligned) instructions require a mechanism to fetch bytes as well as words. Supporting multiple instruction formats (1-byte opcodes, an opcode byte followed by a Mod R/M byte, multi-byte instructions) resulted in the loader state machine. The segment registers required an adder to compute the memory address for every access. Looking at the 8086 internals makes it easier to understand the motivation behind RISC processors, discarding the complexity and corner cases to create a simpler but faster processor.

I plan to continue reverse-engineering the 8086 die so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @oldbytes.space@kenshirriff. If you're interested in the 8086, I wrote about the 8086 die, its die shrink process and the 8086 registers earlier.

Notes and references

-

Steve Furber, co-creator of the ARM chip, mentions that "The first integrated CPUs were coincidentally quite well matched to semiconductor memory speeds, and were therefore built without caches. This can now be seen as a temporary aberration." See VLSI Risc Architecture and Organization p77. To make this concrete, the Apple II (1977) used a MOS 6502 processor running at about 1 megahertz while its 4116 DRAM chips could perform an access in 250 nanoseconds (4 times the clock speed). The 8086 processor ran at 5-10 MHz which meant that 250 ns DRAM chips were slower than the clock speed. Nowadays, processors run at 4 GHz but DRAM access speed is about 50 nanoseconds (1/200 the clock speed). ↩

-

Modern processors use caches to improve memory performance; caches are often megabytes in size. Accessing data from a cache is faster than accessing it from main memory, but the tradeoff is that caches are smaller. The 8086's prefetch queue is similar to a cache in some ways, but there are some key differences. First, the prefetch queue is strictly sequential. If you jump ahead two bytes, even if the prefetch queue has those instruction bytes, the processor can't use them. Second, the prefetch queue can't reuse bytes. If you have a 6-byte loop, even though all the code fits in the prefetch queue, it will be reloaded every time. Third, the prefetch queue doesn't provide any consistency. If you modify an instruction in memory a couple of bytes ahead of the PC, the 8086 will run the old instruction if it's in the queue. ↩

-

The design decisions for the 8086 prefetch cache (and many other aspects of the chip) are described in: J. McKevitt and J. Bayliss, "New options from big chips," in IEEE Spectrum, vol. 16, no. 3, pp. 28-34, March 1979, doi: 10.1109/MSPEC.1979.6367944. ↩↩↩↩

-

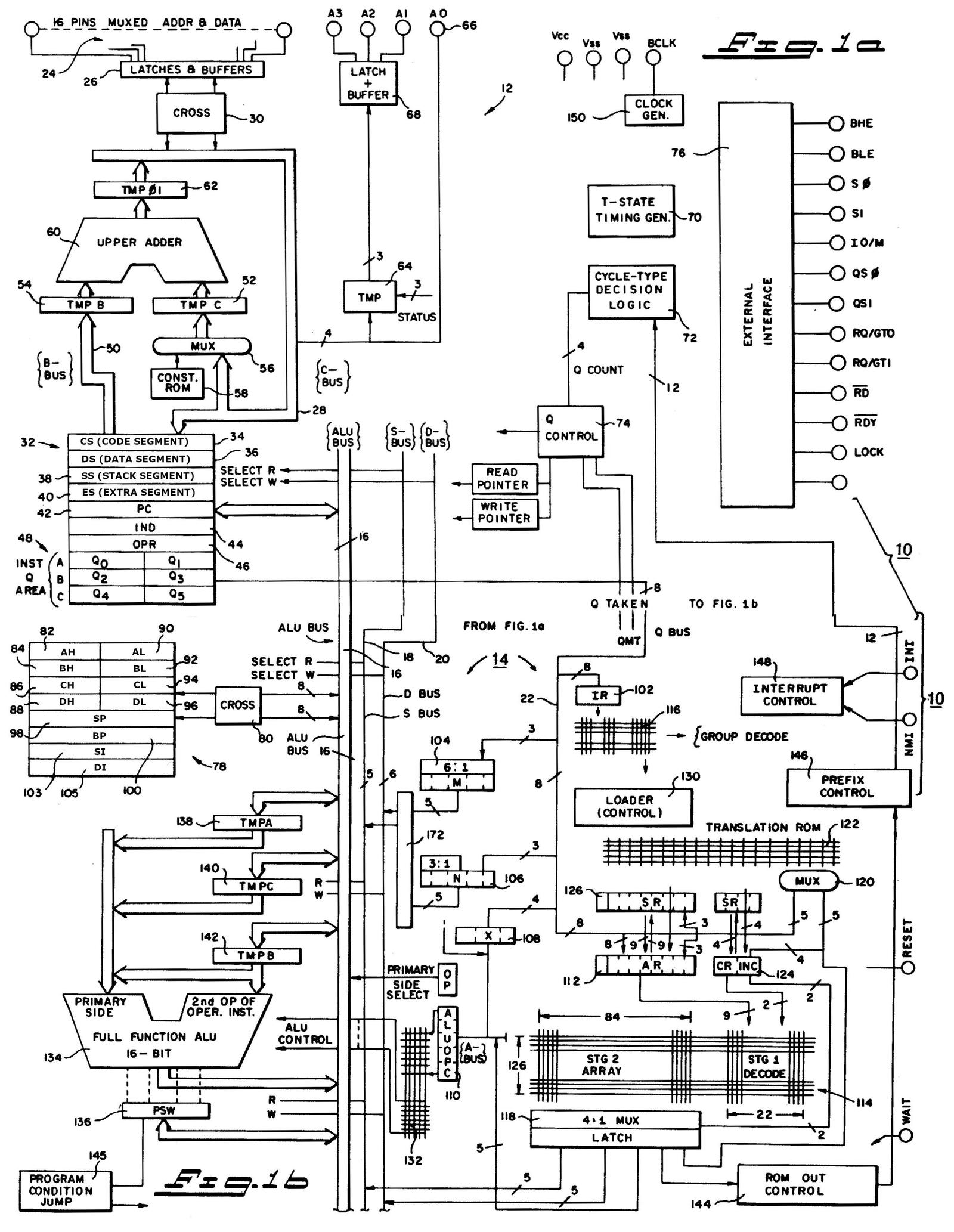

A detailed block diagram of the 8086 is provided in the patent. Conveniently, the layout of the diagram is close to the physical layout of the chip.

Detailed block diagram of the 8086, based on patent US4449184. I have modified the register names to match the common naming.I won't discuss this block diagram in detail here, but I'll point out the Q (queue) control logic in the upper center, with its associated read and write pointers. The Q bus connects the queue to various parts of the instruction decoding circuitry.

-

Supporting misaligned memory accesses (i.e. accessing a word at an odd address) adds complexity to the 8086. It's not surprising that many RISC chips prohibit unaligned accesses. On SPARC, for instance, an unaligned access fails with a "bus error", which the Sun programmers out there probably recognize. ARM processors before ARMv7 didn't support unaligned accesses. RISC-V supports misaligned data accesses but not misaligned instructions. ↩

-

The 8086 patent describes how the program counter in the 8086 does not hold the "real" value:

PC is not a real or true program counter in that it does not, nor does any other register within CPU, maintain the actual execution point at any time. PC actually points to the next byte to be input into queue. The real program counter is calculated by instruction whenever a relative jump or call is required by subtracting the number of accessed instructions still remaining unused in queue from PC.

↩ -

The CORR correction operation adds more complexity to the system than you might expect, with synchronization between the Bus Interface Unit and the Execution Unit. Because the correction computation uses the addressing adder, the correction operation must be synchronized with memory accesses that also use the adder. To accomplish this, the Bus Interface Unit waits until any memory operation is finished and then generates two clock cycles of "fake" memory operation, keeping the adder free for the CORR instruction. As a result, the memory control circuitry needs logic to implement this memory cycle. Meanwhile, the microcode engine is stopped until the CORR instruction completes, requiring synchronization circuitry. ↩

-

The 8088 is famous as the processor in the original IBM PC. The 8088 processor is essentially the same as the 8086 except that it has an 8-bit data bus instead of a 16-bit data bus, so it performs memory accesses a byte at a time instead of a word at a time. Internally, the 8088 is nearly identical to the 8086 but there are a few differences in microcode and in the bus circuitry. The most visible difference is that the 8088 has a 4-byte prefetch queue instead of a 6-byte prefetch queue. Simulations showed that a 4-byte queue was sufficient for the 8088. Because it fetches one byte at a time instead of two bytes, the 8088 fills the prefetch queue more slowly and wouldn't get much benefit from the larger queue. I haven't looked at the 8088's prefetch circuitry in detail, so I can't describe it exactly. ↩

-

At some point, Intel implemented consistency between cached instructions and memory. This ensures that self-modifying code will run the latest version of an instruction rather than a stale instruction in the cache. I couldn't determine exactly when this was implemented; various sources say the 486, the Pentium, or the Pentium Pro. (If you have a definitive answer, please let me know.) ↩

-

Self-modifying code can be used as a way to distinguish between the 8086 and 8088 chips in software. Since the 8086 has a 6-byte queue and the 8088 has a 4-byte queue, you can create self-modifying code that will run a prefetched instruction on the 8086 but run the modified instruction on the 8088. ↩

-

Although the 8087 is the most well-known coprocessor for the 8086, it was not the only coprocessor. The Intel 8089 input/output coprocessor provided mainframe-style I/O channels, offloading I/O processing from the 8086. More than just a DMA engine, the 8089 was a separate processor with its own instruction set. Unlike the 8087, the 8089 didn't take instructions from the 8086's instruction stream so it didn't interact with prefetching; instead, the 8086 sent a Channel Attention signal to the 8089 and the 8089 read instructions from shared memory. The 8089 was complex and expensive and wasn't very popular. The Intel 82586 Ethernet coprocessor used a similar Channel Attention scheme. ↩