In 1969, Sharp introduced the first calculator built from high-density MOS chips, the QT-8D, followed by the handheld Sharp EL-8, the world's smallest calculator at the time.[1] These calculators were high-end products, selling for $345 (about $1800 today). Integrated circuits at the time couldn't fit the entire calculator on one chip, so these calculators contained five ICs: an arithmetic chip, a decimal point chip, a keypad/display chip, a control chip, and a clock chip.

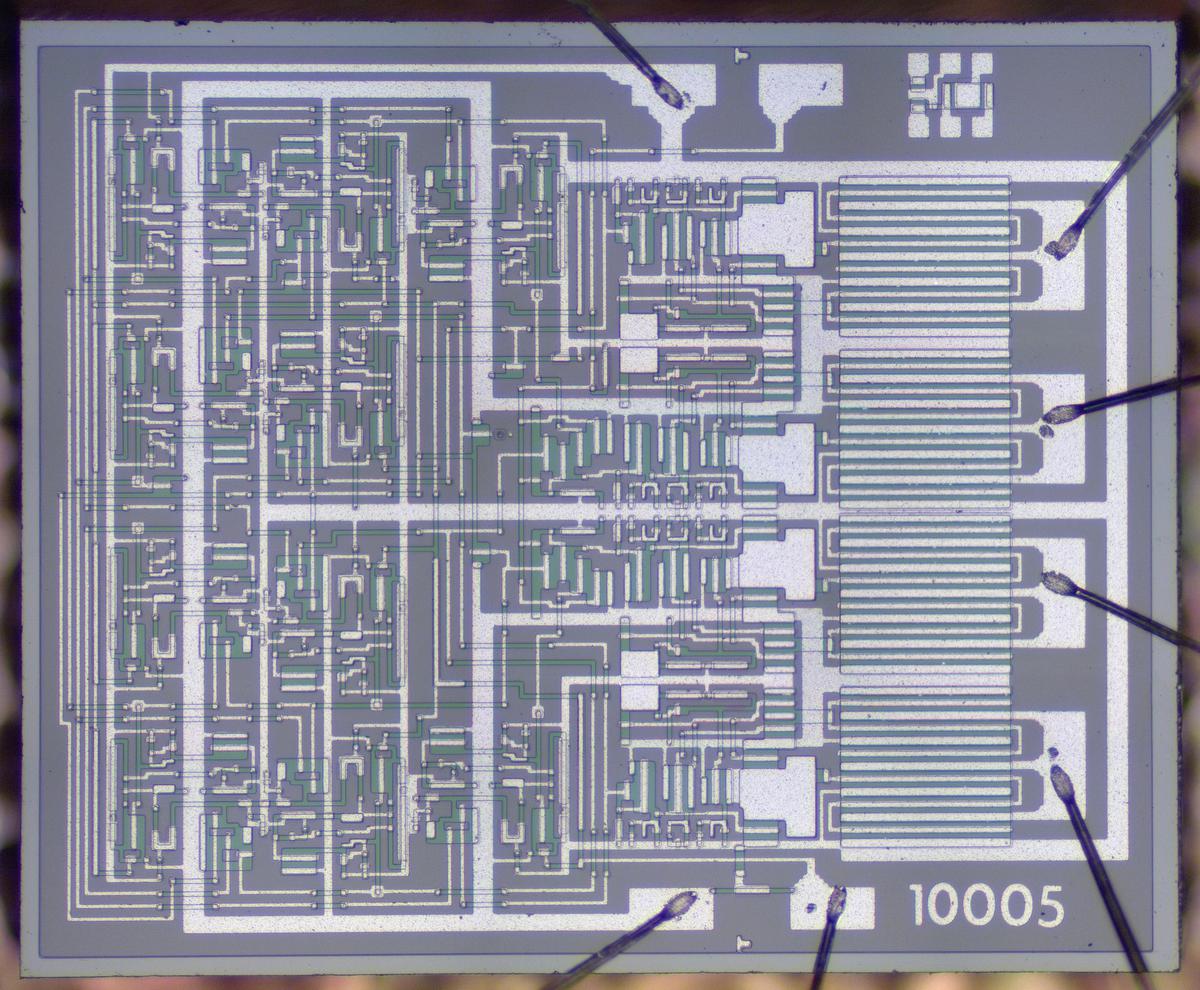

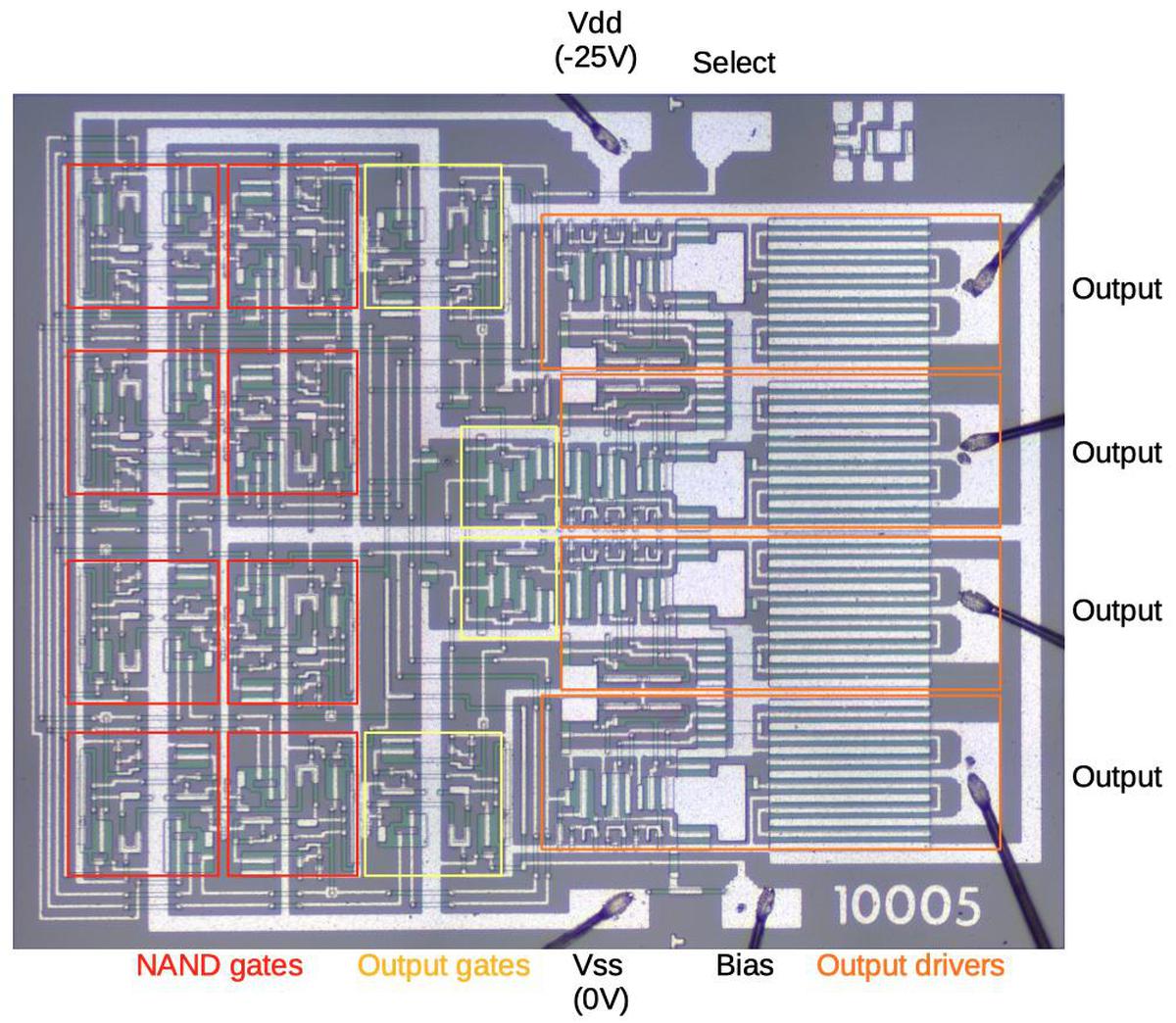

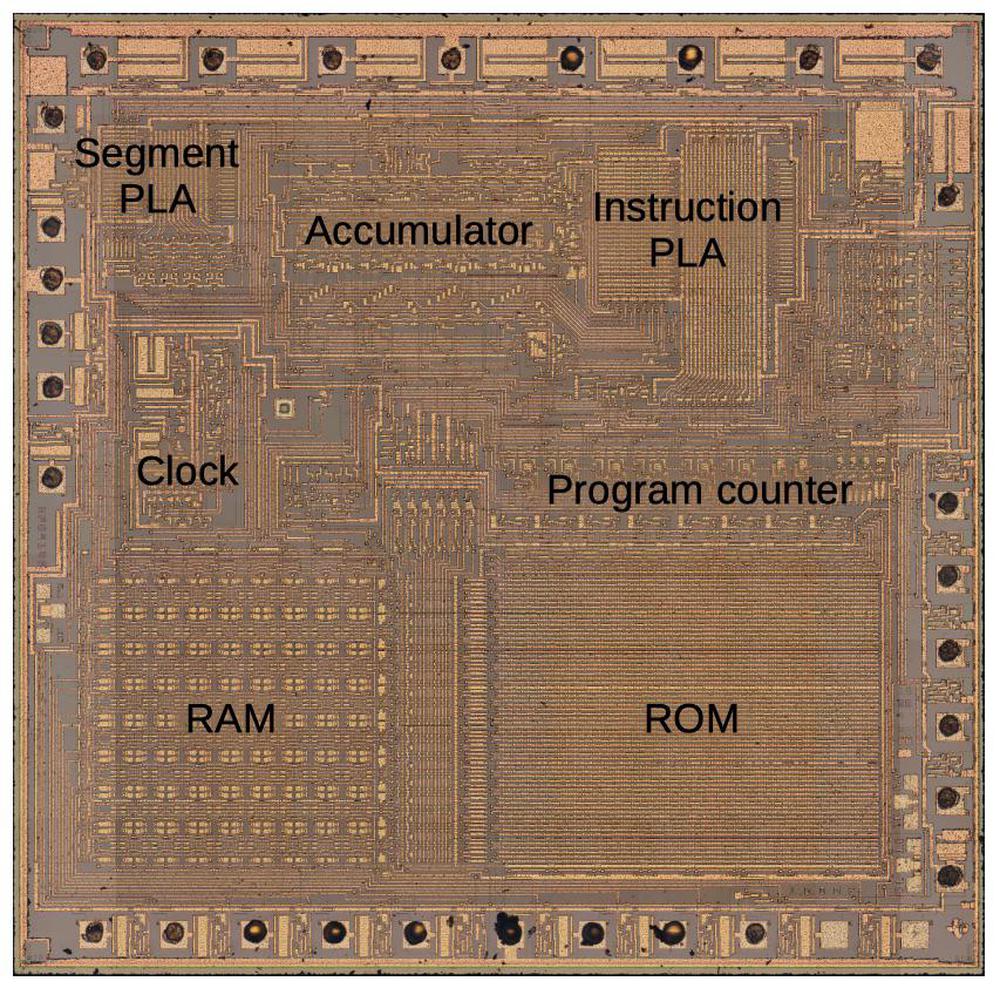

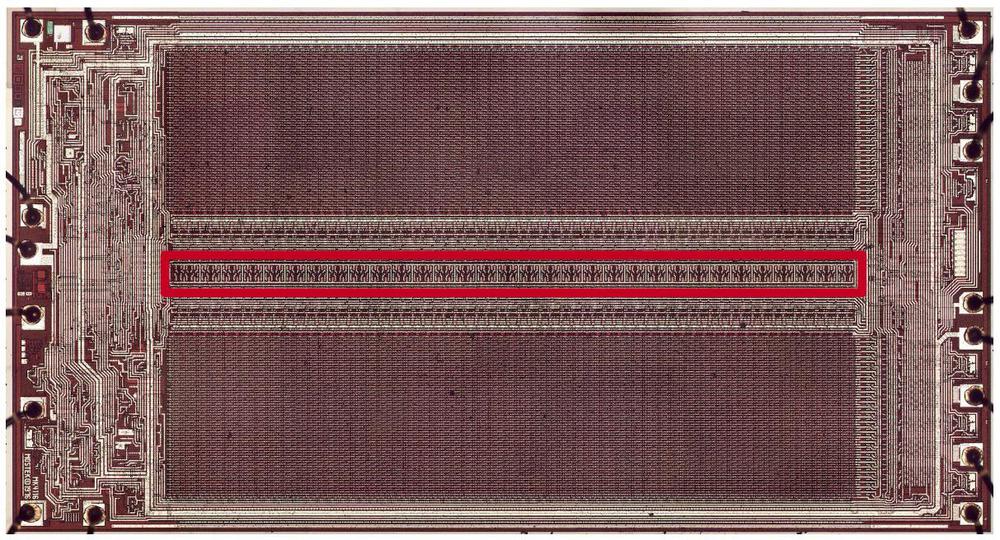

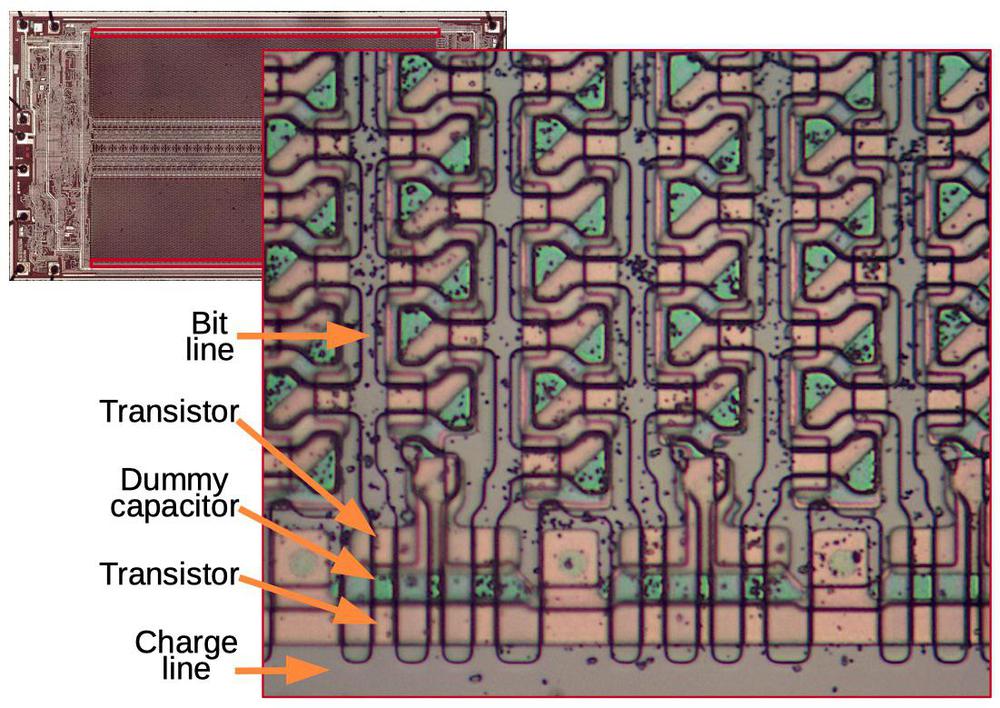

This blog post discusses the clock chip and how it generated the unusual four-phase clock signals required by the calculator. The die photo below, provided by calculator researcher Francois Gueissaz, shows the silicon die of the clock chip. The silicon substrate has a purple tint while the doped, conductive silicon is green. The metal layer on top is white. Around the edges, seven thin bond wires connect the die to the external pins.[2] This chip has about 200 transistors and implements just a dozen moderately complex logic gates. While the density of this chip is absurdly low by modern standards, it illustrates the progress of MOS integrated circuits in the late 1960s.

Although computers now all use MOS integrated circuits, the path to MOS was rocky, with MOS integrated circuits viewed as slow and unreliable in the 1960s.[4] Handheld calculators were a good match for the characteristics of MOS, though: they needed to be compact and lightweight with low power consumption, but computational speed was not important. In 1969, the Japanese calculator company Sharp signed a $30 million deal with Rockwell for this MOS-based calculator chipset, the largest MOS order in history at the time. The five chips were implemented by the Autonetics division of Rockwell.[3]

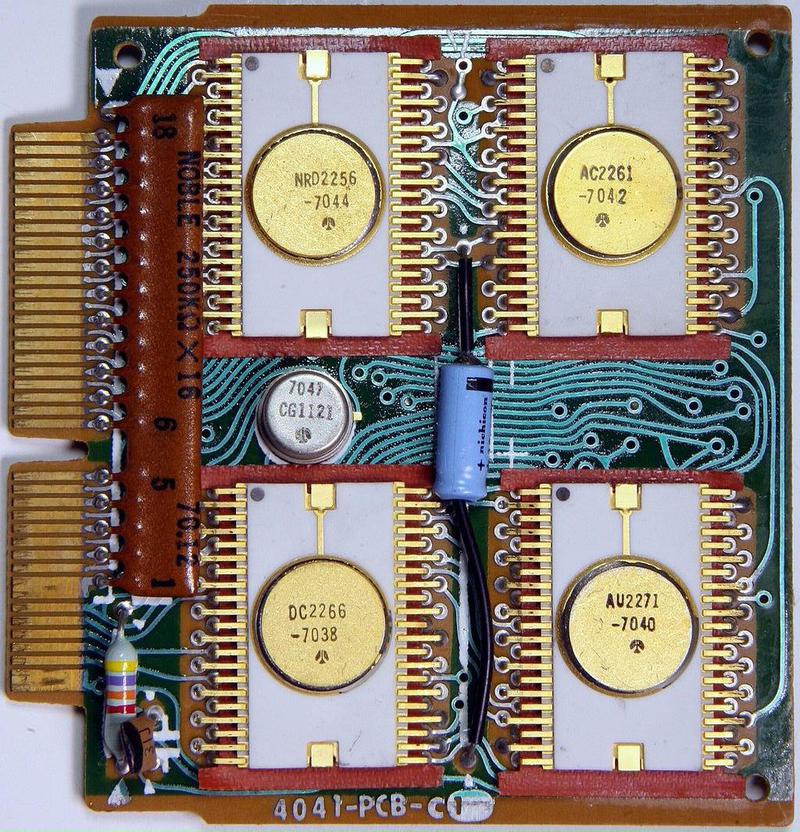

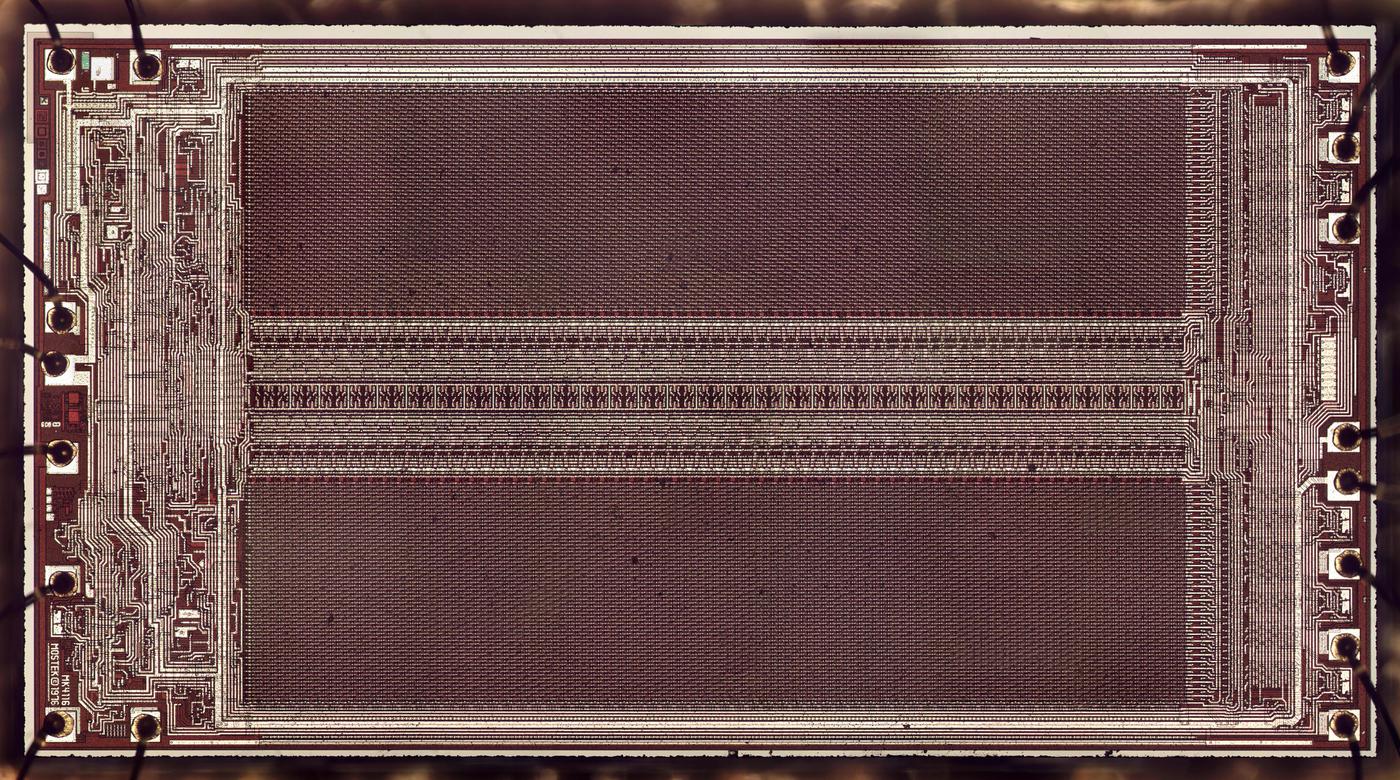

Although the Sharp calculator (above) was handheld, you can see that it was rather thick and chunky, with unusual 8-segment vacuum fluorescent tubes for its display. The photo below shows the circuit board inside the calculator. The board is dominated by the four large integrated circuits with circular golden lids. These integrated circuits were packaged as 42-pin ceramic ICs with staggered pins. Unlike modern printed circuit boards, the traces on this board are curved, showing its hand-drawn layout.

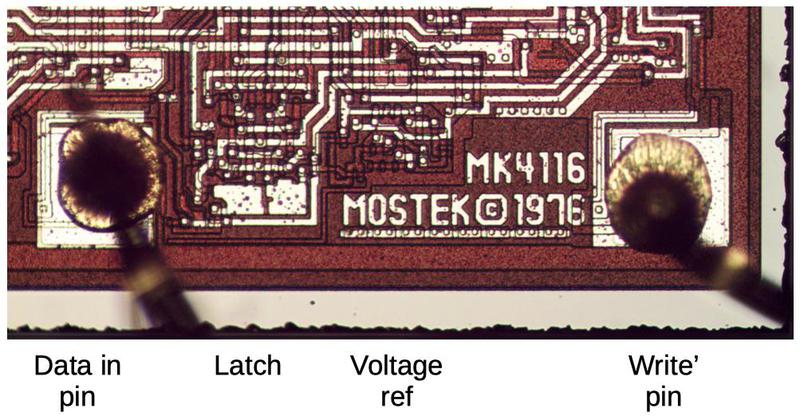

The clock IC is packaged in the small 10-pin metal can, marked with a blurry Rockwell logo (the inset shows the logo). Its part number is CG1121 (probably standing for Clock Generator), and it is similar to the CG2341 I examined. The date code 7047 indicates this IC was manufactured in the 47th week of 1970, i.e. late November.

Cutting the top off the metal-can integrated circuit reveals the tiny silicon die. Although the metal can has 10 pins, only seven pins are wired to the die. The metal tab at the top of the photo indicates pin 1 of the integrated circuit.

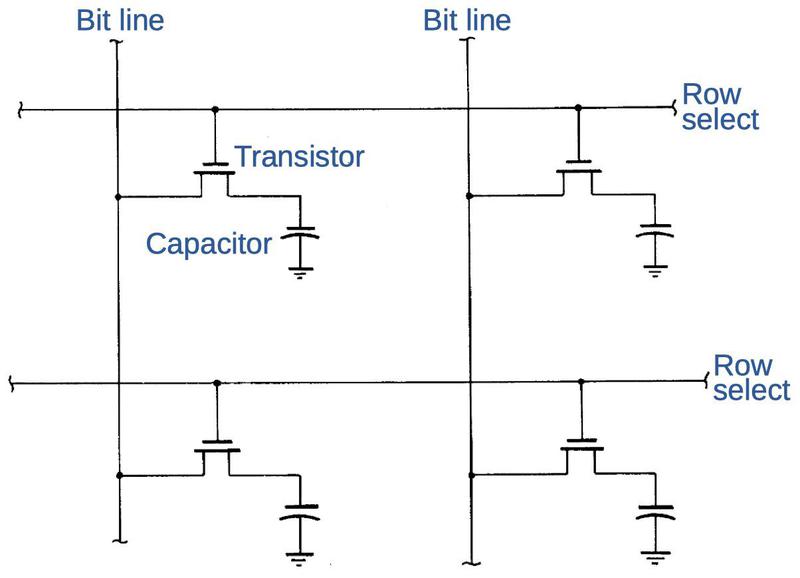

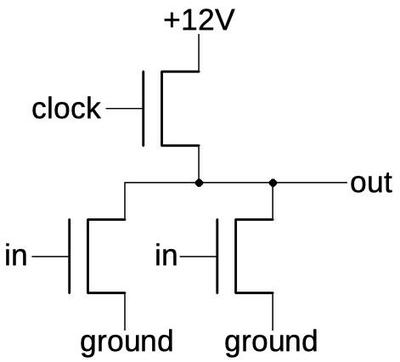

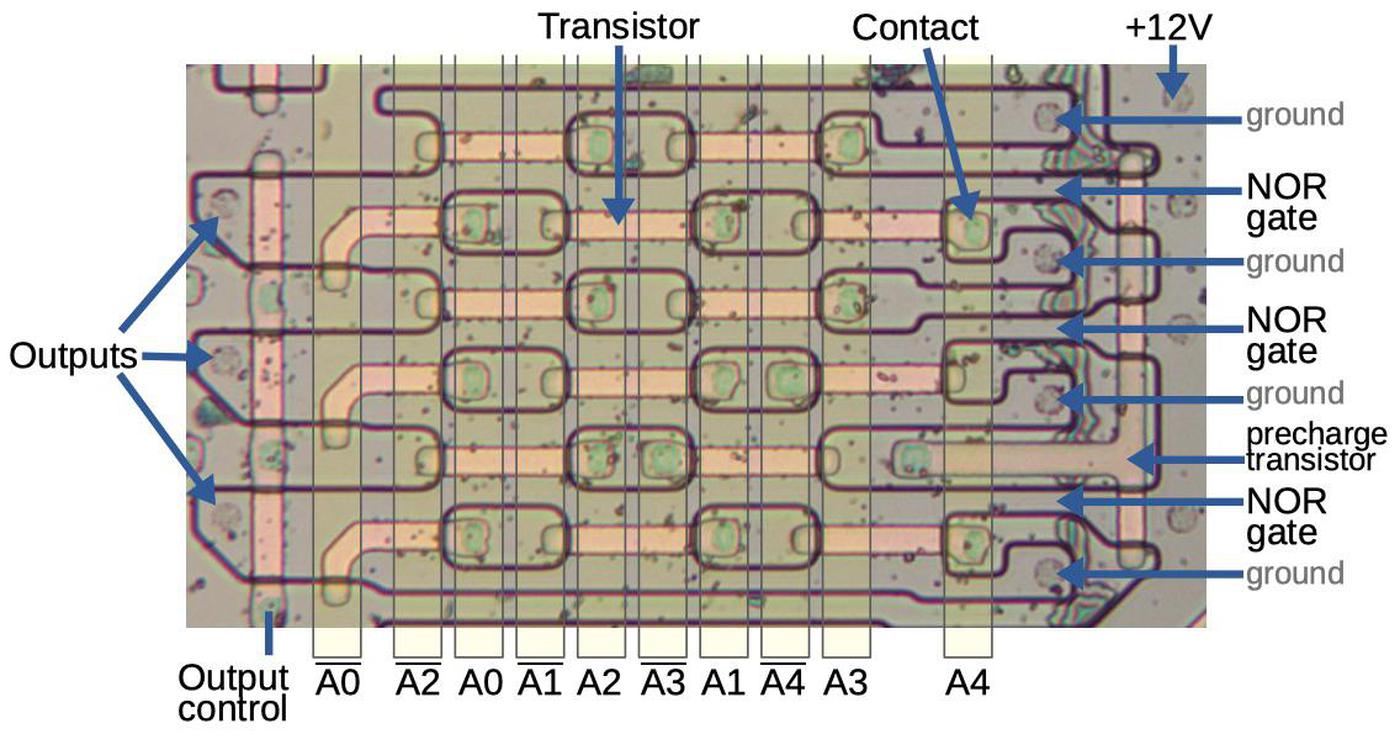

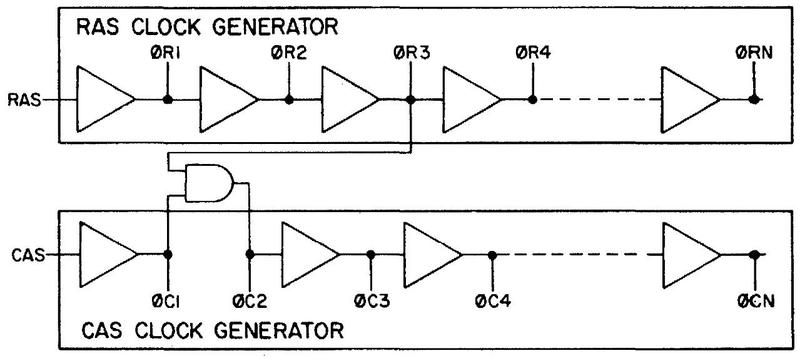

Why do the calculator chips require a complex four-phase clock? In 1966, Autonetics invented a technique for building logic circuits called four-phase logic. Unlike standard static logic gates, these logic gates held values dynamically using the capacitance of the wiring. The four-phase clock stepped the gates through sequences of precharging and then computing the logic function. This sounds complicated, but four-phase logic offered ten times the density of standard logic gates, one-tenth the power, and ten times the speed. As a result, many early high-density MOS chips used four-phase logic.[5]
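To make the precharge-then-compute idea concrete, here is a minimal Python sketch of a single dynamic gate node. It models the charge on the node capacitance as a stored boolean that is unconditionally precharged in one step and conditionally discharged in the next. It deliberately abstracts away voltages, PMOS polarity, and how the four clock phases are assigned to gates, so the class and method names are hypothetical illustrations, not a model of the actual Autonetics circuits.

```python
from typing import Callable, Sequence


class DynamicNode:
    """Hypothetical model of a logic value held as charge on a node.

    Logic levels are abstract booleans; the real chips used PMOS with
    negative voltages, which this sketch ignores.
    """

    def __init__(self, function: Callable[[Sequence[bool]], bool]) -> None:
        self.function = function  # logic function computed by the gate
        self.value = False        # charge currently stored on the node

    def precharge(self) -> None:
        # Precharge step: charge the node to logic 1 regardless of inputs.
        self.value = True

    def evaluate(self, inputs: Sequence[bool]) -> None:
        # Compute step: discharge the node only if the function is 0;
        # otherwise the precharged value simply remains on the capacitance.
        if not self.function(inputs):
            self.value = False

    def read(self) -> bool:
        # The value persists between steps only because of the stored charge.
        return self.value


def nand(inputs: Sequence[bool]) -> bool:
    return not all(inputs)


gate = DynamicNode(nand)
results = []
for a, b in [(False, False), (False, True), (True, False), (True, True)]:
    gate.precharge()
    gate.evaluate([a, b])
    results.append(gate.read())
print(results)  # [True, True, True, False]
```

Note that evaluation can only remove charge, never add it, which is why every computation must be preceded by a precharge step; the clock phases provide exactly this sequencing.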

Constructing transistors, resistors, and capacitors

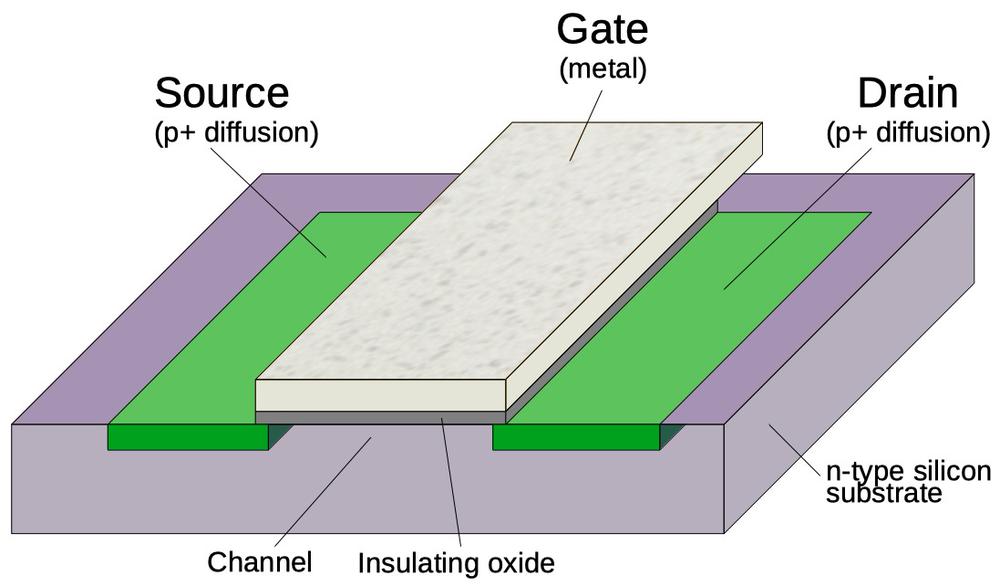

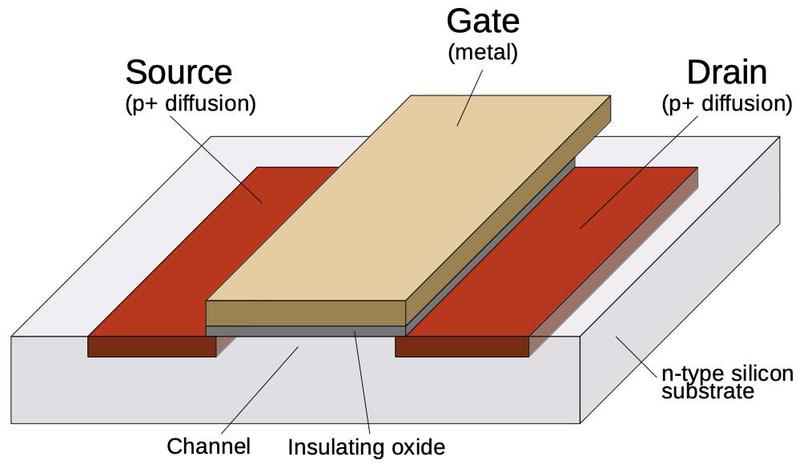

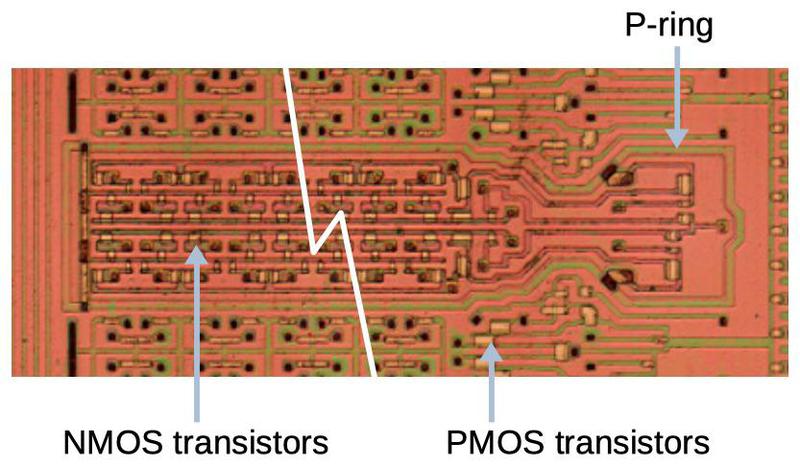

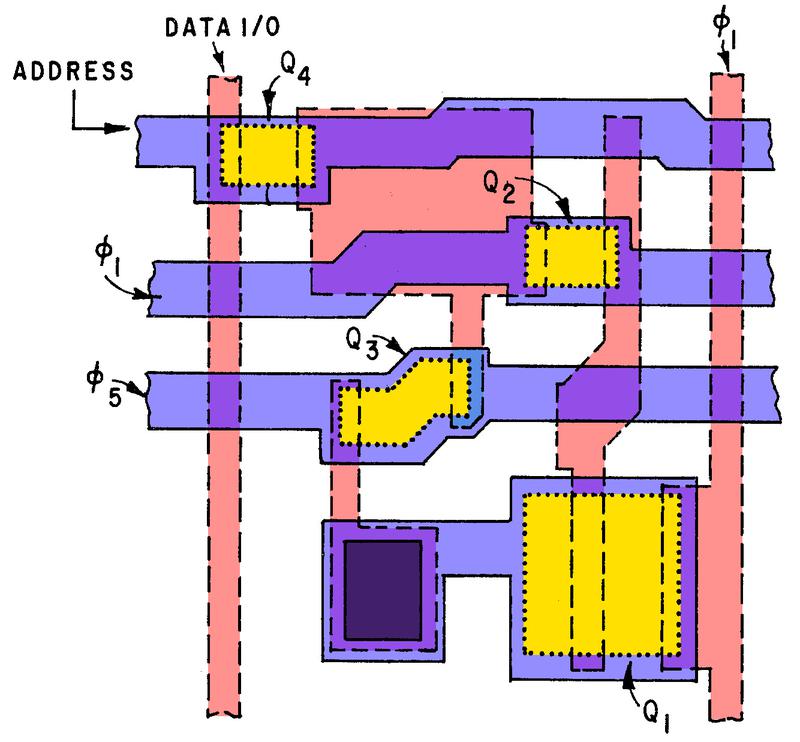

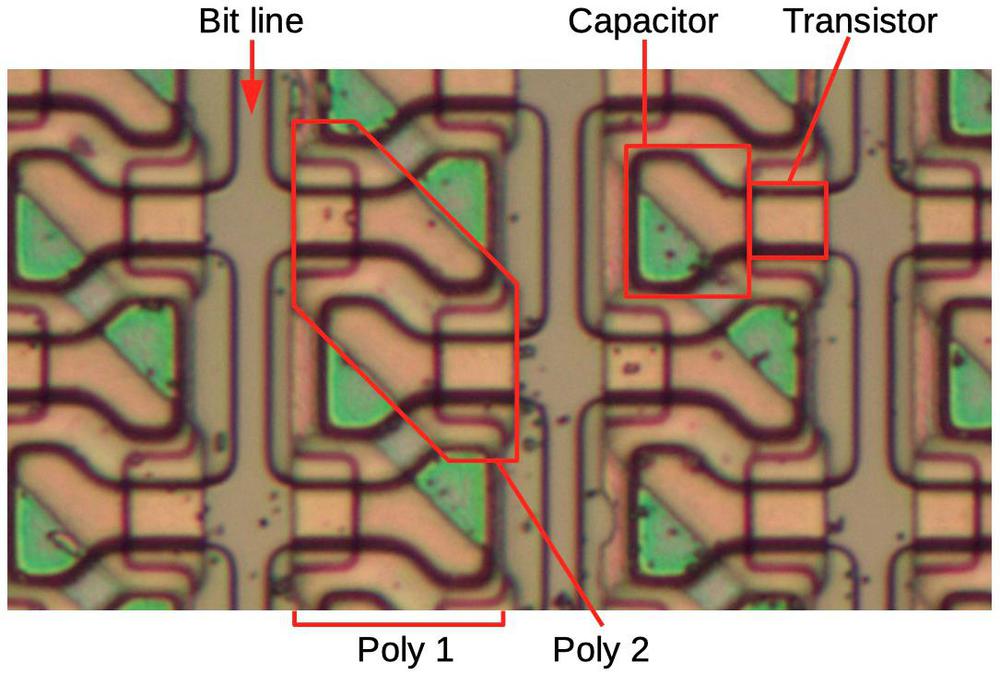

Transistors are the key component of the chip. The diagram below shows a metal-gate PMOS transistor, the (somewhat primitive) type of transistor used in this IC. At the bottom, two regions of silicon (green) are doped to make them conductive, forming the source and drain of the transistor. The gate is formed by a metal strip between the silicon regions, separated from the silicon by a thin layer of insulating oxide. (These three layers, Metal, Oxide, and Semiconductor, give the MOS transistor its name.) The transistor can be considered a switch between the source and drain, controlled by the gate. Simplifying somewhat, a PMOS transistor turns on when the gate is pulled negative (-25 volts) and turns off when the gate is at 0 volts. (These early PMOS transistors required an inconveniently large negative voltage.)
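As a rough illustration of this switch behavior, the sketch below treats a PMOS transistor as an ideal switch that conducts when its gate is sufficiently more negative than its source. The threshold of -4 V is an assumption chosen only for illustration, not a measured property of this chip, and real transistors turn on gradually rather than abruptly.

```python
# Hypothetical threshold voltage for an early PMOS device (illustrative only).
V_THRESHOLD = -4.0  # volts; PMOS thresholds are negative


def pmos_is_on(v_gate: float, v_source: float, v_t: float = V_THRESHOLD) -> bool:
    """Ideal-switch model: a PMOS transistor conducts when the gate is
    more negative than the source by at least |v_t|."""
    return (v_gate - v_source) <= v_t


# With the source at ground (0 V):
print(pmos_is_on(v_gate=-25.0, v_source=0.0))  # True: gate pulled negative
print(pmos_is_on(v_gate=0.0, v_source=0.0))    # False: gate at ground
```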

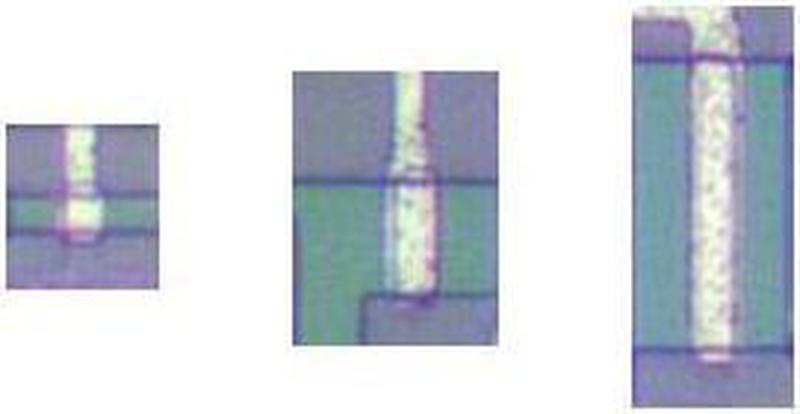

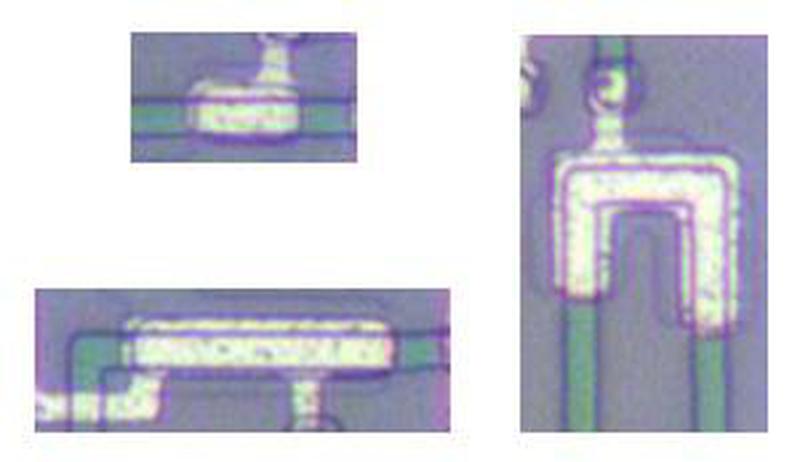

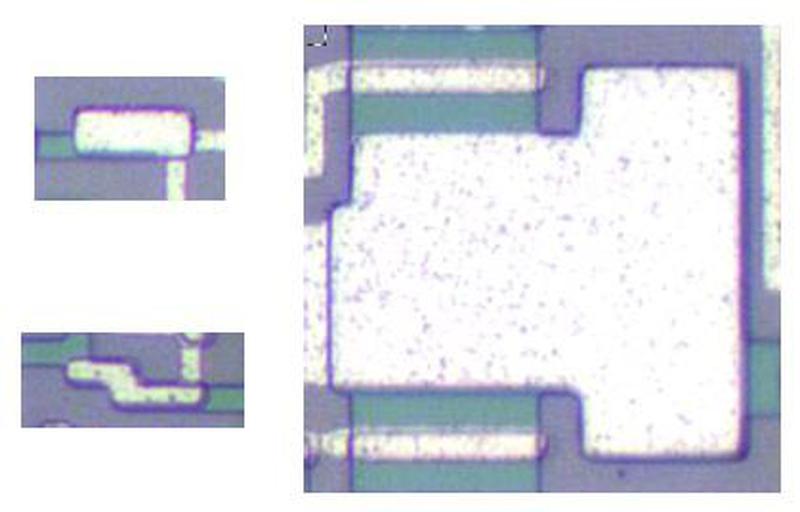

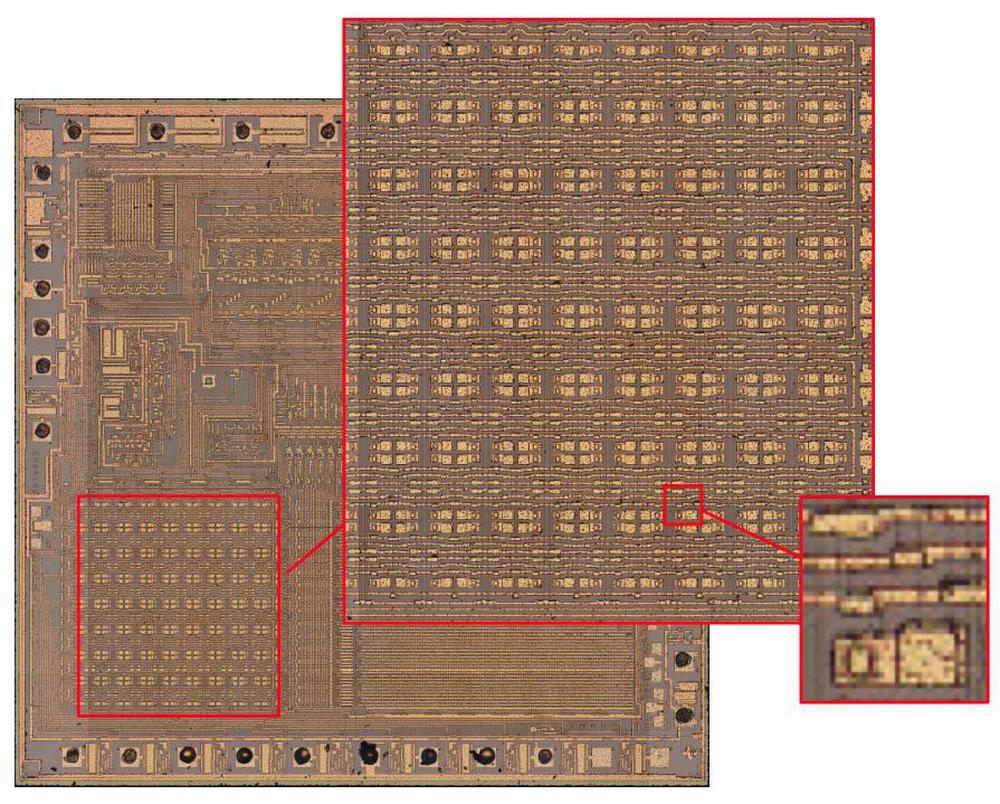

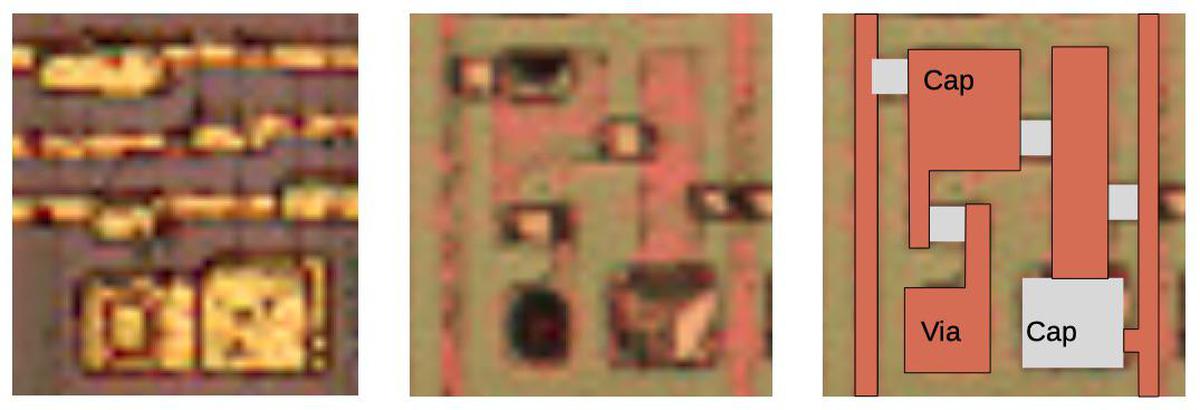

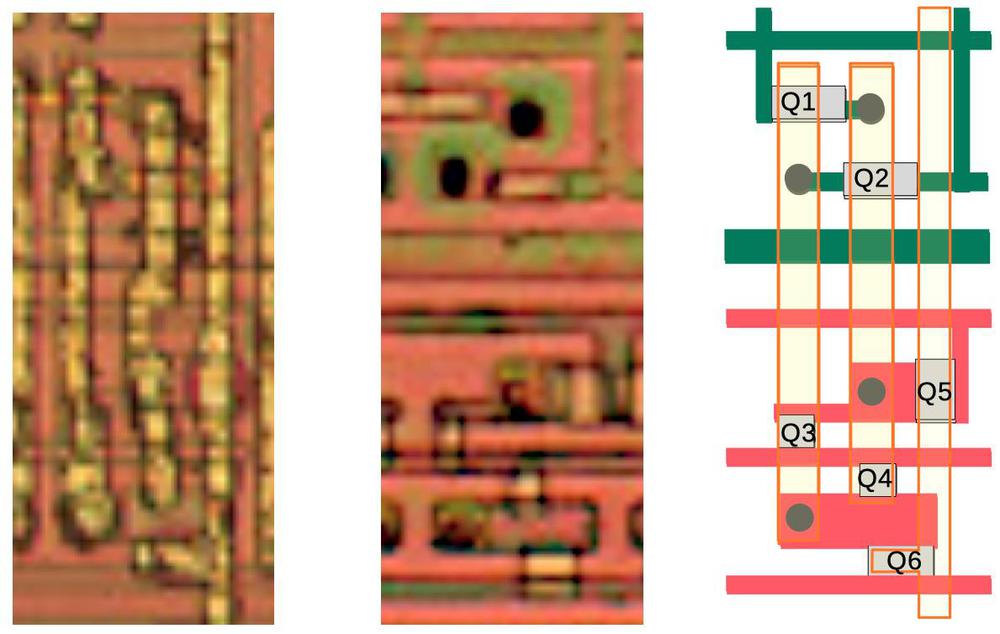

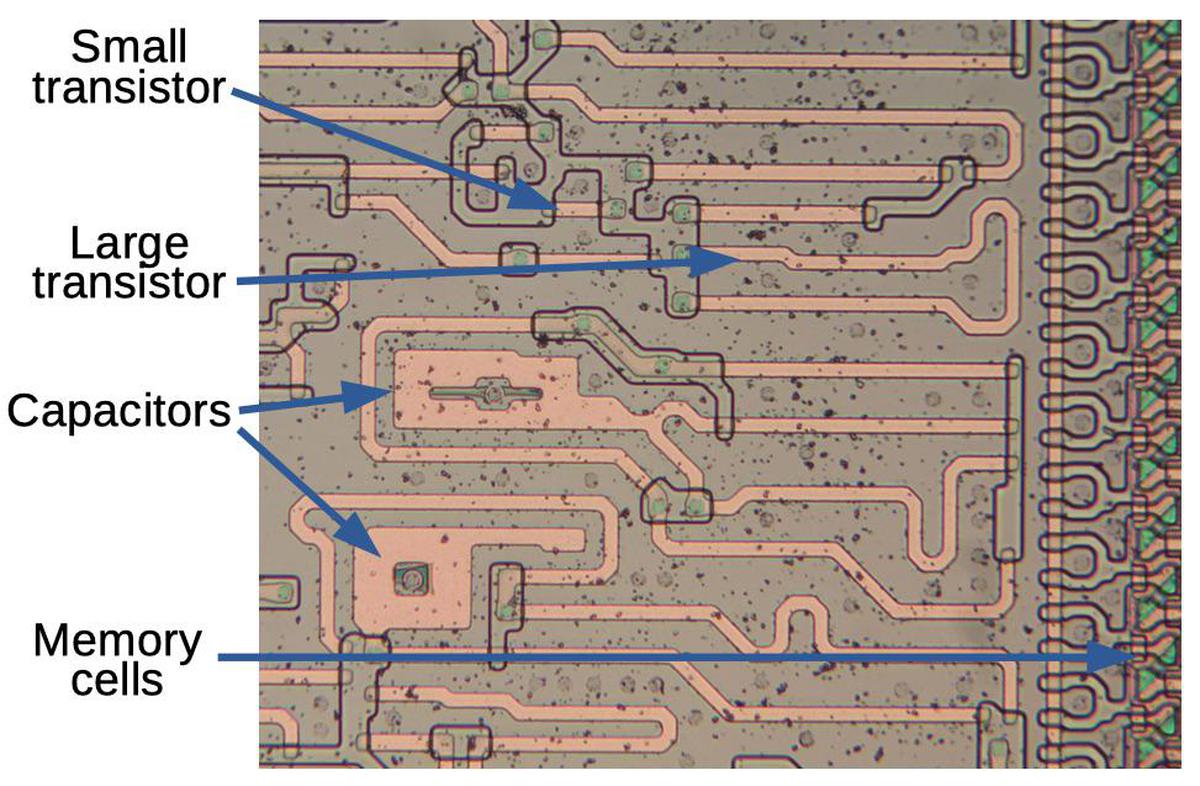

The photos below show transistors on the die as they appear under a microscope. The silicon and metal layers match the diagram above; the doped silicon is greenish while the metal layer on top is white. The gate is formed where the metal and silicon overlap, with a faint oval where the oxide is thinned. These transistors come in three different sizes, since wider transistors allow higher current; each transistor is carefully sized according to the current its circuit requires.

The next important component is the resistor; the photo below shows three resistors. These resistors may look like transistors, and that's because they are transistors. While the transistors above were widened to support more current, these transistors are made longer, so the long channel restricts the current flow, making them act as resistors. The metal gate of these transistors is tied to -25 volts, so the transistors are always on rather than operating as switches.
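Why a long transistor acts as a resistor can be seen from the standard first-order (long-channel, linear-region) approximation for a MOS transistor that is fully on with a small voltage across it. This is the general textbook model, not one fitted to this chip: μ is the carrier mobility, C_ox the gate-oxide capacitance per unit area, W and L the channel width and length, V_GS the gate-to-source voltage, and V_T the threshold voltage (both negative for PMOS, hence the magnitudes).

```latex
R_{\text{on}} \approx \frac{L}{\mu\, C_{ox}\, W \left(\lvert V_{GS}\rvert - \lvert V_T\rvert\right)}
```

Because the resistance scales with L/W, lengthening the channel raises the resistance, while widening it (as in the switching transistors) lowers the resistance and allows more current.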

The final important component of the integrated circuit is the capacitor. A capacitor is formed by using metal for one plate and doped silicon (green) for the other plate, separated by the insulating oxide layer. The photo below shows two small capacitors and one large capacitor, at the same scale. The large capacitor is used in the output circuitry; the metal stripes above and below it are transistors that drive it.
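To first order, such a metal-oxide-silicon capacitor follows the parallel-plate formula, which suggests why the output circuitry needs a physically large capacitor: assuming the oxide thickness t_ox is fixed by the process, more capacitance requires more plate area A. Here ε_ox is the permittivity of silicon dioxide; the formula ignores fringing fields and depletion in the silicon plate.

```latex
C \approx \frac{\varepsilon_{ox}\, A}{t_{ox}}, \qquad \varepsilon_{ox} \approx 3.9\,\varepsilon_0
```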

Implementing an inverter and NAND gate

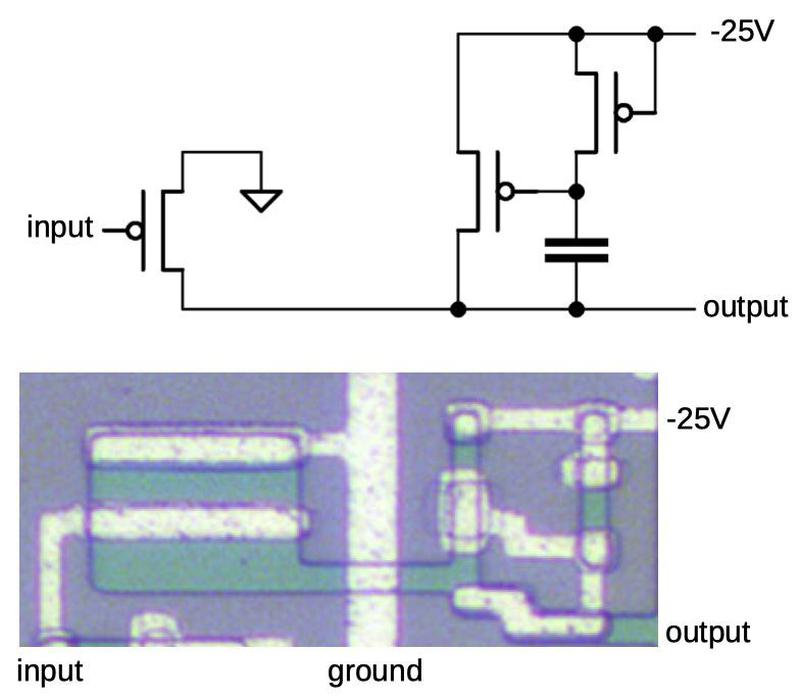

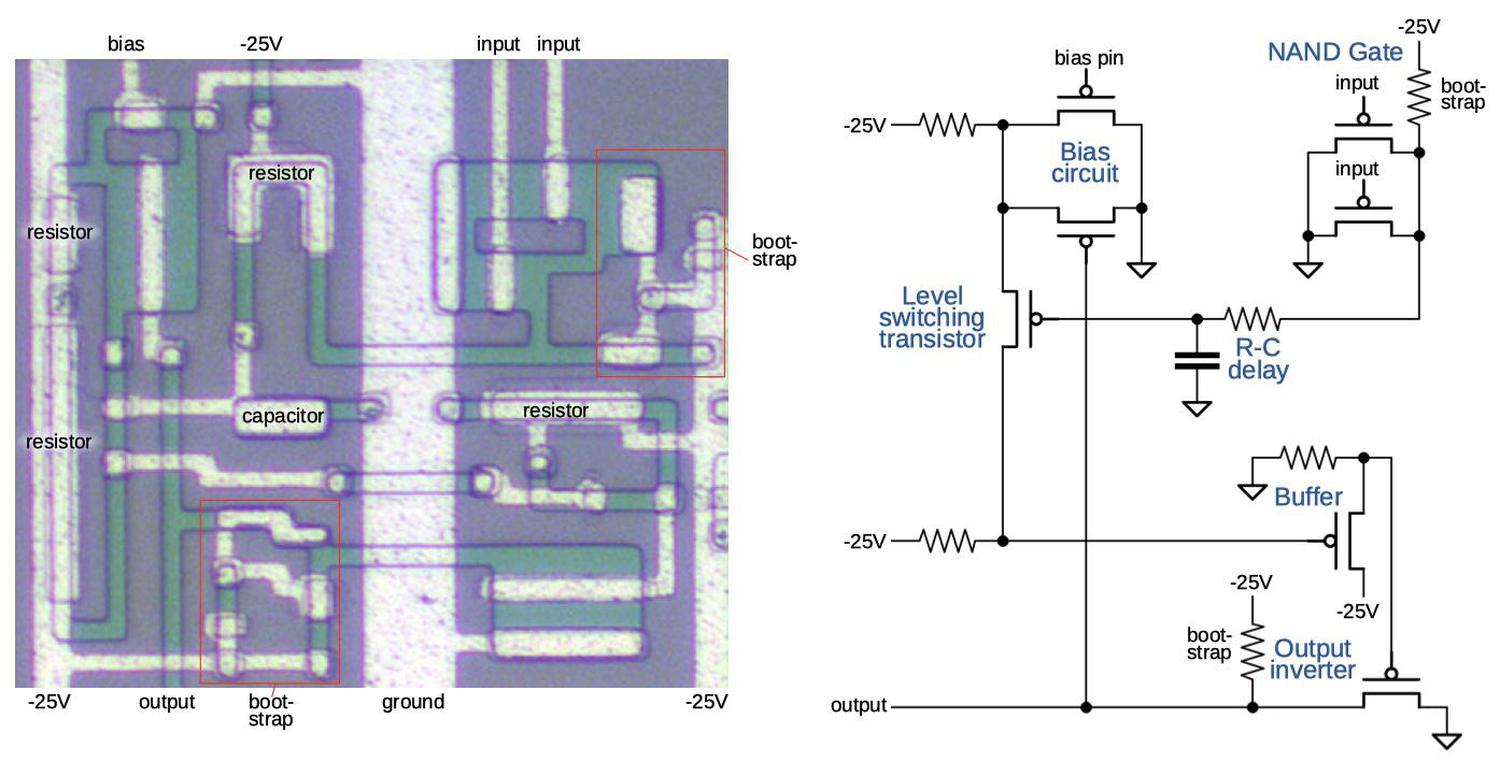

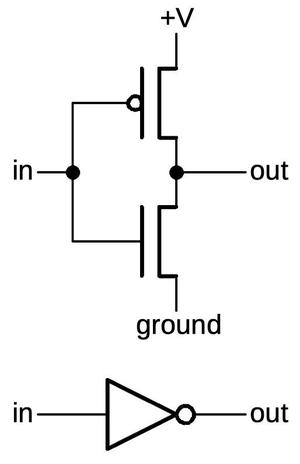

With these components, logic gates can be constructed. The schematic below shows how an inverter is implemented in the IC. The layout of the schematic matches the die image underneath, so hopefully the transistors and capacitor can be recognized. If the input is low, the input transistor turns on, pulling the output to ground (i.e. high). If the input is high, the input transistor turns off and the "bootstrap load" (the tricky circuit on the right) pulls the output to -25V (i.e. low). Thus, the circuit inverts the input.
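The inverter's behavior, including this chip's inverted voltage convention (ground is logic high, -25 V is logic low), can be captured in a few lines. This is a switch-level sketch that treats the bootstrap load simply as a pull-down to -25 V; the function name and the -12.5 V switching point are hypothetical choices for illustration.

```python
GROUND = 0.0          # logic high in this PMOS convention
NEGATIVE = -25.0      # logic low
SWITCH_POINT = -12.5  # hypothetical input level below which the transistor is on


def pmos_inverter(v_in: float) -> float:
    """Switch-level model of the inverter; the bootstrap load is treated
    as a plain pull-down to -25 V."""
    input_transistor_on = v_in < SWITCH_POINT
    # On: the transistor connects the output to ground (high).
    # Off: the load pulls the output to -25 V (low).
    return GROUND if input_transistor_on else NEGATIVE


print(pmos_inverter(NEGATIVE))  # 0.0
print(pmos_inverter(GROUND))    # -25.0
```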

Conceptually, you can think of the bootstrap load as a pull-down resistor. The implementation is complex to compensate for the poor characteristics of transistors at the time. The capacitor acts as a charge pump, providing a necessary voltage boost when the circuit switches. (For more details on bootstrap loads, see my earlier article.)

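The implementation of a NAND gate is similar to the inverter above, but with multiple input transistors in parallel. If any input is low, the corresponding input transistor turns on, pulling the output to ground (i.e. high). Only when all inputs are high are all the input transistors off, letting the bootstrap load pull the output to -25V (i.e. low). This is exactly the behavior required of a NAND gate.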
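Extending the same switch-level idea, the sketch below models the parallel input transistors: any low input pulls the output high. As before, the constants and the function name are hypothetical, and the bootstrap load is reduced to a simple pull-down.

```python
from typing import Sequence

GROUND = 0.0          # logic high
NEGATIVE = -25.0      # logic low
SWITCH_POINT = -12.5  # hypothetical input level below which a transistor is on


def pmos_nand(inputs: Sequence[float]) -> float:
    """Switch-level model of the PMOS NAND gate.

    The input transistors sit in parallel between the output and ground,
    so any low input turns one on and pulls the output high (ground).
    Only when every input is high are all transistors off, letting the
    load pull the output low (-25 V).
    """
    any_transistor_on = any(v < SWITCH_POINT for v in inputs)
    return GROUND if any_transistor_on else NEGATIVE


for a in (NEGATIVE, GROUND):
    for b in (NEGATIVE, GROUND):
        print(a, b, "->", pmos_nand([a, b]))
```

With a single input, pmos_nand reduces to the inverter described above, which matches the idea that the inverter is just a one-input version of this circuit.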

The NAND delay gate

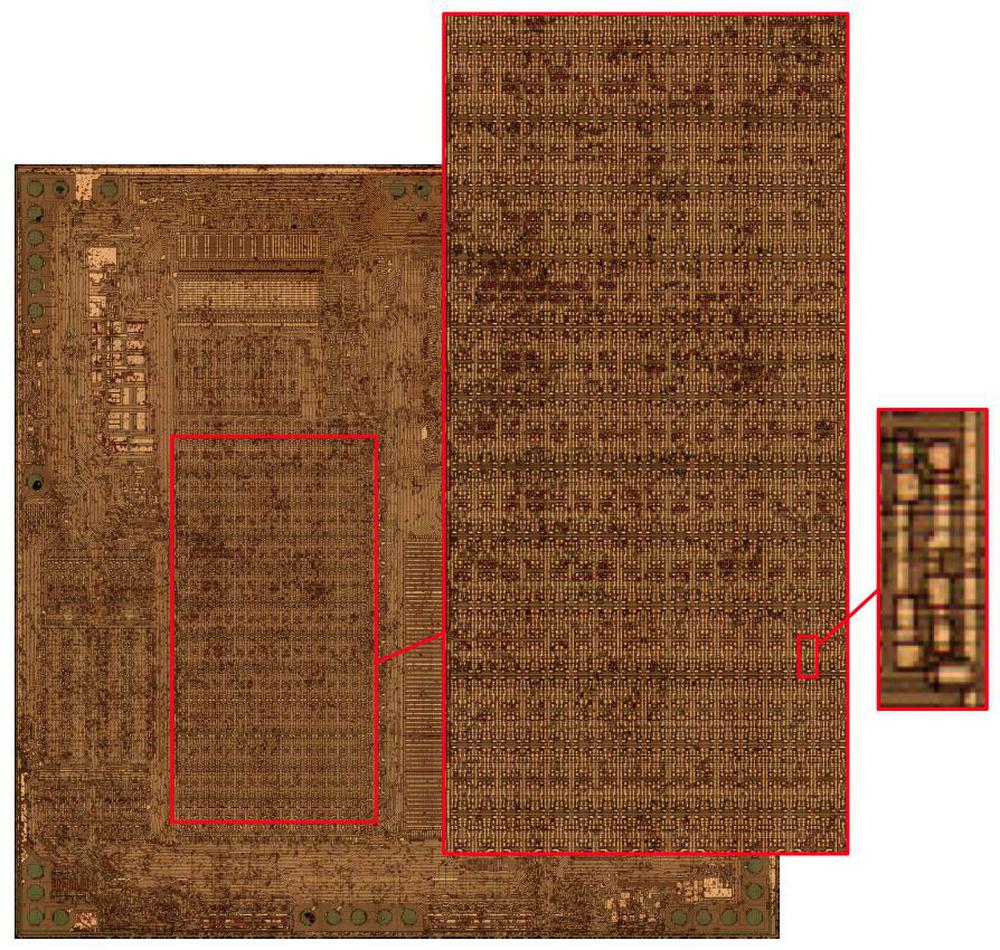

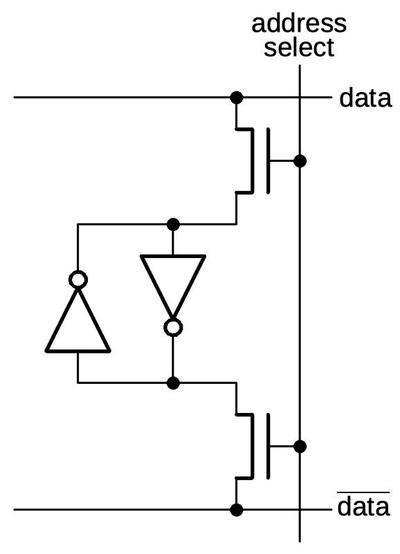

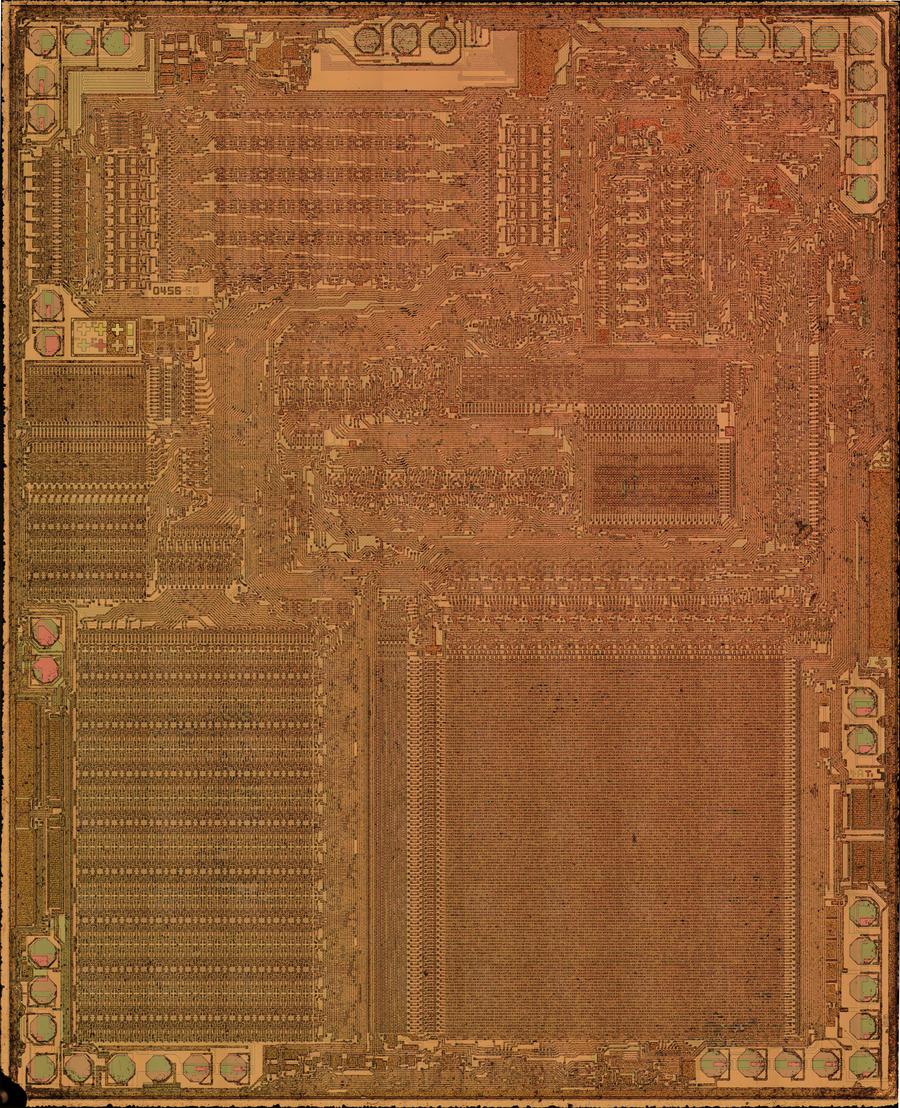

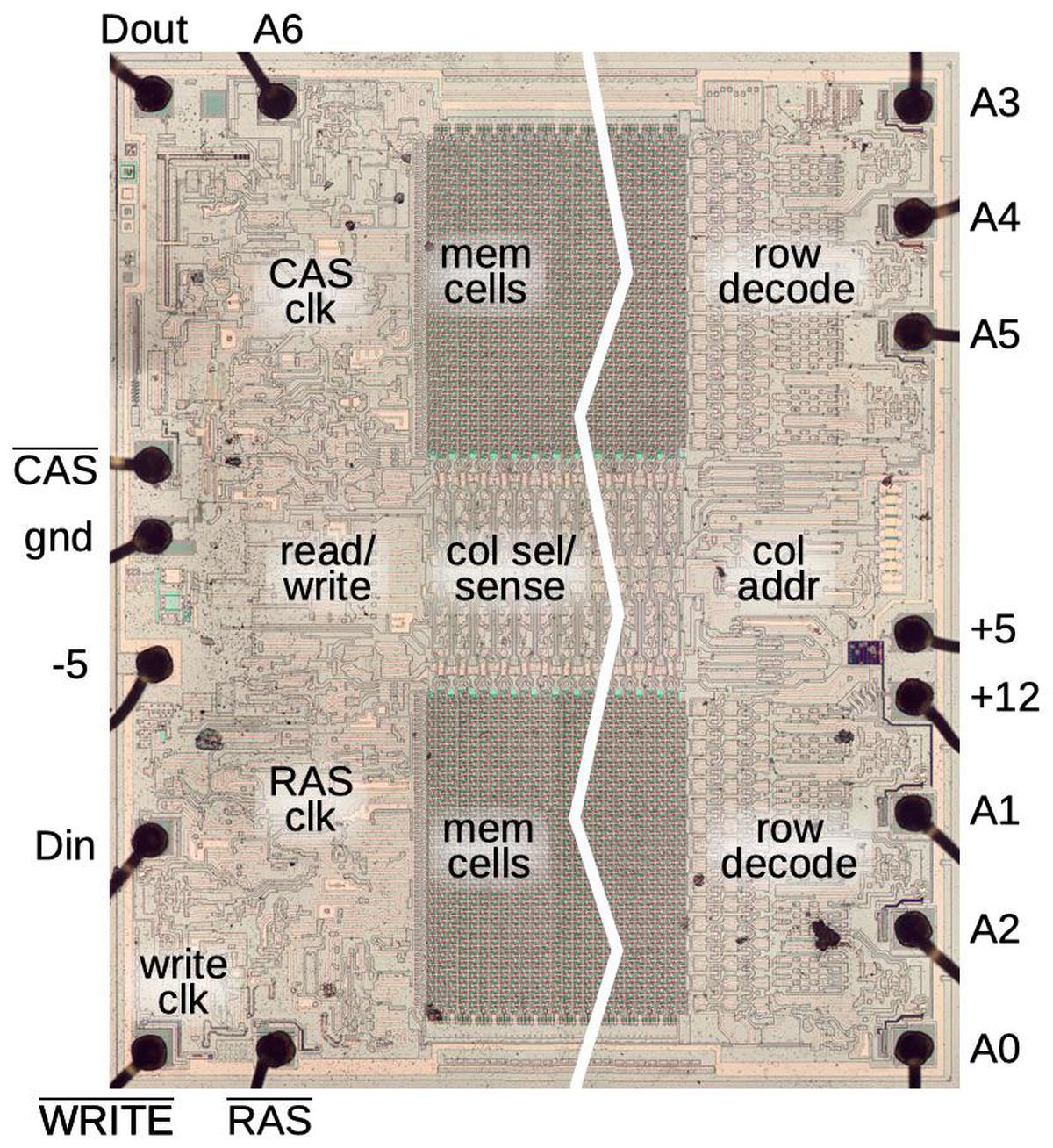

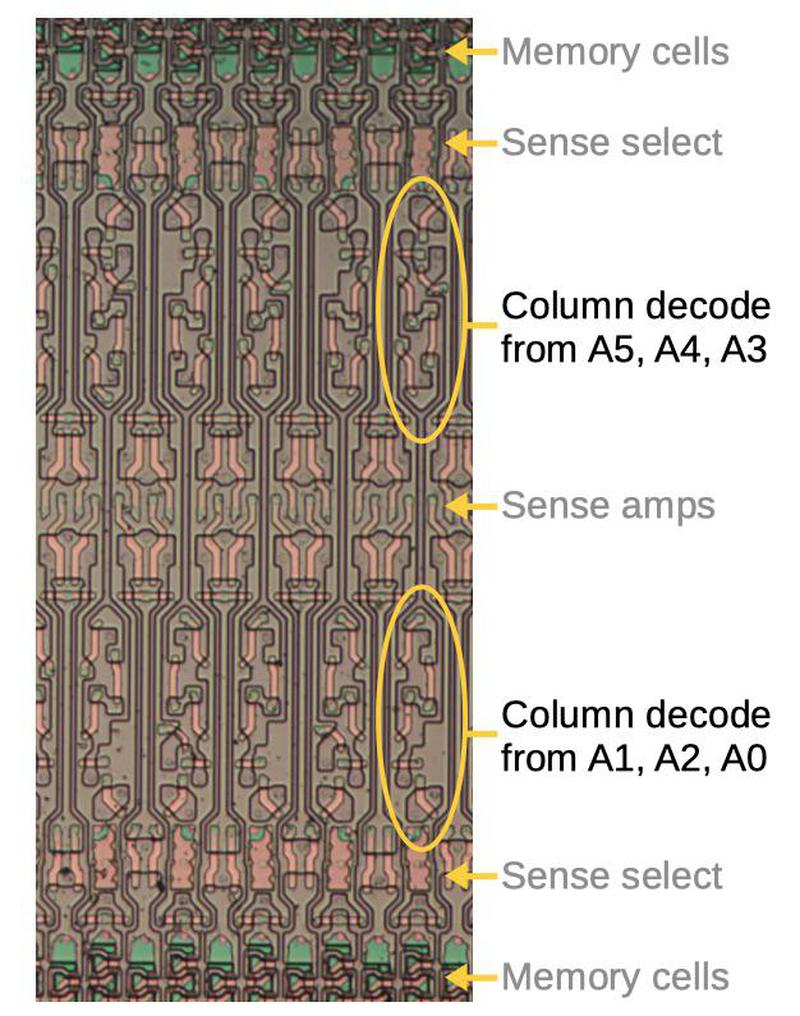

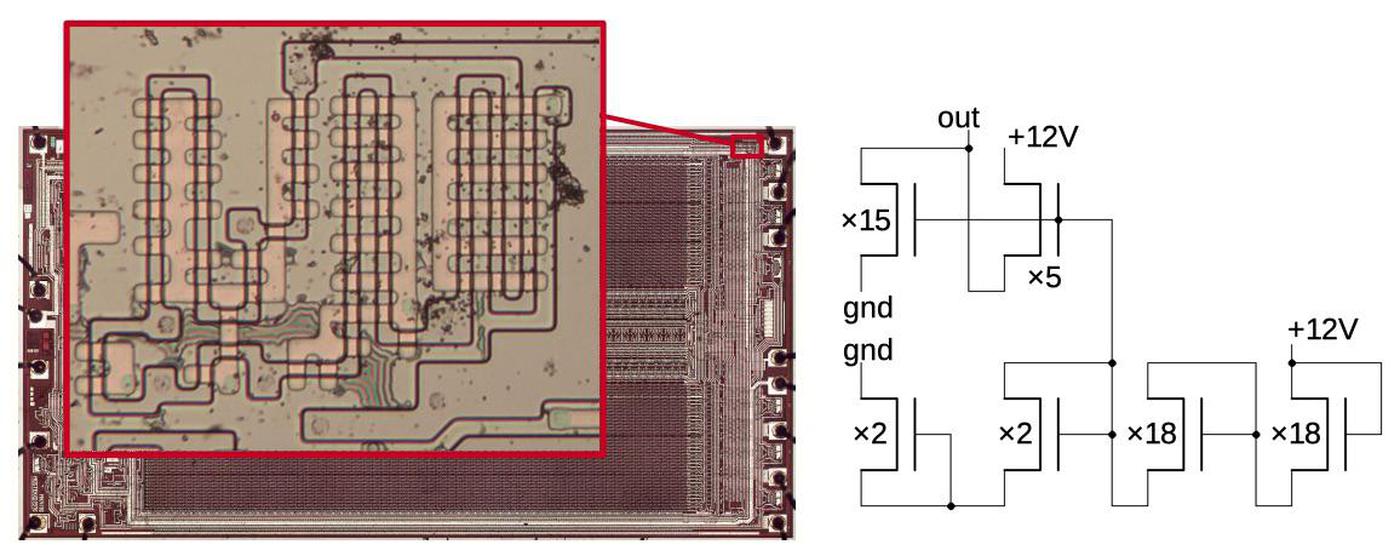

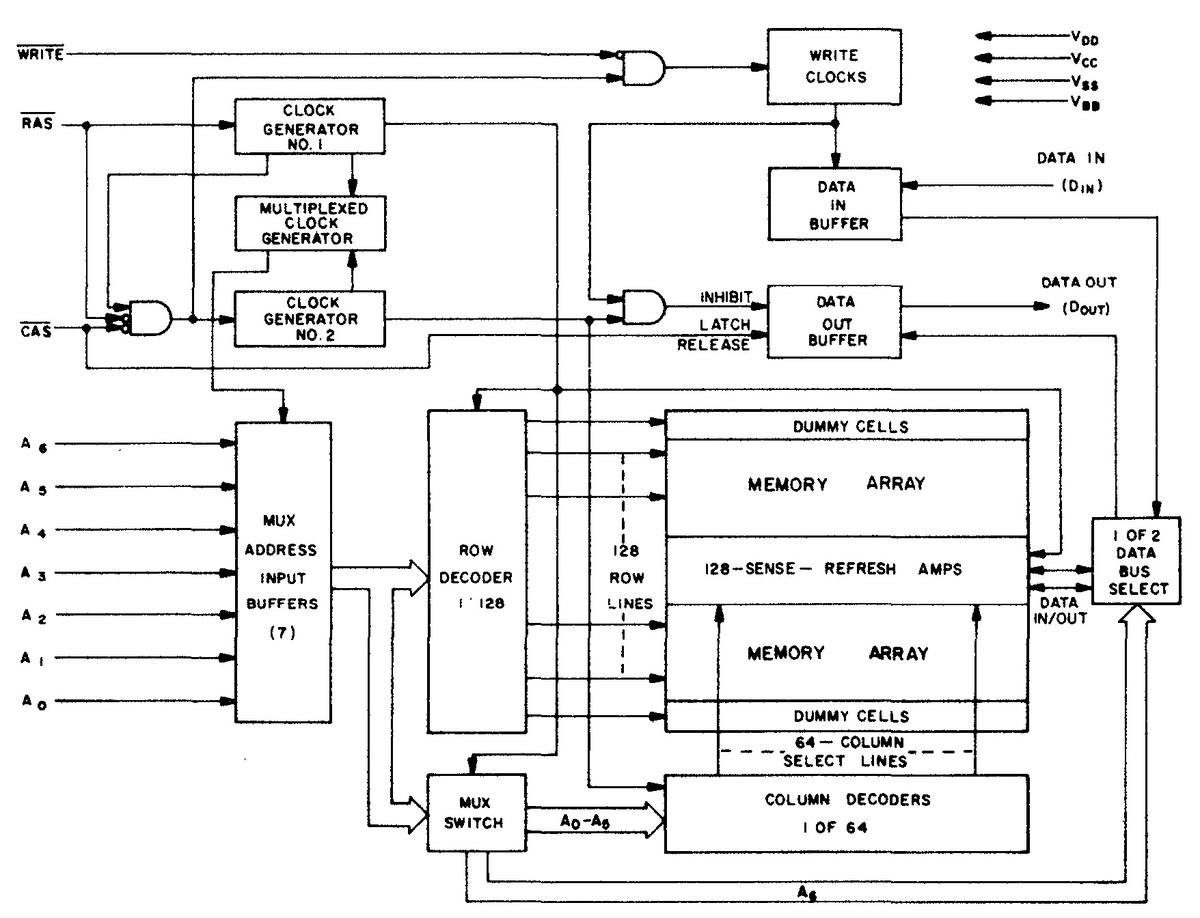

The die photo below shows the functional blocks of the clock chip. Eight NAND gates (red) form an oscillating 4-bit shift register. Four gates (yellow) generate the four-phase clock signals from the shift register outputs. Finally, four output driver circuits (orange) amplify these signals to produce high-current outputs.

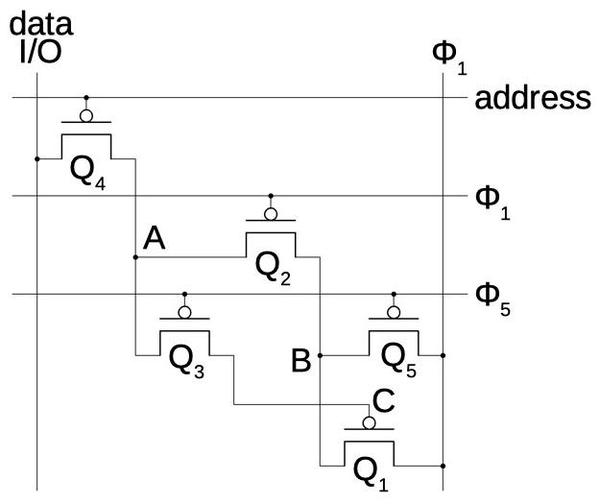

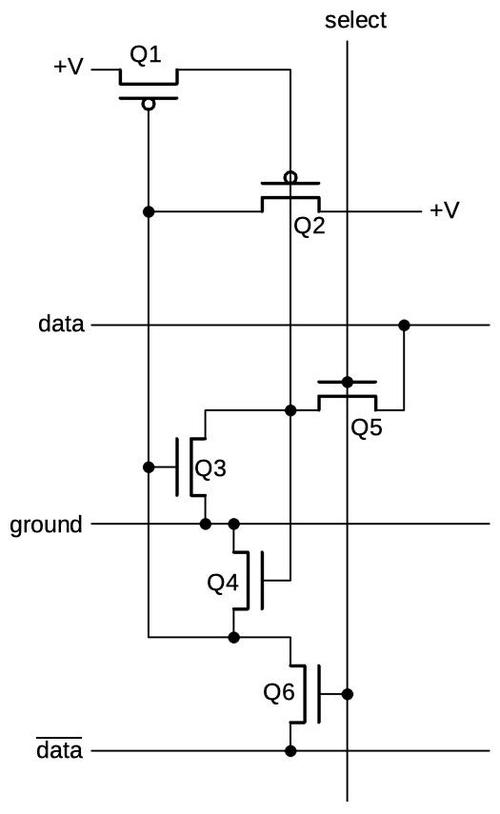

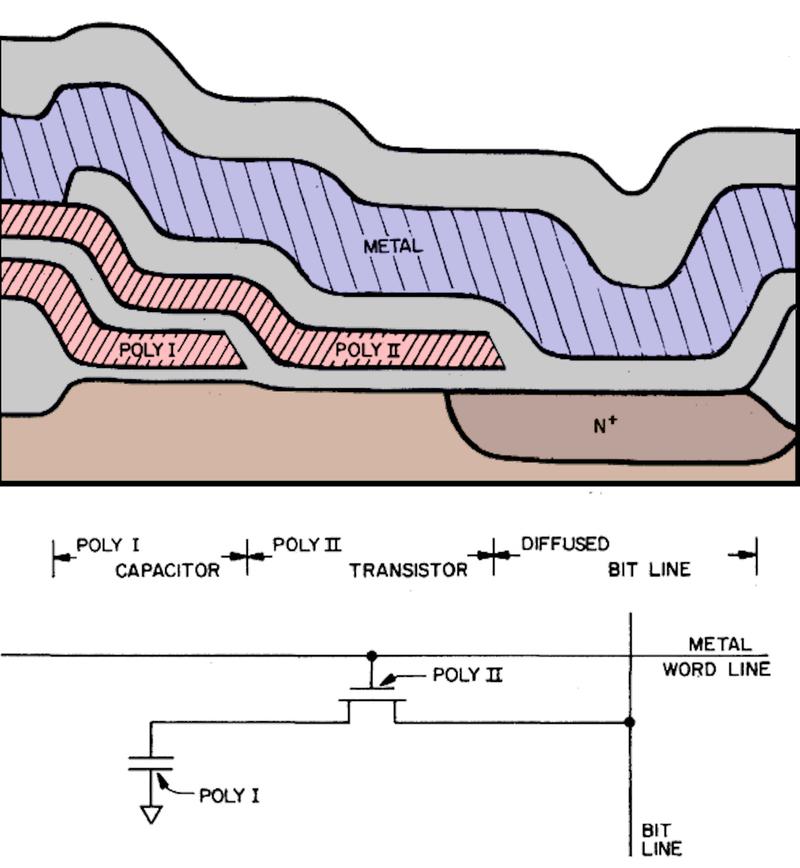

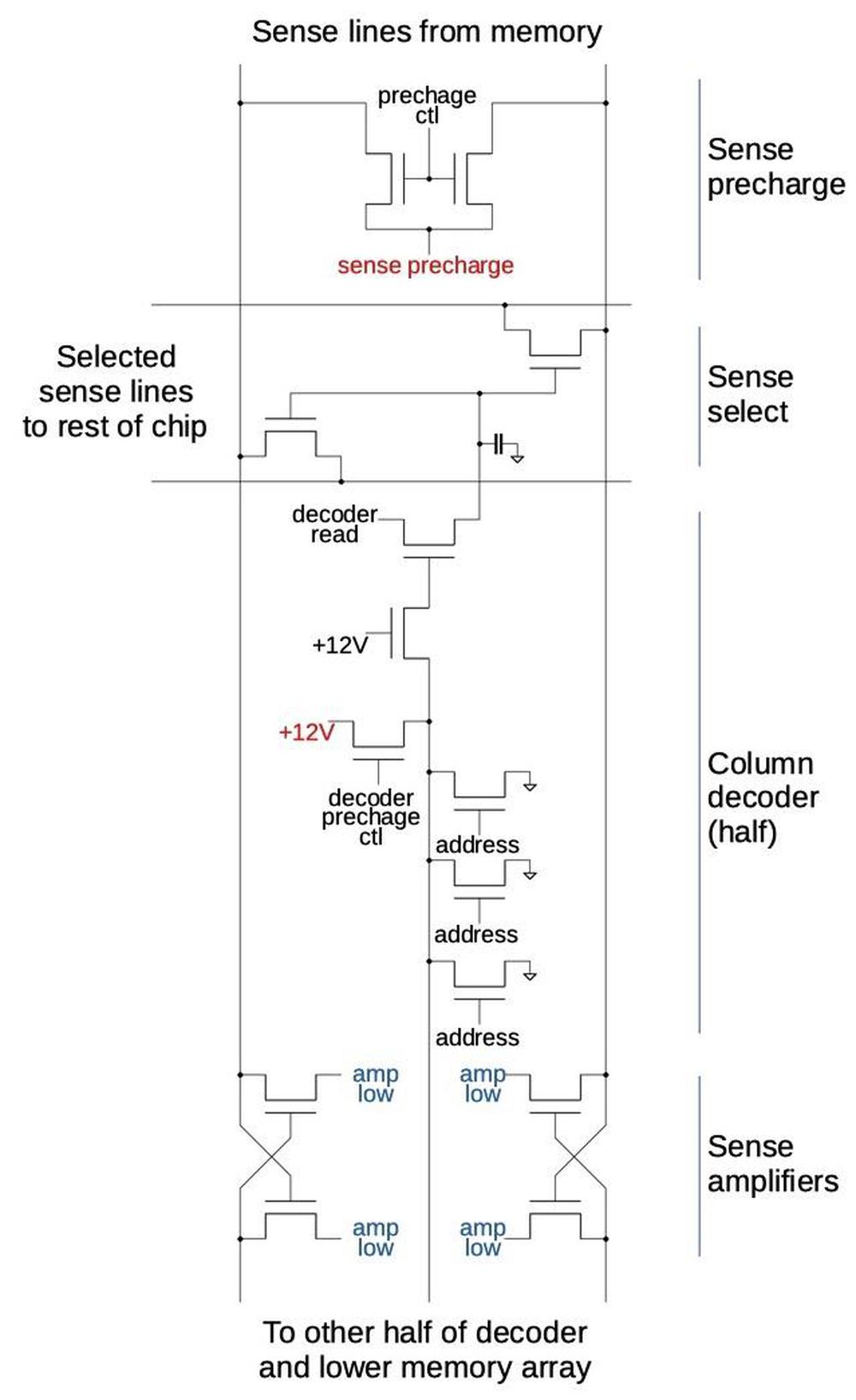

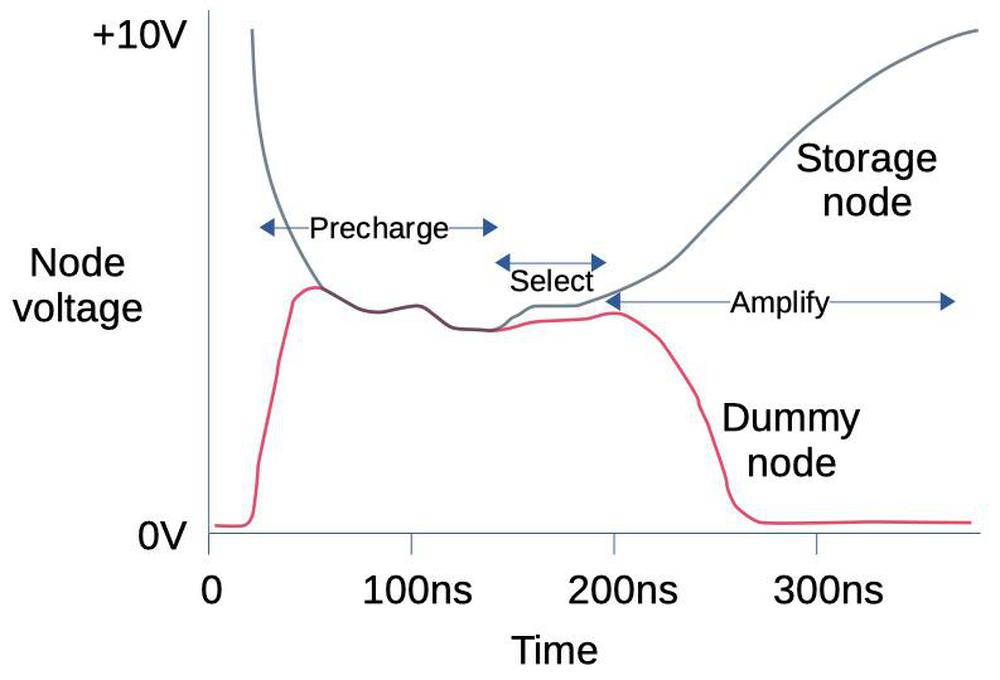

The main building block of the clock chip is a NAND gate that has a delay when its output goes low. This delay creates the timing of the clock signal.[6] The diagram below shows how the gate is constructed; the schematic corresponds to the layout of the circuit on the die. The delay makes this circuit somewhat complex and partially analog, but I'll try to explain it.

The NAND circuit is in the upper right; two input transistors and a bootstrap load implement the NAND circuit described earlier. The output of the NAND gate goes through a resistor-capacitor circuit, which delays the output as the capacitor slowly charges through the resistor. The speed of the clock is controlled by the bias pin, which sets a threshold voltage. This voltage determines the point on the resistor-capacitor curve at which the level switching transistor turns on.[7] Lowering the voltage on the bias pin makes the transistor switch sooner, increasing the clock speed. The typical clock speed is 60 kHz, a slow clock even compared to early microprocessors, but calculators didn't require much speed.

When the level switching transistor turns on, it pulls the buffer high,[8] driving the inverter's output low. The inverter has a bootstrap load to provide sufficient output current. Finally, the output is fed back to the bias circuit, probably to sharpen the transition and provide hysteresis. To summarize, this complex circuit implements a delayed NAND gate. It is the key functional block of the chip, repeated ten times.
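How the bias threshold sets the delay can be sketched with the standard RC charging curve: the node approaches its final voltage exponentially, and the delay is the time at which it crosses the trip point of the level switching transistor. This is a simplified model under stated assumptions (an ideal RC, a sharp trip voltage, voltages as magnitudes, and made-up component values); it ignores the feedback to the bias circuit and only shows the trend.

```python
import math


def rc_trip_delay(r_ohms: float, c_farads: float,
                  v_final: float, v_trip: float) -> float:
    """Delay until an RC node charging from 0 toward v_final reaches v_trip.

    v(t) = v_final * (1 - exp(-t / (R * C)))  solves to
    t = R * C * ln(v_final / (v_final - v_trip)).
    Voltages are magnitudes; PMOS polarity is ignored in this sketch.
    """
    if not 0.0 < v_trip < v_final:
        raise ValueError("v_trip must lie strictly between 0 and v_final")
    tau = r_ohms * c_farads
    return tau * math.log(v_final / (v_final - v_trip))


# Hypothetical values chosen only to show the trend, not measured from the chip.
R_DELAY = 1e6       # 1 MOhm
C_DELAY = 5e-12     # 5 pF
V_FINAL = 25.0      # node heads toward the full 25 V swing

for v_trip in (5.0, 10.0, 15.0):
    delay = rc_trip_delay(R_DELAY, C_DELAY, V_FINAL, v_trip)
    print(f"trip point {v_trip:4.1f} V -> delay {delay * 1e6:.2f} us")
```

A trip point that is reached earlier on the curve shortens each gate's delay, and since the shift register advances once per delay, the whole clock speeds up.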

The clock shift register

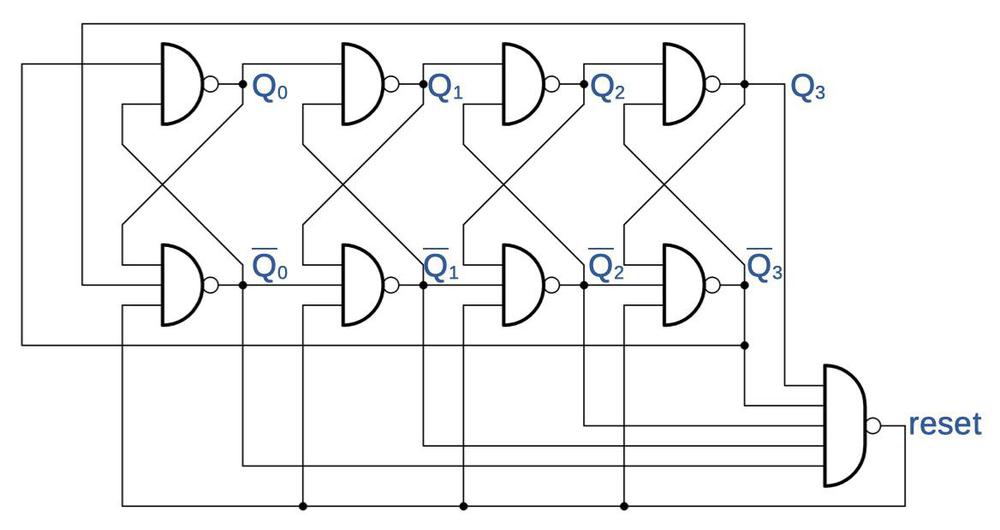

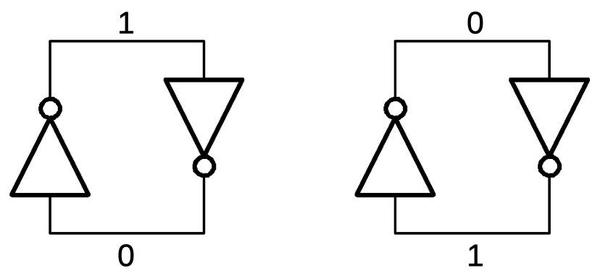

The clock is built from a 4-stage shift register. Each stage of the shift register shifts its bit to the right after a delay, while the bit on the right is inverted and shifted into the left side of the shift register. Thus, the shift register implements a twisted ring counter (also called a Johnson counter), first shifting in 1's at the left and then shifting in 0's: the bit pattern is 0000, 1000, 1100, 1110, 1111, 0111, 0011, 0001, and back to 0000. This complete cycle corresponds to one 60 kHz clock cycle for the calculator.
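The counting sequence can be reproduced with a short logic-level simulation. This sketch ignores timing entirely and just applies the shift-and-invert rule to a list of bits, starting from the all-zeros reset state; the function name is a hypothetical choice.

```python
def johnson_sequence(n_bits: int = 4, steps: int = 8) -> list[str]:
    """Simulate a twisted ring (Johnson) counter starting from all zeros.

    Each step shifts every bit one position to the right and feeds the
    inverted rightmost bit into the leftmost position.
    """
    state = [0] * n_bits  # reset state
    history = []
    for _ in range(steps + 1):
        history.append("".join(str(b) for b in state))
        state = [1 - state[-1]] + state[:-1]
    return history


print(johnson_sequence())
# ['0000', '1000', '1100', '1110', '1111', '0111', '0011', '0001', '0000']
```

An n-stage twisted ring counter cycles through 2n distinct states, so the four latches here give eight states per clock cycle, with exactly one bit changing at each step.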

The schematic below shows how the shift register is built from eight cross-coupled NAND gates with delay, using the circuit described earlier. Each pair of NAND gates forms a latch, storing either a 0 or a 1. The latch outputs are labeled Q0 through Q3, while the inverted outputs carry the same labels with an overbar (Q̅0 through Q̅3). The outputs from each latch are connected to the inputs of the next stage, so the bits are shifted to the right. Note that the wires from the last stage back to the first stage are crossed; this causes the bit to be inverted. Decreasing the delay (through the bias pin) makes the shift register step faster, increasing the clock speed.

The shift register must be initialized to the proper state, which is the job of the reset gate. When the shift register is powered up, the reset gate initializes the latches to hold zeros by pulling the lower input of each latch low.
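Each stage behaves like the classic cross-coupled NAND latch with active-low set and reset inputs. The logic-level sketch below ignores the deliberate delays in this chip's gates and simply iterates the feedback loop until it settles. In this sketch, pulling reset_n low plays the role of the reset gate, forcing Q to 0; the names are hypothetical, and the exact input wiring on the die is not modeled.

```python
def nand(a: int, b: int) -> int:
    return 0 if (a and b) else 1


def nand_latch(set_n: int, reset_n: int, q: int, q_bar: int) -> tuple[int, int]:
    """Settle a cross-coupled NAND latch (active-low set/reset inputs).

    q = NAND(set_n, q_bar) and q_bar = NAND(reset_n, q); iterate until the
    feedback loop stops changing.
    """
    for _ in range(10):  # a few iterations are enough to settle
        new_q = nand(set_n, q_bar)
        new_q_bar = nand(reset_n, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q, q_bar


q, q_bar = nand_latch(set_n=1, reset_n=0, q=1, q_bar=0)      # reset: Q forced to 0
print(q, q_bar)  # 0 1
q, q_bar = nand_latch(set_n=0, reset_n=1, q=q, q_bar=q_bar)  # set: Q becomes 1
print(q, q_bar)  # 1 0
q, q_bar = nand_latch(set_n=1, reset_n=1, q=q, q_bar=q_bar)  # both inactive: hold
print(q, q_bar)  # 1 0
```

With both inputs inactive (high), the two gates reinforce each other and the latch holds its bit, which is what lets each stage store one bit of the shift register.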

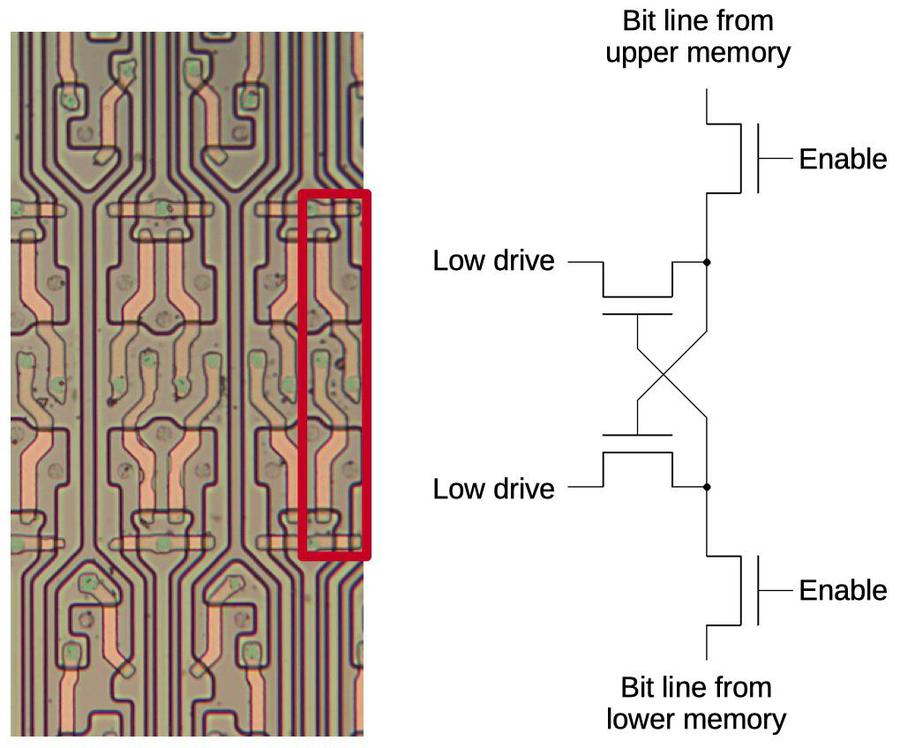

Output circuit

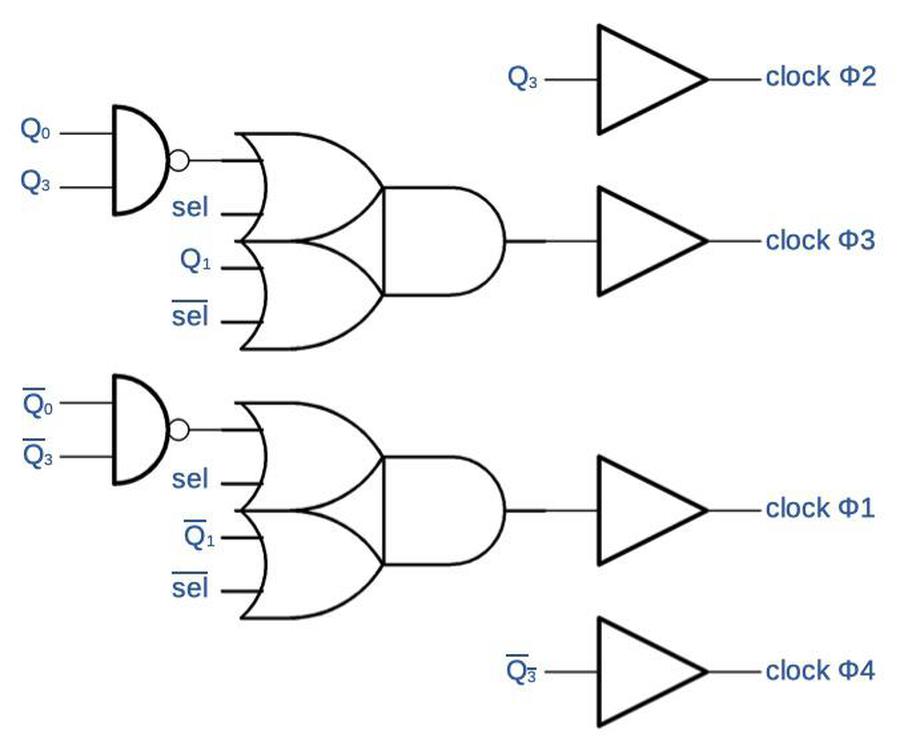

The output circuitry generates the four clock phase outputs from the shift register values. Two phases come from the last shift register stage and its complement. The other two phases are more complex. An unwired "select" pin selects between two alternative signals for each of these pins; presumably this pin was wired in other versions of the clock chip to provide different clock signals for a different calculator. In the normal case, these clock outputs are formed by NANDing together two shift register outputs to produce a shorter pulse.
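The phase derivation can be checked with a small logic-level simulation. The sketch below steps the 4-bit twisted ring counter through its eight states and derives four waveforms, treating 1 as "active" (on the real chip the active level is low, -25 V, which is why a NAND whose output goes low only when both inputs are high corresponds to the AND used here). Which two shift register outputs are combined for the shorter phases is not stated here, so pairing Q3 with Q1 is an assumption, chosen because it yields pulses during the first half of each main phase. The "select" alternatives and the real chip's non-overlap timing are not modeled.

```python
def johnson_states(n_bits: int = 4) -> list[list[int]]:
    """Return the 2*n_bits states of a twisted ring counter as [Q0, ..., Qn-1]."""
    state = [0] * n_bits
    states = []
    for _ in range(2 * n_bits):
        states.append(state)
        state = [1 - state[-1]] + state[:-1]  # shift right, feed back inverted last bit
    return states


def clock_phases(states: list[list[int]]) -> dict[str, list[int]]:
    """Derive four illustrative phases (1 = active) from Q1 and Q3.

    Pairing Q3 with Q1 for the short phases is an assumption for this sketch.
    """
    phases: dict[str, list[int]] = {"A": [], "B": [], "C": [], "D": []}
    for _, q1, _, q3 in states:
        phases["A"].append(q3)                   # last stage
        phases["B"].append(1 - q3)               # its complement
        phases["C"].append(q3 & q1)              # both high: first half of A
        phases["D"].append((1 - q3) & (1 - q1))  # both low: first half of B
    return phases


for name, wave in clock_phases(johnson_states()).items():
    print(name, "".join("#" if level else "_" for level in wave))
# A ____####
# B ####____
# C ____##__
# D ##______
```

In this idealized model A and B are exact complements; on the chip, the asymmetric delay of the NAND gates adds a gap so the active portions do not overlap.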

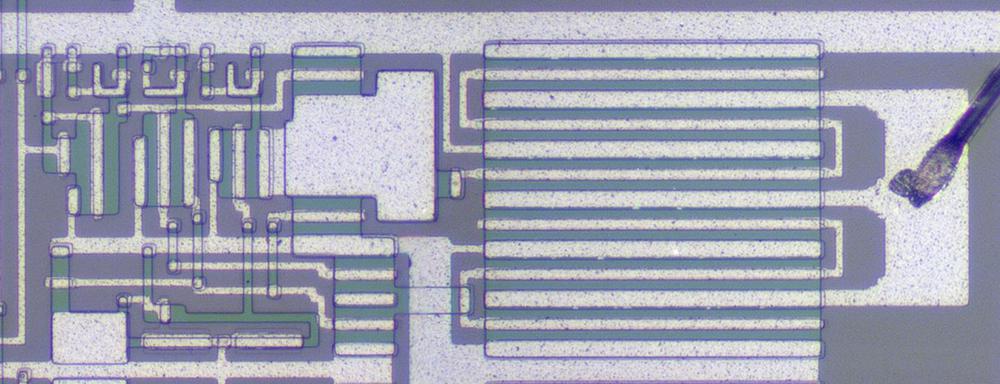

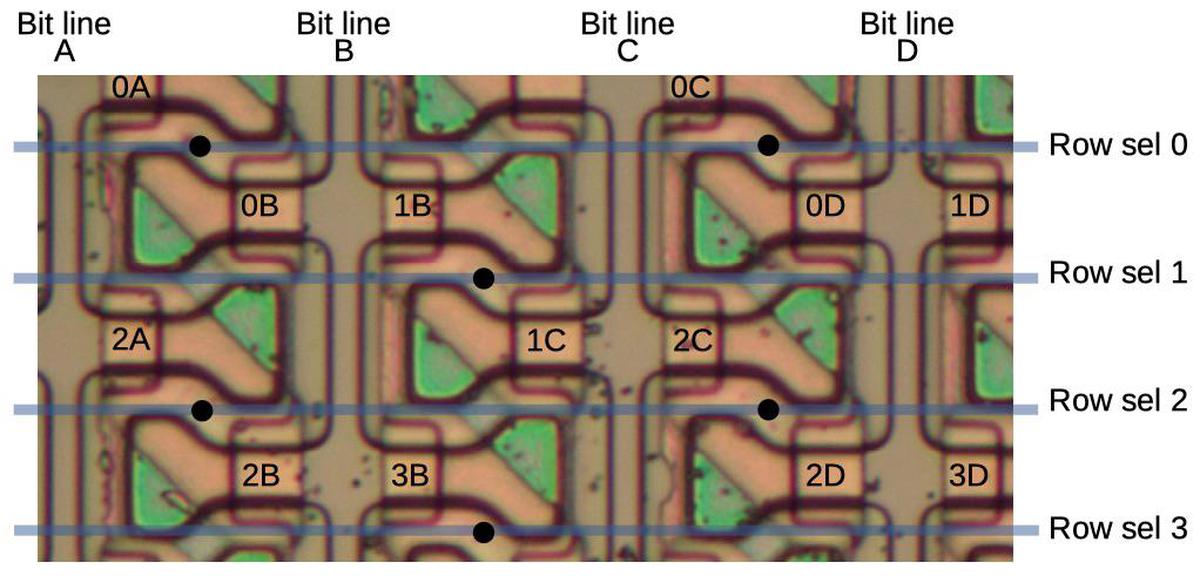

The photo below shows one of the output buffers. The output signal enters at the left, travels through the buffer circuitry, and exits the chip through the bond wire on the right. The right half consists of two large transistors to provide the high output currents: one transistor pulls the output up to ground, while the other transistor pulls the output down to -25V. The remainder of the circuitry amplifies the small internal signal so it can drive the output transistors. Note the large bootstrap capacitor near the center; it helps drive one of the output transistors. There are also much smaller bootstrap capacitors in the upper left. This output buffer circuit is repeated four times, once for each output pin.

The output buffer transistors must be large due to an unusual characteristic of four-phase logic. Normal clocked logic uses the clock signals for timing, while the logic gates are connected to power and ground. In four-phase logic, however, the clock signals provide the power for the logic gates; there are no separate power and ground connections. The gates draw their power from the clock signals as the clocks precharge and discharge them. Thus, four-phase logic requires relatively high-current clock signals, since they are powering the circuits.[9]
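A back-of-the-envelope estimate shows why clock-powered logic needs strong drivers: every cycle, a clock line must charge the capacitance of all the gates it powers. Only the 60 kHz frequency and 25 V swing come from this article; the load capacitance and edge time below are invented placeholders, not measurements of the Sharp chipset. The point is the gap between the modest average current and the much larger current needed during a fast edge.

```python
def average_clock_current(c_load: float, v_swing: float, f_clock: float) -> float:
    """Average current to charge c_load through v_swing once per cycle: I = C * V * f."""
    return c_load * v_swing * f_clock


def edge_current(c_load: float, v_swing: float, t_edge: float) -> float:
    """Approximate current if the same charge must be delivered within one edge."""
    return c_load * v_swing / t_edge


# 25 V swing and 60 kHz come from the text; the load and edge time are placeholders.
C_LOAD = 200e-12   # hypothetical total gate capacitance on one clock line
T_EDGE = 200e-9    # hypothetical clock edge duration
V_SWING = 25.0
F_CLOCK = 60e3

print(f"average: {average_clock_current(C_LOAD, V_SWING, F_CLOCK) * 1e3:.2f} mA")
print(f"during an edge: {edge_current(C_LOAD, V_SWING, T_EDGE) * 1e3:.1f} mA")
```

With these placeholder numbers the average is only about 0.3 mA, but delivering the same charge within a 200 ns edge takes around 25 mA, which is the kind of current that calls for large output transistors.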

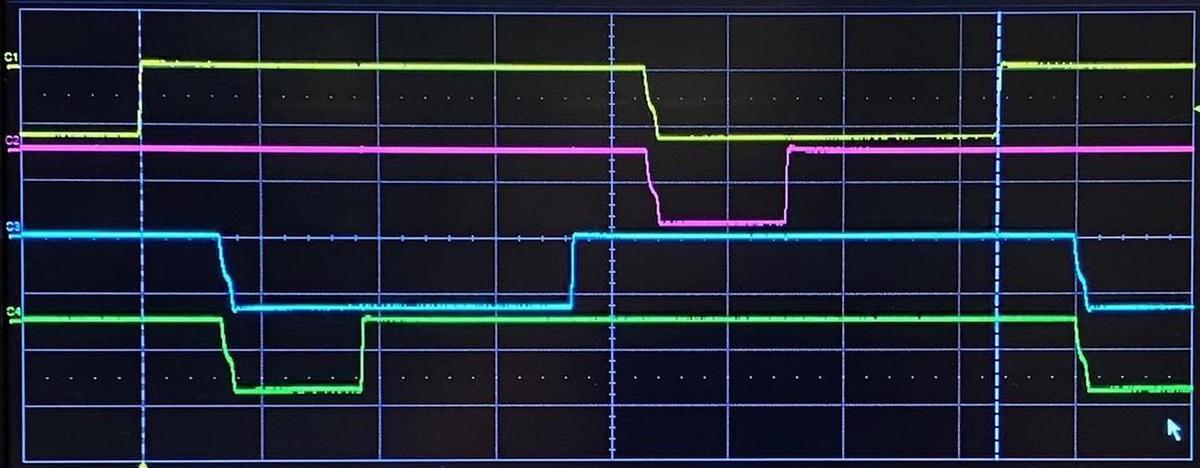

To see the chip in action, the oscilloscope trace below shows the four clock outputs as measured from the chip. The yellow and blue traces are the main phases; note that the active (low) parts do not overlap. The magenta and green outputs are active during the first part of the yellow and blue phases, respectively. These clocks are used to precharge the logic circuits. (The clock phases match those in Wikipedia's four-phase logic article, except that the polarity is reversed because of the PMOS transistors.)

Conclusion

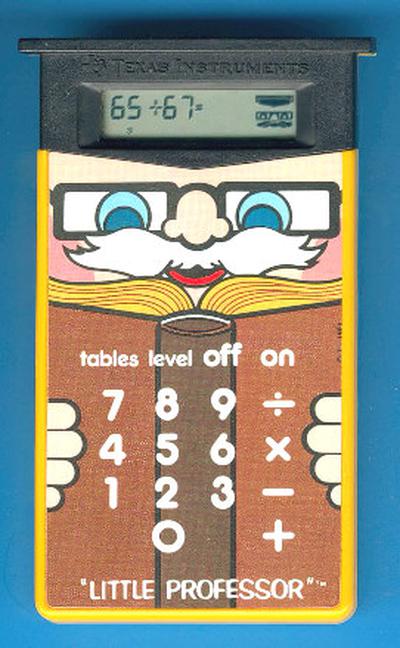

Rockwell fit a calculator onto five chips, making the handheld calculator possible. However, Texas Instruments, Mostek, and other companies soon fit all the circuitry onto a single chip, creating the calculator-on-a-chip. Selling calculators was highly profitable for a short time, and 11 million calculators were sold in the US in 1974. Although calculators sold for hundreds of dollars in 1969, competition and improvements in technology caused calculator prices to plummet to $15 by 1975. The profit margin collapsed during the "calculator wars"; Texas Instruments alone lost $16 million in 1975.[4]

Although the calculator market was risky, the massive sales of calculators provided an important boost to MOS chip technology in the early 1970s, and thus the computer industry. In particular, microprocessors started with the Intel 4004, a chip designed for a calculator. And microcontrollers were created out of Texas Instruments' line of calculator chips. While a chip such as the CG2341 clock generator, with about 200 transistors, is trivial by modern standards, it provides a historical window into how chips were constructed in the early days of MOS ICs.

Thanks to Francois Gueissaz for doing all the hard work of obtaining the calculator ICs, decapping them, and providing me with die photos and other information. I announce my latest blog posts on Twitter, so follow me at kenshirriff. I also have an RSS feed.

Notes and references

1. See this interesting vintage commercial for the Sharp EL-8 calculator for more information.

2. From measurements of the die photo, I believe this chip uses a 15 µm process, so the transistors and features are very large by modern standards. (This is why five chips were required to implement the calculator.) In comparison, many modern chips use a 14 nm process, so the width of a modern transistor is roughly 1000 times smaller, and the area is roughly a million times smaller. This shows the amazing progress in silicon technology described by Moore's Law.

3. It's hard to follow the spin-offs and acquisitions of the companies involved. Autonetics was founded as the research laboratory for North American Aviation in 1945. Among other things, Autonetics developed guidance computers for the Minuteman missile. Although North American Aviation is mostly forgotten now, it was a major aerospace company, building everything from the P-51 Mustang in World War II to the command and service module for the Apollo Moon landings. It merged with Rockwell in 1967, becoming North American Rockwell. In 1970, about 800 employees from Autonetics were split off to form North American Rockwell MicroElectronics to develop and manufacture commercial integrated circuits. This later became Rockwell Semiconductor, which was then spun off as Conexant, later acquired by Synaptics. Rockwell's aerospace and defense business was sold to Boeing in 1996.

   Sharp, on the other hand, started as Hayakawa Metal Works in 1924, eventually being renamed Sharp Corporation in 1970. (The name came from the Ever-Sharp mechanical pencil, one of Hayakawa's early inventions.) Foxconn acquired a majority stake in Sharp in 2016; Foxconn, also known as Hon Hai Precision Industry, is a Taiwanese electronics manufacturer. Although best known for manufacturing the iPhone for Apple, Foxconn is estimated to manufacture 40% of the world's consumer electronics.

4. Much of the historical information in this post comes from the books To the Digital Age and History of Semiconductor Engineering. These books provide a detailed look at the rise of MOS integrated circuits.

5. One of the main proponents of four-phase logic was Lee Boysel, who founded a company, Four-Phase Systems, around it. The company built 24-bit computers, which were some of the earliest MOS-based computers. Boysel's EECS presentation describes the advantages of four-phase logic.

6. One important characteristic of the delayed NAND gate is that the delay is much larger when the output goes low than when the output goes high. This ensures that the output clock phases do not overlap while active (low), which four-phase logic requires so that logic gates don't conflict with each other.

7. The level switching transistor (like other PMOS transistors) turns on when its gate voltage is lower than its source voltage by more than Vt (the transistor's threshold voltage). Thus, by controlling the bias voltage on the transistor's source, the transistor can be made to turn on sooner or later, controlling the frequency.

8. Note that the buffer circuit is constructed "backward" compared to a standard PMOS inverter. A PMOS inverter has the transistor connected to ground with a load resistor to -25V, while the buffer has the transistor connected to -25V and the load resistor to ground. I think it is constructed this way to shift the voltage levels from the level switching transistor.

9. Although the four-phase clocks power the logic gates, the chips also have regular power and ground connections. These power the output pins, since the current demands are too large to be reasonably satisfied by the clocks.