A Field-Programmable Gate Array (FPGA) can implement arbitrary digital logic, anything from a microprocessor to a video generator or crypto miner. An FPGA consists of many logic blocks, each typically consisting of a flip flop and a logic function, along with a routing network that connects the logic blocks. What makes an FPGA special is that it is programmable hardware: you can redefine each logic block and the connections between them. The result is you can build a complex digital circuit without physically wiring up individual gates and flip flops or going to the expense of designing a custom integrated circuit.

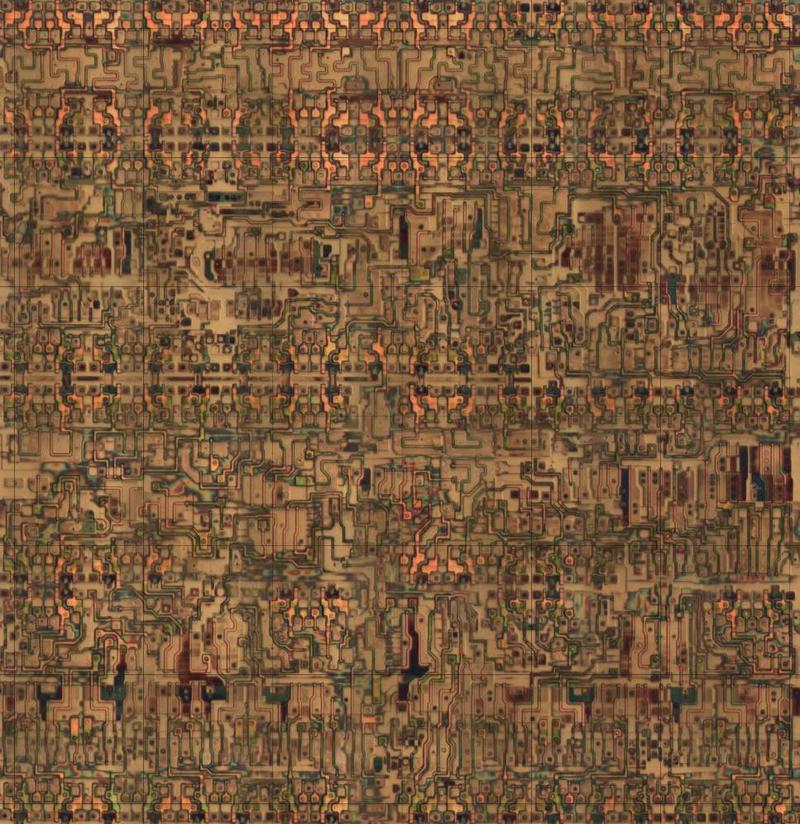

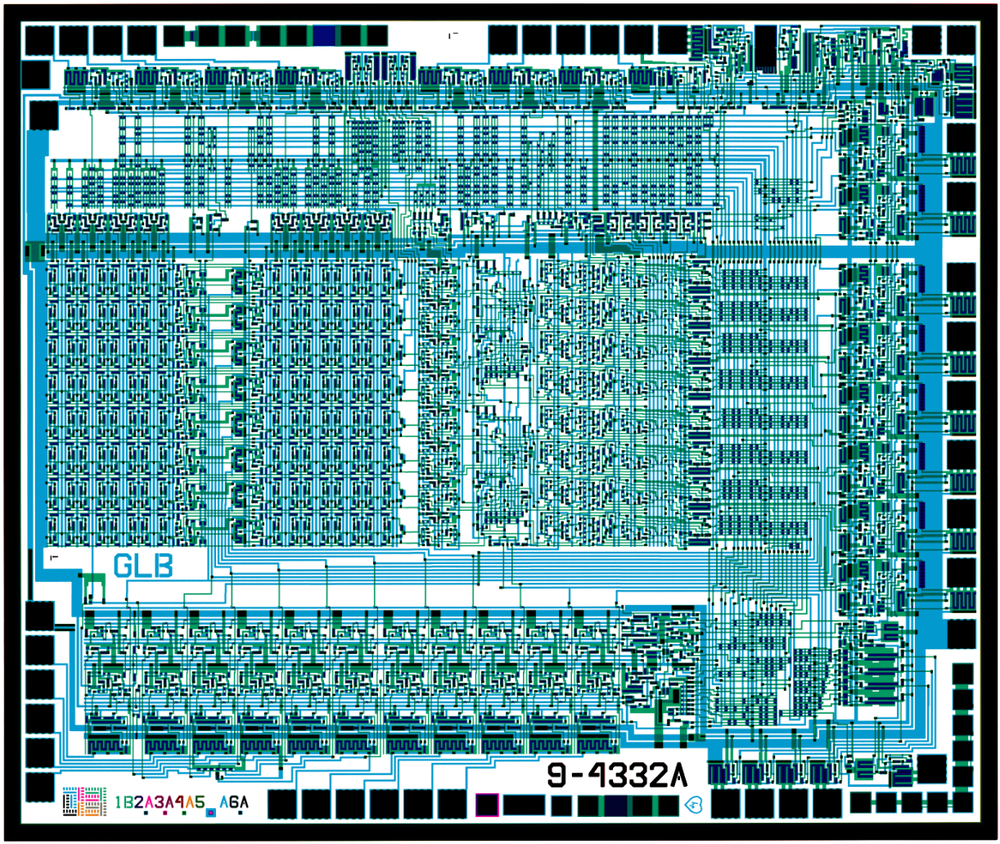

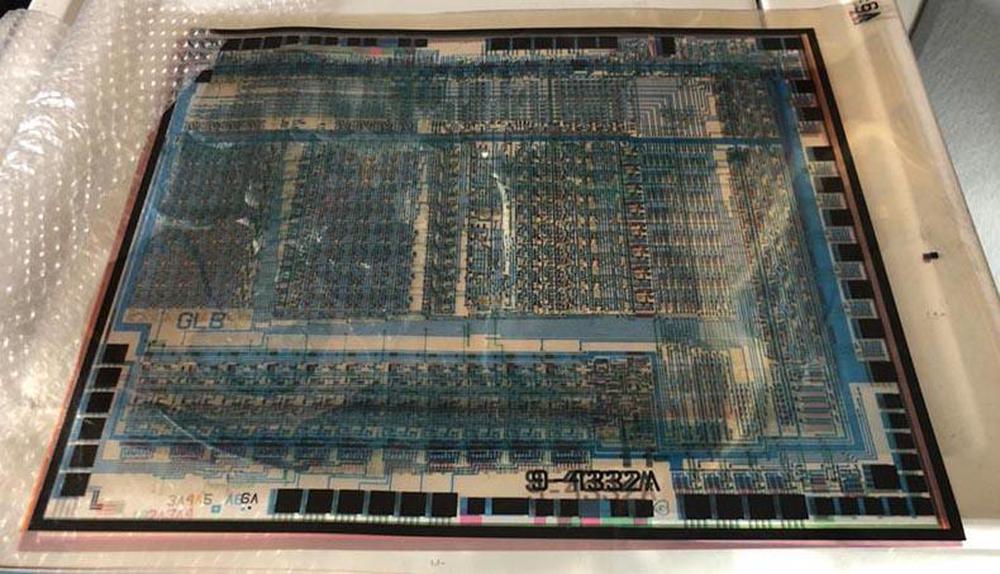

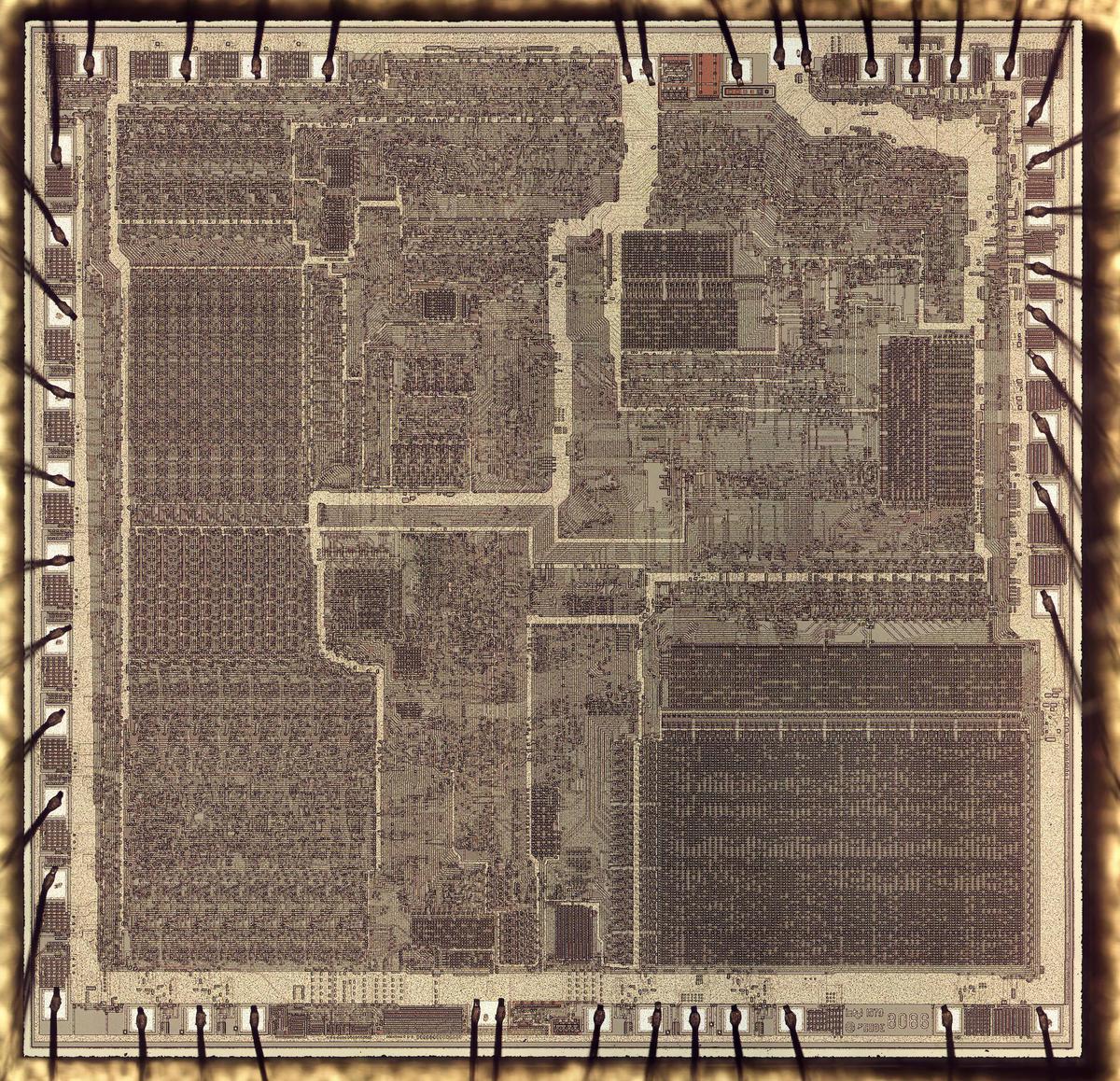

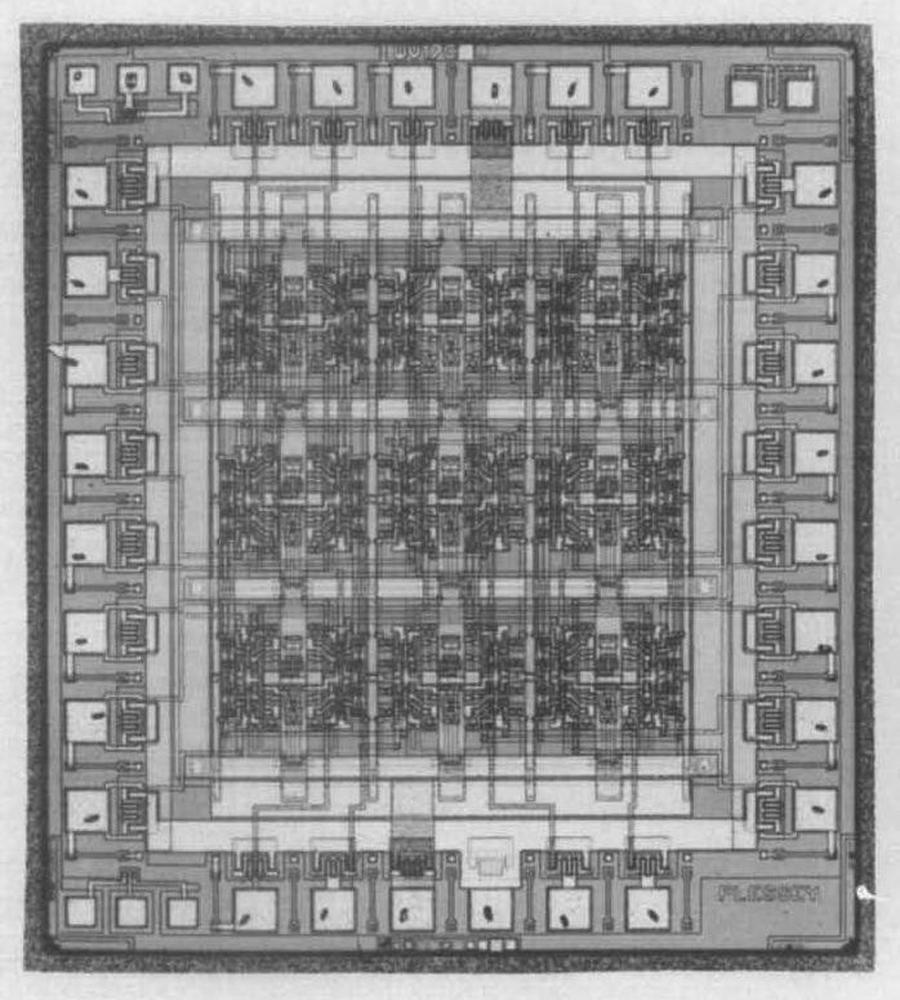

The FPGA was invented by Ross Freeman1 who co-founded Xilinx2 in 1984 and introduced the first FPGA, the XC2064.3 This FPGA is much simpler than modern FPGAs (it contains just 64 logic blocks, compared to thousands or millions in modern FPGAs), but it led to the current multi-billion-dollar FPGA industry. Because of its importance, the XC2064 is in the Chip Hall of Fame. I reverse-engineered Xilinx's XC2064, and in this blog post I explain its internal circuitry (above) and how a "bitstream" programs it.

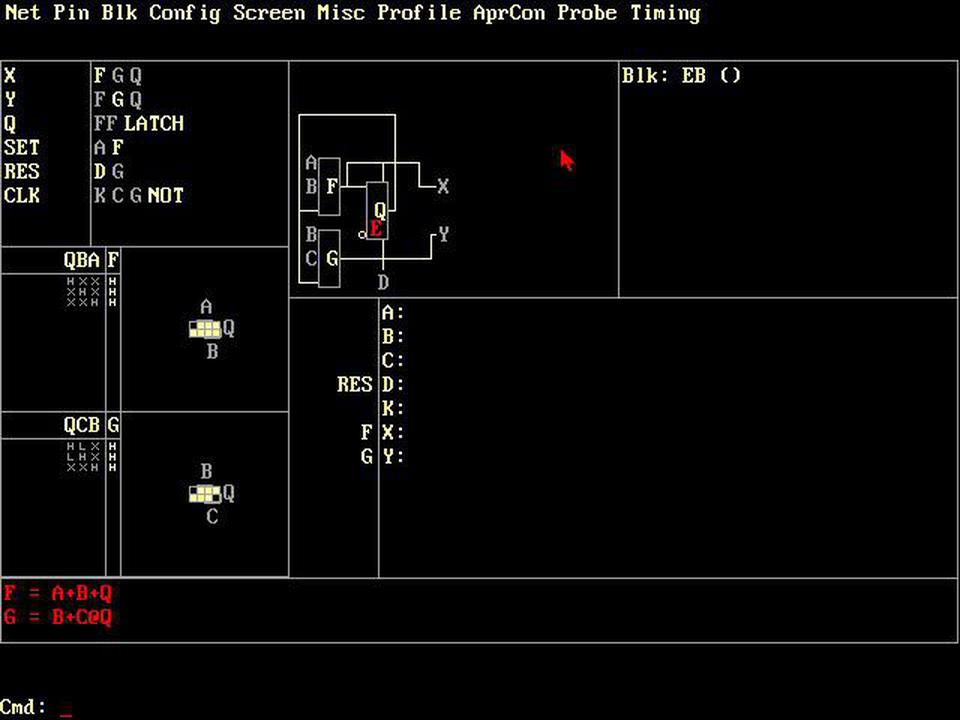

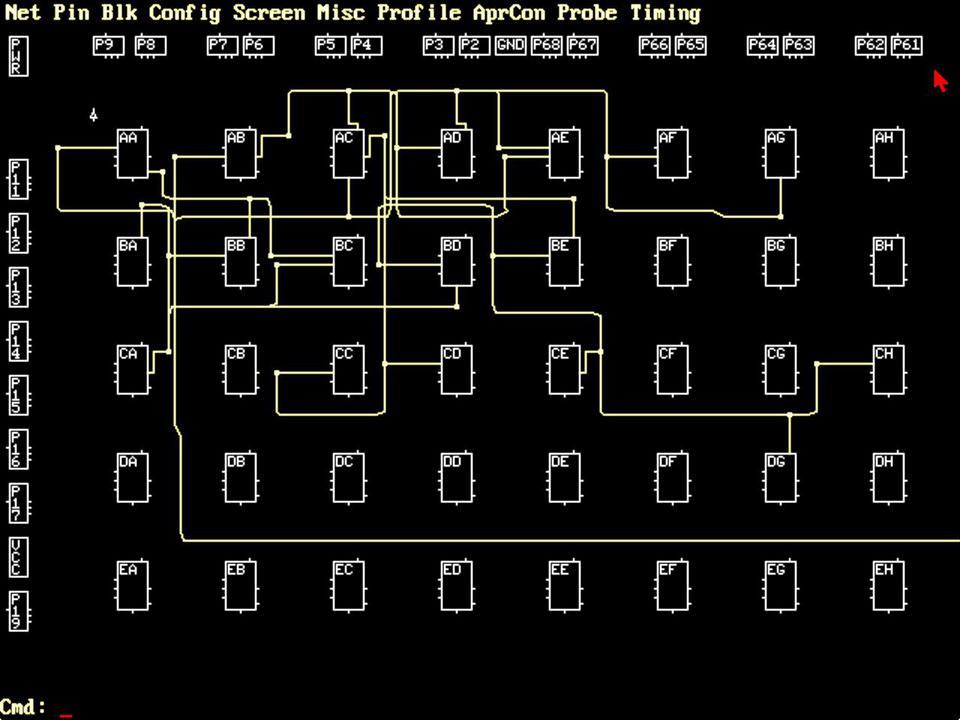

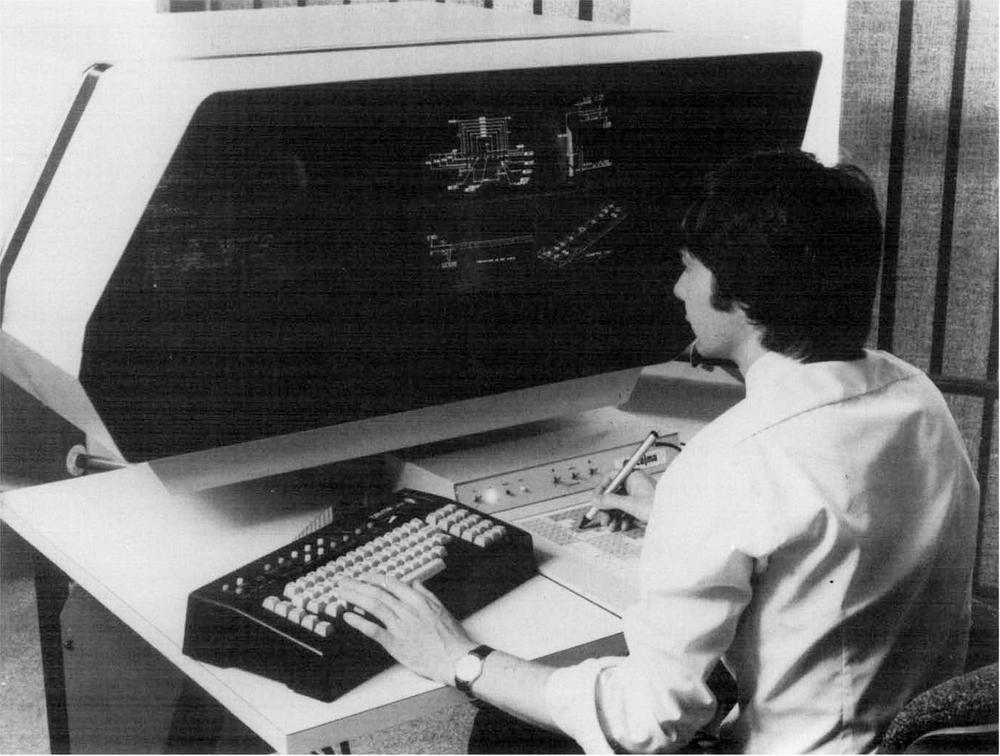

Nowadays, an FPGA is programmed in a hardware description language such as Verilog or VHDL, but back then Xilinx provided their own development software, an MS-DOS application named XACT with a hefty $12,000 price tag. XACT operated at a lower level than modern tools: the user defined the function of each logic block, as shown in the screenshot below, and the connections between logic blocks. XACT routed the connections and generated a bitstream file that could be loaded into the FPGA.

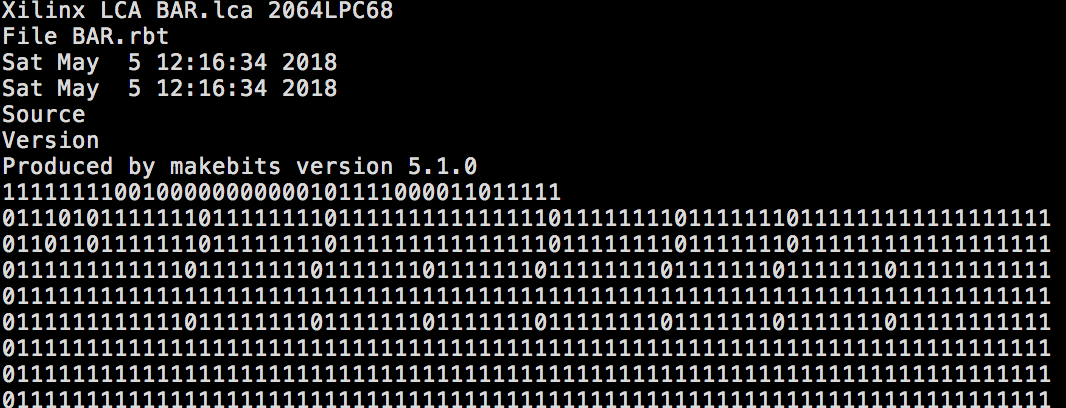

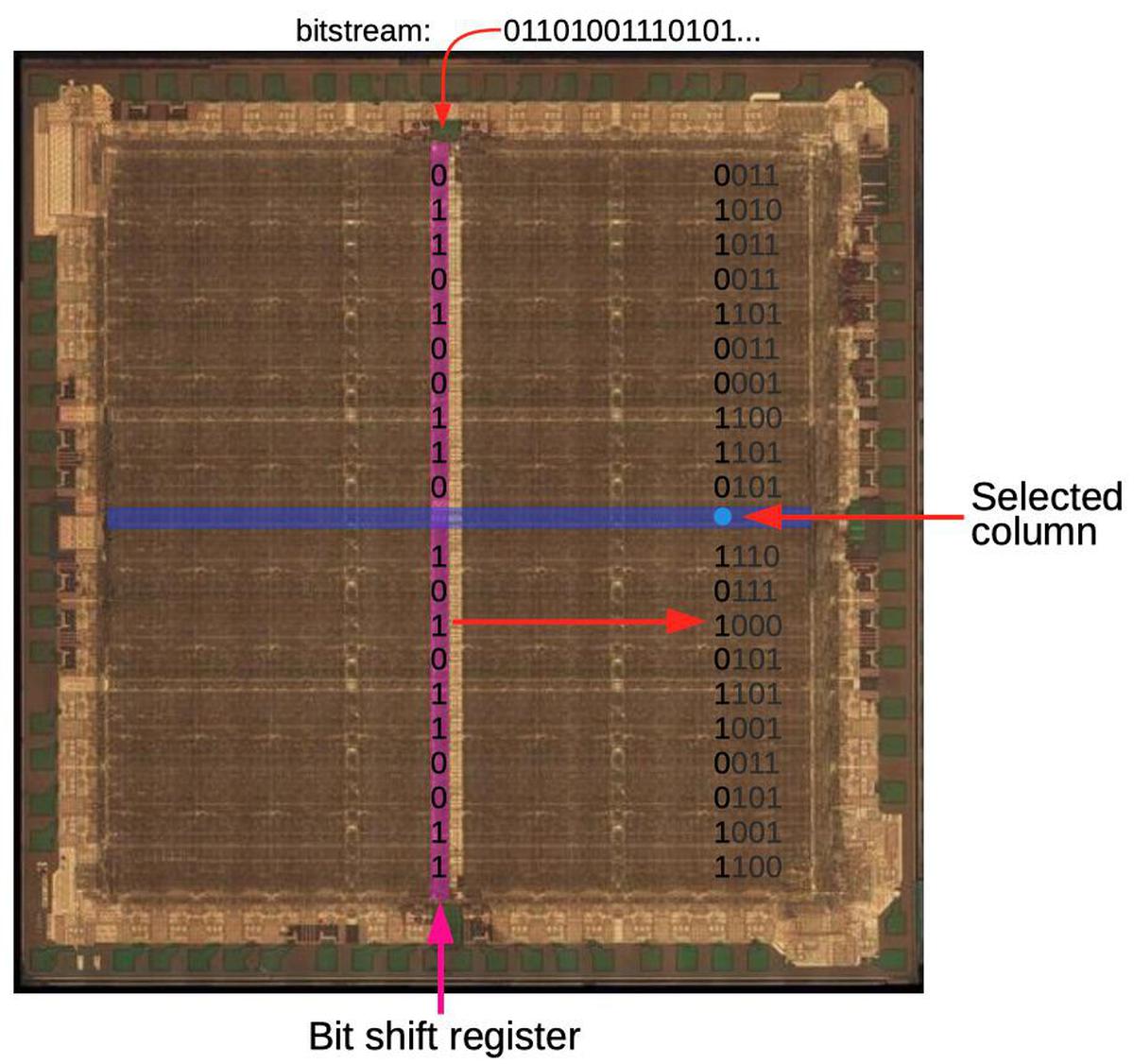

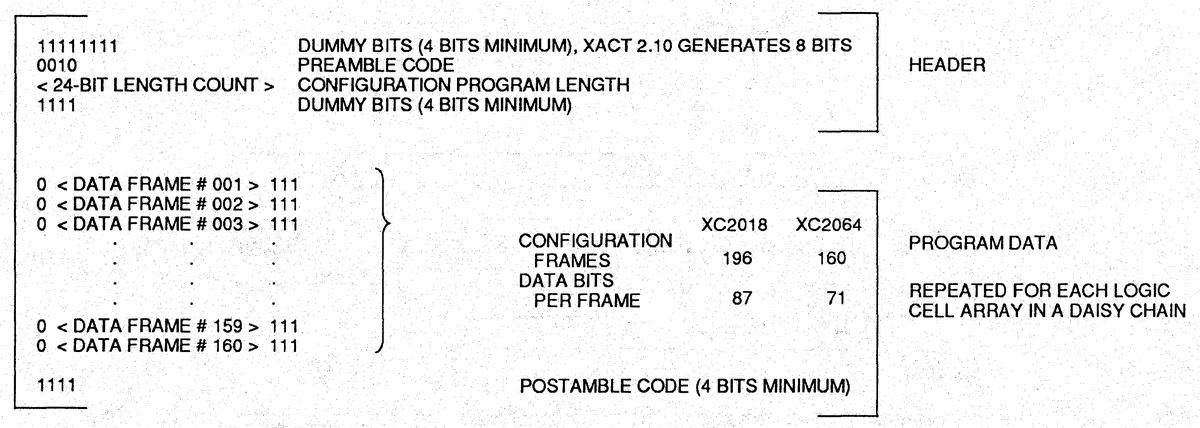

An FPGA is configured via the bitstream, a sequence of bits with a proprietary format. If you look at the bitstream for the XC2064 (below), it's a puzzling mixture of patterns that repeat irregularly, with sequences scattered through the bitstream. There's no clear connection between the function definitions in XACT and the data in the bitstream. However, studying the physical circuitry of the FPGA reveals the structure of the bitstream data, making it possible to understand.

How does an FPGA work?

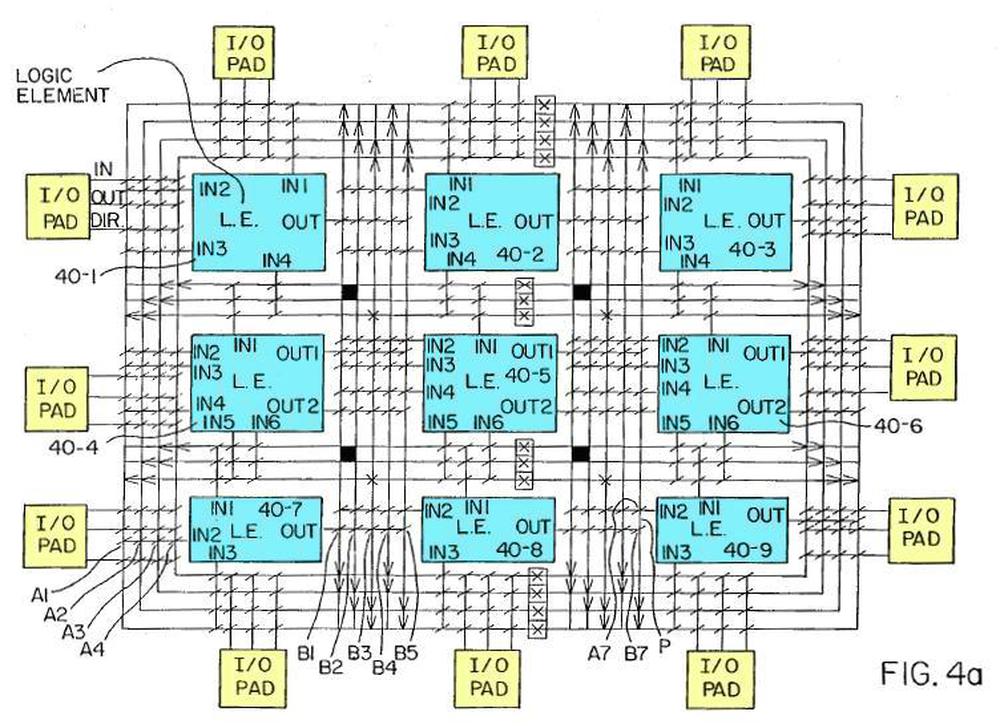

The diagram below, from the original FPGA patent, shows the basic structure of an FPGA. In this simplified FPGA, there are 9 logic blocks (blue) and 12 I/O pins. An interconnection network connects the components together. By setting switches (diagonal lines) on the interconnect, the logic blocks are connected to each other and to the I/O pins. Each logic element can be programmed with the desired logic function. The result is a highly programmable chip that can implement anything that fits in the available circuitry.

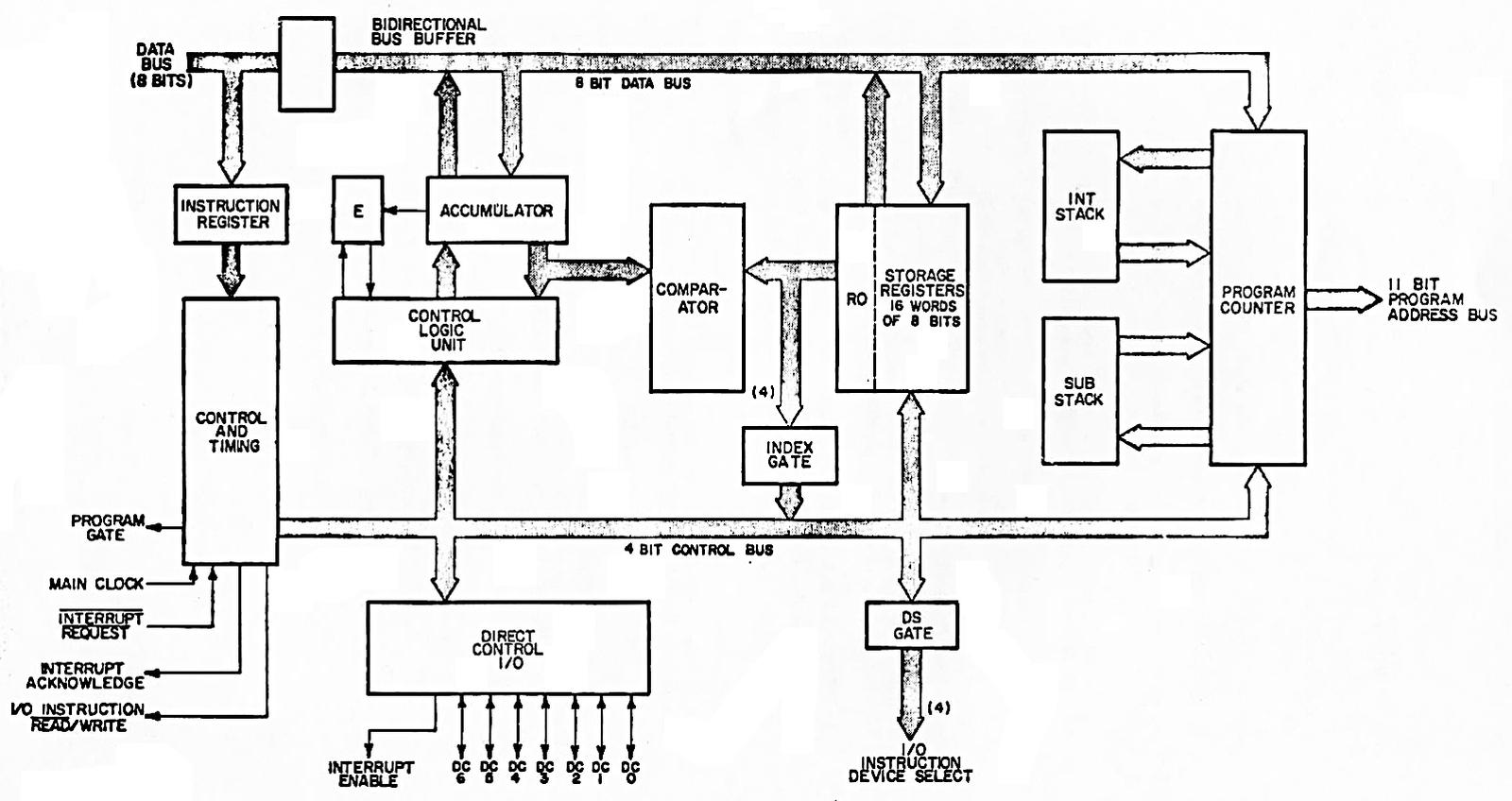

CLB: Configurable Logic Block

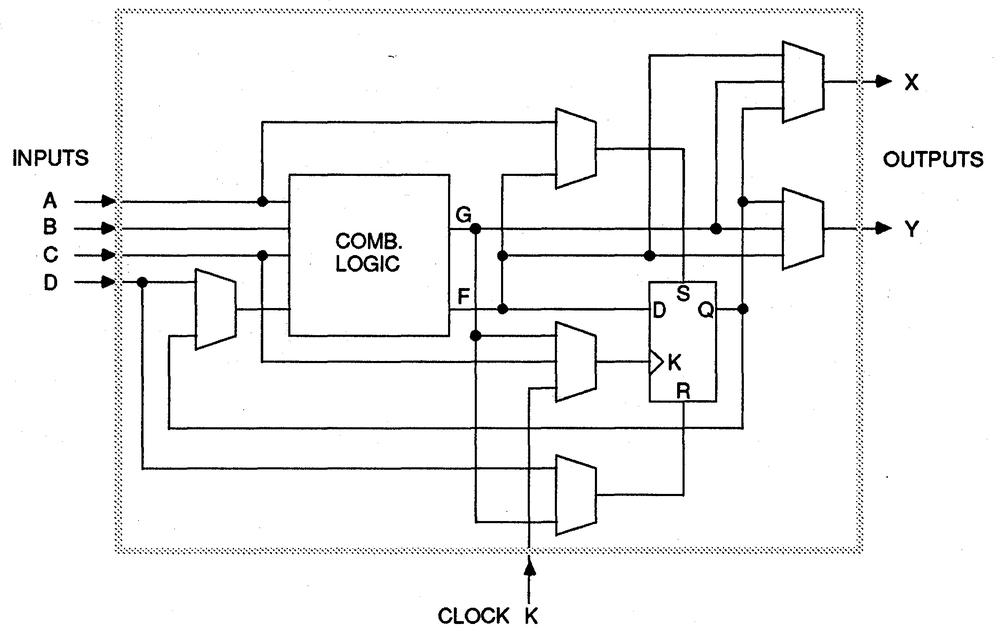

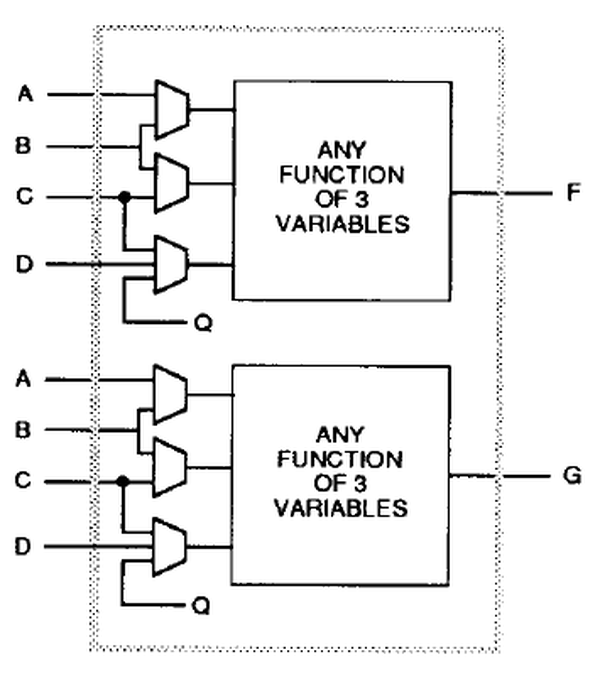

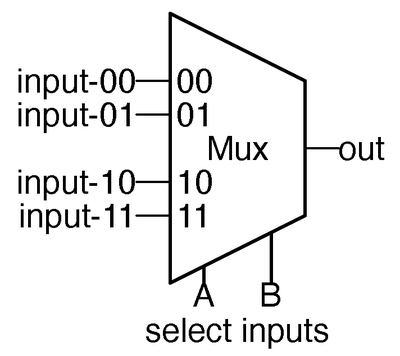

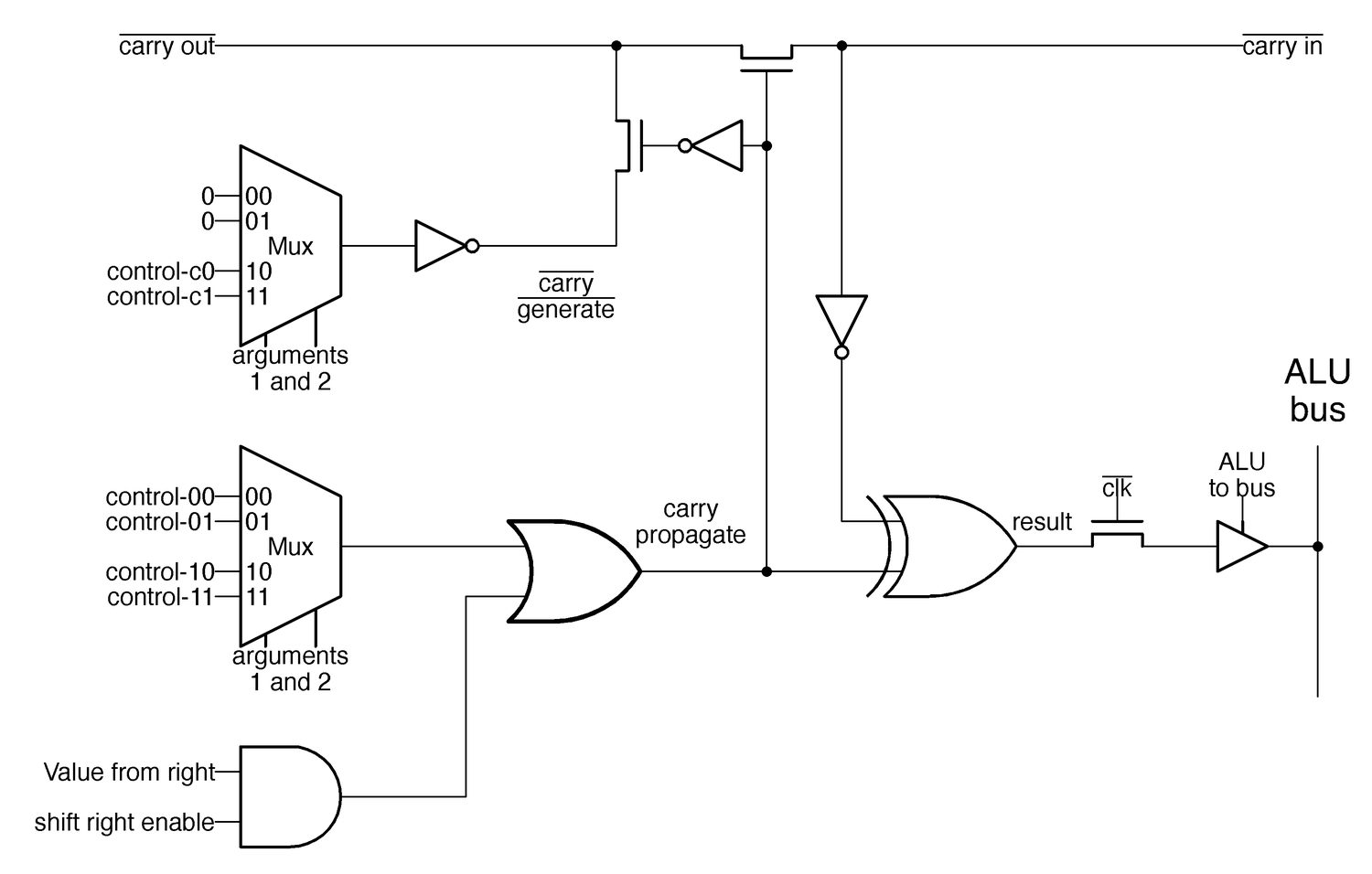

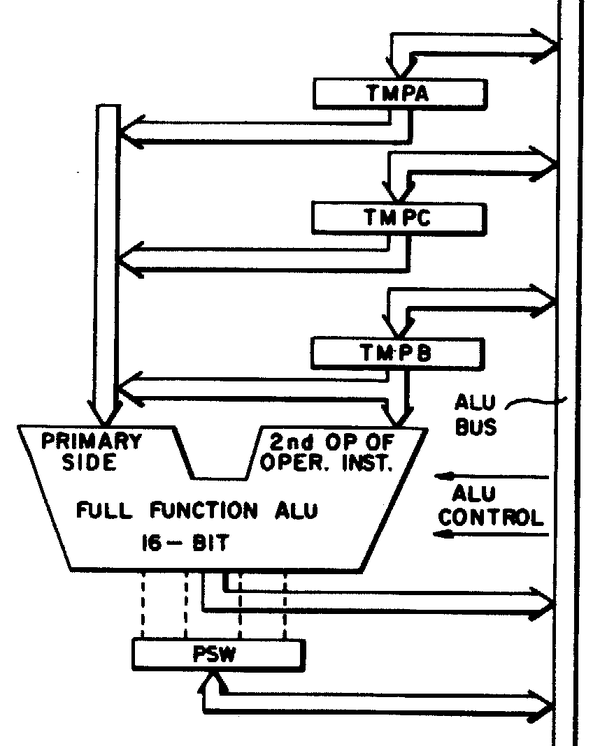

While the diagram above shows nine configurable logic blocks (CLBs), the XC2064 has 64 CLBs. The diagram below shows the structure of each CLB. Each CLB has four inputs (A, B, C, D) and two outputs (X and Y). In between is combinatorial logic, which can be programmed with any desired logic function. The CLB also contains a flip flop, allowing the FPGA to implement counters, shift registers, state machines and other stateful circuits. The trapezoids are multiplexers, which can be programmed to pass through any of their inputs. The multiplexers allow the CLB to be configured for a particular task, selecting the desired signals for the flip flop controls and the outputs.

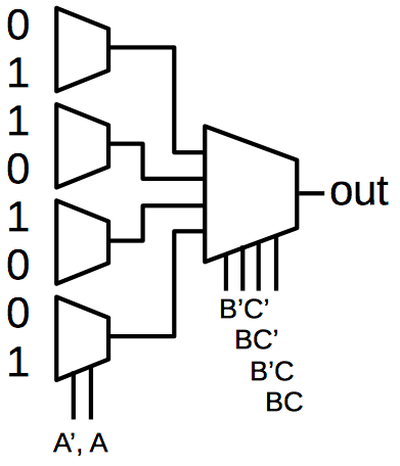

You might wonder how the combinatorial logic implements arbitrary logic functions. Does it select between a collection of AND gates, OR gates, XOR gates, and so forth? No, it uses a clever trick called a lookup table (LUT), in effect holding the truth table for the function. For instance, a function of three variables is defined by the 8 rows in its truth table. The LUT consists of 8 bits of memory, along with a multiplexer circuit to select the right value. By storing values in these 8 bits of memory, any 3-input logic function can be implemented.4
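The lookup-table idea can be sketched in a few lines of Python. This is only an illustration of the concept, not the XC2064's actual circuit: the names `make_lut` and `lut_eval` are made up for this example, and treating A as the most significant index bit is an arbitrary assumption.

```python
from itertools import product

def make_lut(func):
    """Build the 8-entry truth table for a 3-input boolean function."""
    # Rows are generated in the order (A, B, C) = 000, 001, ..., 111.
    return [func(a, b, c) & 1 for a, b, c in product((0, 1), repeat=3)]

def lut_eval(table, a, b, c):
    """Evaluate the function by selecting one stored bit, as a multiplexer would."""
    # Assumption for this sketch: A is the most significant index bit.
    return table[(a << 2) | (b << 1) | c]

# Two different logic functions, each held in the same kind of 8-bit table.
majority = make_lut(lambda a, b, c: (a & b) | (a & c) | (b & c))
and3 = make_lut(lambda a, b, c: a & b & c)
```

Changing the function means changing only the eight stored bits; the selection logic in `lut_eval` stays the same.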

The interconnect

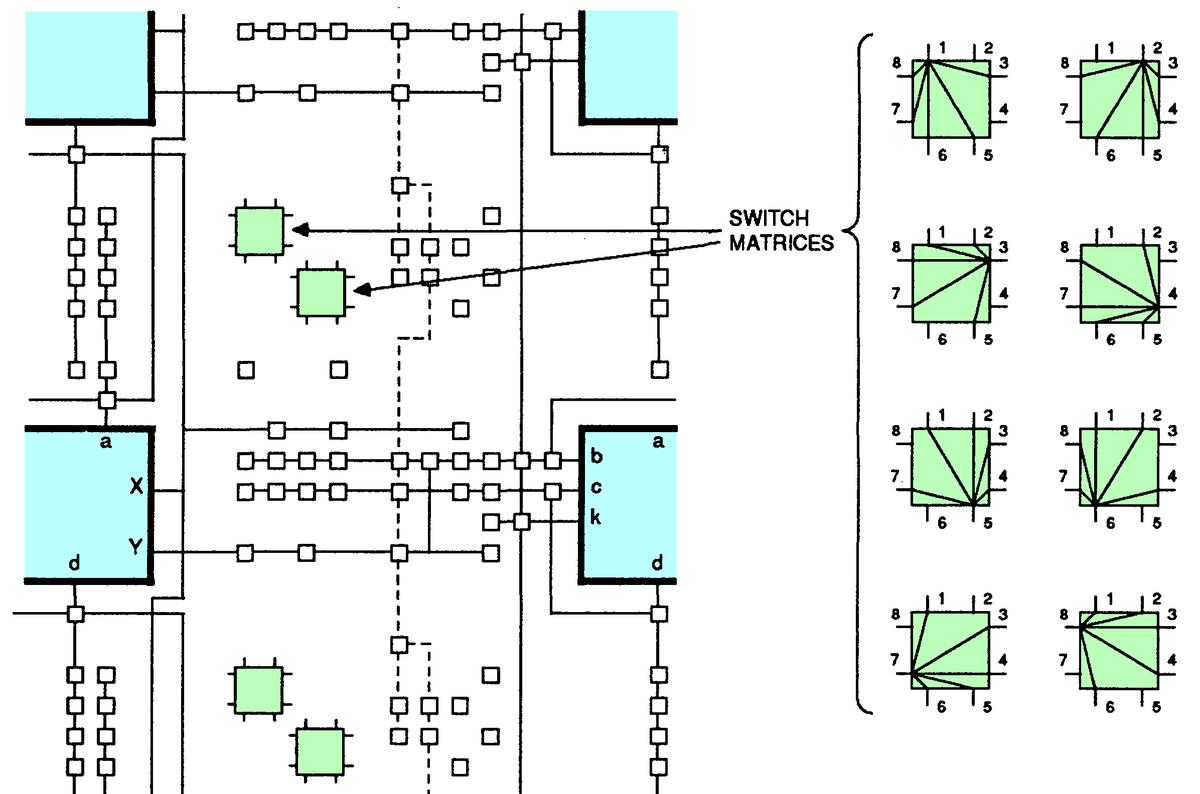

The second key piece of the FPGA is the interconnect, which can be programmed to connect the CLBs in different ways. The interconnect is fairly complicated, but a rough description is that there are several horizontal and vertical line segments between each CLB. Interconnect points allow connections to be made between a horizontal line and a vertical line, allowing arbitrary paths to be created. More complex connections are done via "switch matrices". Each switch matrix has 8 pins, which can be wired together in (almost) arbitrary ways.

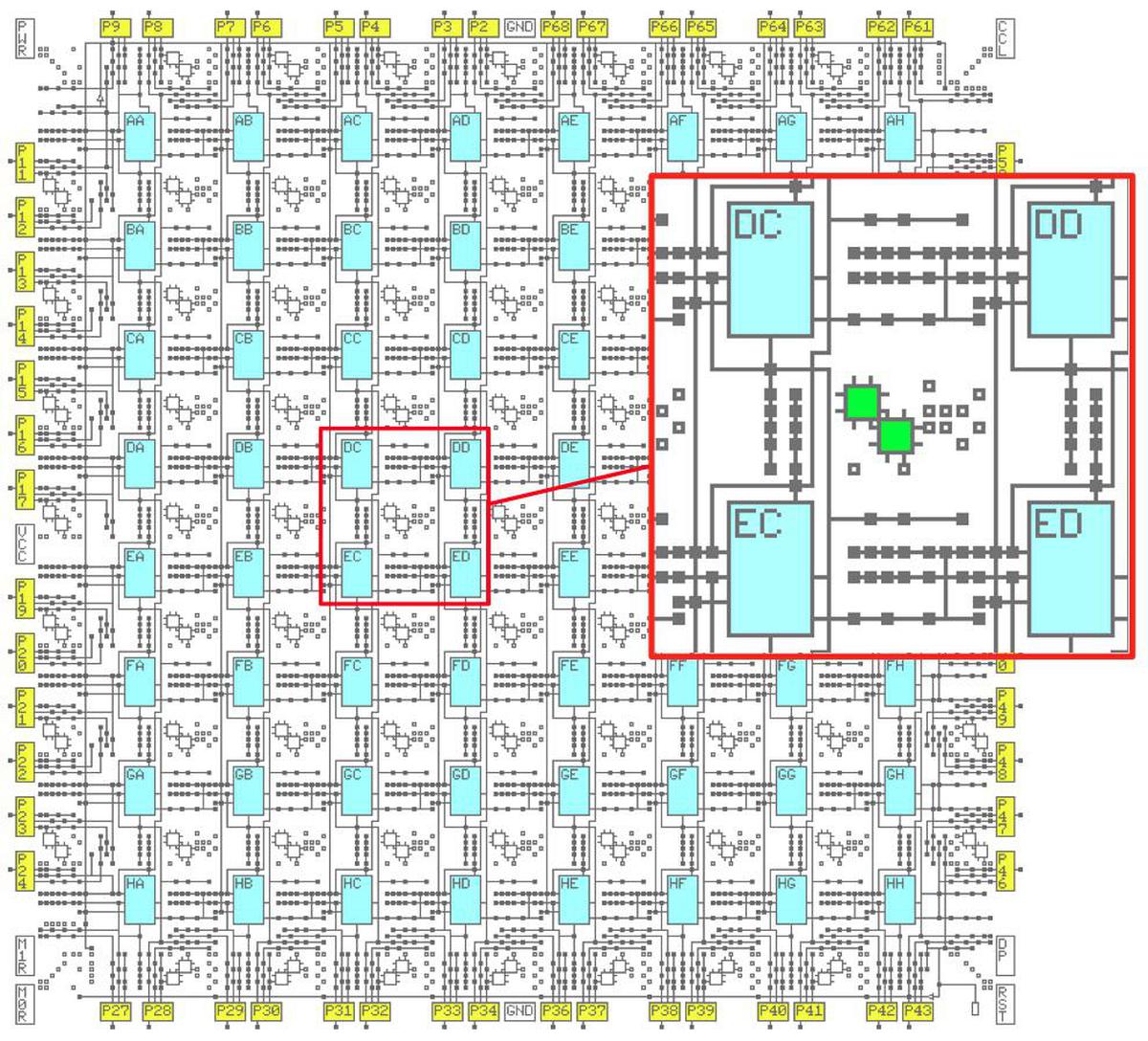

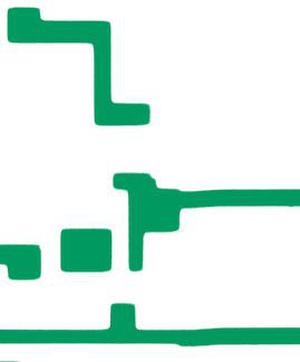

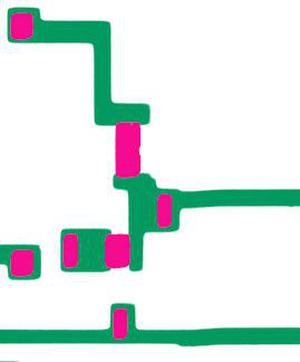

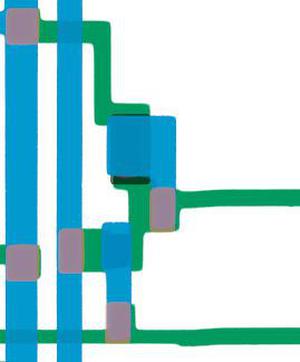

The diagram below shows the interconnect structure of the XC2064, providing connections to the logic blocks (cyan) and the I/O pins (yellow). The inset shows a closeup of the routing features. The green boxes are the 8-pin switch matrices, while the small squares are the programmable interconnection points.

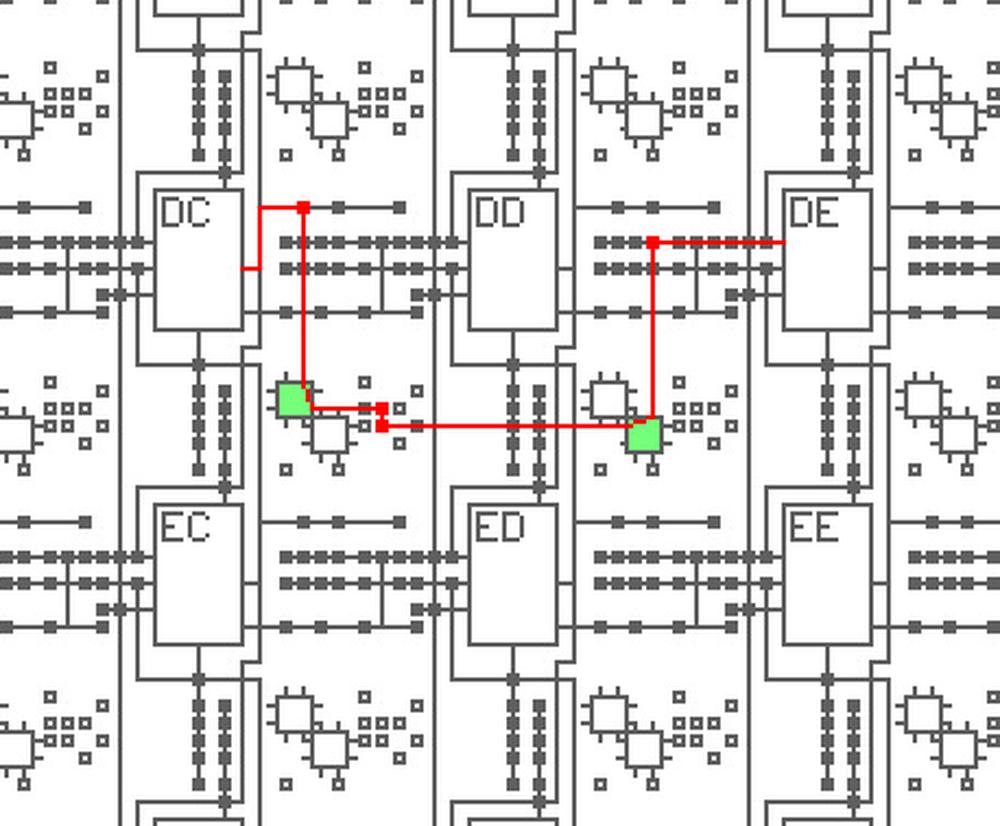

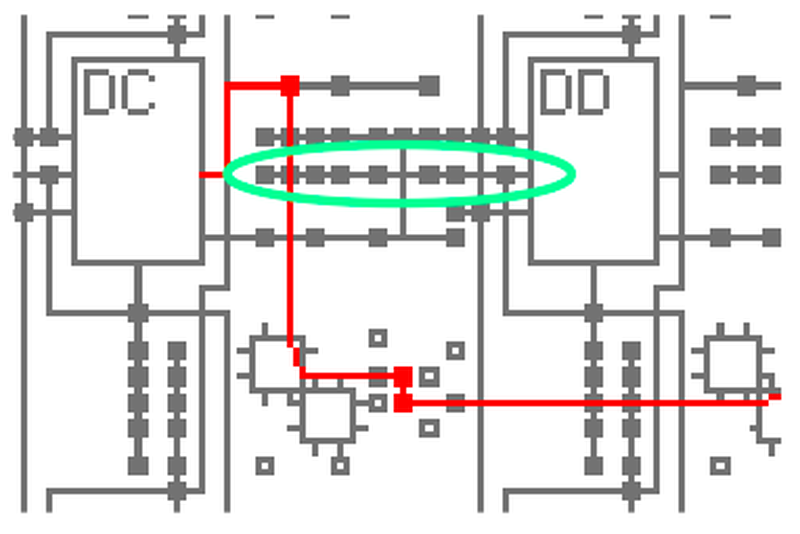

The interconnect can wire, for example, an output of block DC to an input of block DE, as shown below. The red line indicates the routing path and the small red squares indicate activated routing points. After leaving block DC, the signal is directed by the first routing point to an 8-pin switch (green) which directs it to two more routing points and another 8-pin switch. (The unused vertical and horizontal paths are not shown.) Note that routing is fairly complex; even this short path used four routing points and two switches.

The screenshot below shows what routing looks like in the XACT program. The yellow lines indicate routing between the logic blocks. As more signals are added, the challenge is to route efficiently without the paths colliding. The XACT software package performs automatic routing, but routes can also be edited manually.

The implementation

The remainder of this post discusses the internal circuitry of the XC2064, reverse-engineered from die photos.5 Be warned that this assumes some familiarity with the XC2064.

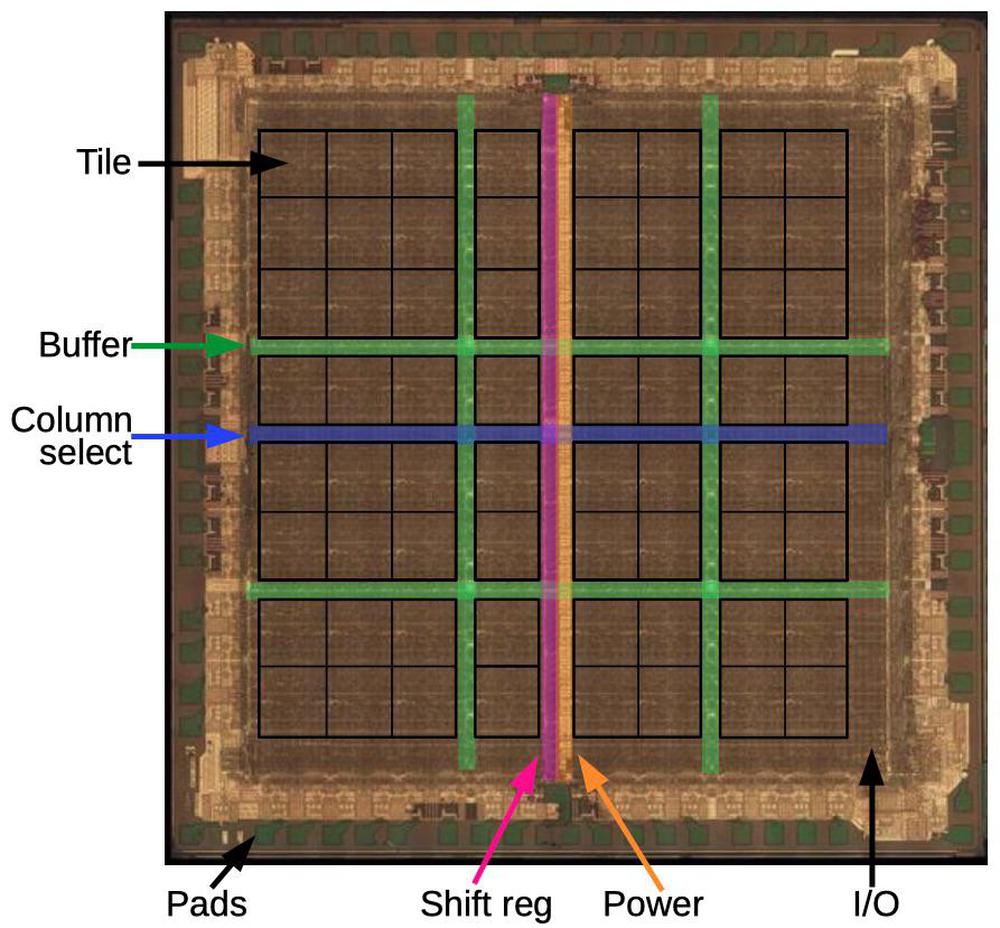

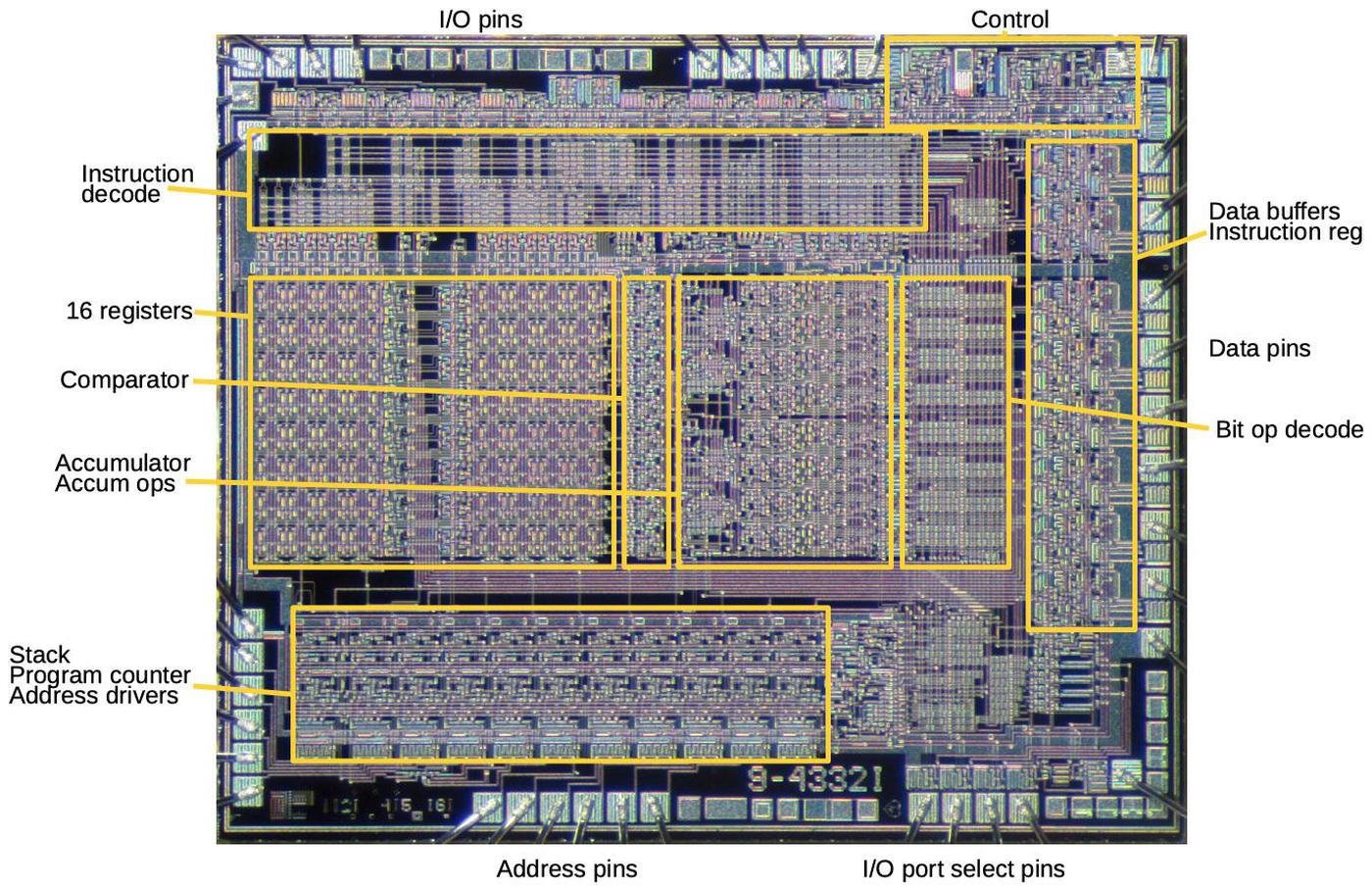

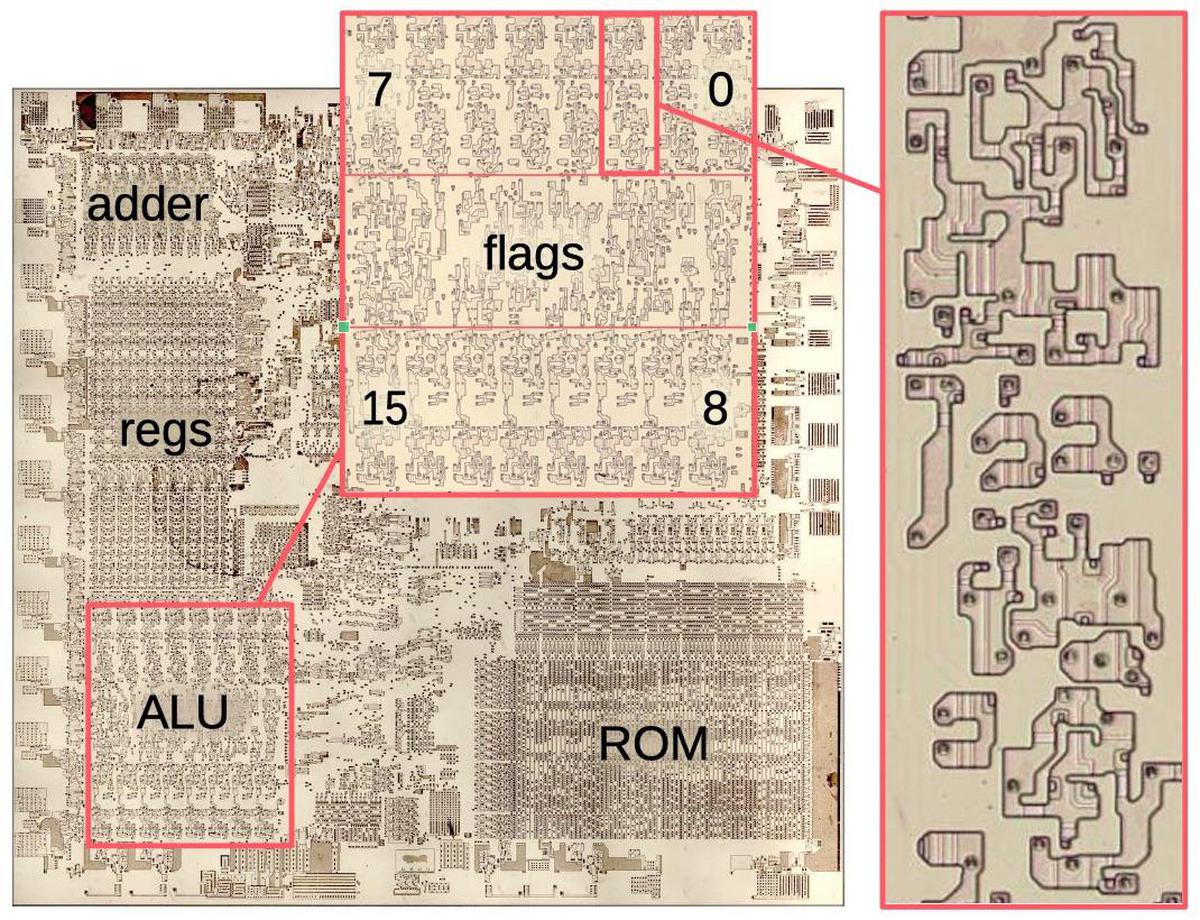

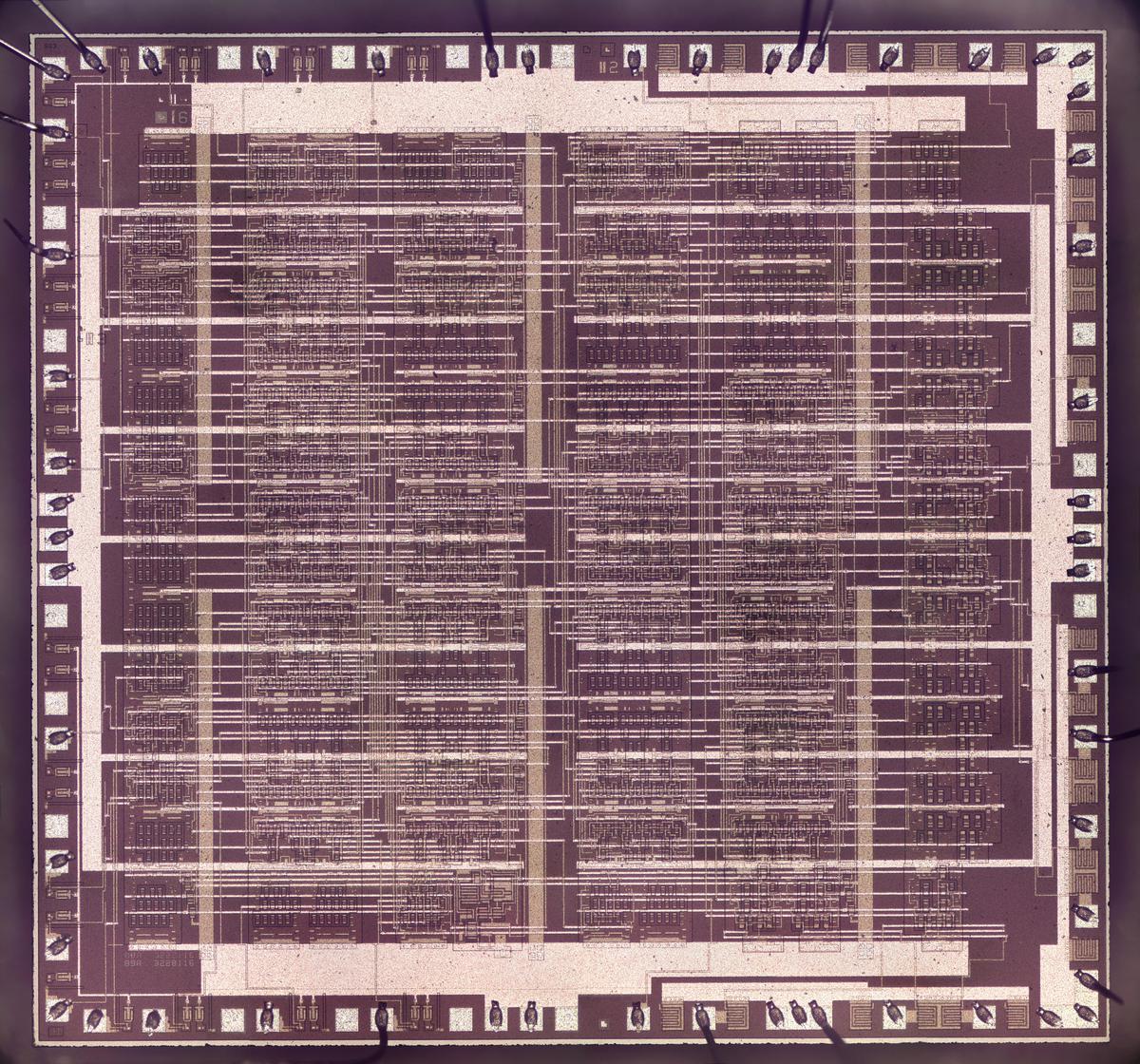

The die photo below shows the layout of the XC2064 chip. The main part of the FPGA is the 8×8 grid of tiles; each tile holds one logic block and the neighboring routing circuitry. Although FPGA diagrams show the logic blocks (CLBs) as separate entities from the routing that surrounds them, that is not how the FPGA is implemented. Instead, each logic block and the neighboring routing are implemented as a single entity, a tile. (Specifically, the tile includes the routing above and to the left of each CLB.)

Around the edges of the integrated circuit, I/O blocks provide communication with the outside world. They are connected to the small green square pads, which are wired to the chip's external pins. The die is divided by buffers (green): two vertical and two horizontal. These buffers amplify signals that travel long distances across the circuit, reducing delay. The vertical shift register (pink) and horizontal column select circuit (blue) are used to load the bitstream into the chip, as will be explained below.

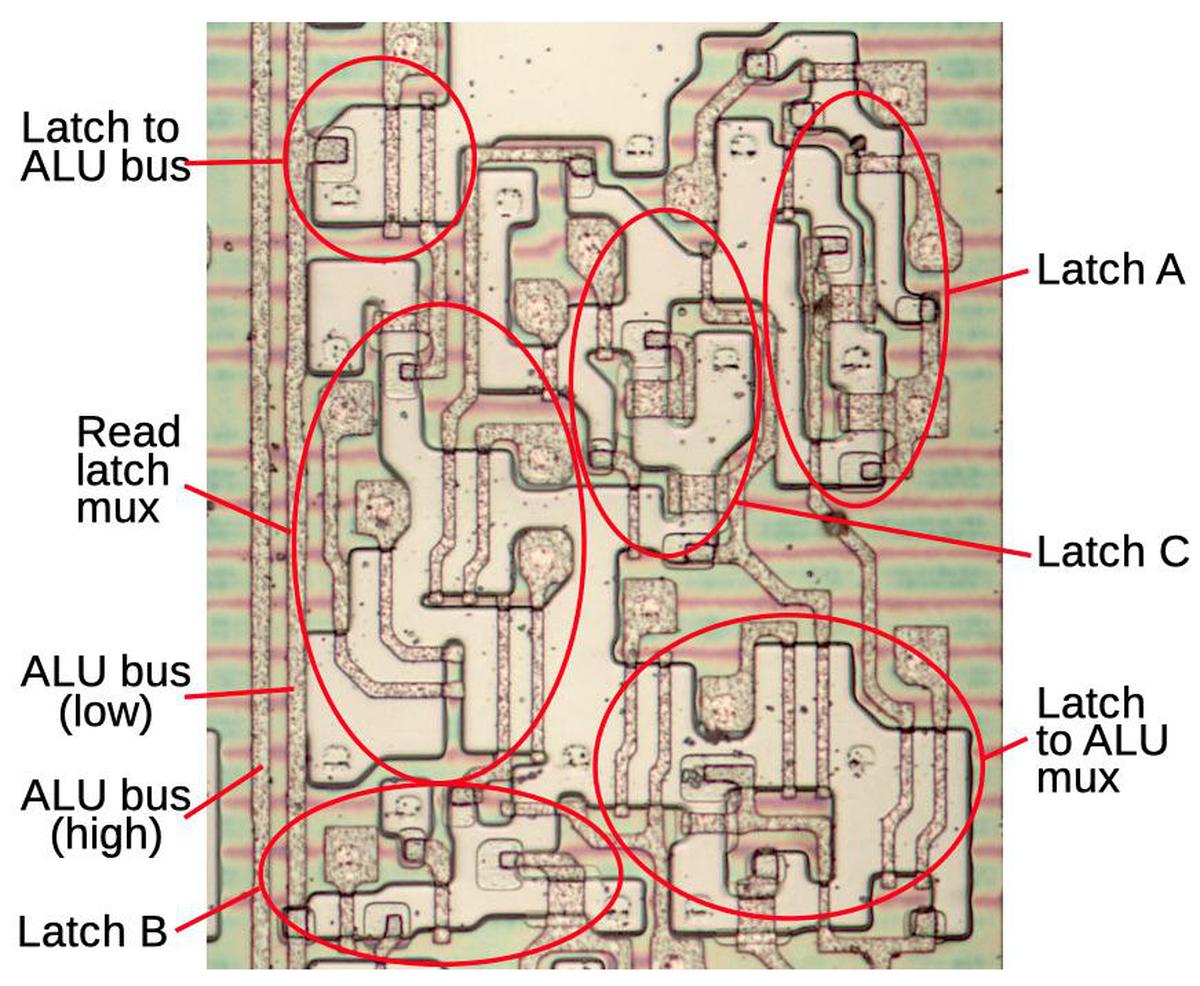

Inside a tile

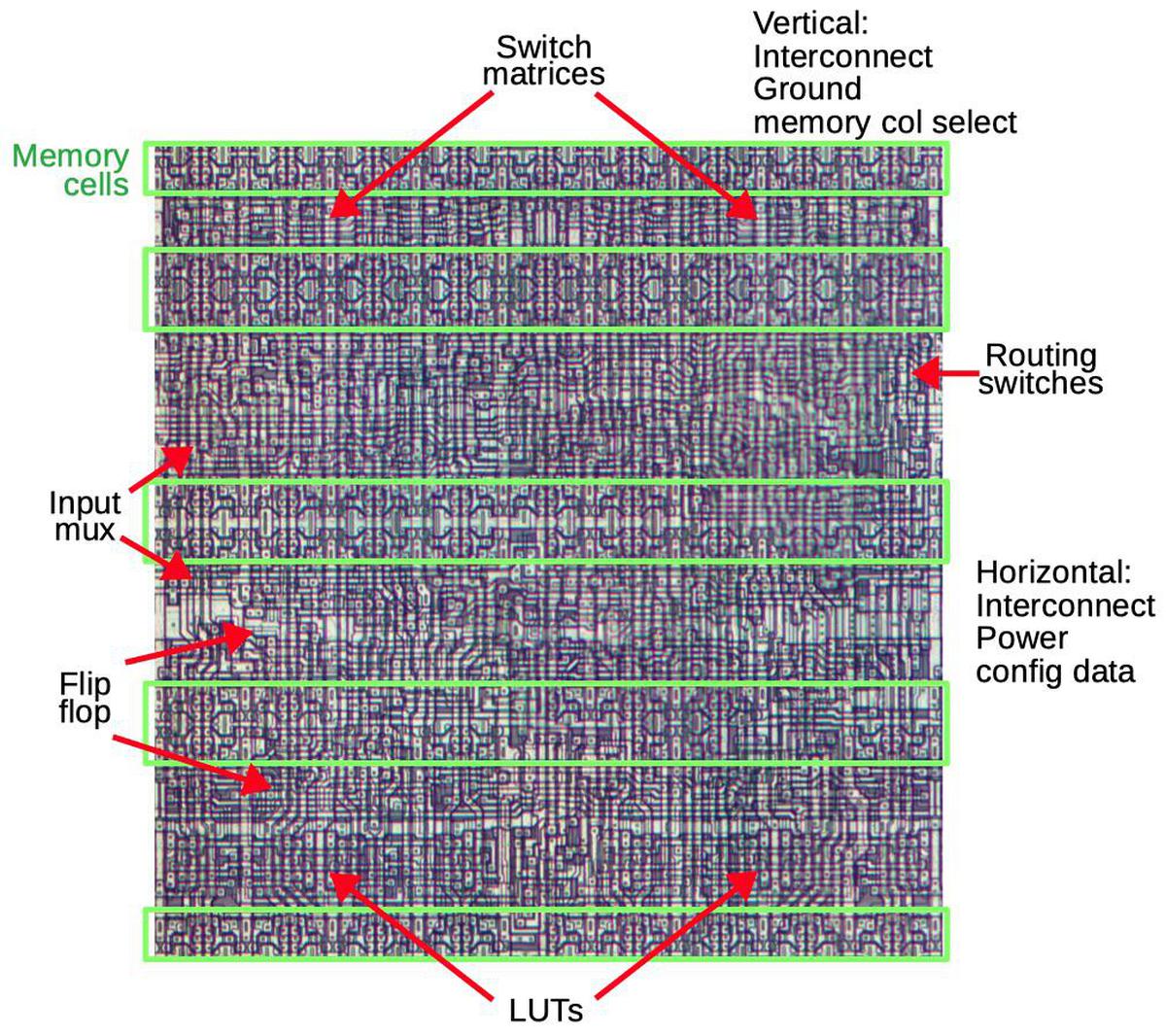

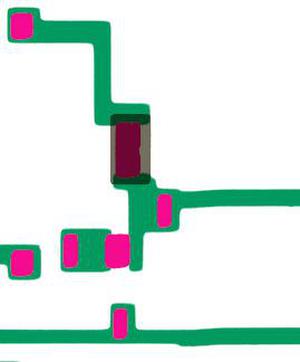

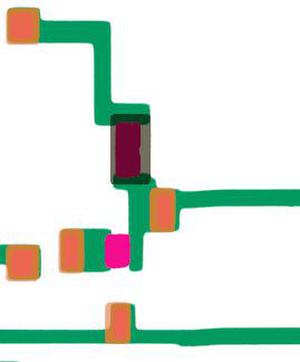

The diagram below shows the layout of a single tile in the XC2064; the chip contains 64 of these tiles packed together as shown above. About 40% of each tile is taken up by the memory cells (green) that hold the configuration bits. The top third (roughly) of the tile handles the interconnect routing through two switch matrices and numerous individual routing switches. Below that is the logic block. Key parts of the logic block are multiplexers for the input, the flip flop, and the lookup tables (LUTs). The tile is connected to neighboring tiles through vertical and horizontal wiring for interconnect, power and ground. The configuration data bits are fed into the memory cells horizontally, while vertical signals select a particular column of memory cells to load.

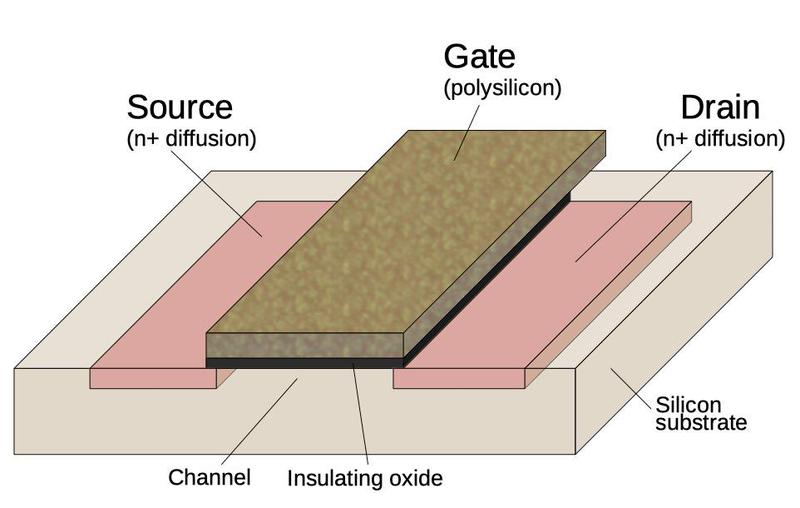

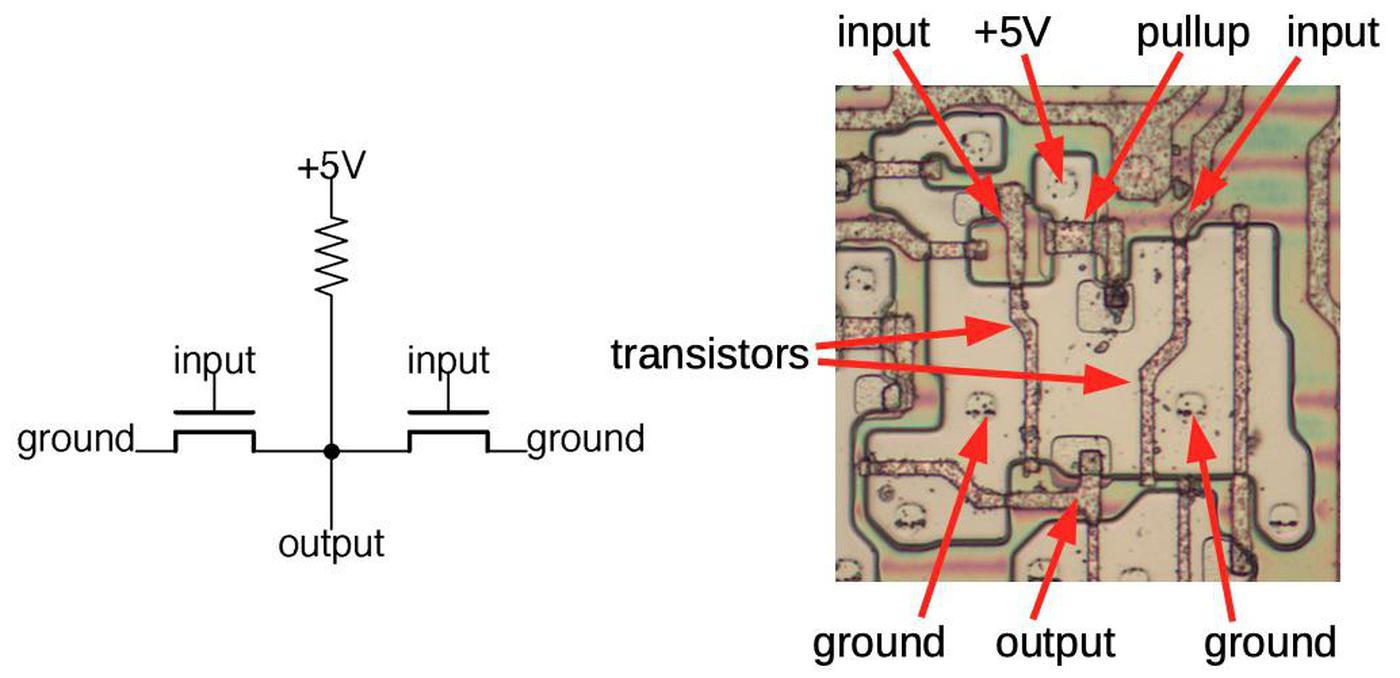

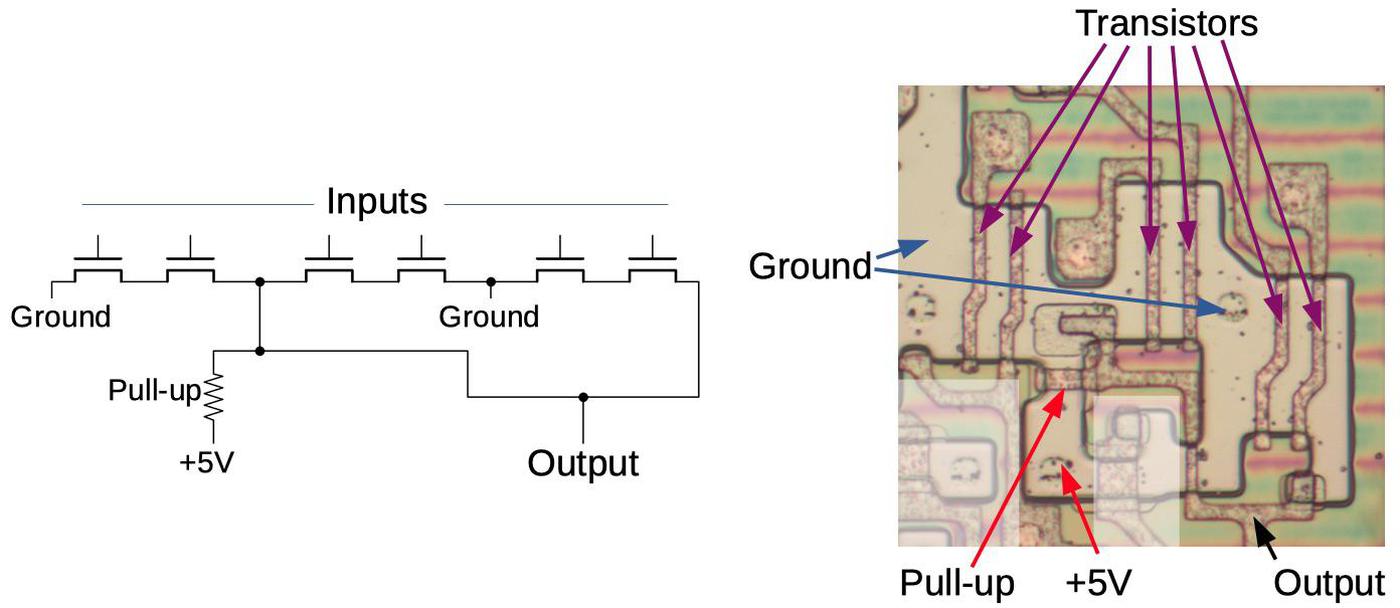

Transistors

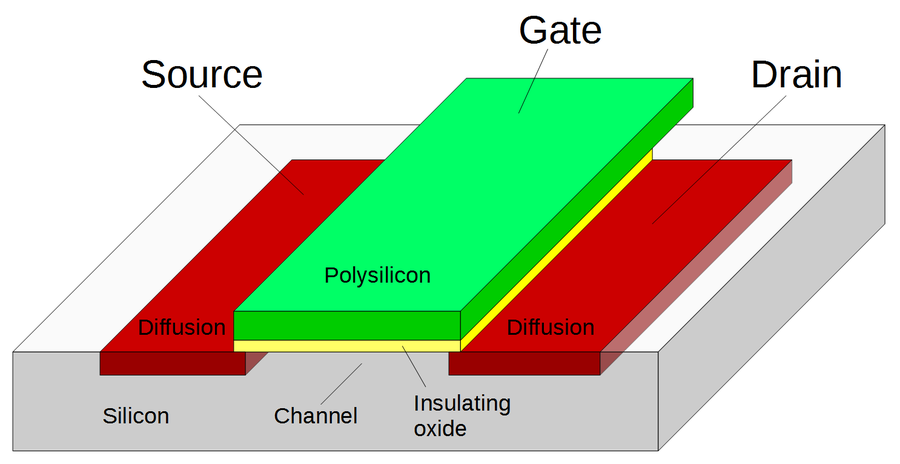

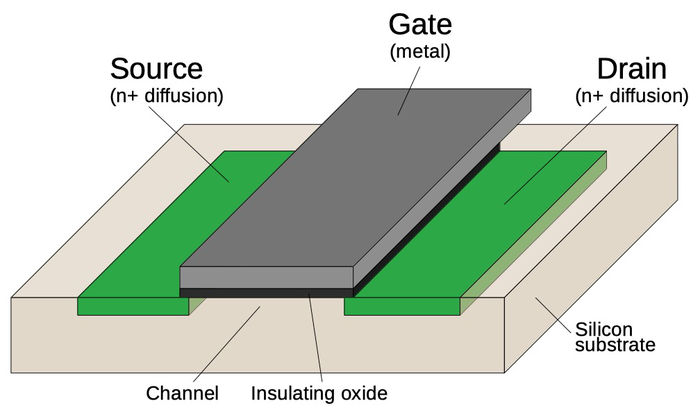

The FPGA is implemented with CMOS logic, built from NMOS and PMOS transistors. Transistors have two main roles in the FPGA. First, they can be combined to form logic gates. Second, transistors are used as switches that signals pass through, for instance to control routing. In this role, the transistor is called a pass transistor. The diagram below shows the basic structure of an MOS transistor. Two regions of silicon are doped with impurities to form the source and drain regions. In between, the gate turns the transistor on or off, controlling current flow between the source and drain. The gate is made of a special type of silicon called polysilicon, and is separated from the underlying silicon by a thin insulating oxide layer. Above this, two layers of metal provide wiring to connect the circuitry.

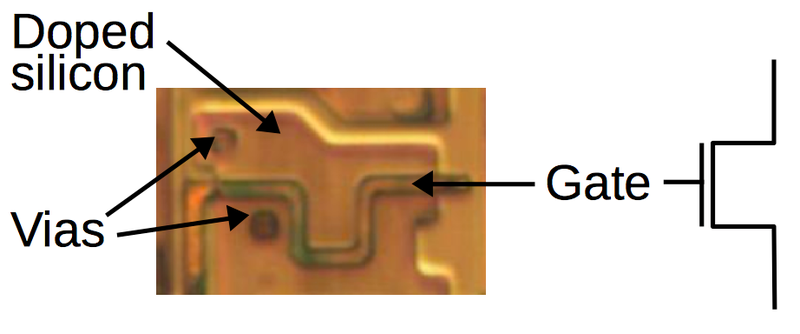

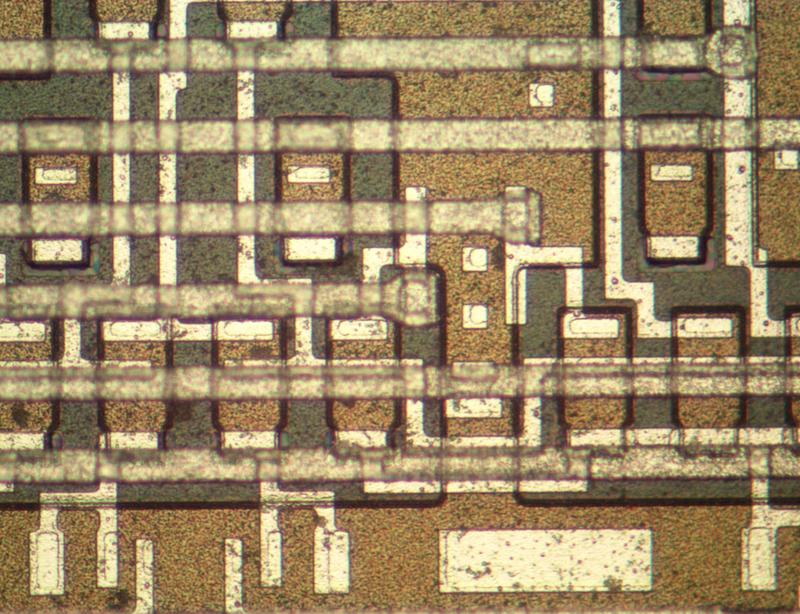

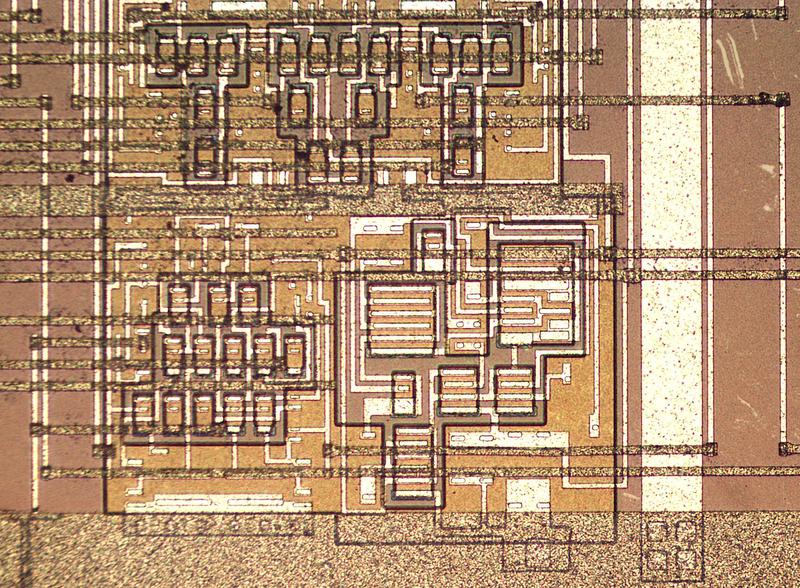

The die photo closeup below shows what a transistor looks like under a microscope. The polysilicon gate is the snaking line between the two doped silicon regions. The circles are vias, connections between the silicon and the metal layer (which has been removed for this photo).

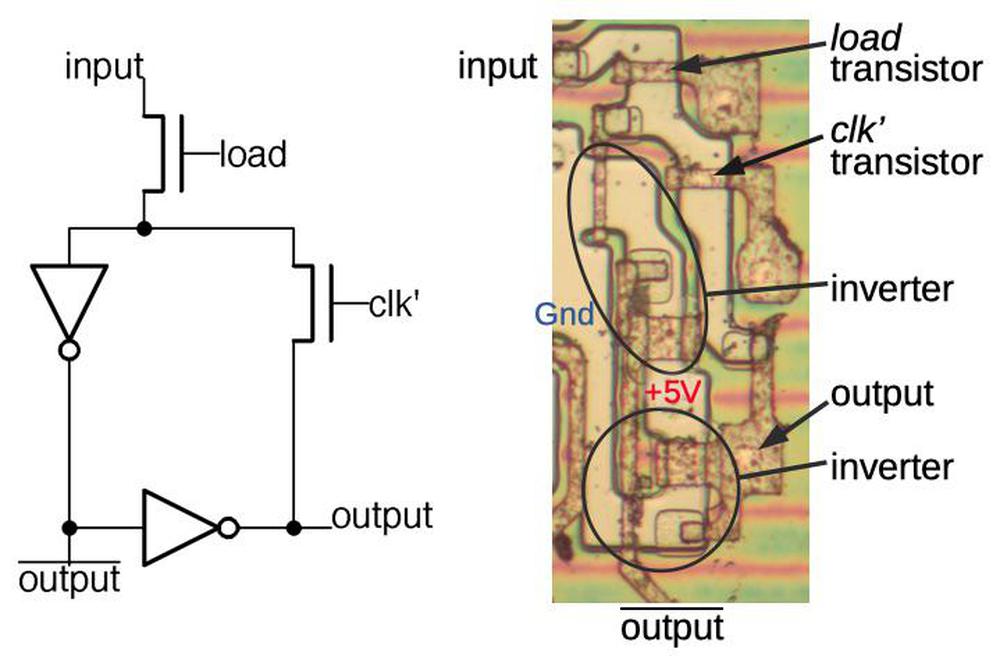

The bitstream and configuration memory

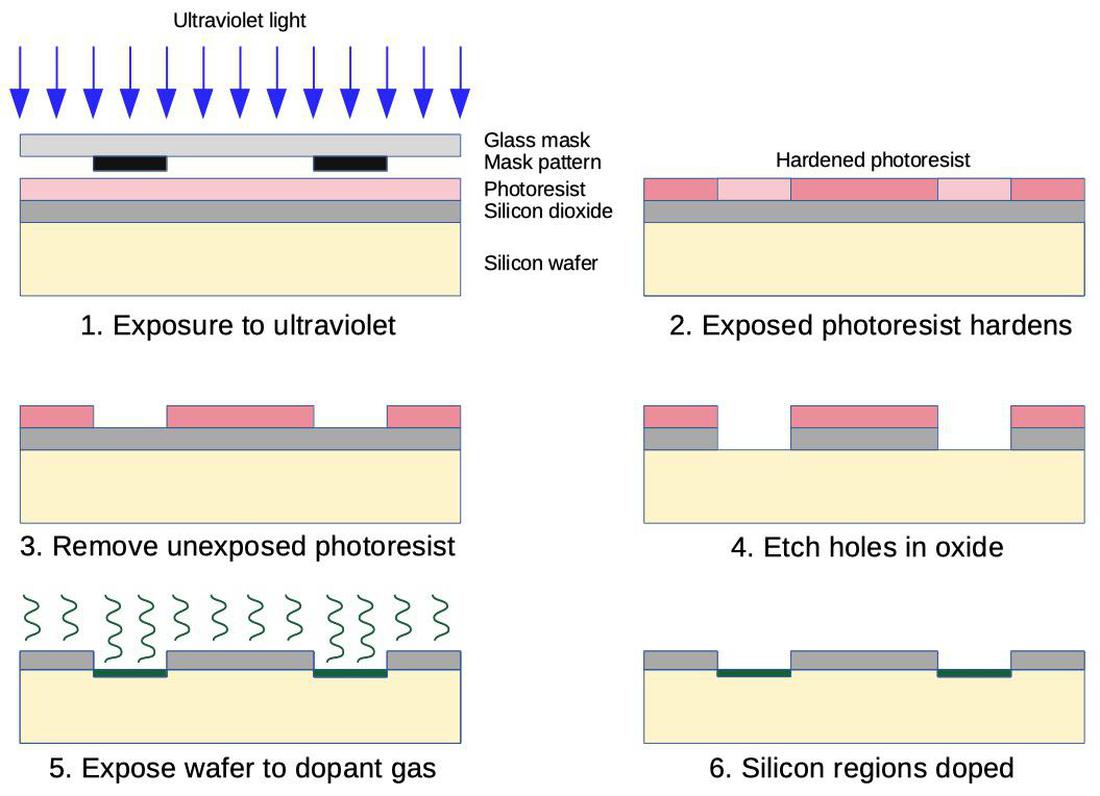

The configuration information in the XC2064 is stored in configuration memory cells. Instead of using a block of RAM for storage, the FPGA's memory is distributed across the chip in a 160×71 grid, ensuring that each bit is next to the circuitry that it controls. The diagram below shows how the configuration bitstream is loaded into the FPGA. The bitstream is fed into the shift register that runs down the center of the chip (pink). Once 71 bits have been loaded into the shift register, the column select circuit (blue) selects a particular column of memory and the bits are loaded into this column in parallel. Then, the next 71 bits are loaded into the shift register and the next column to the left becomes the selected column. This process repeats for all 160 columns of the FPGA, loading the entire bitstream into the chip. Using a shift register avoids bulky memory addressing circuitry.
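The column-by-column loading can be sketched as follows. This is a simplified model under stated assumptions: the input is a flat list of raw configuration bits with any file formatting already stripped, loading starts at the rightmost column, and the bit order within the shift register is taken as top to bottom. The name `load_bitstream` is hypothetical.

```python
ROWS = 71      # bits held by the shift register (one column of memory cells)
COLUMNS = 160  # number of memory columns across the chip

def load_bitstream(bits):
    """Distribute a flat list of configuration bits into a ROWS x COLUMNS grid."""
    if len(bits) != ROWS * COLUMNS:
        raise ValueError(f"expected {ROWS * COLUMNS} bits, got {len(bits)}")
    grid = [[0] * COLUMNS for _ in range(ROWS)]
    for frame in range(COLUMNS):
        # Shift in the next 71 bits.
        shift_register = bits[frame * ROWS:(frame + 1) * ROWS]
        # Assumption: the first frame lands in the rightmost column,
        # and each later frame goes one column further left.
        column = COLUMNS - 1 - frame
        for row, bit in enumerate(shift_register):
            grid[row][column] = bit  # the whole column is written in parallel
    return grid
```

The point of the sketch is that there is no addressing step: the position of a bit in the stream alone determines which memory cell it ends up in.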

The important point is that the bitstream is distributed across the chip exactly as it appears in the file: the layout of bits in the bitstream file matches the physical layout on the chip. As will be shown below, each bit is stored in the FPGA next to the circuit it controls. Thus, the bitstream file format is directly determined by the layout of the hardware circuits. For instance, when there is a gap between FPGA tiles because of the buffer circuitry, the same gap appears in the bitstream. The content of the bitstream is not designed around software concepts such as fields or data tables or configuration blocks. Understanding the bitstream depends on thinking of it in hardware terms, not in software terms.7

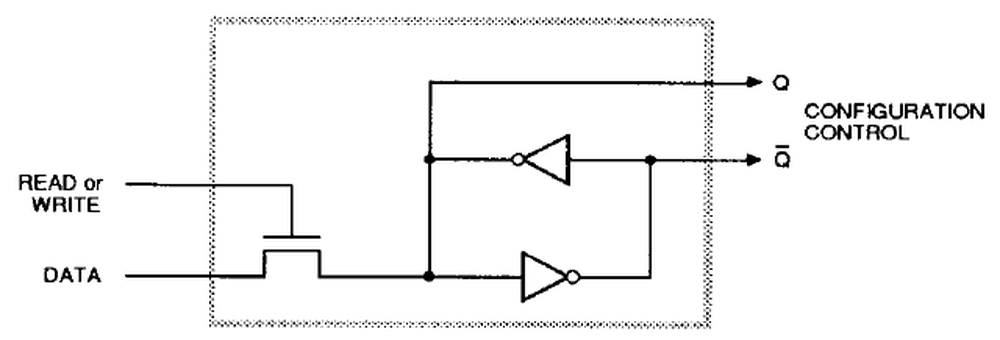

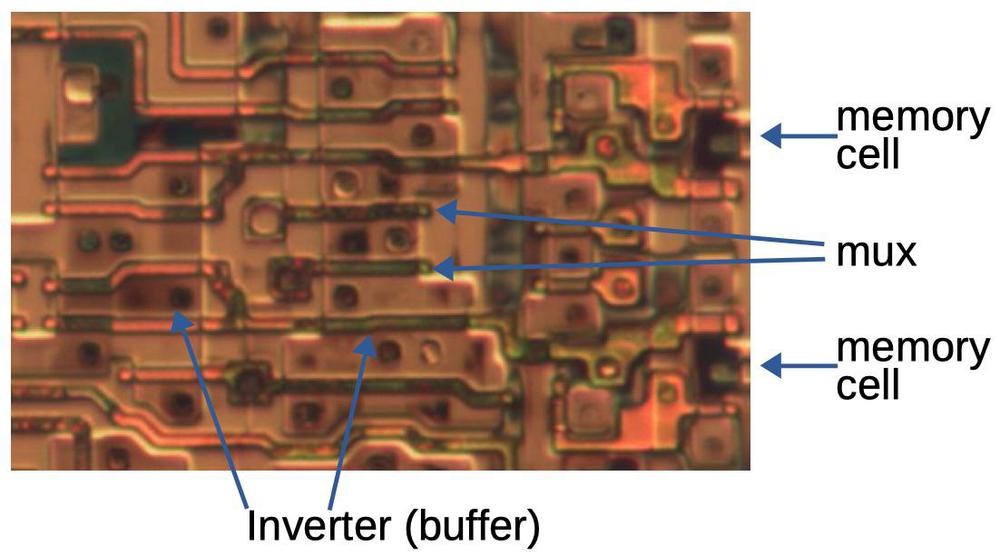

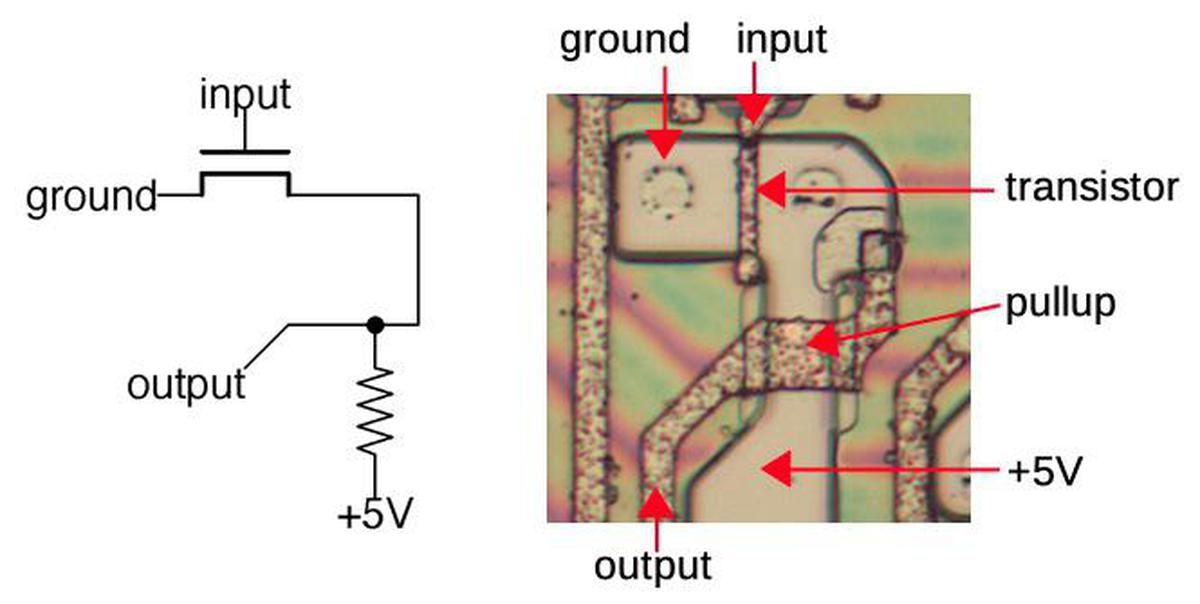

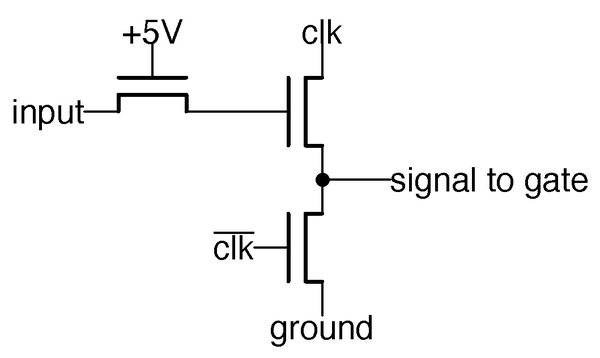

Each bit of configuration memory is implemented as shown below.8 Each memory cell consists of two inverters connected in a loop. This circuit has two stable states so it can store a bit: either the top inverter is 1 and the bottom is 0 or vice versa. To write to the cell, the pass transistor on the left is activated, passing the data signal through. The signal on the data line simply overpowers the inverters, writing the desired bit. (You can also read the configuration data out of the FPGA using the same path.) The Q and inverted Q outputs control the desired function in the FPGA, such as closing a routing connection, providing a bit for a lookup table, or controlling the flip flops. (In most cases, just the Q output is used.)

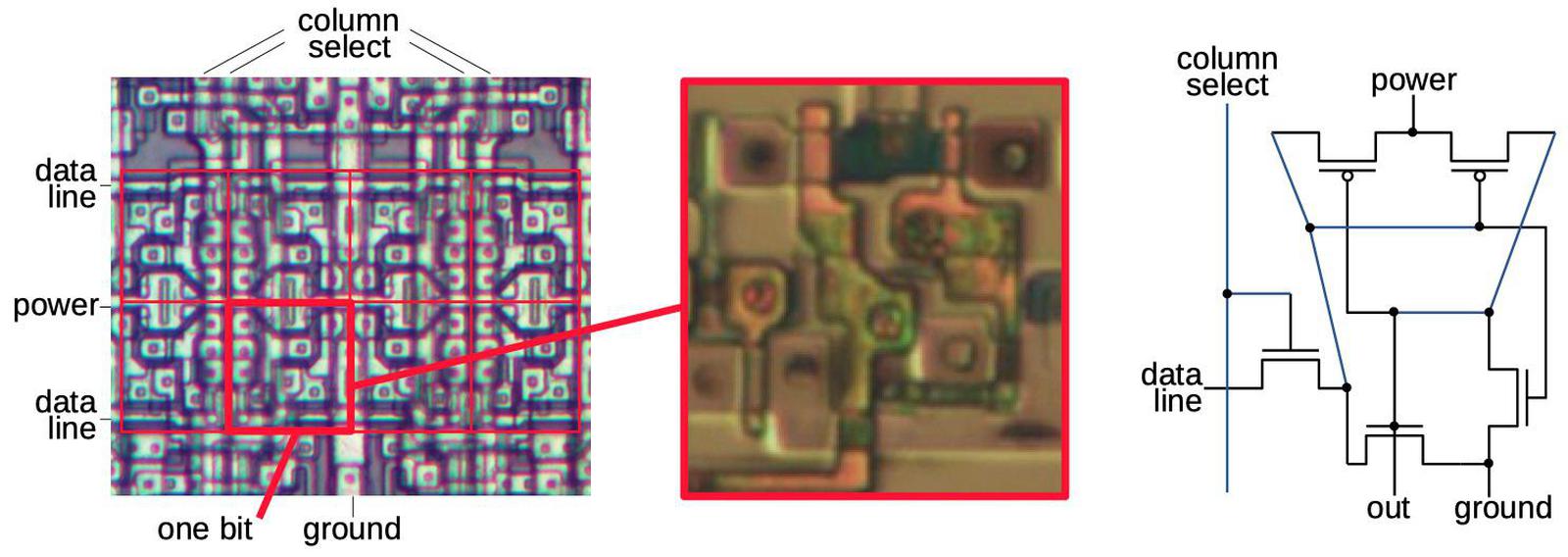

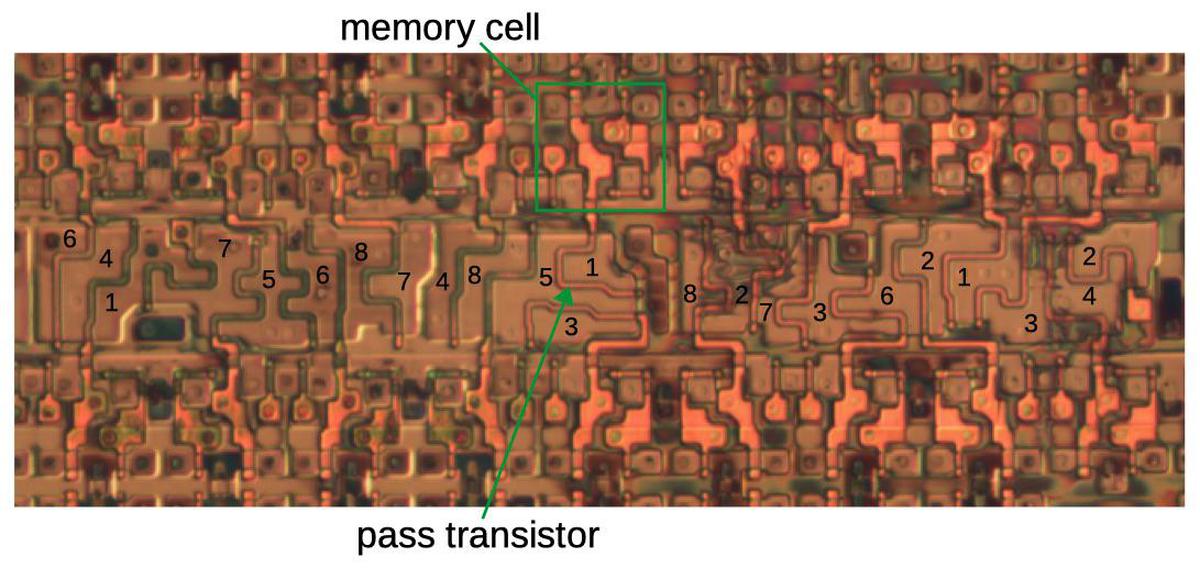

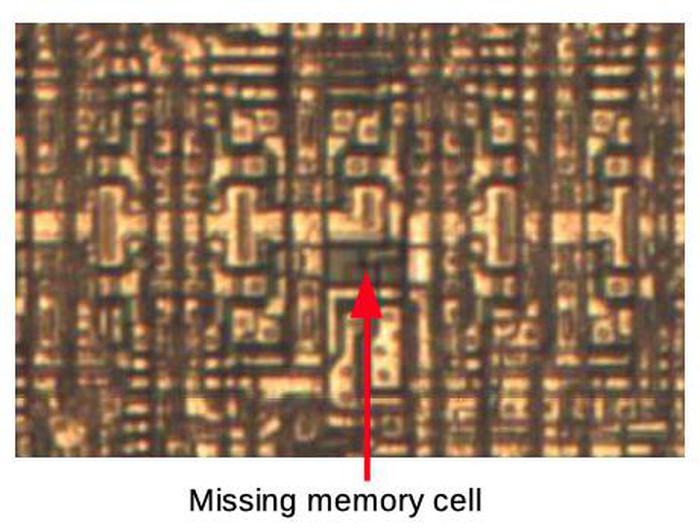

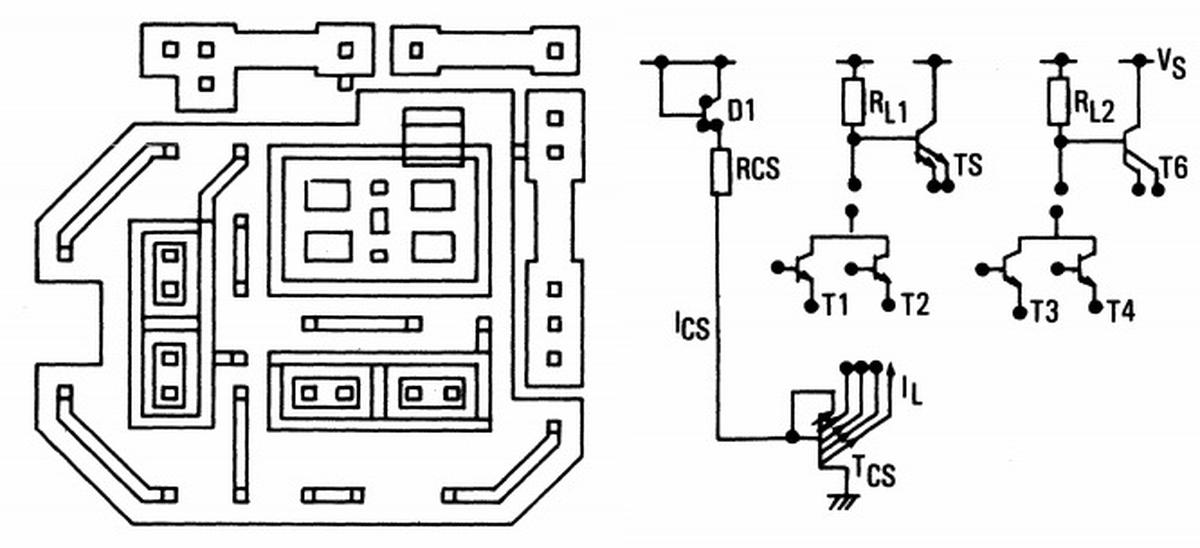

The diagram below shows the physical layout of memory cells. The photo on the left shows eight memory cells, with one cell highlighted. Each horizontal data line feeds all the memory cells in that row. Each column select line selects all the memory cells in that column for writing. The middle photo zooms in on the silicon and polysilicon transistors for one memory cell. The metal layers were removed to expose the underlying transistors. The metal layers wire together the transistors; the circles are connections (vias) between the silicon or polysilicon and the metal. The schematic shows how the five transistors are connected; the schematic's physical layout matches the photo. Two pairs of transistors form two CMOS inverters, while the pass transistor in the lower left provides access to the cell.

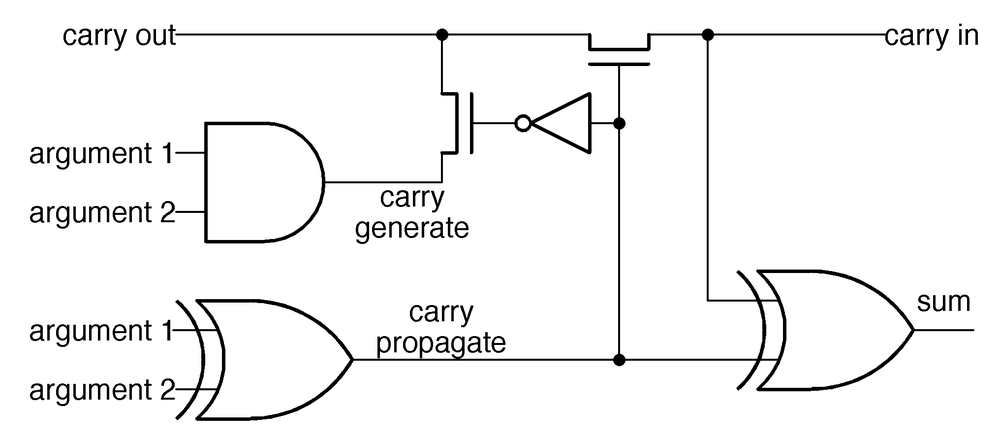

Lookup table multiplexers

As explained earlier, the FPGA implements arbitrary logic functions by using a lookup table. The diagram below shows how a lookup table is implemented in the XC2064. The eight values on the left are stored in eight memory cells. Four multiplexers select one of each pair of values, depending on the value of the A input; if A is 0, the top value is selected and if A is 1, the bottom value is selected. Next, a larger multiplexer selects one of the four values based on B and C. The result is the desired value, in this case A XOR B XOR C. By putting different values in the lookup table, the logic function can be changed as desired.
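The two-stage selection described here can be modeled roughly as below. The ordering of the eight stored bits and the way B and C are combined into a 4-way select are assumptions for this sketch; the real chip's ordering follows its physical layout. The names `lut_mux_tree` and `XOR3_CELLS` are made up for illustration.

```python
def lut_mux_tree(cells, a, b, c):
    """Select one of 8 stored bits using four 2-way muxes and one 4-way mux."""
    # Stage 1: four 2-way multiplexers; A = 0 picks the top value of each pair.
    stage1 = [cells[2 * i + a] for i in range(4)]
    # Stage 2: one 4-way multiplexer driven by B and C (assumed order: B high bit).
    return stage1[(b << 1) | c]

# Memory cell contents chosen so the output is A XOR B XOR C.
# Pair i corresponds to (B, C) = (i >> 1, i & 1); within a pair, index 0 is A = 0.
XOR3_CELLS = [0, 1, 1, 0, 1, 0, 0, 1]
```

Swapping `XOR3_CELLS` for a different list of eight bits yields a different 3-input function without touching the multiplexer structure.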

Each multiplexer is implemented with pass transistors. Depending on the control signals, one of the pass transistors is activated, passing that input to the output. The diagram below shows part of the LUT circuitry, multiplexing two of the bits. At the right are two of the memory cells. Each bit goes through an inverter to amplify it, and then passes through the multiplexer's pass transistors in the middle, selecting one of the bits.

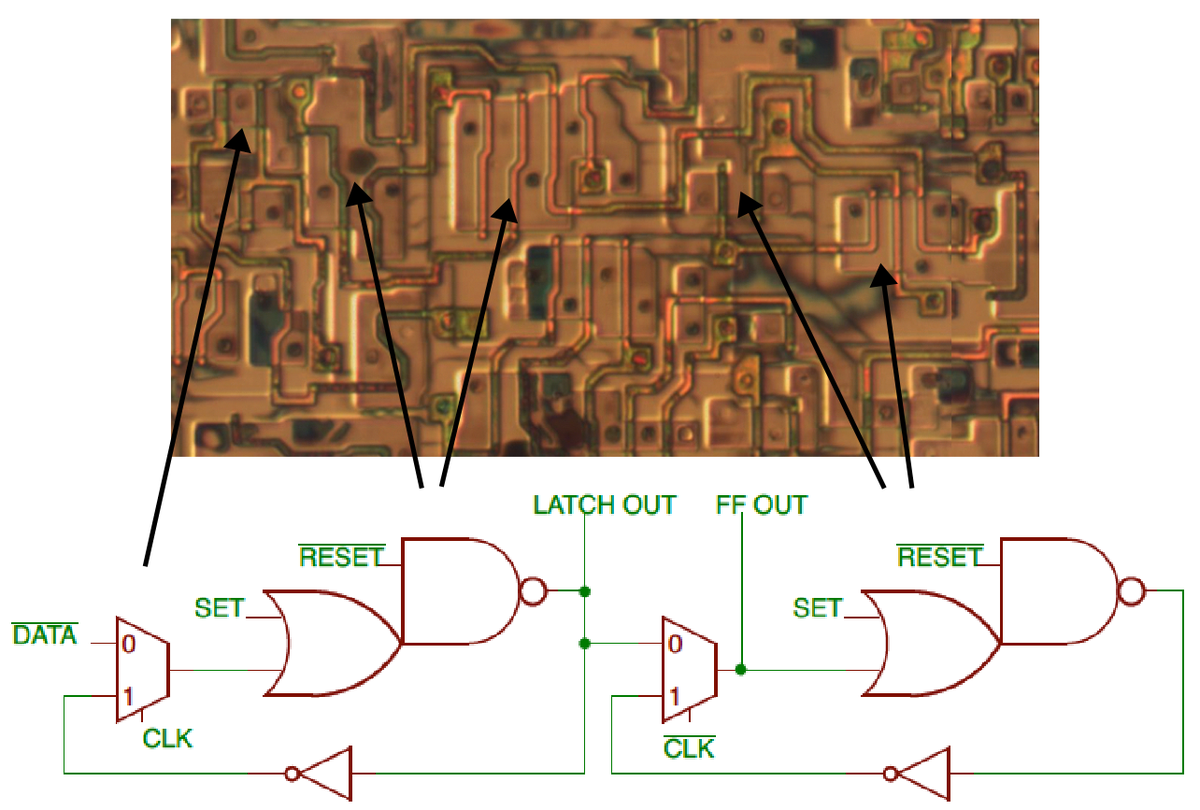

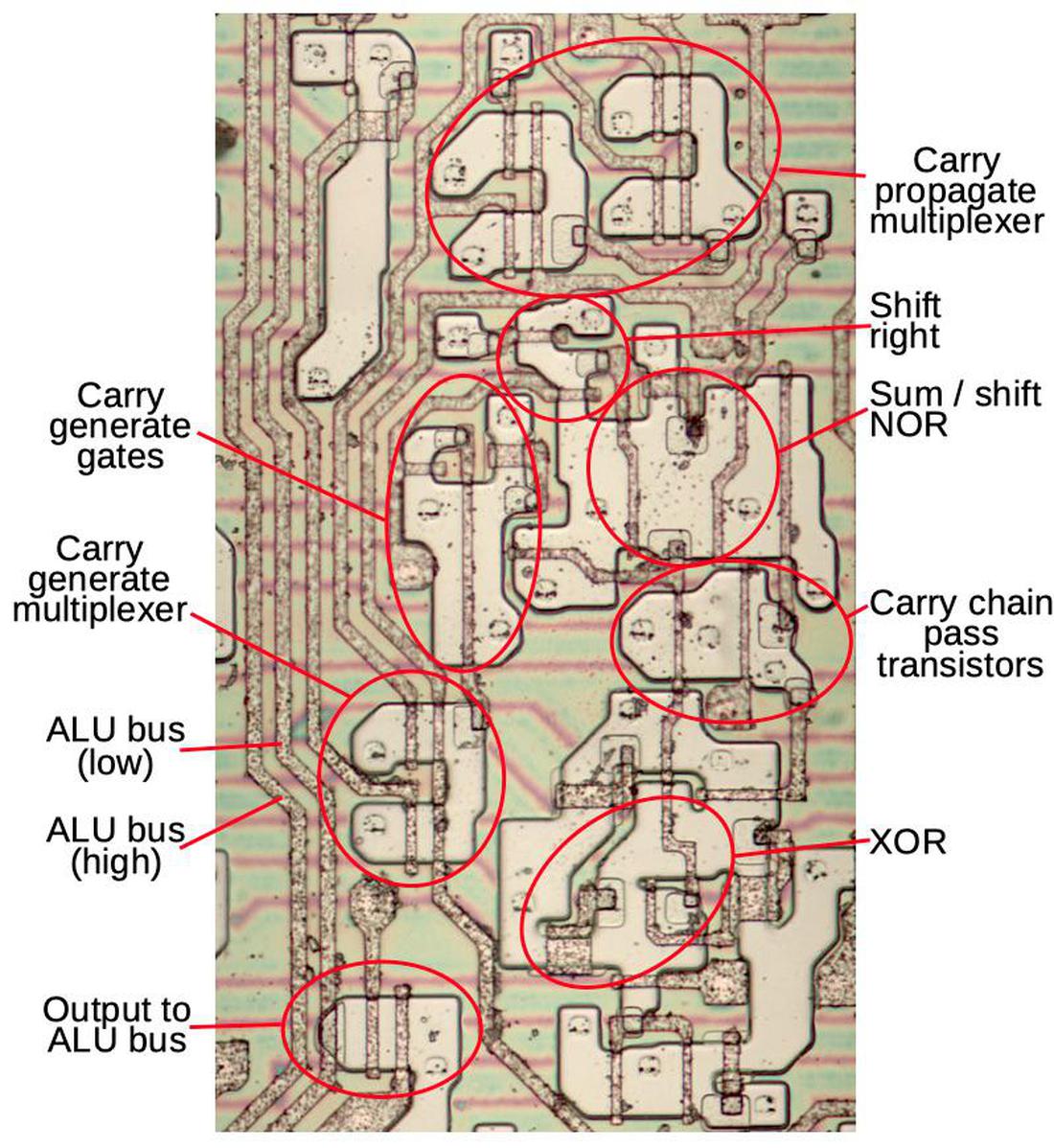

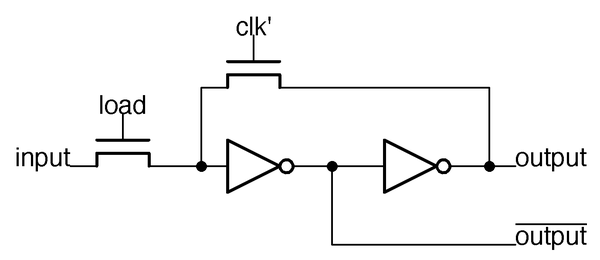

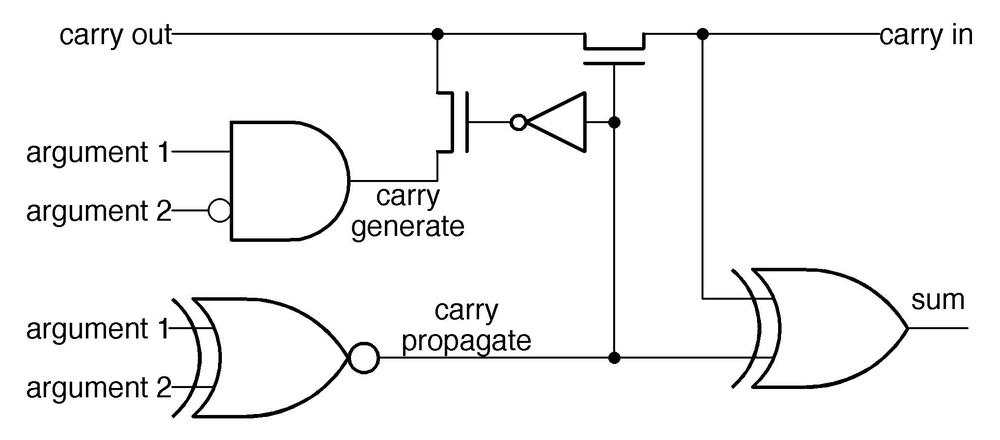

Flip flop

Each CLB contains a flip flop, allowing the FPGA to implement latches, state machines, and other stateful circuits. The diagram below shows the (slightly unusual) implementation of the flip flop. It uses a primary/secondary design. When the clock is low, the first multiplexer lets the data into the primary latch. When the clock goes high, the multiplexer closes the loop for the first latch, holding the value. (The bit is inverted twice going through the OR gate, NAND gate, and inverter, so it is held constant.) Meanwhile, the secondary latch's multiplexer receives the bit from the first latch when the clock goes high (note that the clock is inverted). This value becomes the flip flop's output. When the clock goes low, the secondary's multiplexer closes the loop, latching the bit. Thus, the flip flop is edge-sensitive, latching the value on the rising edge of the clock. The set and reset lines force the flip flop high or low.
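A behavioral sketch of the primary/secondary arrangement shows why the result is edge-sensitive. It models only the two latches and the clock; the set and reset lines and the gate-level details (OR gate, NAND gate, inverter) are left out, and the class name is hypothetical.

```python
class PrimarySecondaryFlipFlop:
    """Two level-sensitive latches that together behave as a rising-edge flip flop."""

    def __init__(self):
        self.primary = 0
        self.secondary = 0

    def update(self, clock, data):
        """Apply the current clock level and data input; return the output."""
        if clock == 0:
            # Clock low: the primary latch is open to the data input,
            # while the secondary latch holds its value.
            self.primary = data
        else:
            # Clock high: the primary latch holds its value,
            # and the secondary latch takes the bit from the primary.
            self.secondary = self.primary
        return self.secondary

ff = PrimarySecondaryFlipFlop()
# (clock, data) pairs; data changes while the clock is high are ignored.
outputs = [ff.update(clk, d) for clk, d in [(0, 1), (1, 1), (1, 0), (0, 0), (1, 0)]]
```

In this model the output changes only on the step where the clock goes from low to high, taking whatever the primary latch captured just before.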

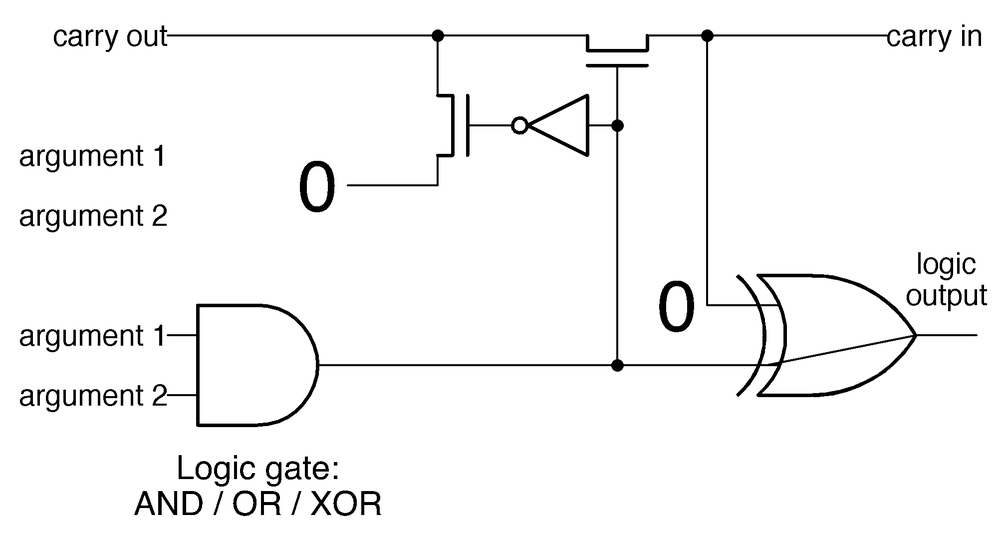

8-pin switch matrix

The switch matrix is an important routing element. Each switch has eight "pins" (two on each side) and can connect almost any combination of pins together. This allows signals to turn, split, or cross over with more flexibility than the individual routing nodes. The diagram below shows part of the routing network between four CLBs (cyan). The switch matrices (green) can be connected with any combination of the connections on the right. Note that each pin can connect to 5 of the 7 other pins. For instance, pin 1 can connect to pin 3 but not pin 2 or 4. This makes the matrix almost a crossbar, with 20 potential connections rather than 28.

The switch matrix is implemented by a row of pass transistors controlled by memory cells above and below. The two sides of the transistor are the two switch matrix pins that can be connected by that transistor. Thus, each switch matrix has 20 associated control bits;9 two matrices per tile yields 40 control bits per tile. The photo below indicates one of the memory cells, connected to the long squiggly gate of the pass transistor below. This transistor controls the connection between pin 5 and pin 1. Thus, the bit in the bitstream corresponding to that memory cell controls the switch connection between pin 5 and pin 1. Likewise, the other memory cells and their associated transistors control other switch connections. Note that the ordering of these connections follows no particular pattern; consequently, the mapping between bitstream bits and the switch pins appears random.
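The connection counts can be checked with a short script. The pin pairs below are transcribed from the switch matrix #1 entries in the bitstream table in the notes; they use that table's pin numbering, which may not match the pin labels in the diagram for this section. The variable names are made up for this sketch.

```python
from collections import Counter
from itertools import combinations

# Pin pairs for switch matrix #1, as listed in the bitstream table in the notes.
MATRIX_1_PAIRS = [
    (3, 5), (5, 8), (2, 8), (3, 4), (2, 4), (1, 2), (1, 3), (1, 5), (1, 4), (4, 8),
    (4, 6), (2, 7), (1, 7), (2, 6), (3, 6), (7, 8), (3, 7), (6, 8), (5, 6), (5, 7),
]

# How many other pins each pin can reach.
degree = Counter(pin for pair in MATRIX_1_PAIRS for pin in pair)

# A full crossbar would offer every pair of the 8 pins.
all_pairs = set(combinations(range(1, 9), 2))
missing = sorted(all_pairs - set(MATRIX_1_PAIRS))

print(len(MATRIX_1_PAIRS), "of", len(all_pairs), "possible connections")
print("connections per pin:", dict(sorted(degree.items())))
print("pairs with no pass transistor:", missing)
```

Each pass transistor corresponds to one pair in the list, so the length of the list is also the number of control bits per matrix.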

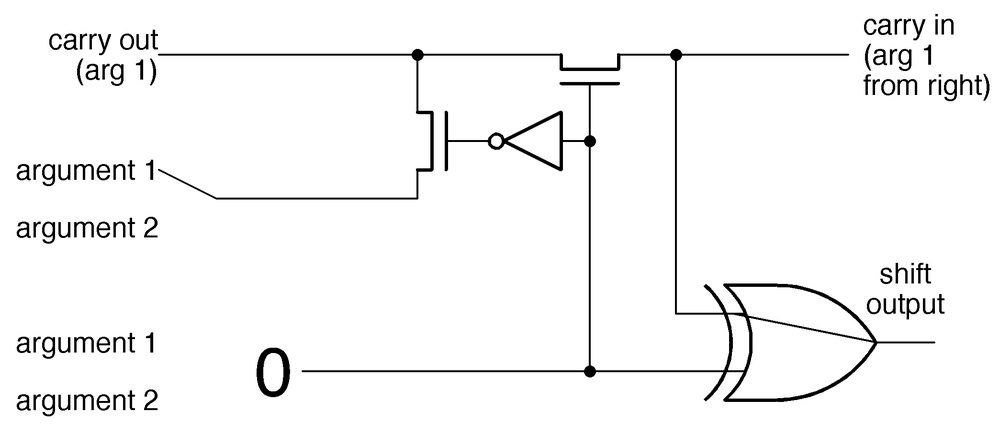

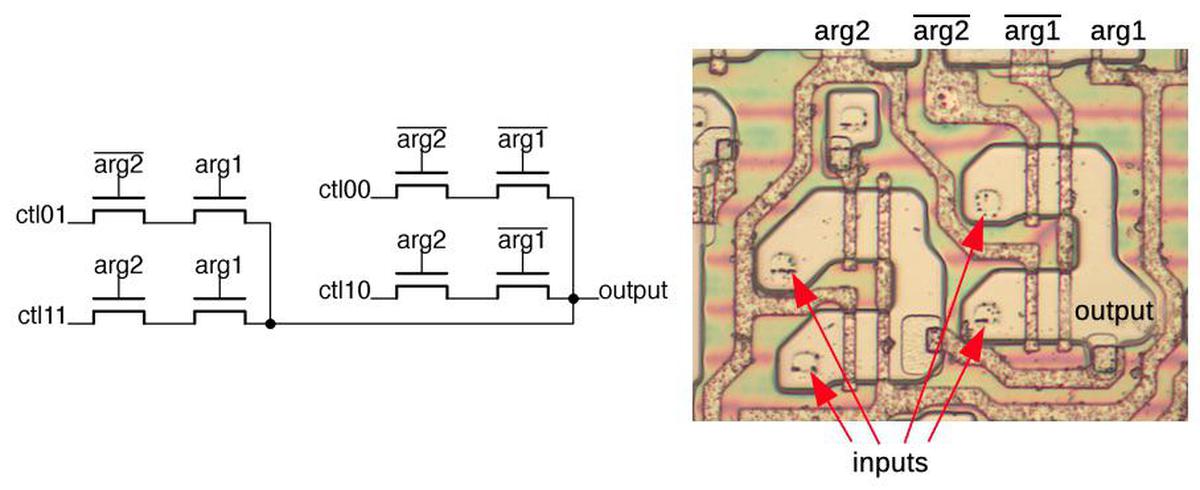

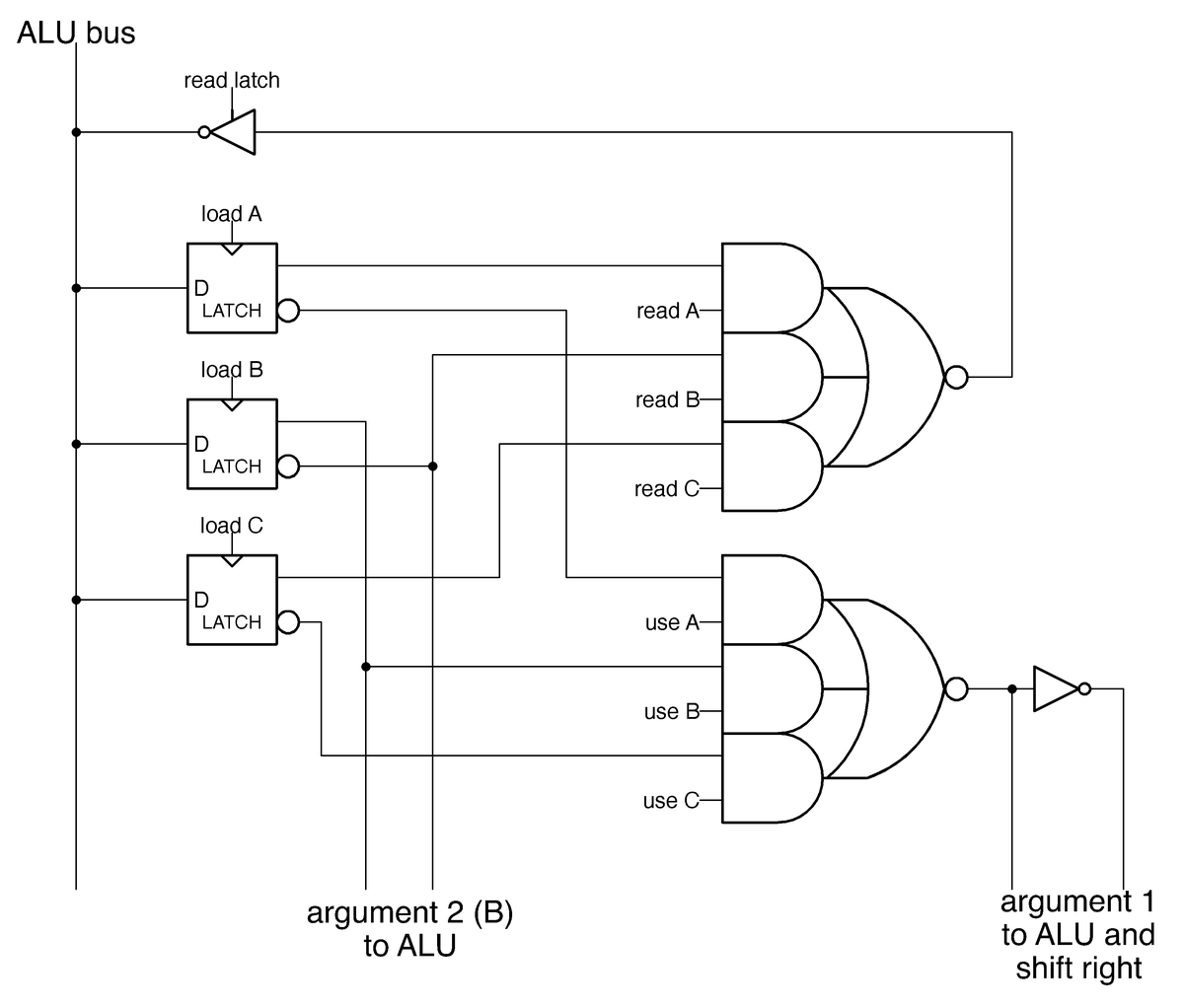

Input routing

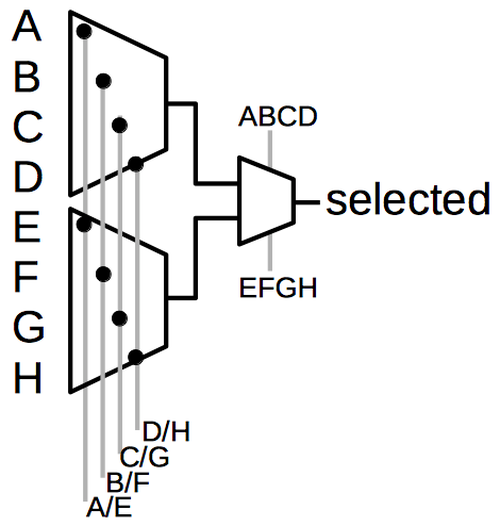

The inputs to a CLB use a different encoding scheme in the bitstream, which is explained by the hardware implementation. In the diagram below, the eight circled nodes are potential inputs to CLB box DD. Only one node (at most) can be configured as an input, since connecting two signals to the same input would short them together.

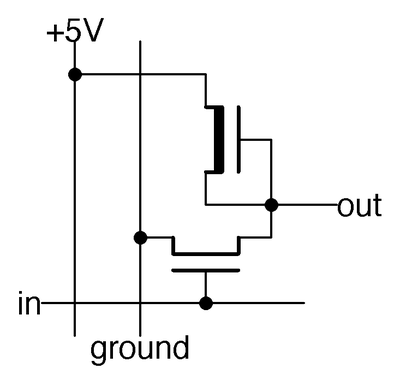

The desired input is selected using a multiplexer. A straightforward solution would use an 8-way multiplexer, with 3 control bits selecting one of the 8 signals. Another straightforward solution would be to use 8 pass transistors, each with its own control signal, with one of them selecting the desired signal. However, the FPGA uses a hybrid approach that avoids the decoding hardware of the first approach but uses 5 control signals instead of the eight required by the second approach.

The schematic above shows the two-stage multiplexer approach used in the FPGA. In the first stage, one of the control signals is activated. The second stage picks either the top or bottom signal for the output.10 For instance, suppose control signal B/F is sent to the first stage and 'ABCD' to the second stage; input B is the only one that will pass through to the output. Thus, selecting one of the eight inputs requires 5 bits in the bitstream and uses 5 memory cells.
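The two-stage selection can be modeled as below. Only the B/F, ABCD and EFGH signal names come from this post; the other first-stage names (A/E, C/G, D/H) are extrapolated by analogy, and `select_input` is a hypothetical function written for this sketch.

```python
FIRST_STAGE = ("A/E", "B/F", "C/G", "D/H")  # names other than B/F are assumed
SECOND_STAGE = ("ABCD", "EFGH")

def select_input(inputs, first_stage, second_stage):
    """Pick one of eight inputs using a 4-way first stage and a 2-way second stage.

    inputs maps the names 'A'..'H' to signal values.
    """
    if first_stage not in FIRST_STAGE or second_stage not in SECOND_STAGE:
        raise ValueError("unknown control signal")
    # Stage 1: one control signal passes a pair of inputs (top and bottom).
    top_name, bottom_name = first_stage.split("/")
    top, bottom = inputs[top_name], inputs[bottom_name]
    # Stage 2: choose the top (ABCD) or bottom (EFGH) signal.
    return top if second_stage == "ABCD" else bottom

# Give each input a distinct value so the selected one is easy to identify.
signals = {name: index for index, name in enumerate("ABCDEFGH")}
chosen = select_input(signals, "B/F", "ABCD")
```

With four first-stage signals and a second-stage choice that needs only one stored bit, the model uses the same five control bits described above.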

Conclusion

The XC2064 uses a variety of highly-optimized circuits to implement its logic blocks and routing. This circuitry required a tight layout in order to fit onto the die. Even so, the XC2064 was a very large chip, larger than microprocessors of the time, so it was difficult to manufacture at first and cost hundreds of dollars. Compared to modern FPGAs, the XC2064 had an absurdly small number of cells, but even so it sparked a revolutionary new product line.

Two concepts are the key to understanding the XC2064's bitstream. First, the FPGA is implemented from 64 tiles, repeated blocks that combine the logic block and routing. Although FPGAs are described as having logic blocks surrounded by routing, that is not how they are implemented. The second concept is that there are no abstractions in the bitstream; it is mapped directly onto the two-dimensional layout of the FPGA. Thus, the bitstream only makes sense if you consider the physical layout of the FPGA.

I've determined how most of the XC2064 bitstream is configured (see footnote 11) and I've made a program to generate the CLB information from a bitstream file. Unfortunately, this is one of those projects where the last 20% takes most of the time, so there's still work to be done. One problem is handling I/O pins, which are full of irregularities and their own routing configuration. Another problem is the tiles around the edges have slightly different configurations. Combining the individual routing points into an overall netlist also requires some tedious graph calculations.

I announce my latest blog posts on Twitter, so follow me at kenshirriff for updates. I also have an RSS feed. Thanks to John McMaster, Tim Ansell and Philip Freidin for discussions.12

Notes and references

1. Ross Freeman tragically died of pneumonia at age 45, five years after inventing the FPGA. In 2009, Freeman was recognized as the inventor of the FPGA by the Inventor's Hall of Fame.

2. Xilinx was one of the first fabless semiconductor companies. Unlike most semiconductor companies that designed and manufactured semiconductors, Xilinx only created the design while a fab company did the manufacturing. Xilinx used Seiko Epson Semiconductor Division (as in Seiko watches and Epson printers) for their initial fab.

3. Custom integrated circuits have the problems of high cost and the long time (months or years) to design and manufacture the chip. One solution was Programmable Logic Devices (PLD), chips with gate arrays that can be programmed with various functions, which were developed around 1967. Originally they were mask-programmable; the metal layer of the chip was designed for the desired functionality, a new mask was made, and chips were manufactured to the specifications. Later chips contained a PROM that could be "field programmed" by blowing tiny fuses inside the chip to program it, or an EPROM that could be reprogrammed. Programmable logic devices had a variety of marketing names including Programmable Logic Array, Programmable Array Logic (1978), Generic Array Logic and Uncommitted Logic Array. For the most part, these devices consisted of logic gates arranged as a sum-of-products, although some included flip flops. The main innovation of the FPGA was to provide a programmable interconnect between logic blocks, rather than a fixed gate architecture, as well as logic blocks with flip flops. For an in-depth look at FPGA history and the effects of scalability, see Three Ages of FPGAs: A Retrospective on the First Thirty Years of FPGA Technology. Also see A Brief History of FPGAs.

4. The lookup tables in the XC2064 are more complex than just a table. Each CLB contains two 3-input lookup tables. The inputs to the lookup tables in the XC2064 have programmable multiplexers, allowing selection of four different potential inputs. In addition, the two lookup tables can be tied together to create a function on four variables or other combinations.

Logic functions in the XC2064 FPGA are implemented with lookup tables. From the datasheet. -

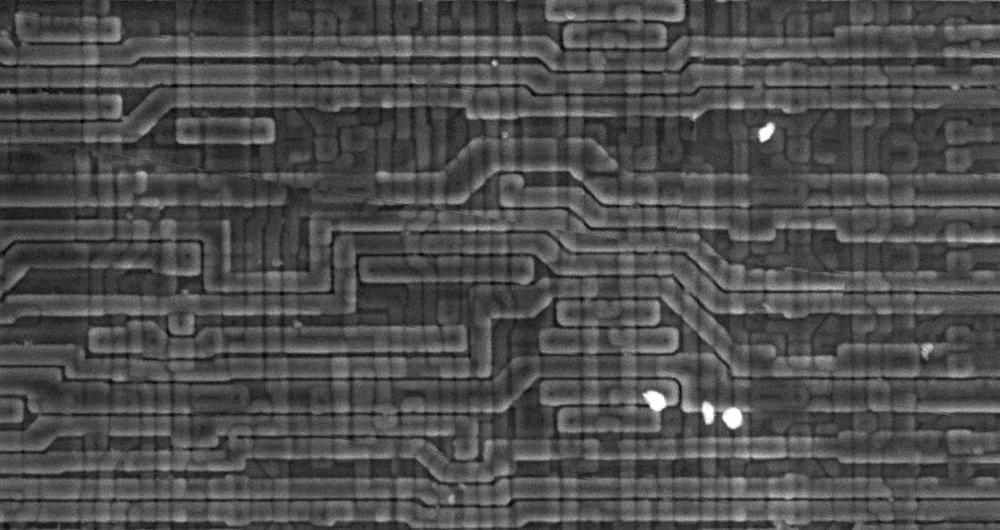

To analyze the XC2064, I used my own die photos of the XC20186 as well as the siliconpr0n photos of the XC2064 and XC2018. Under a light microscope, the FPGA is hard to analyze because it has two metal layers. John McMaster used his electron microscope to help disambiguate the two layers. The photo below shows how the top metal layer is emphasized by the electron microscope.

Electron microscope photo of the XC2064, courtesy of John McMaster. -

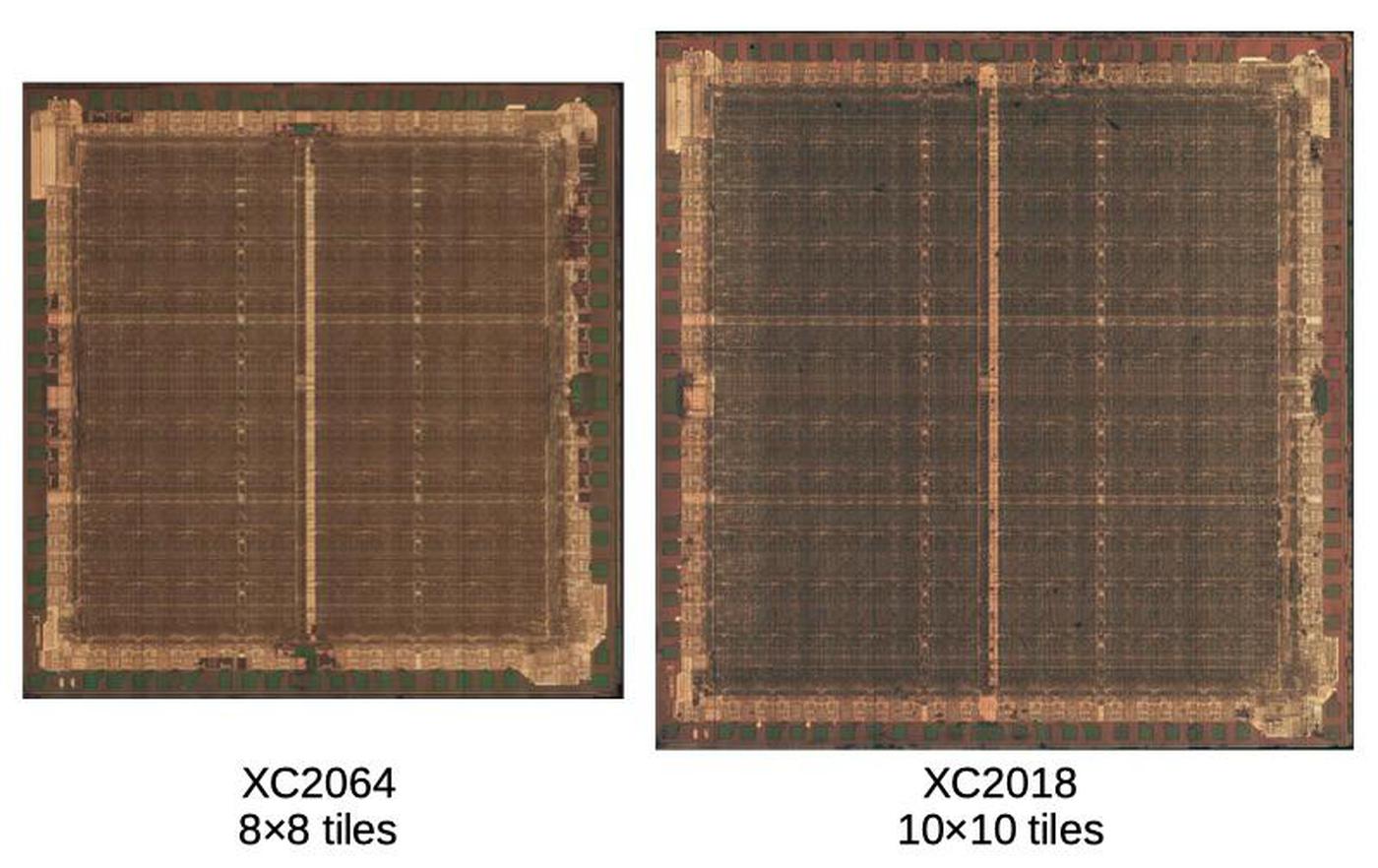

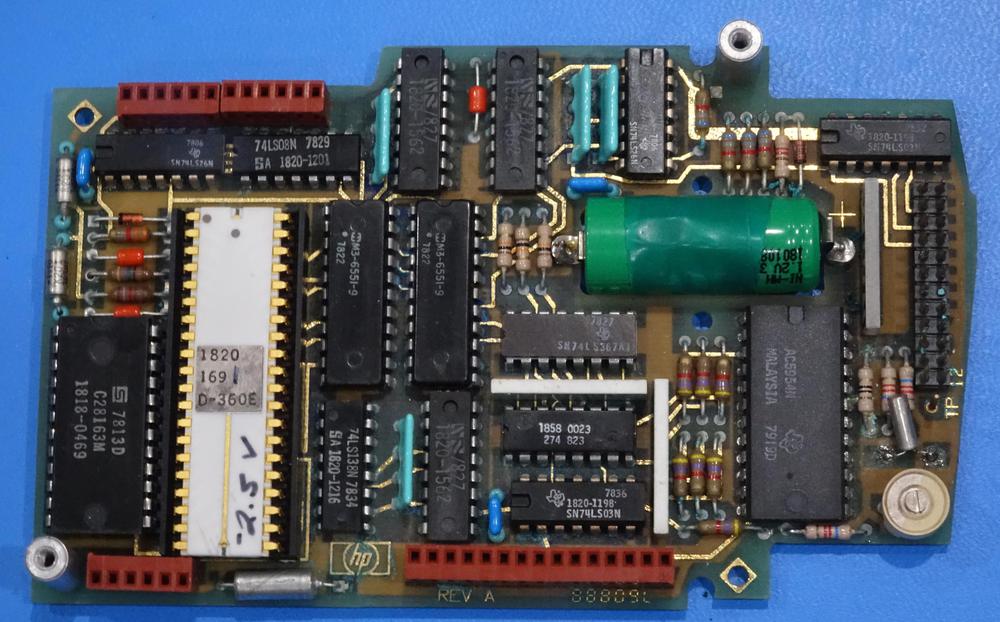

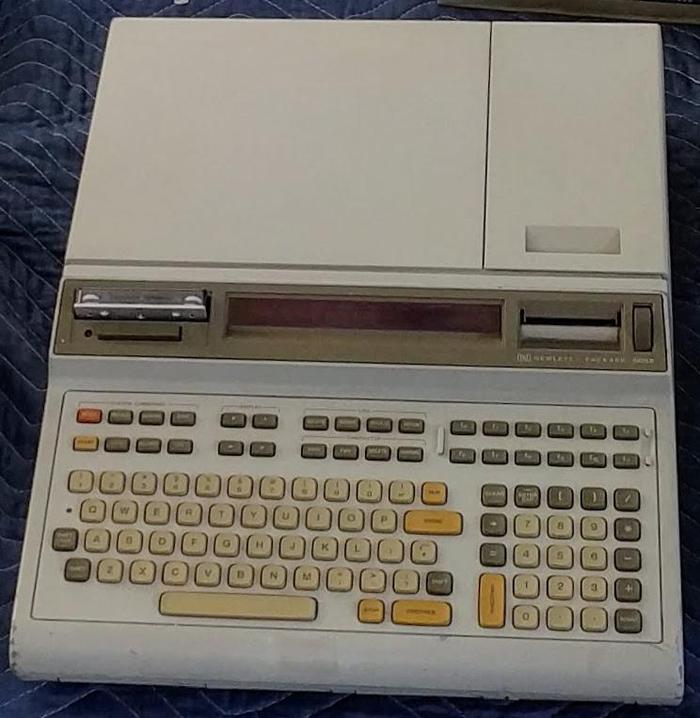

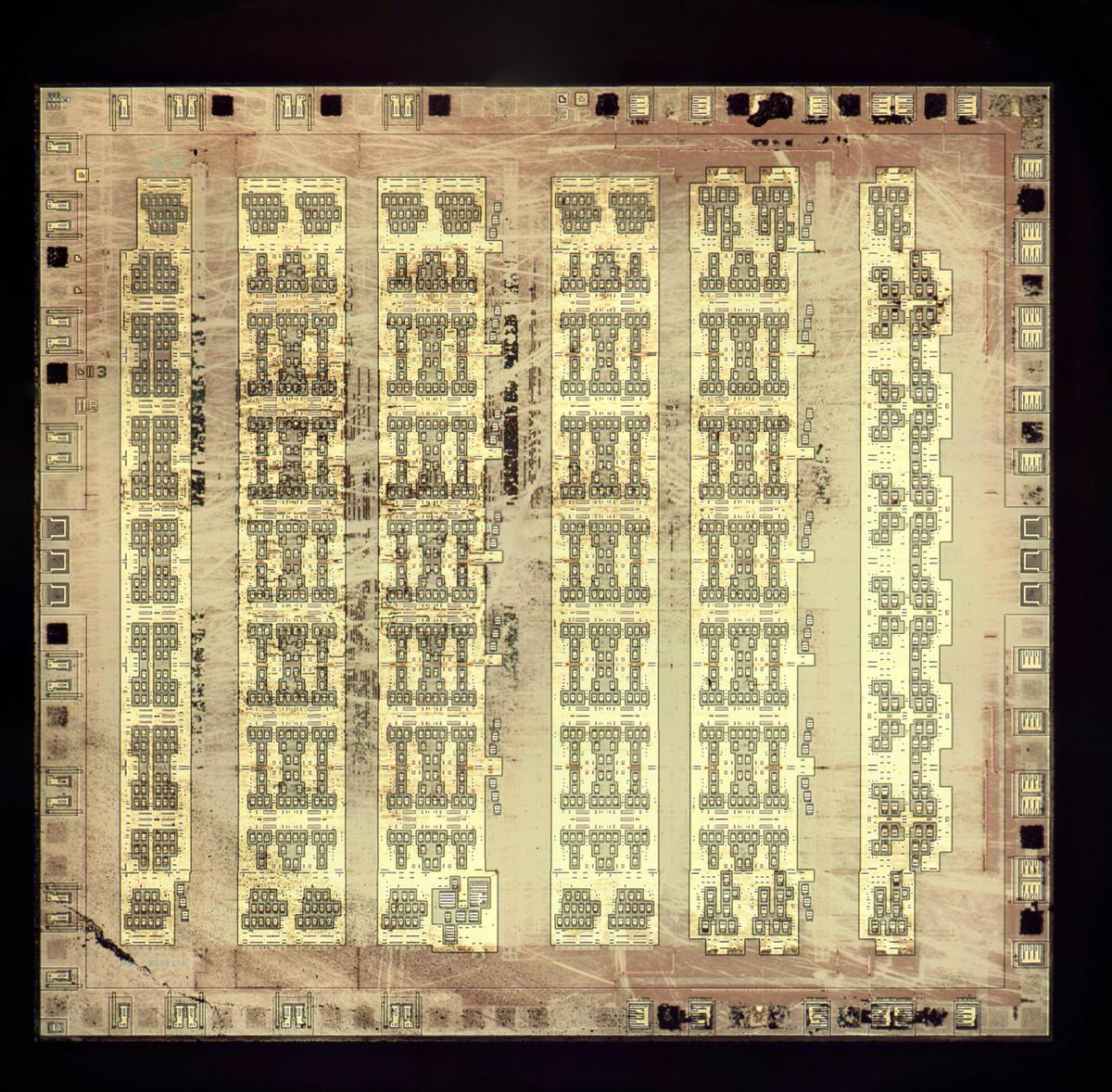

The Xilinx XC2018 FPGA (below) is a 100-cell version of the XC2064 FPGA. Internally, it uses the same tiles as the 64-cell XC2064, except it has a 10×10 grid of tiles instead of an 8×8 grid. The bitstream format of the XC2018 is very similar, except with more entries.

The Xilinx XC2018 FPGA. On the right, the lid has been removed, showing the silicon die. The tile pattern is faintly visible on the die.

The image below compares the XC2064 die with the XC2018 die. The dies are very similar, except the larger chip has two more rows and columns of tiles.

Comparison of the XC2064 and XC2018 dies. The images are scaled so the tile sizes match; I don't know how the physical sizes of the dies compare. Die photos from siliconpr0n.

7. While the bitstream directly maps onto the hardware layout, the bitstream file (.RBT) does have a small amount of formatting, shown below.

The format of the bitstream data, from the datasheet.

8. The configuration memory is implemented as static RAM (SRAM) cells. (Technically, the memory is not RAM since it must be accessed sequentially through the shift register, but people still call it SRAM.) These memory cells have five transistors, so they are known as 5T SRAM.

One question that comes up is if there are any unused bits in the bitstream. It turns out that many bits are unused. For instance, each tile has an 18×8 block of bits assigned to it, of which 27 bits are unused. Looking at the die shows that the memory cell for an unused bit is omitted entirely, allowing that die area to be used for other circuitry. The die photo below shows 9 implemented bits and one missing bit.

Memory cells, showing a gap where one cell is missing. Die photo from siliconpr0n.

9. The switch matrix has 20 pass transistors. Since each tile is 18 memory cells wide, two of the transistors are connected to slightly more distant memory cells.

-

A few notes on the CLB input multiplexer. The control signal

EFGHis the complement ofABCD, so only one control signal is needed in the bitstream and only one memory cell for this signal. Second, other inputs to the CLB have 6 or 10 choices; the same two-level multiplexer approach is used, changing the number of inputs and control signals. Finally, a few of the control signals are inverted (probably because the inverted memory output was closer). This can cause confusion when trying to understand the bitstream, since some bits appear to select 6 inputs instead of 2. Looking at the complemented bit, instead, restores the pattern. ↩ -

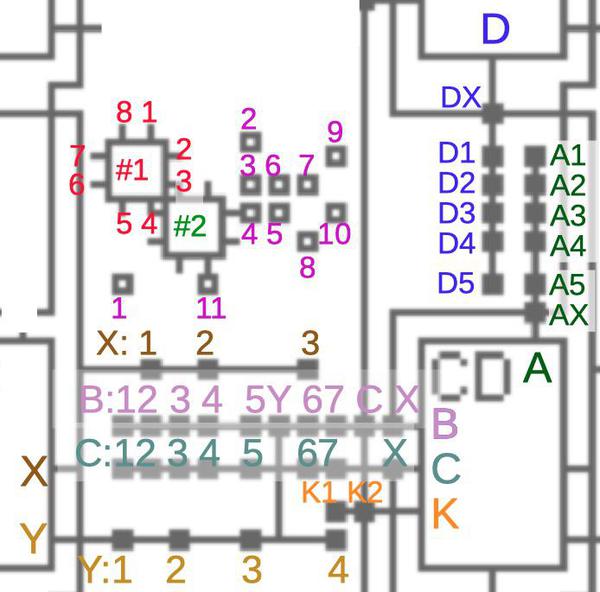

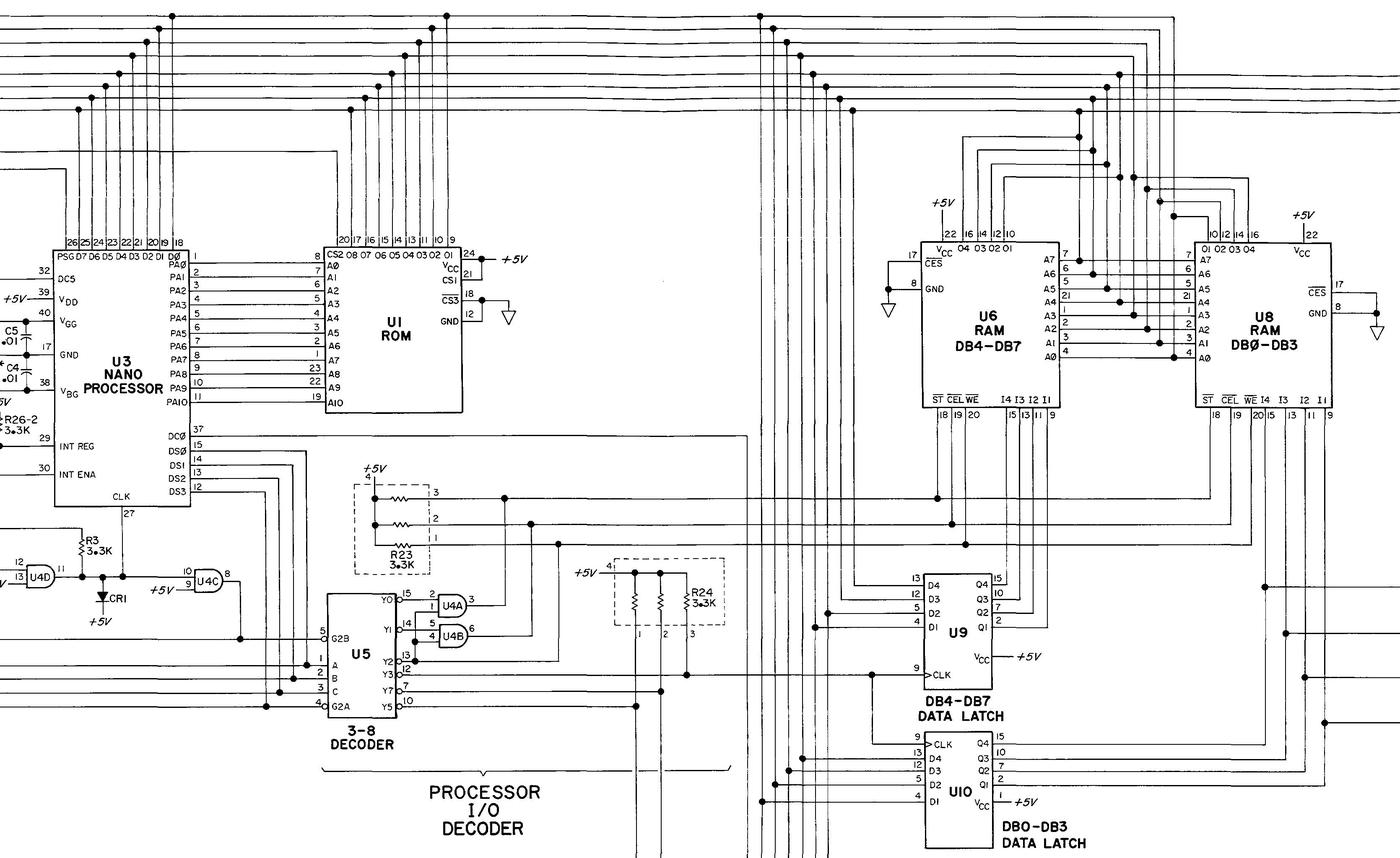

The following table summarizes the meaning of each bit in a tile's 8×18 part of the bitstream. Each entry in the table corresponds to one bit in the bitstream and indicates what part of the FPGA is controlled by that bit. Empty entries indicate unused bits.

- #2: 1-3; #2: 3-4; PIP D2,D5 (bit inverted); Gin_3 = D; G = 1 2' 3'
- #2: 1-2; #2: 2-6; #2: 2-4; PIP A2,A5 (bit inverted); Gin_3 = C; G = 1' 2' 3'
- #2: 3-7; #2: 3-6; PIP D3, D4, D5; PIP A3, A4, A5; G = 1' 2 3'
- #2: 2-7; #2: 2-8; ND 11; PIP A1, A4; G = 1 2 3'
- #2: 1-5; #2: 3-5; PIP A3, AX; PIP D1, D4; Y=F; G = 1 2' 3
- #2: 4-8; #2: 5-8; ND 10; PIP D3, DX; Y=G; Gin_2 = B; G = 1' 2' 3
- #2: 7-8; #2: 6-8; ND 9; PIP B2, B5, B6, BX, BY; PIP Y2; X=G; Gin_1 = A; G = 1' 2 3
- #2: 5-6; #2: 5-7; ND 8; PIP B3,BX (bit inverted); PIP Y4; X=F; G = 1 2 3
- #2: 4-6; #2: 1-4; #2: 1-7; PIP C1, C3, C4, C7; PIP X3; Q = LATCH; Base FG (separate LUTs)
- #1: 3-5; #1: 5-8; #1: 2-8; PIP X2
- #1: 3-4; #1: 2-4; ND 7; PIP C3,CX (bit inverted); PIP X1; Fin_1 = A; F = ! 1 2 3
- #1: 1-2; #1: 1-3; ND 6; PIP B6, B7; CLK = enabled; Fin_2 = B; F = 1' 2 3
- #1: 1-5; #1: 1-4; ND 5; PIP C6, C7; CLK = inverted (FF), noninverted (LATCH); F = 1' 2' 3
- #1: 4-8; #1: 4-6; ND 4; PIP C4, C5; CLK = C; F = 1 2' 3
- #1: 2-7; #1: 1-7; ND 3; PIP B4, B5; PIP K1; SET = F; F = 1 2 3'
- #1: 2-6; #1: 3-6; ND 2; PIP B2, BC; PIP K2; SET = none; F = 1' 2 3'
- #1: 7-8; #1: 3-7; ND 1; PIP C1, C2; PIP Y3; RES = D or G; Fin_3 = C; F = 1' 2' 3'
- #1: 6-8; #1: 5-6; #1: 5-7; PIP B1, BY; PIP Y1; RES = G; Fin_3 = D; F = 1 2' 3'

The first two columns of the table indicate the switch matrices. There are two switch matrices, labeled #1 (red) and #2 (green) in my diagram below. The 8 pins on matrix #1 are labeled 1-8 clockwise. (Switch #2 is the same, but there wasn't room for the labels.) For example, "#2: 1-3" indicates that bit connects pins 1 and 3 on switch #2. The next column defines the "ND" non-directional connections, the boxes below with purple numbers near the switch matrices. Each ND bit in the table controls the corresponding ND connection.

Diagram of the interconnect showing the numbering scheme I made up for the bitstream table.

The next two columns describe what I'm calling the PIP connections, the solid boxes on lines above. The connections from output X (brown) are controlled by individual bits (X1, X2, X3). Likewise, the connections from output Y (yellow). The connections to input B (light purple) are different. Only one of these input connections can be active at a time, so they are encoded with multiple bits using the multiplexer scheme. Inputs C (cyan), D (blue) and A (green) are similar. The remaining table columns describe the CLB; refer to the datasheet for details. Bits control the clock, set and reset lines. The X and Y outputs can be selected from the F or G LUTs. The last two columns define the LUTs. There are three inputs for LUT F and three inputs for LUT G, with multiplexers controlling the inputs. Finally, the 8 bits for each LUT are defined, specifying the output for a particular combination of three inputs.

12. Various FPGA patents provide some details on the chips: 4870302, 4642487, 4706216, 4758985, and RE34363. XACT documentation was formerly at Xilinx, but they seem to have removed it. It can now be found here. John McMaster has some xc2064 tools available.