Onboard the Apollo spacecraft, the revolutionary Apollo Guidance Computer helped navigate to the Moon and land on its surface. One of the first computers to use integrated circuits, the Apollo Guidance Computer was lightweight enough and small enough (70 pounds and under a cubic foot) to fly in space. An unusual feature that contributed to its small size was core rope memory, a technique of physically weaving software into high-density storage. In this blog post, I take a close look at core rope and the circuitry that made it work.1

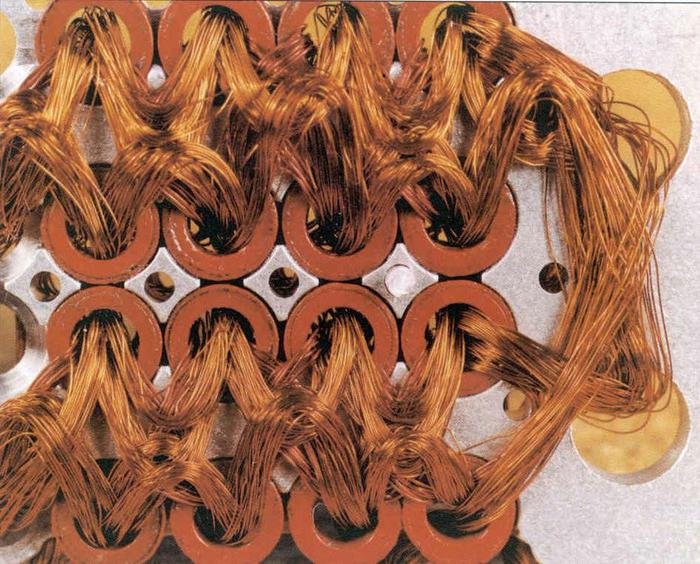

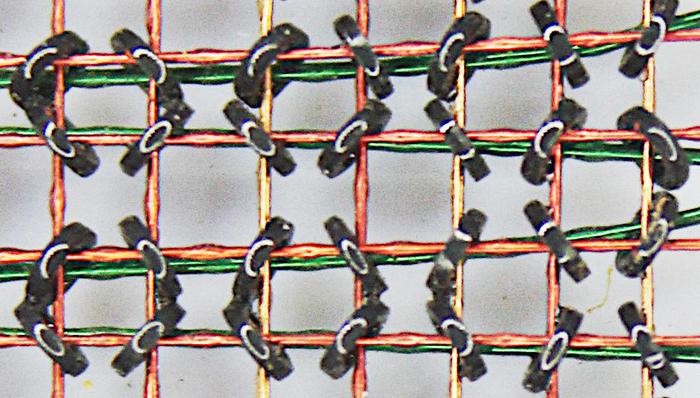

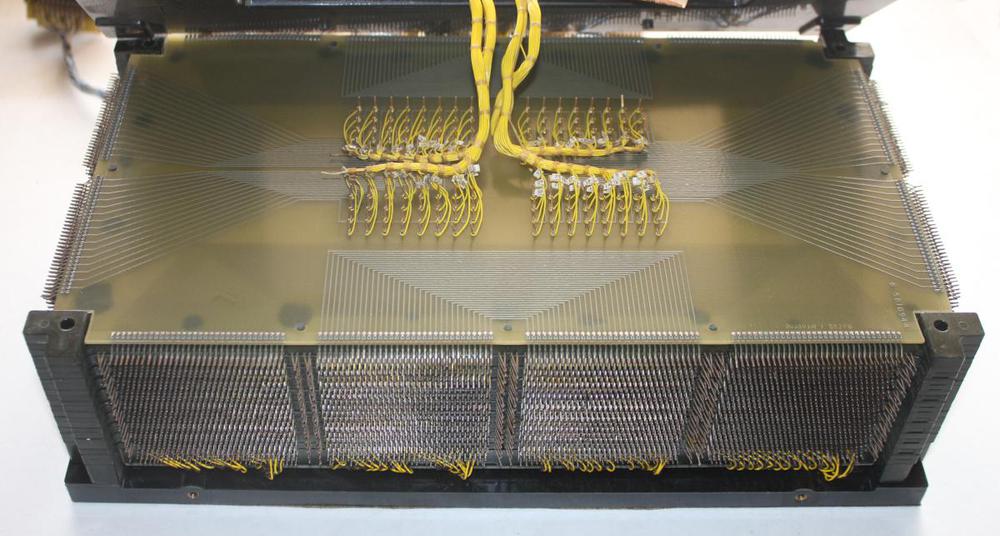

The Apollo Guidance Computer (AGC) had very little memory by modern standards: 2048 words of RAM in erasable core memory and 36,864 words of ROM in core rope memory. In the 1960s, most computers (including the AGC) used magnetic core memory for RAM storage, but core ropes were unusual and operated differently. Erasable core memory and core rope both used magnetic cores, small magnetizable rings. But while erasable core memory used one core for each bit, core rope stored an incredible 192 bits per core, achieving much higher density.2 The trick was to put many wires through each core (as shown above), hardwiring the data: a 1 bit was stored by threading a wire through a core, while the wire bypassed the core for a 0 bit. Thus, once a core rope was carefully manufactured, using a half-mile of wire, data was permanently stored in the core rope.

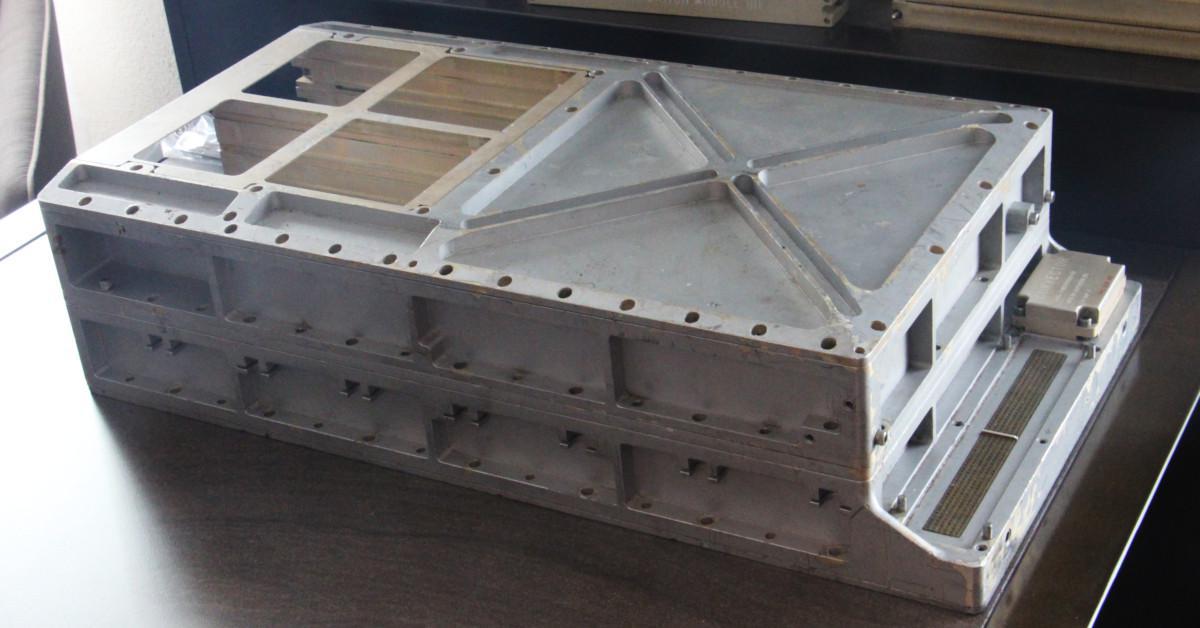

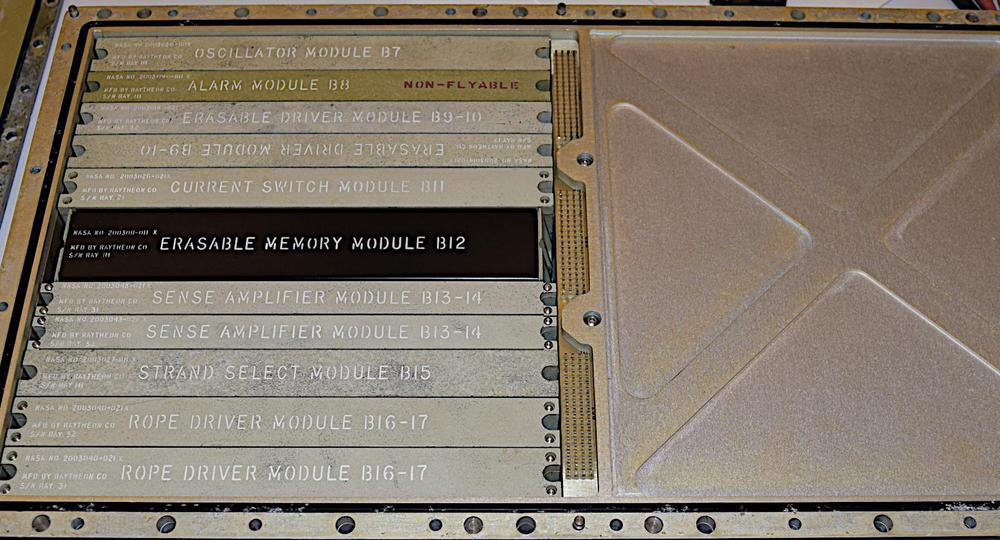

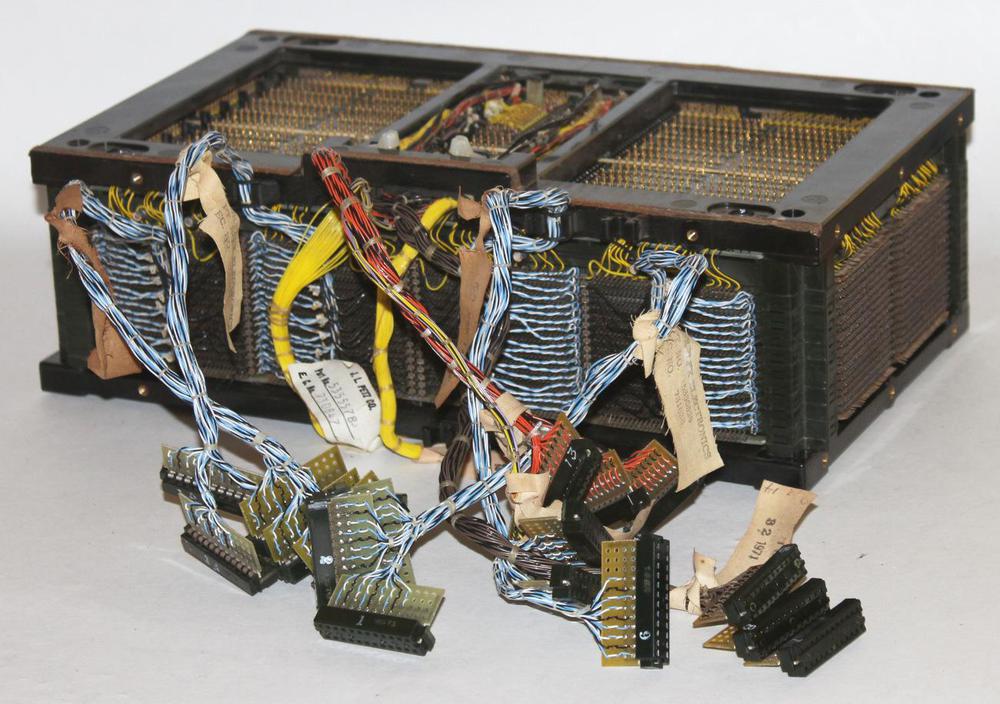

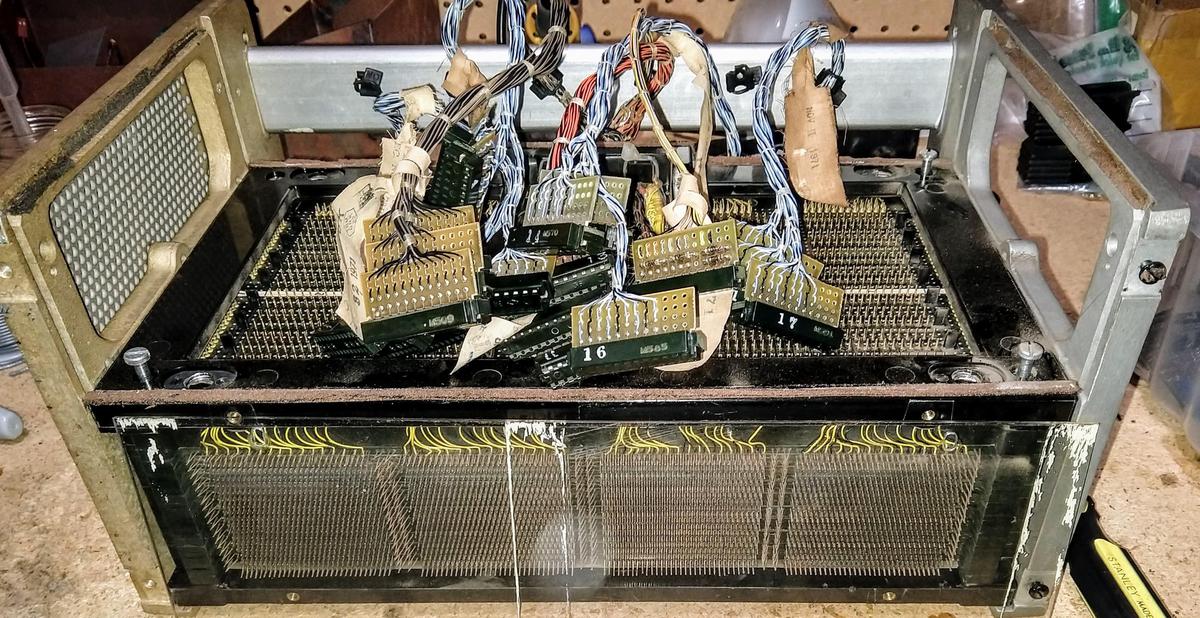

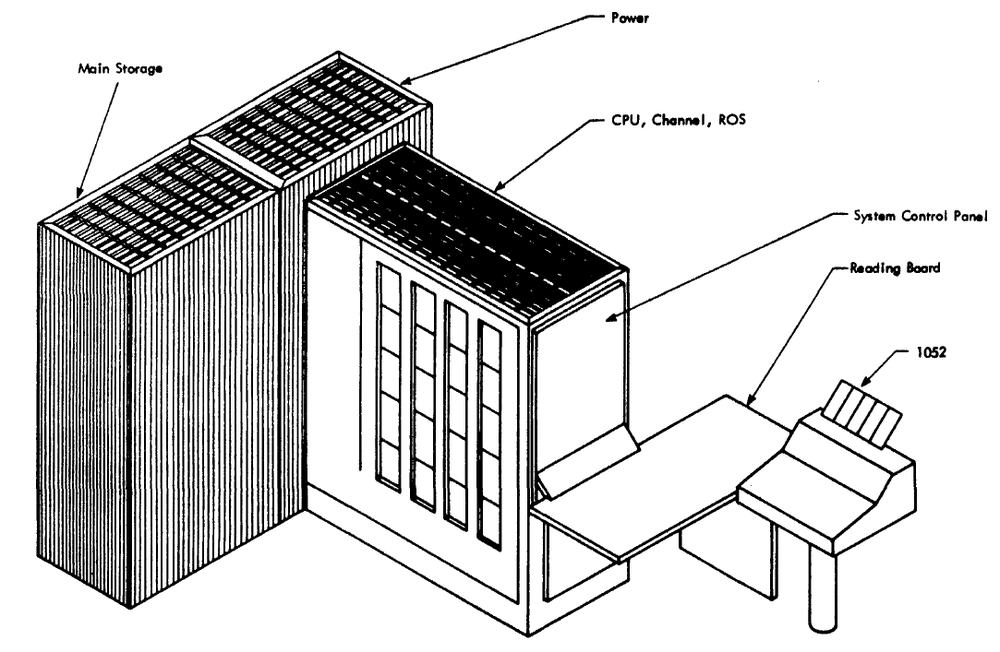

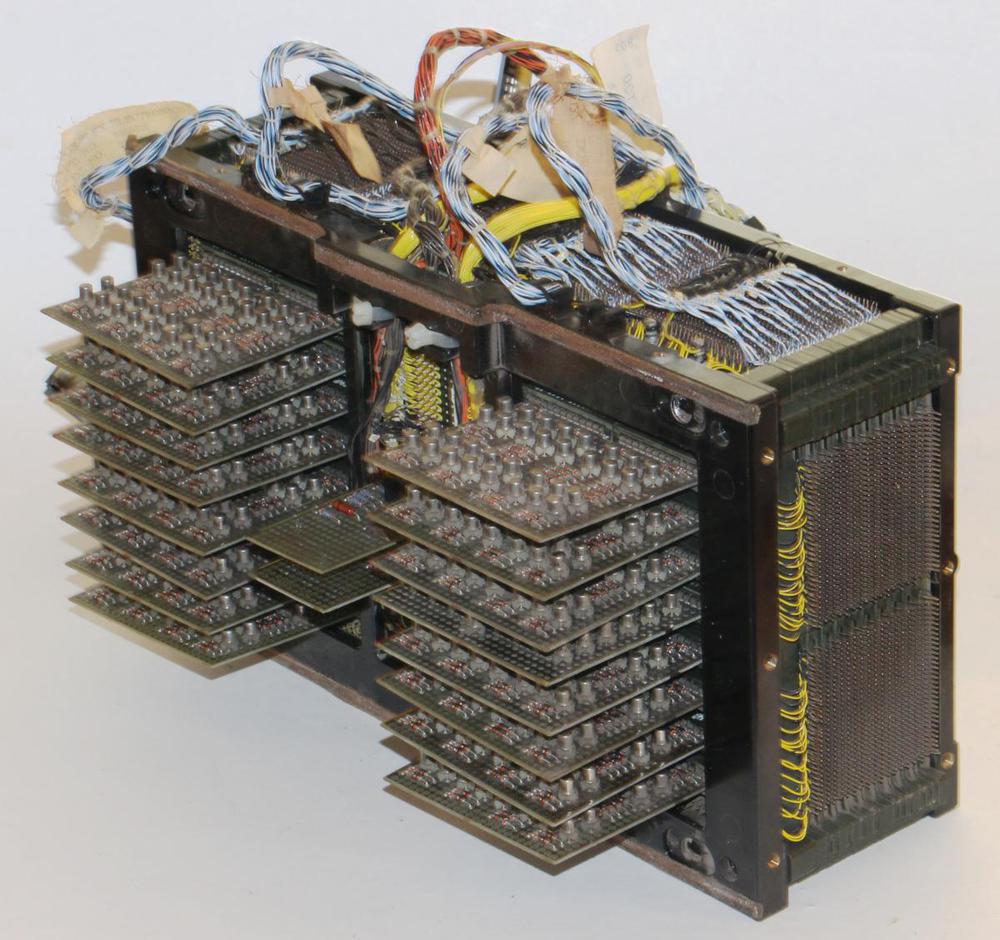

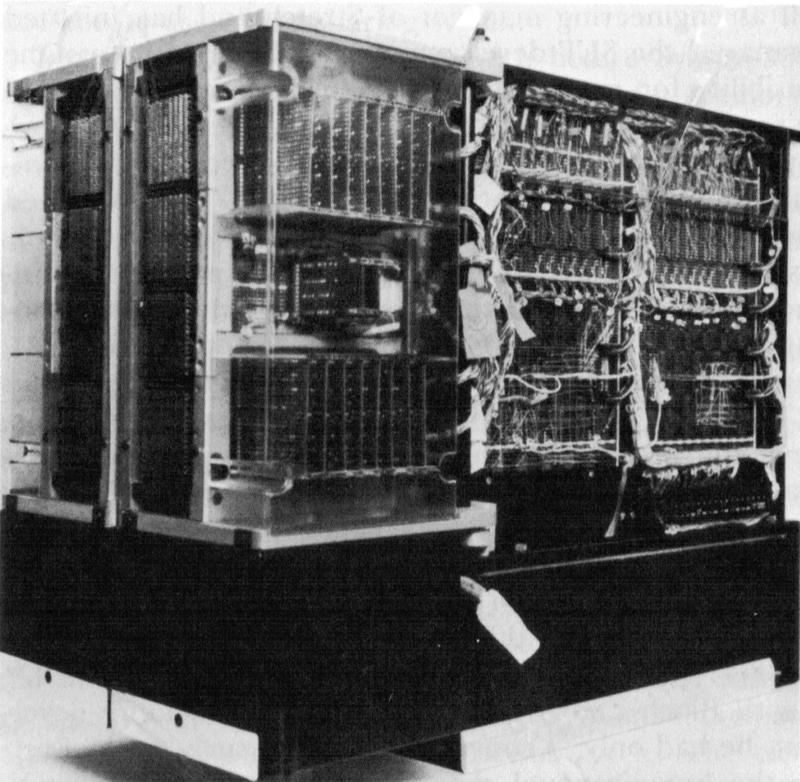

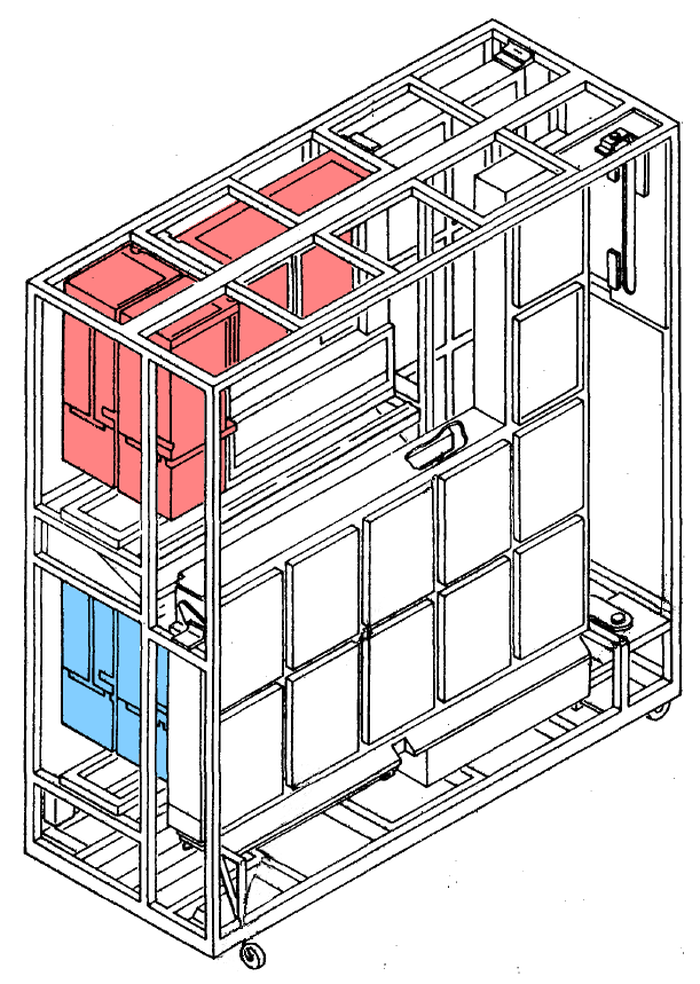

We3 are restoring the Apollo Guidance Computer shown above. The core rope modules (which we don't have)4 would be installed in the empty space on the left. On the right of the AGC, you can see the two connectors that connected the AGC to other parts of the spacecraft, including the DSKY (Display/Keyboard). By removing the bolts holding the two trays together, we could disassemble the AGC. Pulling the two halves apart takes a surprising amount of force because of the three connectors in the middle that join the two trays. The tray on the left is the "A" tray, which holds the logic and interface modules. The tray on the right is the "B" tray, which holds the memory circuitry, oscillator, and alarm. The six core rope modules go under the metal cover in the upper right. Note that the core ropes took up roughly a quarter of the computer's volume.

How core rope works

At a high level, core rope is simple: sense wires go through cores to indicate 1's, or bypass cores to indicate 0's. Selecting a particular core activates the sense wires through that core, providing the desired data bits.

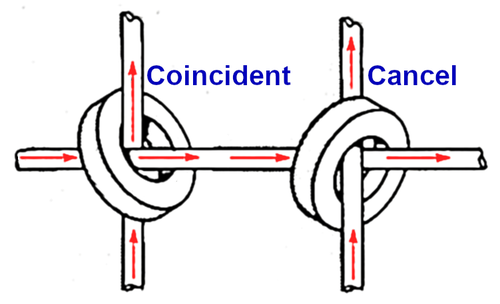

Magnetic cores have a few properties that made core memory work.7 Passing a strong current along a wire through a core magnetizes the core, either clockwise or counterclockwise depending on the direction of the current. Normally the cores were all magnetized in one direction, called the "reset" state; a core magnetized in the opposite direction was in the "set" state. When a core flips from one state to another, the changing magnetic field induces a small voltage in any sense wires through the core. A sense amplifier detects this signal and produces a binary output.

The key advantage of core rope is that many sense wires pass through a single core, so you can store multiple bits per core and achieve higher-density storage. (In the case of the AGC, each core has 192 sense wires passing through (or around) it5, so each core stored 12 words of data.) This is in contrast to regular read/write core memory, where each core held one bit.

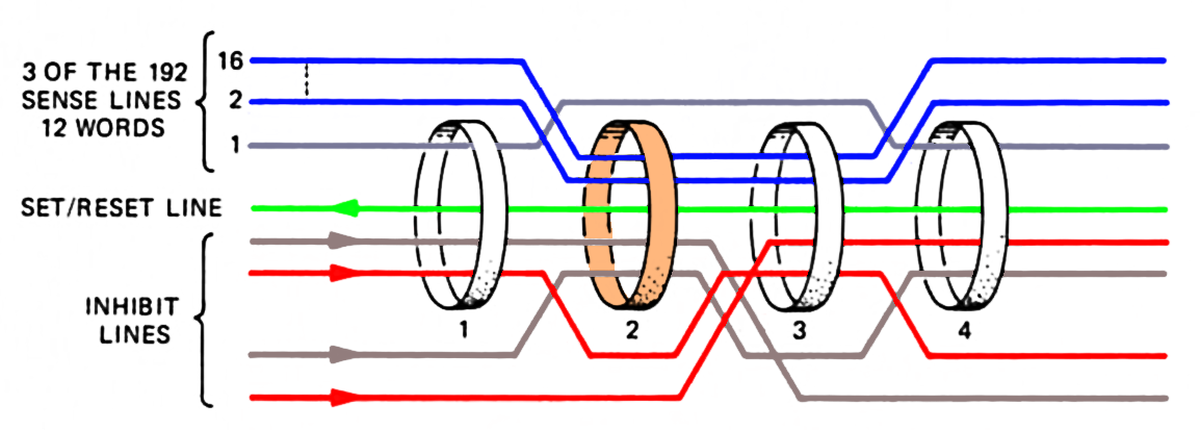

Core rope used an unusual technique to select a particular core to flip and read. Instead of directly selecting the desired core, inhibit lines blocked the flipping of every core except the desired one. In the diagram below, the current on the set line (green) would potentially flip all the cores. However, various inhibit lines (red) have a current in the opposite direction. This cancels out the set current in all the cores except #2, so only core #2 flips.

In the diagram above, only the sense lines (blue) passing through core #2 pick up an induced voltage. Thus, the weaving pattern of the sense lines controls what data is read from core #2. To summarize, the inhibit lines control which core is selected, and the sense wires woven through that core control what data value is read.
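As a rough illustration of this summary, the sketch below models a tiny rope in Python. The function names (`weave_rope`, `read_core`) and the 4-bit word size are invented for the example; the real AGC used 16-bit words and stored 12 of them per core.

```python
def weave_rope(words, bits=4):
    """Return, for each sense wire (one per bit), the set of cores it threads.

    words[core] is the value stored at that core. A wire passes through a
    core for a 1 bit and bypasses it for a 0 bit.
    """
    wires = [set() for _ in range(bits)]
    for core, word in enumerate(words):
        for bit in range(bits):
            if (word >> bit) & 1:
                wires[bit].add(core)
    return wires


def read_core(wires, flipped_core):
    """Flip one core; only wires threaded through it see a pulse (a 1 bit)."""
    word = 0
    for bit, threaded in enumerate(wires):
        if flipped_core in threaded:
            word |= 1 << bit
    return word


rope = weave_rope([0b1010, 0b0111, 0b0000, 0b1001])
print([bin(read_core(rope, core)) for core in range(4)])
```

Note that the data lives entirely in the `wires` structure, that is, in the weaving pattern; the cores themselves hold no information.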

The inhibit lines are driven from the address lines and wired so that a core sees no active inhibit lines only when its own address is selected. For any other address, at least one inhibit line through the core is activated, preventing the core from flipping and being read.6
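The selection-by-inhibition idea can be sketched in a few lines of Python. This is a simplified model, not the AGC's actual wiring: it assumes each address bit drives a true/complement pair of inhibit lines, and it uses 3 address bits (8 cores) to keep the output small. The names `active_inhibits` and `flipped_cores` are made up for the example.

```python
ADDRESS_BITS = 3  # illustrative; the AGC used 7 address bits plus parity


def active_inhibits(core, address, bits=ADDRESS_BITS):
    """Count energized inhibit lines passing through `core`.

    Assumed wiring: each address bit drives a pair of inhibit lines (true
    and complement). The energized line of each pair threads the cores
    whose corresponding address bit differs from the requested one.
    """
    return sum(
        ((core >> bit) & 1) != ((address >> bit) & 1) for bit in range(bits)
    )


def flipped_cores(address, bits=ADDRESS_BITS):
    """Cores that flip when the set line fires: those with no active inhibit."""
    return [c for c in range(2 ** bits) if active_inhibits(c, address, bits) == 0]


print(flipped_cores(2))  # only core 2 is left uninhibited
```

In this model the number of active inhibit lines through a core equals the number of address bits in which it differs from the selected core, so exactly one core is ever left free to flip.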

The AGC's core ropes

The Apollo Guidance Computer contained six core rope modules, each storing 6 kilowords of program information. The AGC was a 15-bit machine: each word consisted of 15 data bits and a parity bit. (While a word size that isn't a power of two may seem bizarre now, computers in the 1960s were designed with whatever word size fit the problem.8) Each module contained 512 cores, each storing 12 words of data. That is, each module had 192 (12×16) sense wires going either through or around each core. Each group of 16 sense wires for a word was called a "strand", so there were 12 strands.

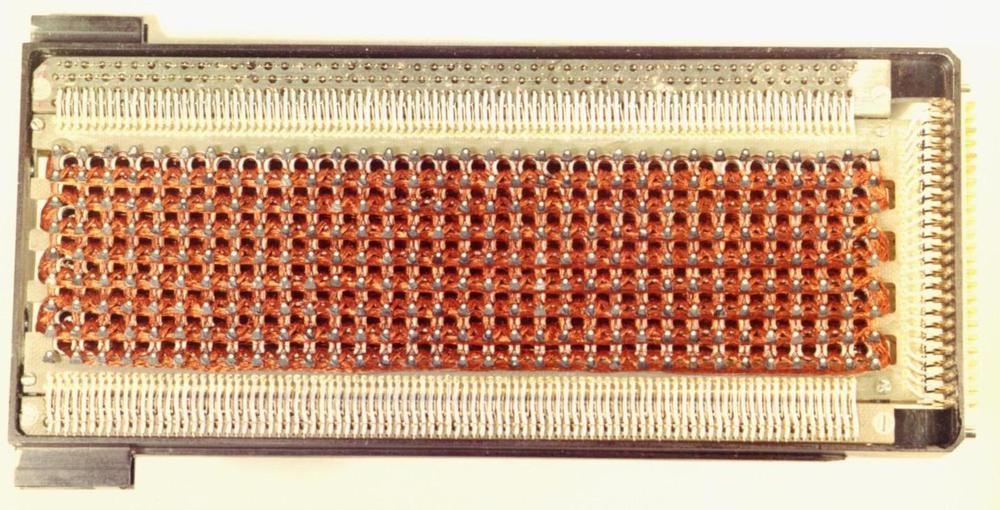

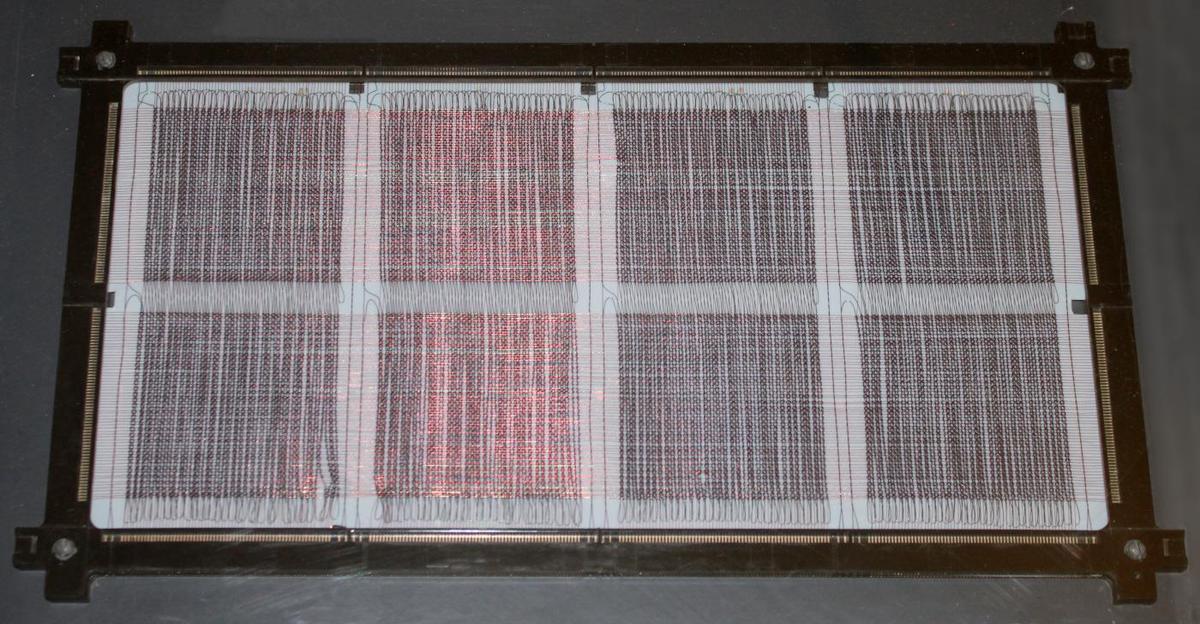

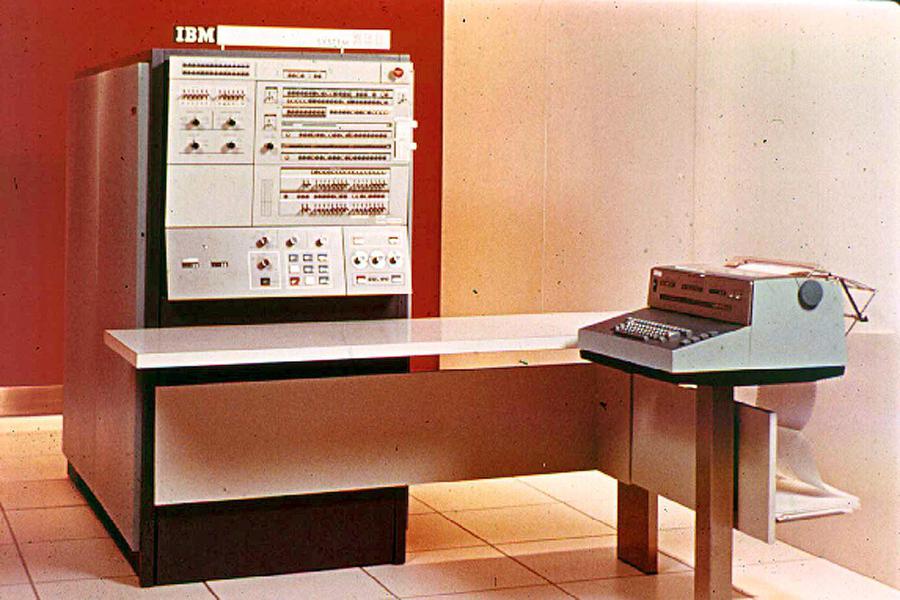

The photo above shows how the core rope modules slid into the Apollo Guidance Computer; the pins on the end of each module meshed with connectors in the AGC. The core rope module below (and its companion) held an early Lunar Module program called Retread 50. We took our AGC to the Computer History Museum to read the data from these modules and we put the results online.

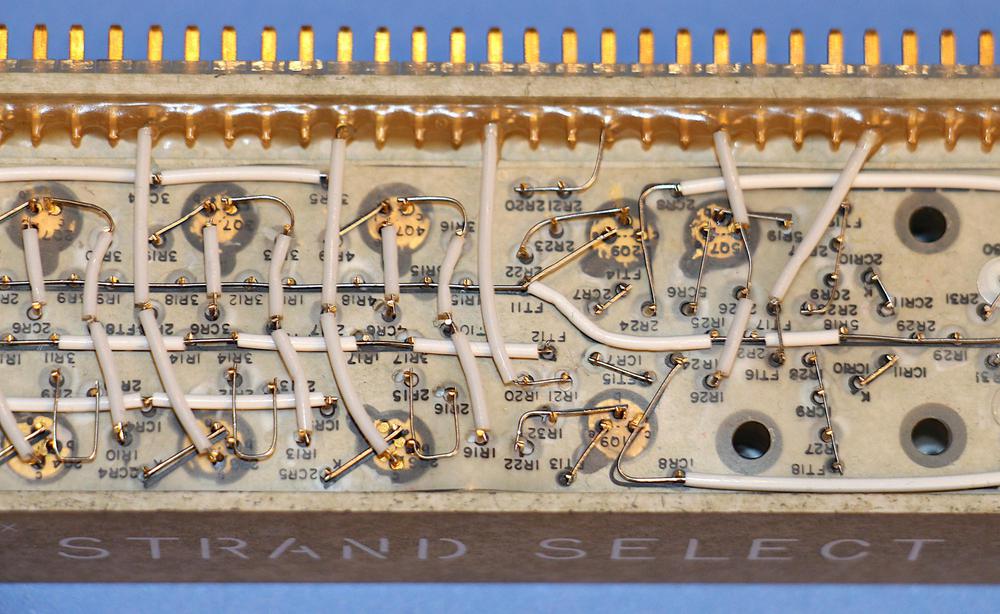

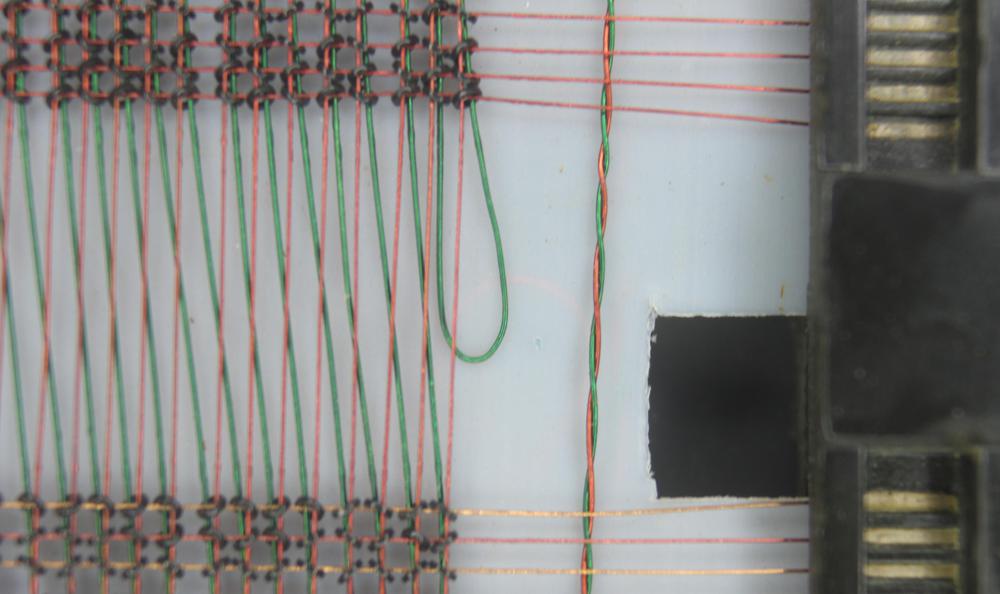

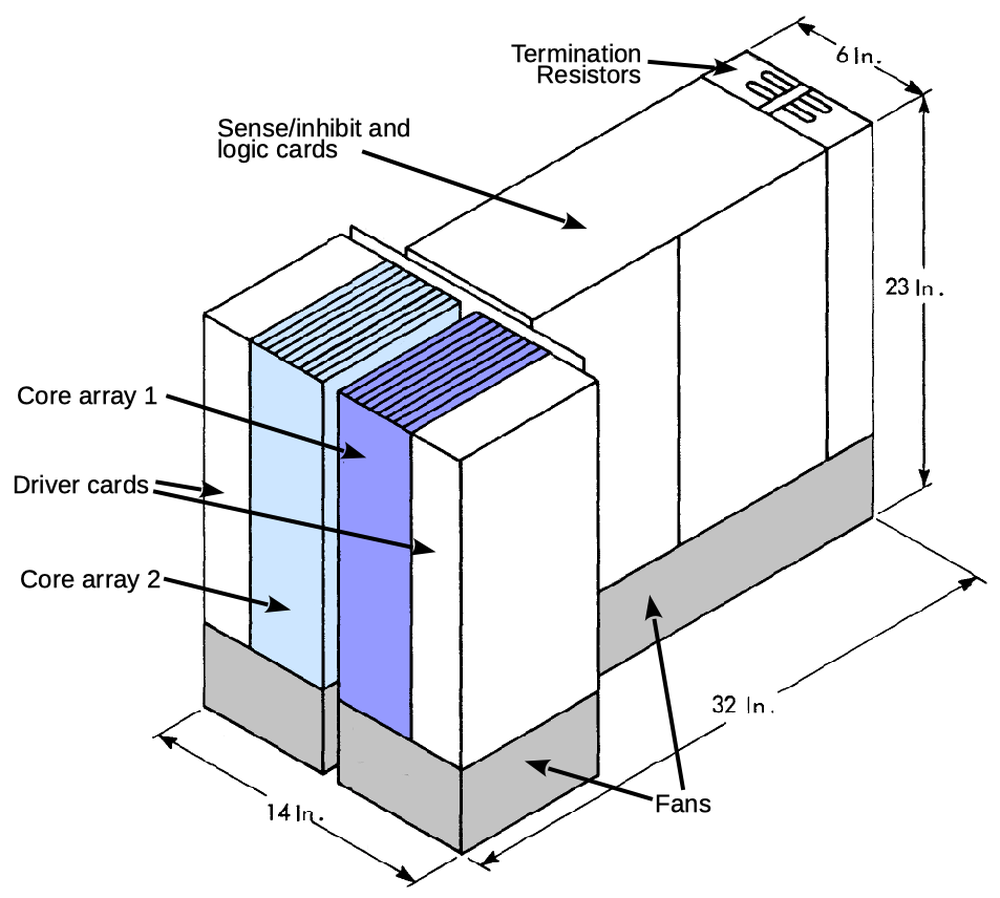

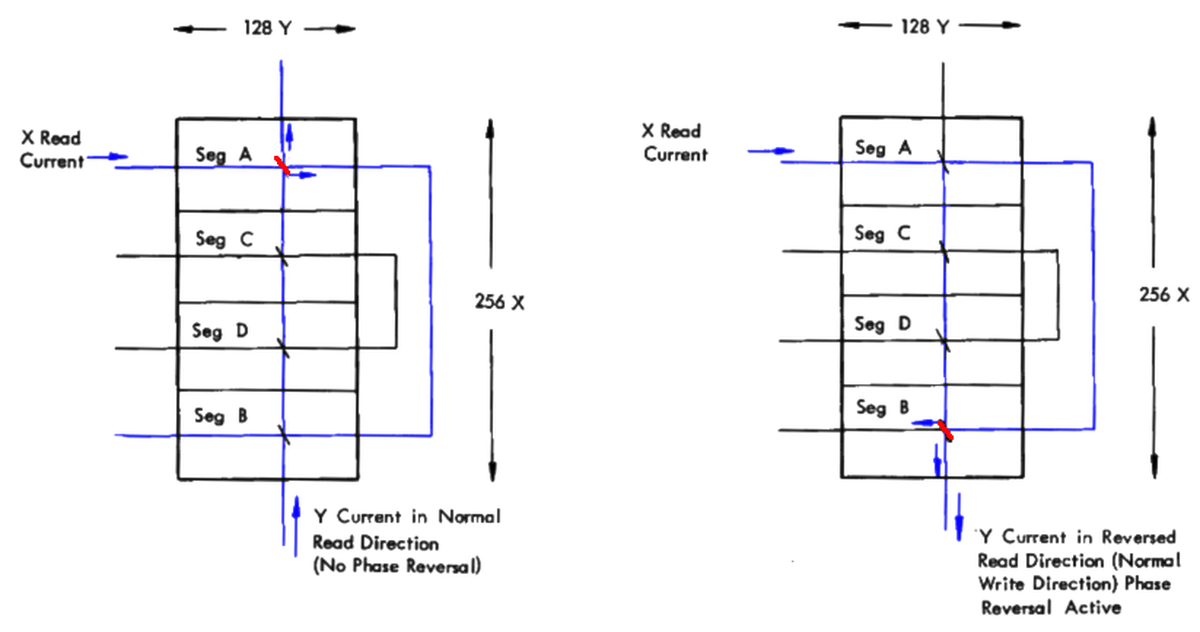

The 512 cores in each module were arranged physically as two layers of 256 cores (but electrically as four planes of 128 cores).9 A set and reset line went through all the cores in a plane, allowing a particular plane in the module to be selected.6 The photo below shows the interior of a core rope module. One layer of 256 cores is visible, with the tiny wires threaded through them. (The second layer of 256 cores is underneath.) Note that the cores only take up about half the module space. Surrounding the cores are hundreds of resistors and diodes that were used to select the desired word.10 These components were mounted with cordwood construction, with the components installed vertically through holes in the module.

The photo below shows one of the Retread 50 modules from the Computer History Museum with the cover removed. The cores were encased (potted) in protective epoxy to protect them during flight, so the cores are not visible.

Manufacturing the core rope

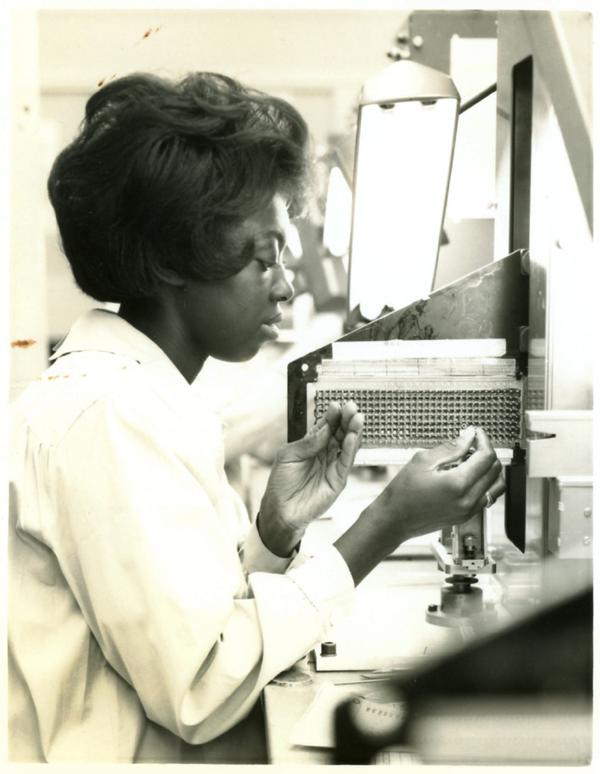

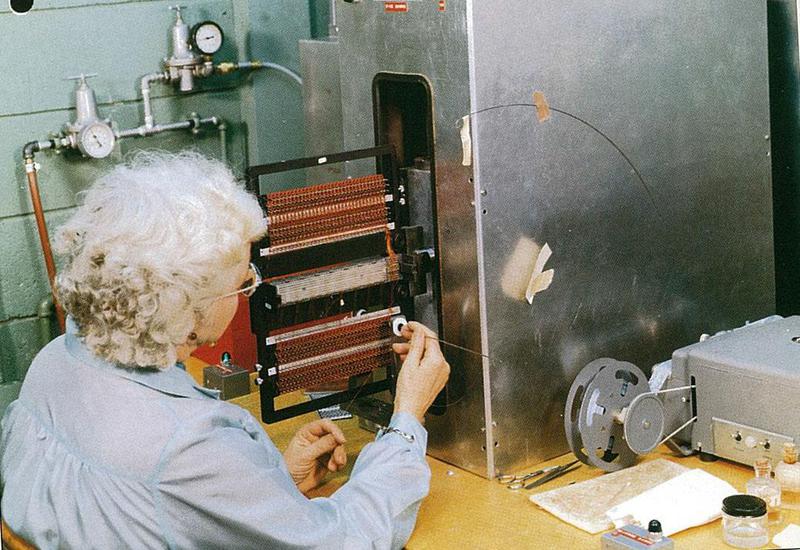

Wiring of the core rope was a tedious process that took about 8 weeks and cost $15,000 per module. As a result, the computer code needed to be frozen months in advance and last-minute patches to the code were not possible.11 The core ropes (and the AGC) were manufactured by Raytheon in Waltham, Massachusetts. Many of the women building the ropes were hired from the local textile industry for their sewing skills; other skilled women came from the Waltham Watch Company, a company that also helped with the high-precision gyroscopes used on the Apollo missions.12

Much of the core rope wiring (address inhibit wires, set, reset, etc.) was the same for all core rope modules. Two women passed a needle back and forth through the cores to create this wiring. The needle was hollow and contained the necessary length of wire. The clip above (from Computers for Apollo via xpascal) shows this process.

To store the desired binary data, the core rope's sense lines were threaded through or around cores in the proper sequence. Originally, this wiring was done entirely manually, which was slow and error-prone. Raytheon improved the process by combining automated positioning with manual threading. First, the program's assembly code was fed into an assembler called YUL that produced a Mylar punched tape. An automated system (above, below) read this tape and step-by-step moved the proper core into position. A woman manually threaded the sense line through an aperture into the indicated core. The aperture then jogged down to pull the wire around a nylon pin, moving the wire out of the way for the next sense wire to be threaded. Once all the cores were threaded, the nylon pins were removed and the final core rope module was tested by an automated system, again controlled by punched tape.

Core rope circuitry

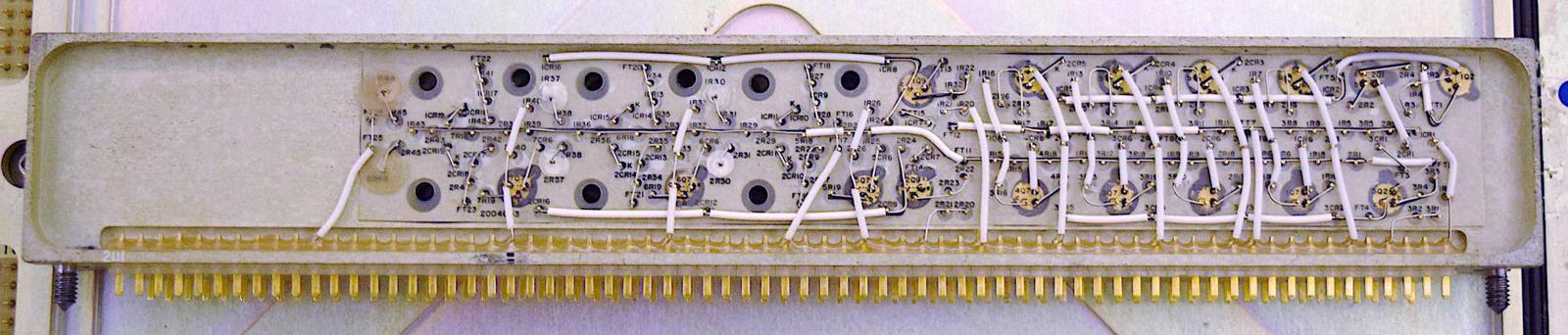

Core rope required a lot of digital and analog circuitry to drive and read the ropes. This section briefly describes this circuitry and shows the modules that implemented it. The bottom four modules in the picture below (Sense Amplifier, Strand Select, and two Rope Drivers) implemented the analog circuitry. Logic modules (in the "A" tray shown earlier) decoded the address into rope, module, and strand select signals. We carefully tested the analog and digital modules individually before powering up the AGC.

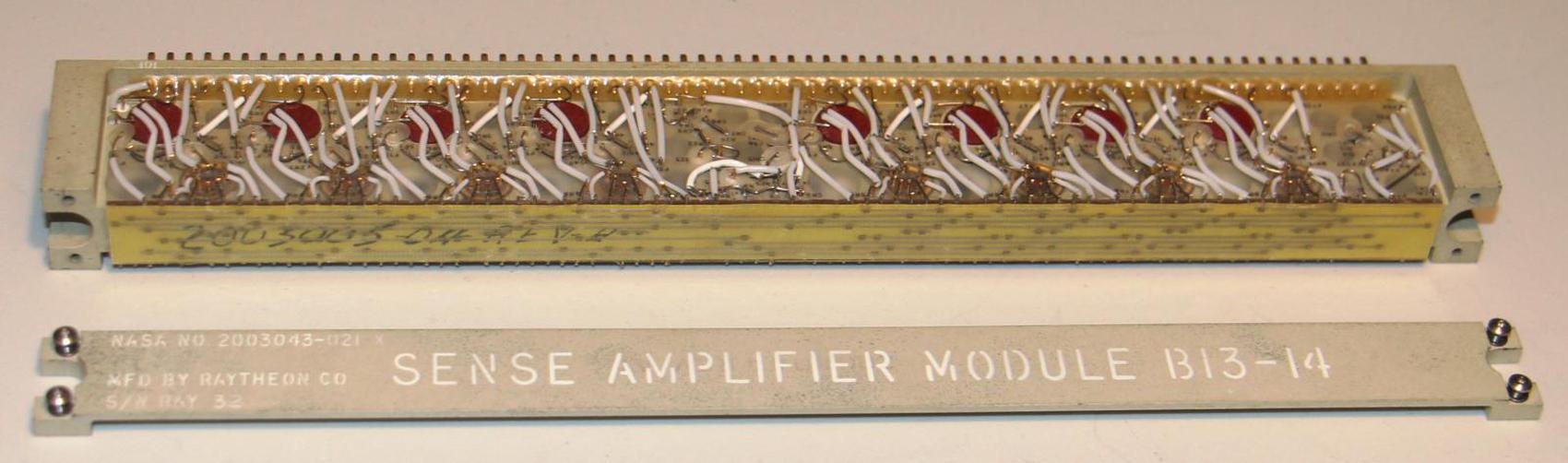

Sense Amplifier Modules

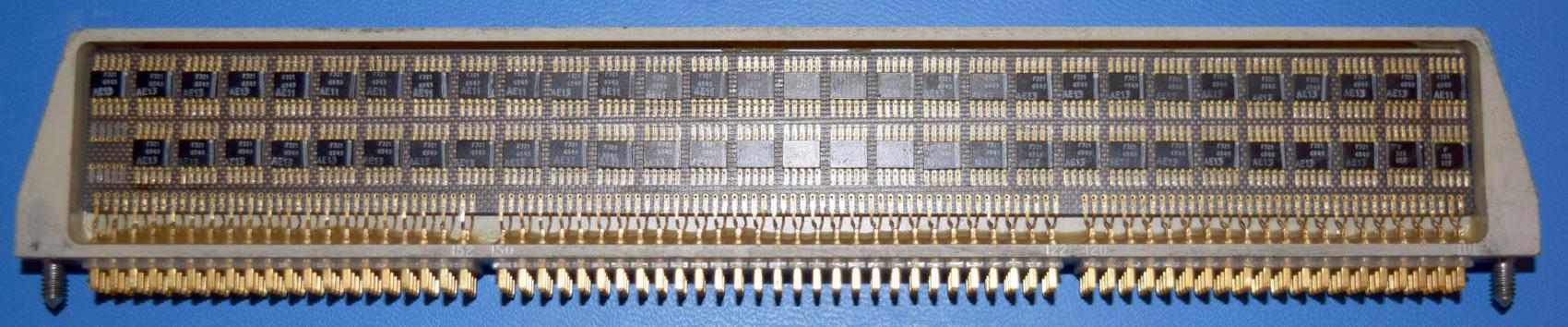

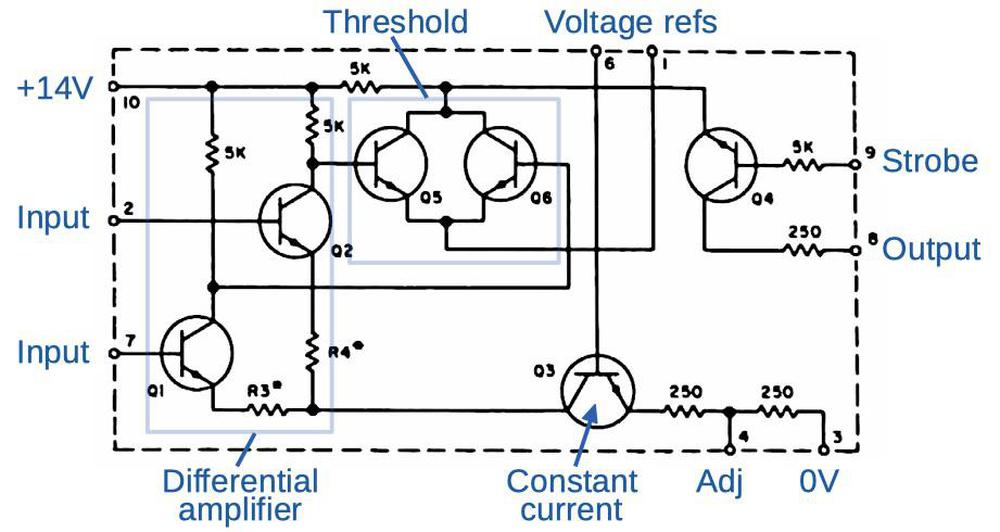

When a core flipped (either in fixed memory or erasable memory), the changing magnetic field induced a weak signal in a sense line, one sense line for each bit in the word. This signal needed to be amplified and converted to a logic signal; this was the job of the sense amplifiers. The sense amplifiers were implemented using a custom sense amplifier IC. (The AGC used only two different types of integrated circuits, the sense amplifier and a dual NOR gate.) The AGC had two identical sense amplifier modules; one (in slot B13) was used by the erasable core memory, while the other (B14) was used by the fixed core rope memory.

The photo below shows a sense amp module. Eight sense amplifiers are visible and eight other sense amplifiers are on the other side of the module. The sense amplifiers required carefully-tuned voltage levels for bias and thresholds so the modules included voltage regulation circuitry (center and right in photo). On top of the module (front in the photo), you can see the horizontal lines of the nickel ribbon that connected the circuits; it is somewhat similar to a printed circuit board.

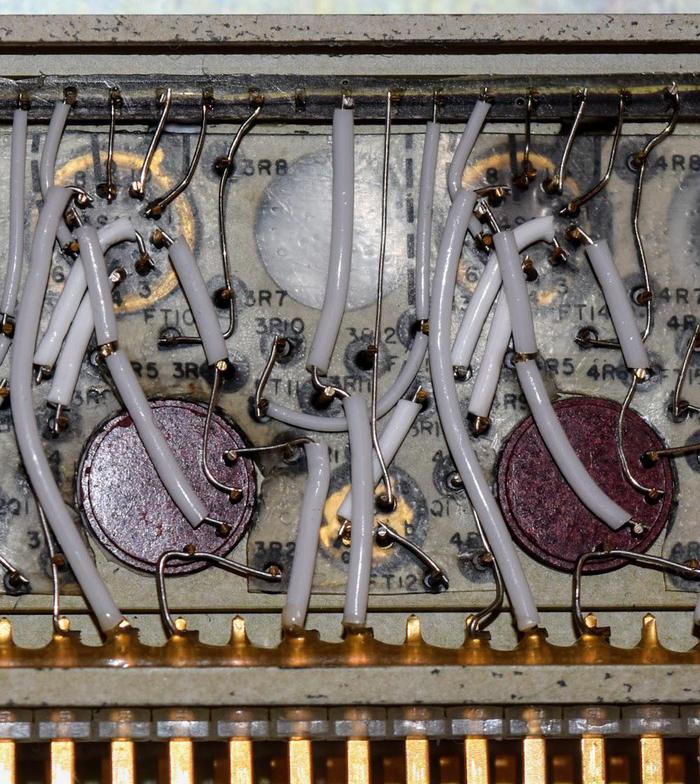

The closeup photo below shows the module's cordwood construction. In this high-density construction technique, components were inserted into holes in the module. Resistors and capacitors passed through from one side of the module to the other, with one lead on either side. On each side of the module, components were connected by point-to-point wiring. This wiring was welded, not soldered. White insulating sleeves were placed over the wires to prevent short circuits.

Near the top of the photo are two amplifier integrated circuits in metal cans. Below are two reddish pulse transformers. An output driver transistor is between the pulse transformers.13 Only the ends of resistors are visible, due to the cordwood construction. At the top of the module are connections to the nickel ribbon interconnect. Modules that were flown on spacecraft were potted in plastic so the components were protected against vibration. Since our AGC was used on the ground, most modules were unpotted and the components are visible.

Address decoding

Address decoding for the core rope required a fair amount of logic for two reasons. The first problem was the AGC's instructions used 12-bit addresses, which could only address 4 kilowords of storage. Since the AGC had 36 kilowords of fixed memory and 2 kilowords of erasable memory, it used a complex system of bank registers and mapping logic to convert a 12-bit address into the correct physical memory location. The second problem was that each core rope module held 6 kilowords, which is not a power of two. Thus, moderately complex decoding circuitry was required to generate module and strand select signals from the address.
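To see why the 6-kiloword module size complicates decoding, here is a minimal Python sketch that splits a flat fixed-memory address into module, strand, and core. The contiguous layout and the `decode` function are assumptions for illustration only; the AGC's real bank mapping and wiring order were different.

```python
WORDS_PER_CORE = 12      # strands per module (from the text)
CORES_PER_MODULE = 512
MODULES = 6
WORDS_PER_MODULE = WORDS_PER_CORE * CORES_PER_MODULE  # 6144, not a power of two


def decode(address):
    """Split a flat fixed-memory address into (module, strand, core).

    Hypothetical contiguous layout for illustration only; the AGC's real
    bank mapping and wiring order differ.
    """
    if not 0 <= address < MODULES * WORDS_PER_MODULE:
        raise ValueError("address outside 36K fixed memory")
    module, offset = divmod(address, WORDS_PER_MODULE)
    strand, core = divmod(offset, CORES_PER_MODULE)
    return module, strand, core


print(decode(6143), decode(6144))
```

Because 6144 is not a power of two, the module number cannot simply be read off the high address bits. Software can use a division, as here, while hardware needs extra gates to do the equivalent.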

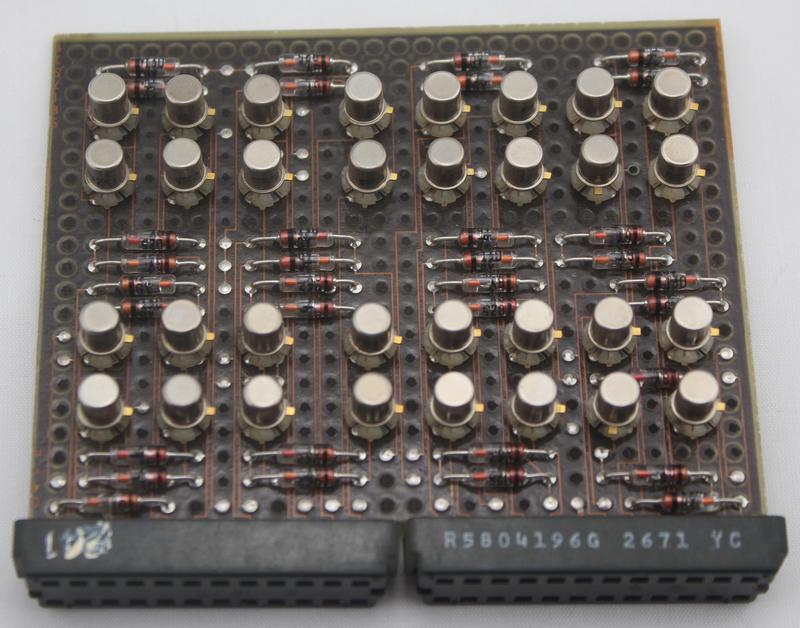

The AGC's logic circuitry (including the processor) was implemented with NOR gates. Each integrated circuit implemented two NOR gates using RTL (resistor-transistor logic), an early logic family. These ICs were costly; they cost $20-$30 each (around $150 in current dollars). There wasn't much inside each IC, just six transistors and eight resistors. Even so, the ICs provided a density improvement over the planned core-transistor logic, making the AGC practical. The decision to use ICs in the AGC was made in 1962, amazingly just four years after the IC was invented. The AGC was the largest consumer of ICs from 1962 to 1965 and ended up being a major driver of the integrated circuit industry.

Strand select

The address decoding logic described above produced signals indicating the desired strand and module. These logic-level signals needed to be converted to 14-volt pulses to drive the core rope modules. This task was performed by the strand select module, which consisted of transistor driver circuits using NPN and PNP transistors. The resistors in these circuits were individually selected to produce exactly the desired currents.

Rope driver

The rope driver modules generated the high-current pulses (up to 450mA) necessary to flip the cores. Like the strand select module, the rope driver modules used NPN and PNP transistor driver circuits with carefully-selected resistors to ensure the desired current. They also used inductors to control the pulse shape, necessary to keep switching noise from overwhelming the small signals generated by the cores. The modules generated 16 inhibit signals (7 address bits and parity, along with complements) as well as two core set signals and four core reset signals.

The core rope simulator

Unfortunately, the core ropes were missing from the Apollo Guidance Computer we are restoring. Instead, this AGC has core rope simulator boxes in place of the ropes. The purpose of these simulator boxes was to feed code into an AGC for development and ground testing without requiring a new core rope to be manufactured every time. The simulator allowed an external computer to supply data words in place of the core rope, allowing the AGC to run arbitrary programs.

The simulator consists of two boxes that plugged into the AGC's core rope slots. These boxes are visible in the upper-left side of the AGC above, with round military-style connectors for connection to the external computer. One box exported address information each time the AGC performed a core rope read, while the other box fed 16 data bits into the AGC, "tricking" the AGC into thinking it had read from core rope. I built an interface from the core rope simulator boxes to a Beaglebone and will write more about that project later.

Conclusion

Core memory was the most common storage technology for computers in the 1960s. However, the Apollo Guidance Computer also used core ropes for read-only storage, an uncommon storage technique that achieved high density but required considerable labor. Core ropes made it possible to fit complex software into the compact physical space of the AGC. While 36K of code seems ludicrously small by modern standards, it held enough code to navigate and land on the Moon. And now, decades later, we can recover this code from core rope modules and learn more about it.

Marc has a series of AGC videos; the video below discusses the core rope simulators. I announce my latest blog posts on Twitter, so follow me @kenshirriff for future articles. I also have an RSS feed. See the footnotes for more information sources15. Thanks to Mike Stewart for supplying images and extensive information.

Notes and references

-

Prototype core rope memories had a single bundle of wire that looked similar to a rope. The final core rope memories didn't look as rope-like, but kept the name. ↩

-

The core rope memory achieved a density of 1500 bits per cubic inch, including the driving hardware and packaging. This was about 5 times the density of erasable core memory. See MIT's Role in Project Apollo vol III pages 91 and 274.

The cores in core rope were not regular ferrite cores, but permalloy ribbon wound around a non-magnetic steel bobbin. If you're used to ferrite cores, winding a metallic ribbon around a bobbin may seem like a strange way to make a core, but that was how the earliest cores were built, until ferrite cores started to be used in the early 1950s. The ferromagnetic materials used in wound-ribbon cores were developed by Germany in World War II and used by the German navy in magnetic amplifiers. After the war, American personnel brought back the material along with a rolling mill, and started US manufacturing under the name Deltamax. In other words, core memory had its origin in Nazi technology captured by the US. See Memories that shaped an industry, pages 39-40, 52, 87, 90.

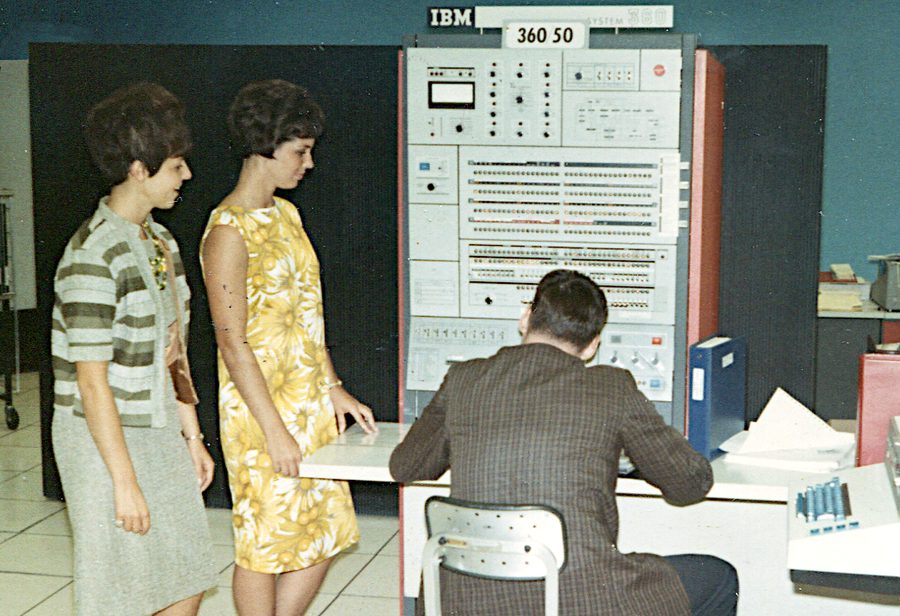

The cores used in the core rope were rather large, .249" in diameter, about 5 times the diameter of the cores used in the erasable core memory. (In comparison, some IBM 360 mainframes of the same era used tiny cores that were .021" in diameter.) When a rope core flipped, it yielded a fairly large voltage pulse of 215-430 mV. Properties of the cores were defined in NASA specification control drawing SCD-1006320. ↩

-

The AGC restoration team consists of Mike Stewart (creator of FPGA AGC), Carl Claunch, Marc Verdiell (CuriousMarc on YouTube) and myself. The AGC that we're restoring belongs to a private owner who picked it up at a scrap yard in the 1970s after NASA scrapped it. For simplicity, I refer to the AGC we're restoring as "our AGC".

The Apollo flights had one AGC in the command module (the capsule that returned to Earth) and one AGC in the lunar module. In 1968, before the Moon missions, NASA tested a lunar module with astronauts aboard in a giant vacuum chamber in Houston to ensure that everything worked in space-like conditions. We believe our AGC was installed in that lunar module (LTA-8). Since this AGC was never flown, most of the modules were not potted with epoxy. ↩

-

Yes, we know about Francois and the rope modules he read; those are ropes for the earlier Block I Apollo Guidance Computer and are not compatible with our Block II AGC. Also, many people have asked if we talked to Fran about the DSKY. Yes, we have. ↩

-

Mike Stewart pointed out that a core wouldn't have all 192 sense wires passing through it. Because the system used odd parity, at most 15 of the 16 bits can be high. Thus, at most 180 sense wires would pass through a core. ↩
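This bound is easy to check by brute force. The Python sketch below appends an odd-parity bit to every possible 15-bit value and finds the largest number of 1 bits in any resulting 16-bit word. The helper `with_odd_parity` and its choice of bit position for the parity bit are assumptions made for the example.

```python
def with_odd_parity(data15):
    """Append a parity bit so the 16-bit word has an odd number of 1s.

    The position of the parity bit (least significant here) is an
    assumption made for this sketch.
    """
    if not 0 <= data15 < 2 ** 15:
        raise ValueError("expected a 15-bit value")
    parity = 1 - (bin(data15).count("1") % 2)
    return (data15 << 1) | parity


most_ones = max(bin(with_odd_parity(d)).count("1") for d in range(2 ** 15))
print(most_ones, 12 * most_ones)  # wires per word, wires per 12-word core
```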

-

The Apollo Guidance Computer had one additional pair of inhibit lines for address parity so all non-matching cores will have at least two inhibit lines activated. (If a core was one line away from being activated, the parity would also be different, yielding two active inhibit lines.) The purpose of this was to ensure that every non-selected core received two inhibit signals and was solidly inhibited. Otherwise, a core with just one inhibit line high might receive a bit of net set current, changing its magnetism slightly and introducing noise.

Note that 7 address lines select one of 128 cores. Each module consists of four (logical) planes of 128 cores, yielding 512 cores. To select one of the four planes, four different reset lines are used, to reset just the core in the desired plane. Thus, only that plane is read. Two set lines are used, one to set cores in planes A and B, and one for planes C and D. To avoid setting cores in two planes, the reset line in the undesired plane was activated at the same time as the set line, blocking the set in that plane. The obvious approaches would be to use four set lines (one per plane), or one set line (and use reset to block the others). I don't know why they used two set lines.

Each module also had a "clear" line that passed through all the cores. This was similar to reset, but was needed due to a complexity of the AGC's opcode decoding. To fit more opcodes into a 15-bit instruction, the AGC used "quartercode" instructions. The idea was that some instructions didn't make sense on a fixed memory address, such as increment. The AGC would perform an entirely different instruction in that case, allowing a larger instruction set. The problem was that by the time instruction decoding had decided that the instruction didn't apply to the specified address, the read of fixed memory had already started, and the core was set. The "clear" line allowed this core to be reset so it wouldn't interfere with the desired read. I don't know why the existing reset lines couldn't be used for this purpose. (Summary and details.) The schematic is here. ↩
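The parity argument in this note can be verified with a short Python sketch. It assumes, as a simplification, that each differing address bit (or parity bit) corresponds to one energized inhibit line through a non-selected core; the function names are invented, and the choice of even parity is arbitrary since the argument works for either.

```python
def extended(address):
    """7-bit core address with an even-parity bit appended (8 bits total).

    Whether the AGC used even or odd address parity does not matter for
    the argument; even parity is assumed here.
    """
    return (address << 1) | (bin(address).count("1") % 2)


def inhibit_count(core, selected, use_parity):
    """Number of energized inhibit lines through `core`.

    Assumes one energized line per differing bit, as in the note above.
    """
    a, b = (extended(core), extended(selected)) if use_parity else (core, selected)
    return bin(a ^ b).count("1")


def weakest_inhibit(use_parity):
    """Fewest inhibit lines seen by any non-selected core, over all addresses."""
    return min(
        inhibit_count(c, s, use_parity)
        for s in range(128) for c in range(128) if c != s
    )


print(weakest_inhibit(False), weakest_inhibit(True))
```

Without the parity pair, some non-selected core is always held back by just one inhibit line; with it, every non-selected core sees at least two.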

-

If you are familiar with regular core memory (i.e. erasable RAM core memory), there are many similarities, but also many important differences. First, erasable core memory was arranged in a grid, and a particular core was selected by energizing an X line and a Y line in the grid. Second, erasable core memory stored a single bit per core, with the direction of magnetization indicating a 0 or 1. Core rope, on the other hand, stored many bits per core, with a 0 or 1 depending on whether a sense wire goes through the core. The cores in core rope were much larger, since about 200 wires went through each core, while erasable core memory typically had 3 wires through each core. Finally, erasable core memory used the inhibit line for writing a 0, while core rope used inhibit lines for addressing. ↩

-

For more information on why the Apollo Guidance Computer used 15-bit words, see MIT's Role in Project Apollo vol III page 32. The short answer is they required about 27-32 bits of accuracy for navigation computations, and about 15 bits for control variables. Using a 15-bit word for small values and double-precision values for navigation provided sufficient accuracy. A 14-bit word was too small. A 17- or 18-bit word would simplify some things but increase costs. ↩

-

Core rope memory in the earlier Block I Apollo Guidance Computer was configured slightly differently. The memory was folded into 4 layers of 128 cores instead of 2 layers of 256 cores. As a result, the Block I rope modules had a different shape: roughly square in cross-section, unlike the flat Block II modules. In early Block I modules, each core had 128 sense wires (8 words) threaded through it, yielding 4K words per module. With 6 modules, an early Block I AGC had 24K words of rope storage. In later Block I AGCs, the rope modules provided more storage: 192 sense wires (12 words) per core, yielding 6K words per module. Thus, 24K of rope storage required just 4 modules. For the Block II computers used for the Moon missions, 6 modules × 512 cores per module × 192 bits per core ÷ 16 bits per word = 36864 (36K) words in total. ↩
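The capacity figures in this note follow from one formula, which the Python sketch below applies to all three configurations. The function name `rope_words` and the dictionary layout are just for this example; the numbers are the ones given in the note.

```python
def rope_words(modules, cores_per_module, sense_wires_per_core, bits_per_word=16):
    """Total words of rope storage for a given configuration."""
    return modules * cores_per_module * sense_wires_per_core // bits_per_word


configs = {
    "early Block I": (6, 512, 128),
    "later Block I": (4, 512, 192),
    "Block II": (6, 512, 192),
}
for name, config in configs.items():
    print(name, rope_words(*config))
```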

-

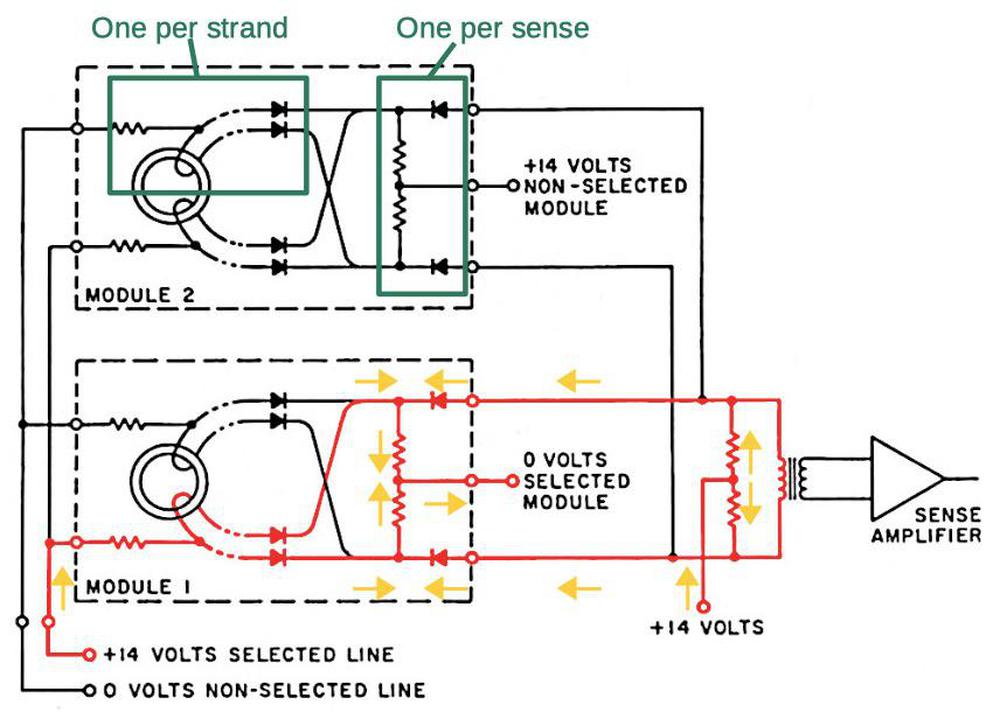

Selection of a particular module and strand was done through a resistor/diode biasing network using hundreds of resistors and diodes in each module. The diagram below shows how this network operated. The selected strand line was pulled high, while the selected module line was pulled low. This resulted in current (red) through the selected strand and through the sense amplifier transformer. The remaining diodes were reverse-biased, so no current flowed. A voltage pulse on the sense wire through the selected core perturbed the current flow, resulting in a voltage imbalance across the sense amplifier transformer. This signal was detected by the sense amplifier, resulting in a 1 bit. (This circuit is rather confusing; you might expect the circuit to be a loop through the sense wire and the sense amplifier transformer, but instead, the currents are flowing towards the 0-volt module line in the middle. Study the directional arrows carefully to see how the current flows. The current from a flipped core is essentially superimposed on this current flow.)

A particular strand and module were selected by a resistor/diode network. The non-selected diodes were reverse-biased, blocking those signals from the sense amplifiers. Based on MIT's Role in Project Apollo vol III, Fig. 3-13.

The resistor and diodes in the left green box were repeated once for each strand (i.e. 192 times in each module), while the resistor and diodes in the right box were repeated once for each sense line (i.e. 16 times in each module). ↩

-

The need to freeze the software design weeks in advance was viewed as a feature: "The inability to change the program without rebuilding one or more modules provides an effective management tool for the control of software changes. It also provides another incentive to make the software error free." See MIT's Role in Project Apollo vol III page 274. Much of the information in this section on core rope manufacturing is from One Giant Leap. The 1965 video Computers For Apollo shows the AGC manufacturing process (including core ropes) in detail. ↩

-

There are interesting gender issues behind the manufacture of core rope and core memory that I'll only mention briefly. The core rope has been referred to as LOL memory, referencing the "Little Old Ladies" who assembled it, but this name erases the women of color who also assembled core ropes. (I'm also not convinced that the LOL name was used at the time.) The software for a particular flight was managed by a "rope mother" who was generally male (although the famous Margaret Hamilton was rope mother on LUMINARY). Also see Making Core Memory: Design Inquiry into Gendered Legacies of Engineering and Craftwork.

Women weaving a core rope. Raytheon photo, via BBC.

-

The sense amplifier output circuitry is a bit confusing because the erasable core memory (RAM) and fixed rope core memory (ROM) sense amp outputs were wired together to connect to the CPU. The RAM had one sense amp module with 16 amplifiers in slot B13, and the ROM had its own identical sense amp module in slot B14. However, each module only had 8 output transistors. The two modules were wired together so 8 output bits are driven by transistors in the RAM's sense amp module and 8 output bits are driven by transistors in the ROM's sense amp module. (The motivation behind this was to use identical sense amp modules for RAM and ROM, but only needing 16 output transistors in total. Thus, the transistors are split up 8 to a module.) ↩

-

I'll give a bit more detail on the sense amps here. The key challenge with the sense amps is that the signal from flipping a core is small and there are multiple sources of noise that the sense line can pick up. By using a differential signal (i.e. looking at the difference between the two inputs), noise that is picked up by both ends of the sense line (common-mode noise) can be rejected. The differential transformer improved the common-mode noise rejection by a factor of 30. (See page 9-16 of the Design Review.) The other factor is that the sense line goes through some cores in the same direction as the select lines, and through some cores in the opposite direction. This helps cancel out noise from the select lines. However, the consequence is that the pulse on the sense line may be positive or may be negative. Thus, the sense amp needed to handle pulses of either polarity; the threshold stage converted the bipolar signal to a binary output.

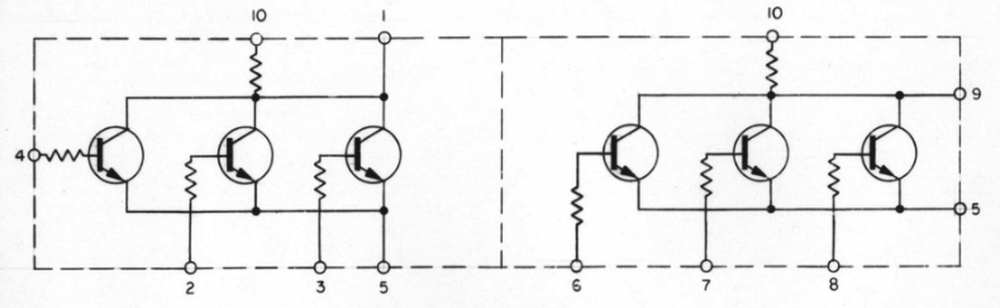

Schematic of the circuitry inside a sense amplifier IC.

The sense amplifier (above) was a custom integrated circuit designed by Sperry Rand in 1962. This chip pushed the state of the art for analog ICs and it may be the first integrated circuit amplifier. The sense amp chips initially cost $200 each, equivalent to $1700 now. ↩
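The two ideas in this note, differential sensing and a threshold that accepts either polarity, can be illustrated with a toy numeric model in Python. This is an idealized sketch: the `sense_bit` function, the 100 mV threshold, and the noise and signal levels are all made-up values, and a real amplifier rejects common-mode noise only partially rather than perfectly.

```python
def sense_bit(end_a_mv, end_b_mv, threshold_mv=100.0):
    """Return 1 if the differential pulse exceeds the threshold in either polarity.

    The 100 mV threshold is a made-up value for illustration.
    """
    differential = end_a_mv - end_b_mv   # noise common to both ends cancels
    return int(abs(differential) > threshold_mv)


noise = 500.0  # common-mode pickup, larger than the signal itself
print(sense_bit(noise + 150.0, noise - 150.0))   # positive pulse
print(sense_bit(noise - 150.0, noise + 150.0))   # negative pulse
print(sense_bit(noise, noise))                   # noise only
```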

-

For more information on the AGC, see the Virtual AGC site; in particular, its ElectroMechanical page has lots of schematics and drawings. There's a video of Eldon Hall, designer of the AGC, disassembling our AGC in 2004. Eldon Hall's book Journey to the Moon describes development of the Apollo Guidance Computer in detail, and was very helpful for this blog post. If you want to try a simulated AGC in your browser, see moonjs.

The Apollo manuals provide detailed information on the memory system. The manual has a block diagram of the AGC's memory system. Engineering drawings of the AGC's core rope are here. Fixed memory is described in the AC Electronic LM Manual volume 2 starting at 4-594 and a core rope wiring diagram is here. Sense amplifiers: description, schematics. Strand and module select circuits: description, schematic, schematic. Set circuit: description, description, schematic, schematic. Reset circuit: description, description, schematic. Inhibit circuit: description, schematic. Address decoder: description, schematic. ↩