Intel released the powerful Pentium processor in 1993, a chip to "separate the really power-hungry folks from ordinary mortals." The original Pentium was followed by the Pentium Pro, the Pentium II, and others, spawning a long-running brand of high-performance processors, Intel's flagship line until the Core processors took over in 2006. The Pentium eventually became virtually synonymous with "PC" and even made it into pop culture.

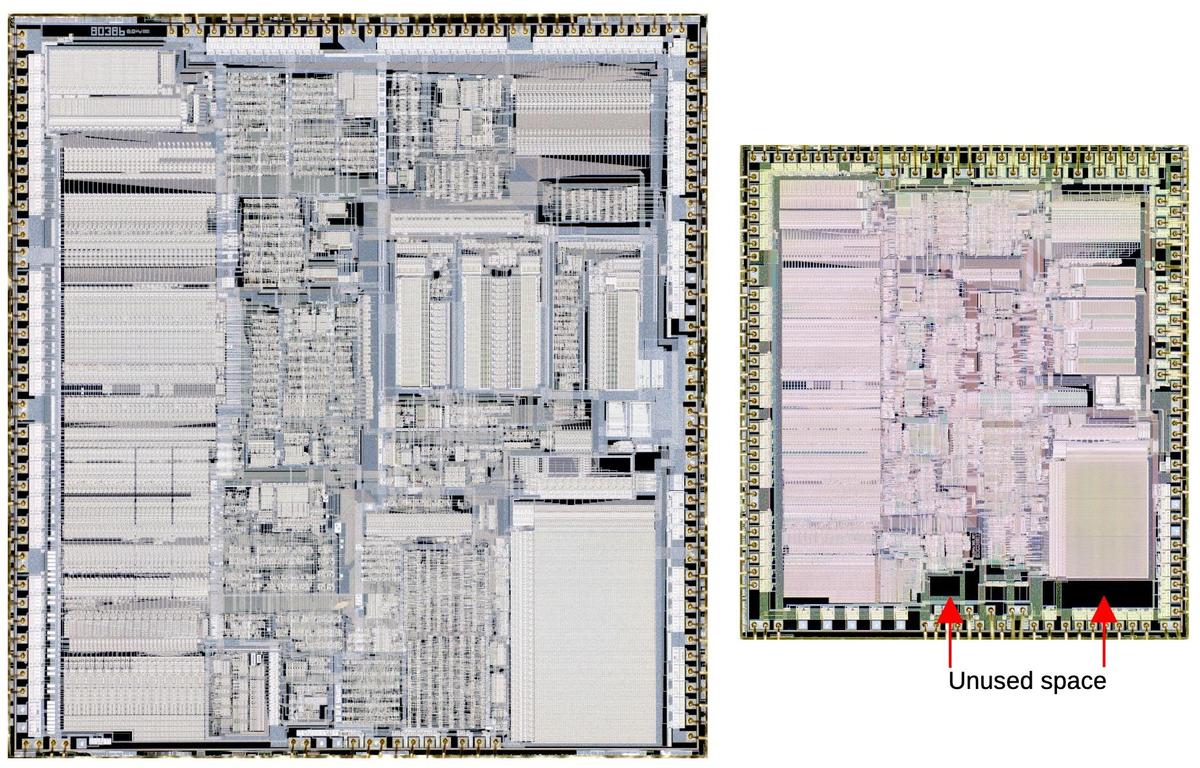

Even though the Pentium is a complex chip with 3.3 million transistors, its transistors are visible under a microscope, unlike modern chips. By examining the chip, we can see the interesting circuits used for gates, flip-flops, and other circuits, including the use of an unusual technology called BiCMOS. In this article, I take a close look at the original Pentium chip1, showing how much of its circuitry was built out of structured rows of tiny transistors, a technique known as standard-cell design.

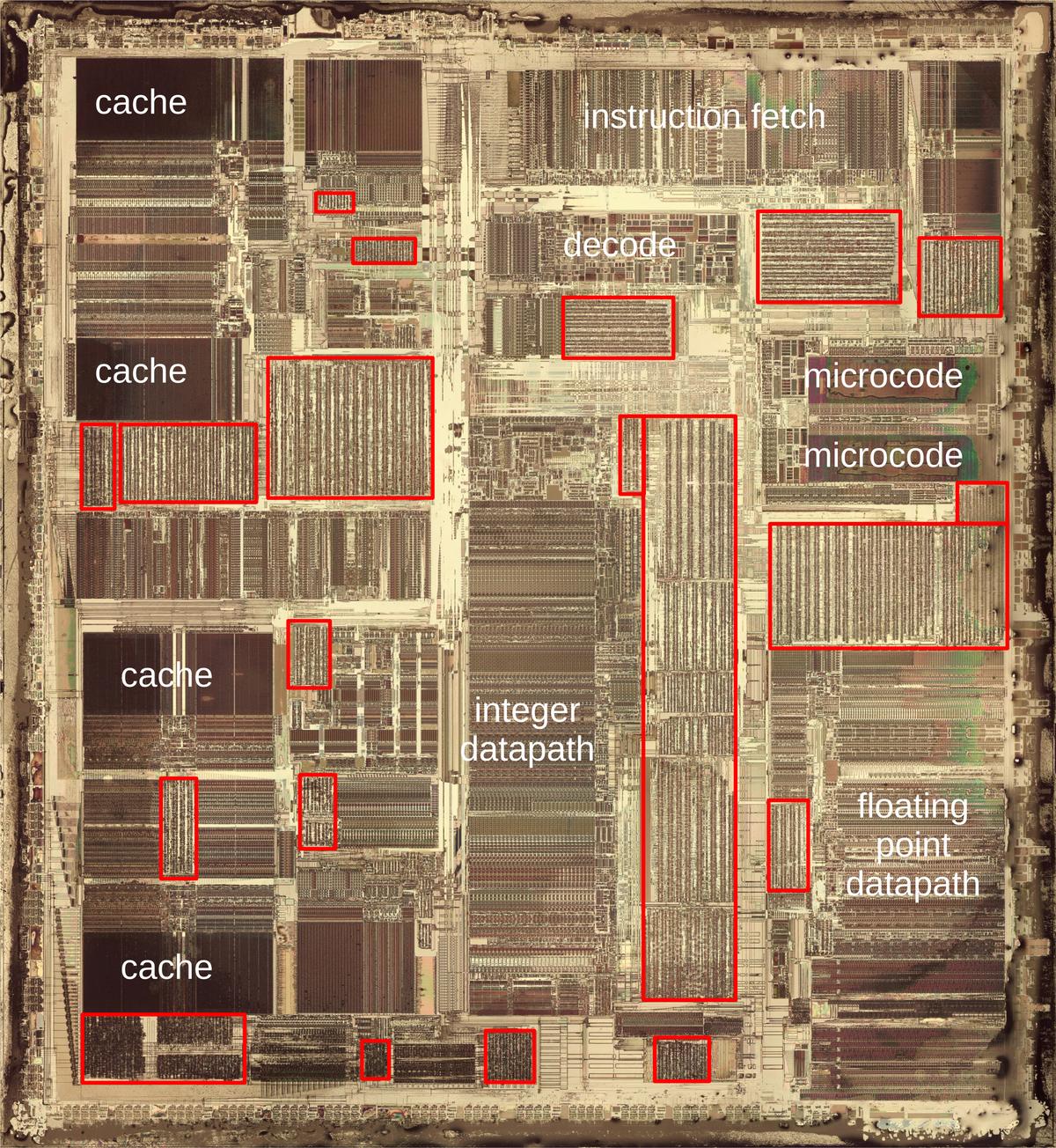

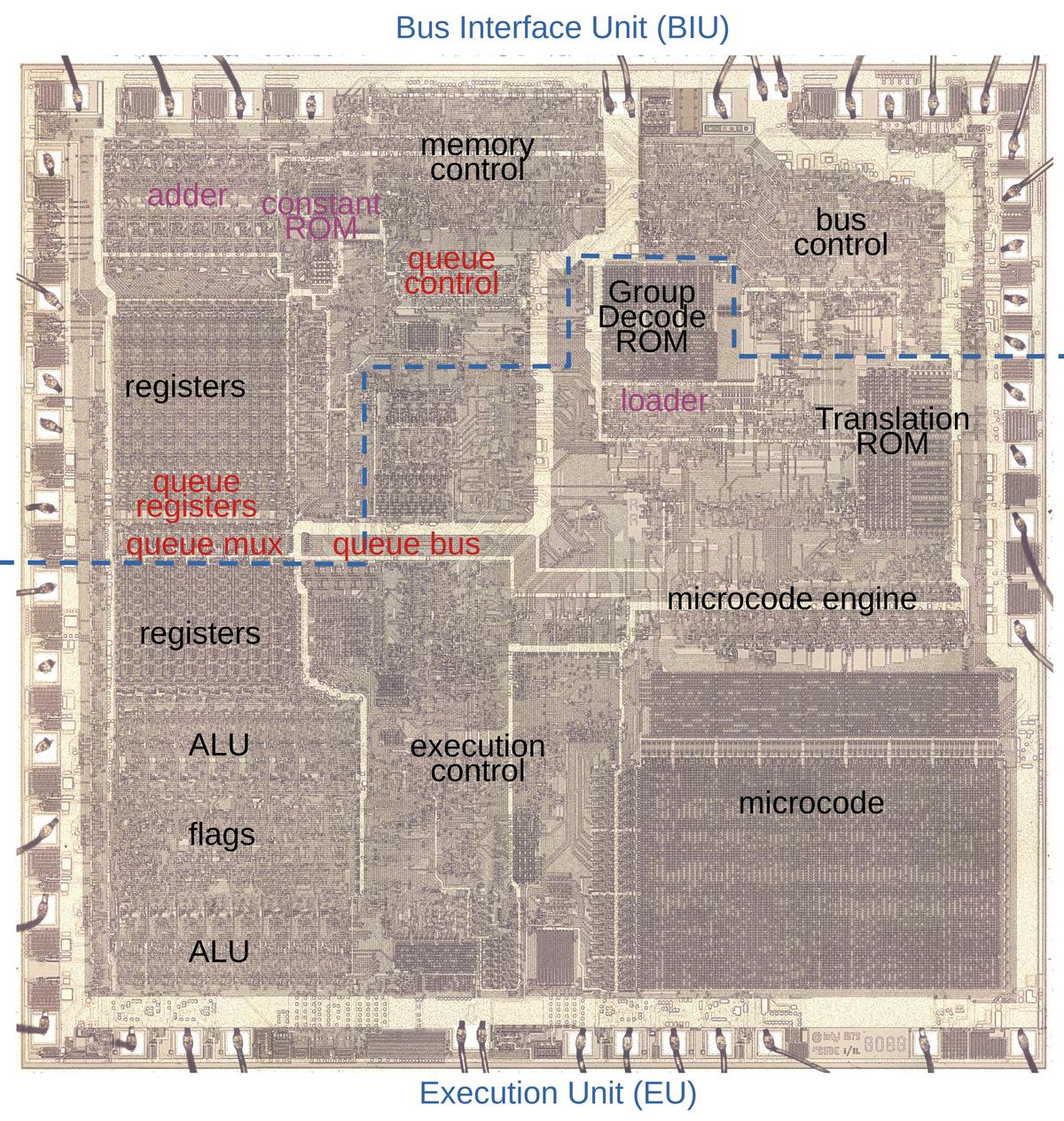

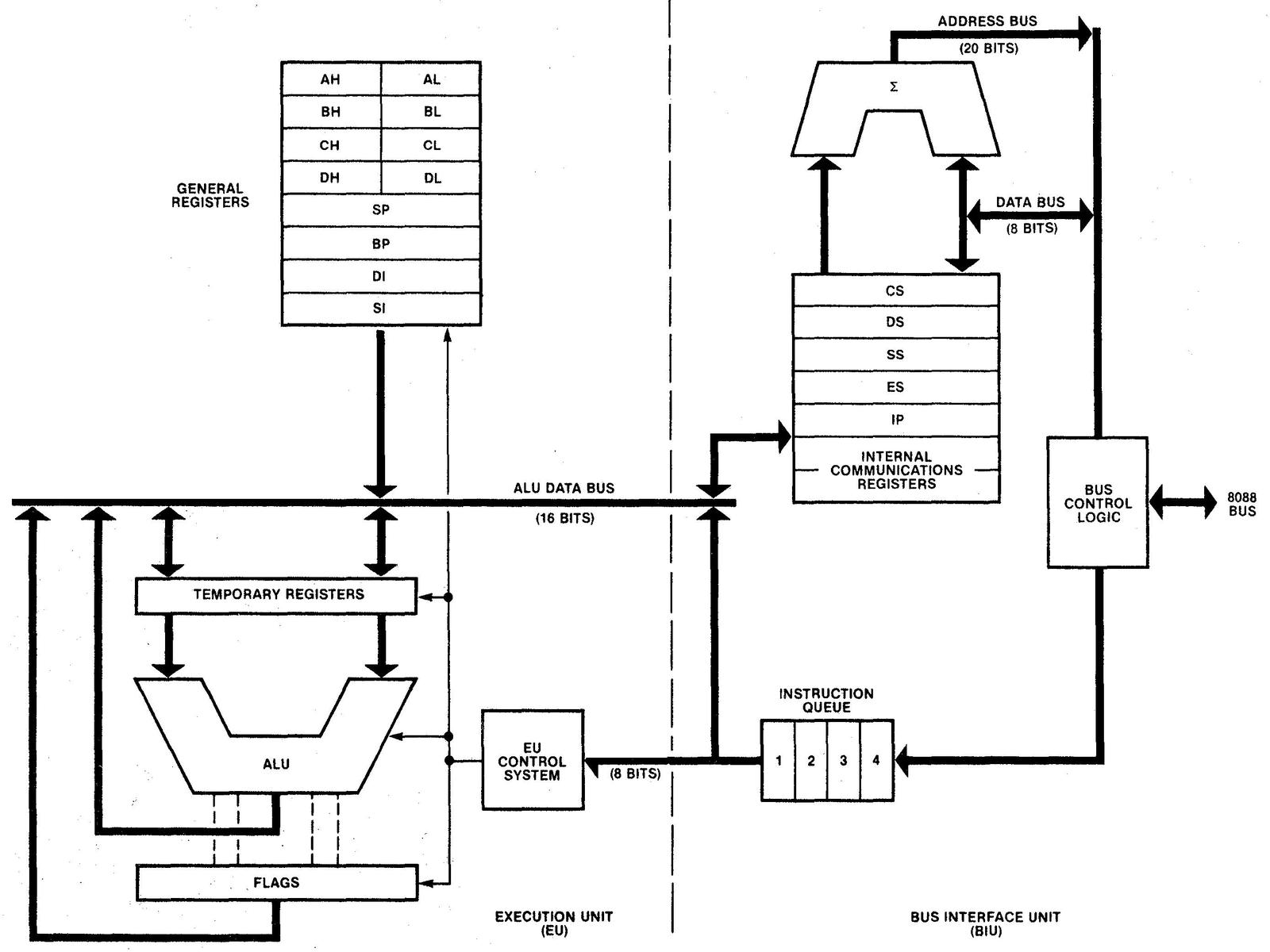

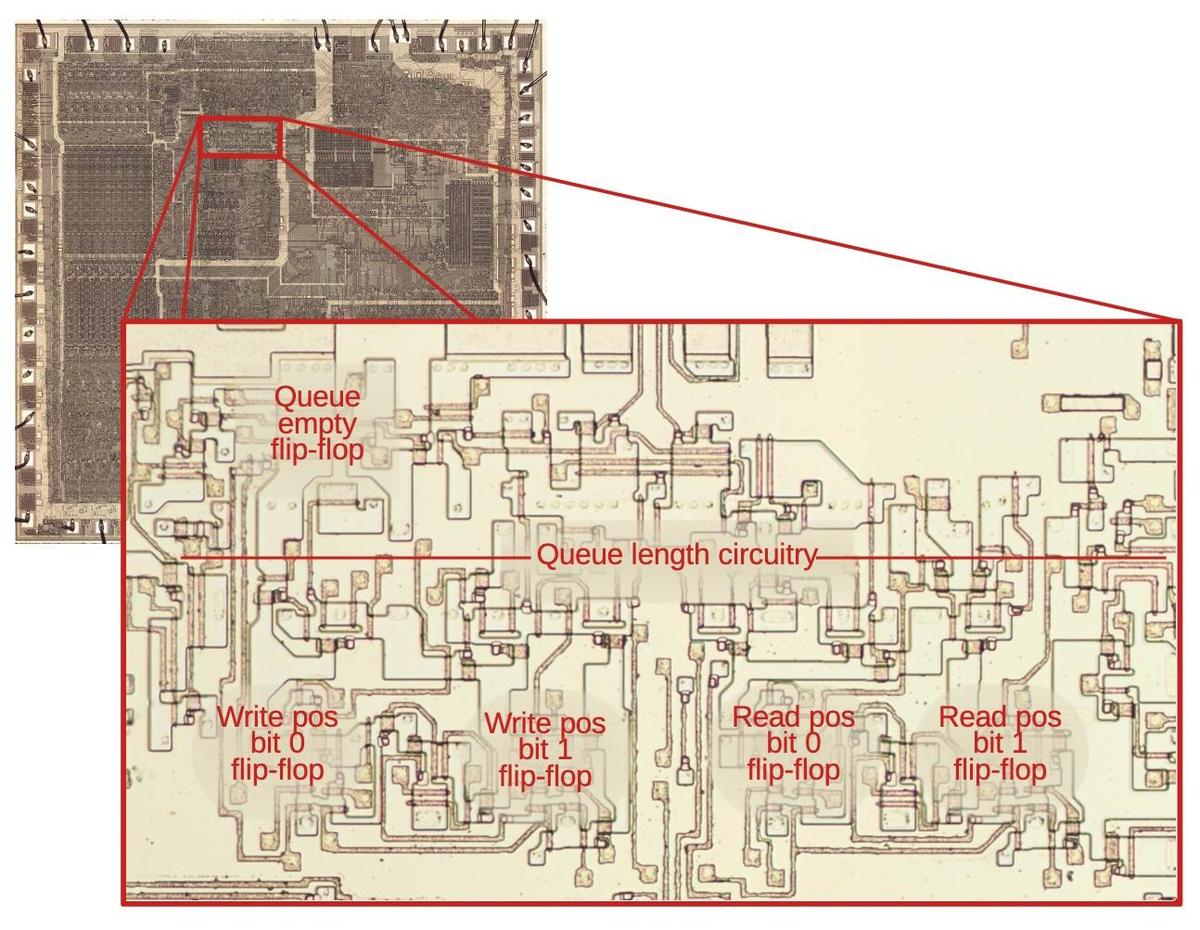

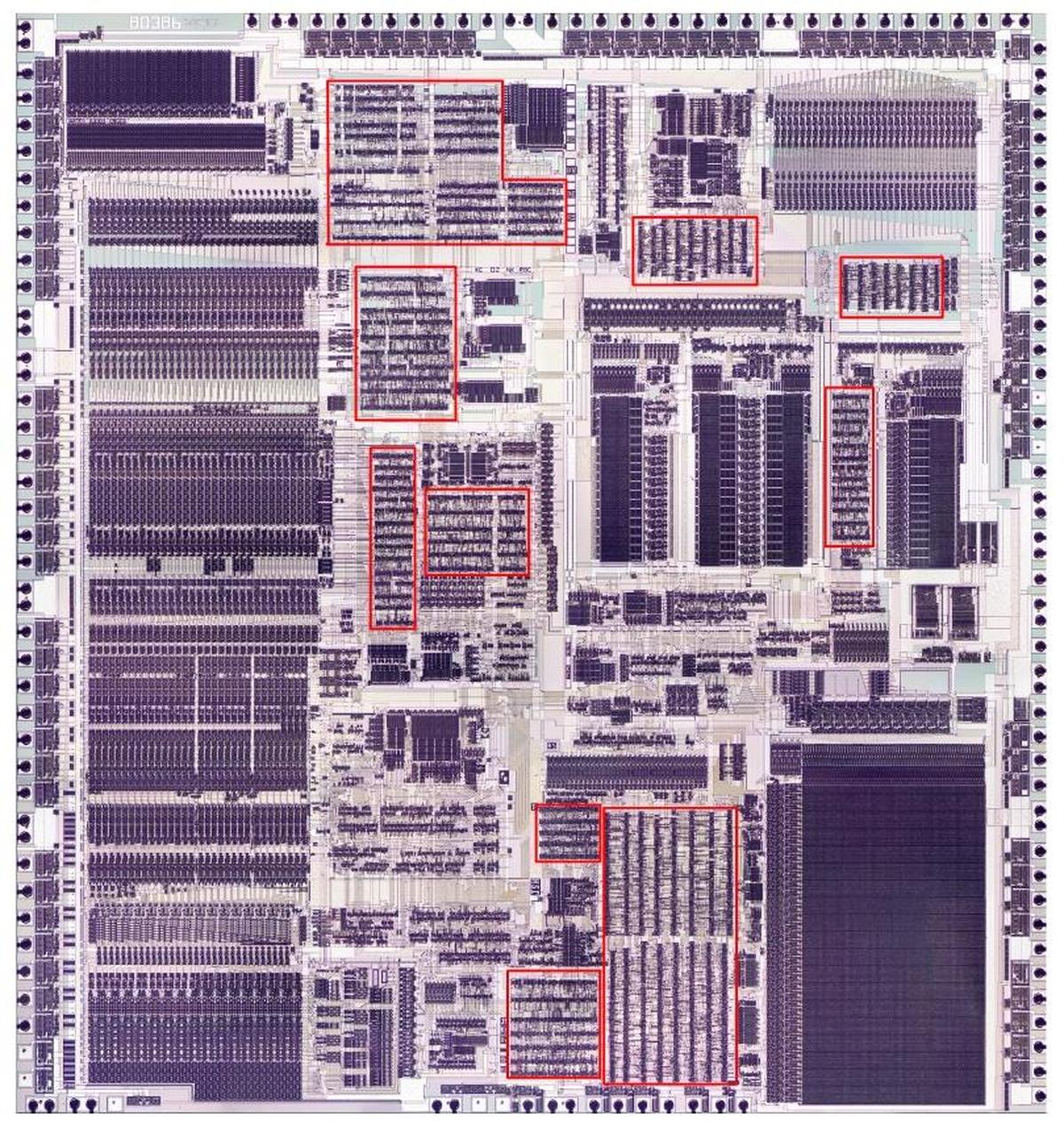

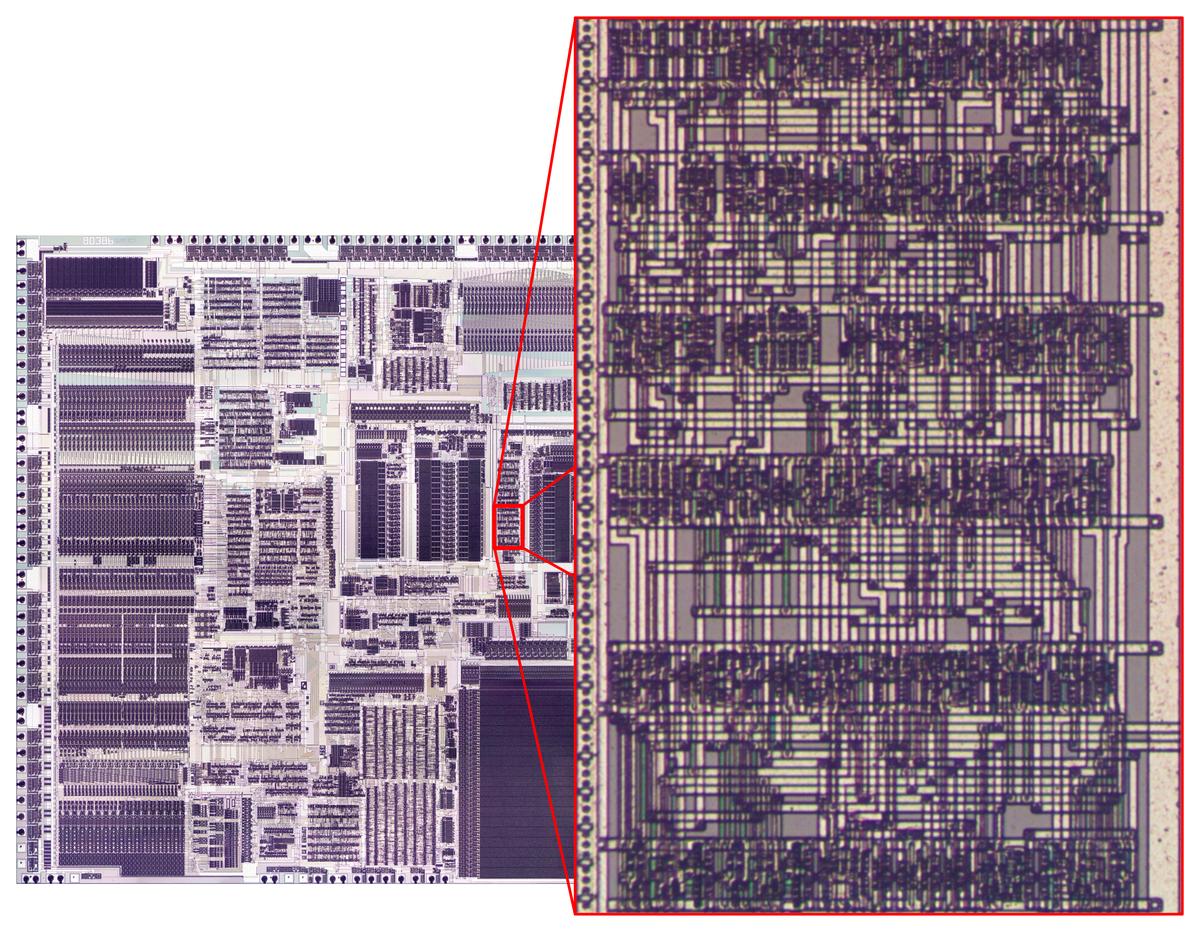

The die photo below shows the Pentium's fingernail-sized silicon die under a microscope. I removed the chip's four metal layers to show the underlying silicon, revealing the individual transistors, which are obscured in most die photos by the layers of metal. Standard-cell circuitry, indicated by red boxes, is recognizable because the circuitry is arranged in uniform columns of cells, giving it a characteristic striped appearance. In contrast, the chip's manually-optimized functional blocks are denser and more structured, giving them a darker appearance. Examples are the caches on the left, the datapaths in the middle, and the microcode ROMs on the right.

Standard-cell design

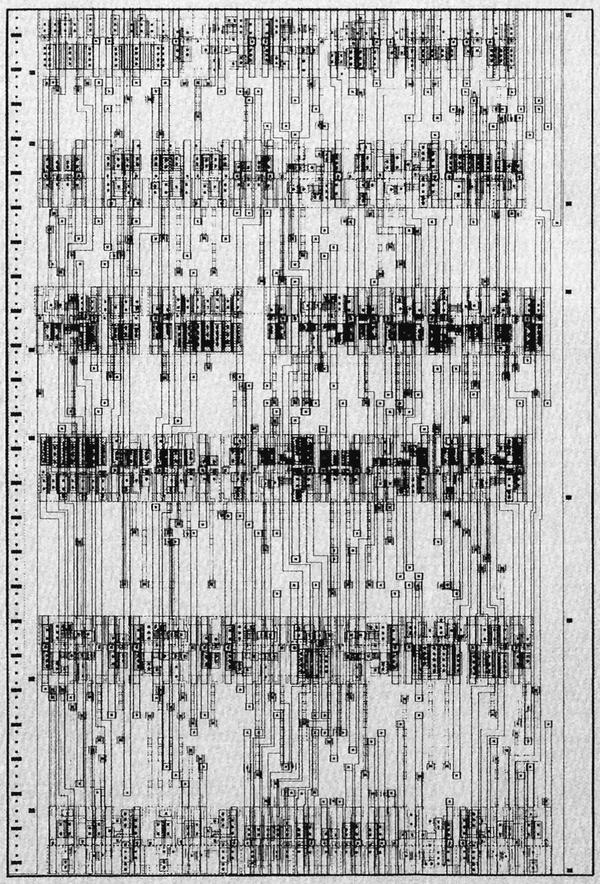

Early processors in the 1970s were usually designed by manually laying out every transistor individually, fitting transistors together like puzzle pieces to optimize their layout. While this was tedious, it resulted in a highly dense layout. Federico Faggin, designer of the popular Z80 processor, was almost done when he ran into a problem. The last few transistors wouldn't fit, so he had to erase three weeks of work and start over. The closeup of the resulting Z80 layout below shows that each transistor has a different, complex shape, optimized to pack the transistors as tightly as possible.2

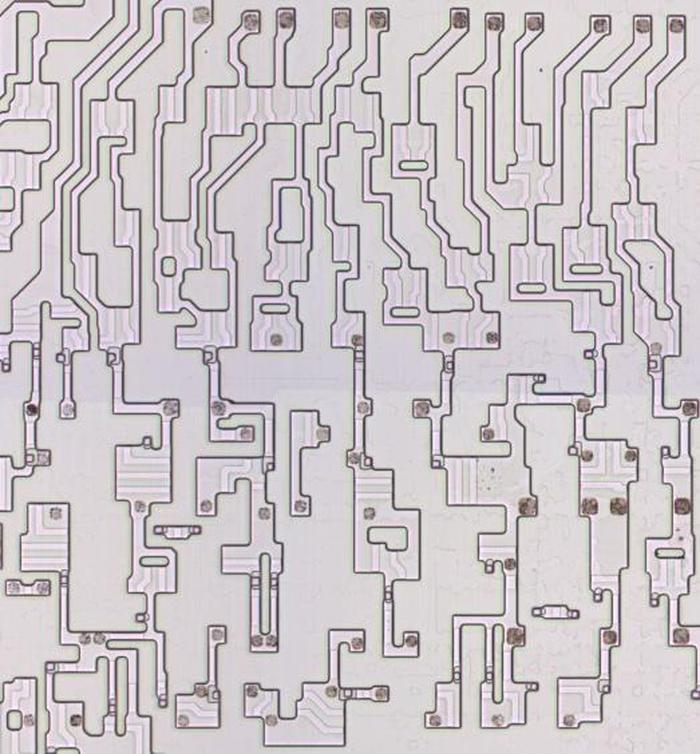

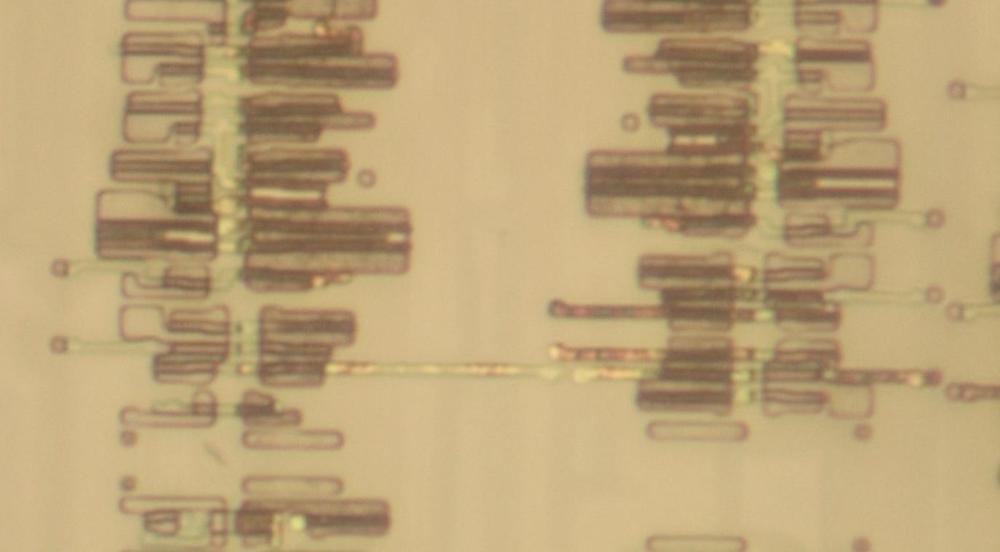

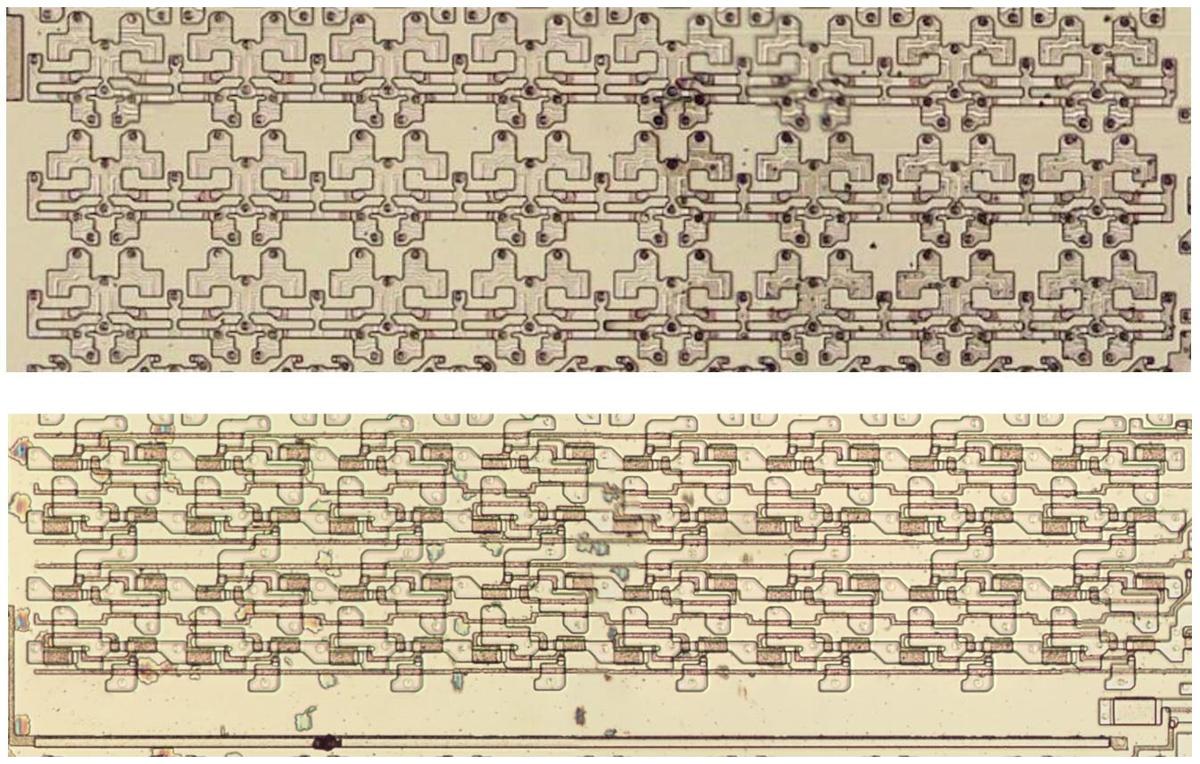

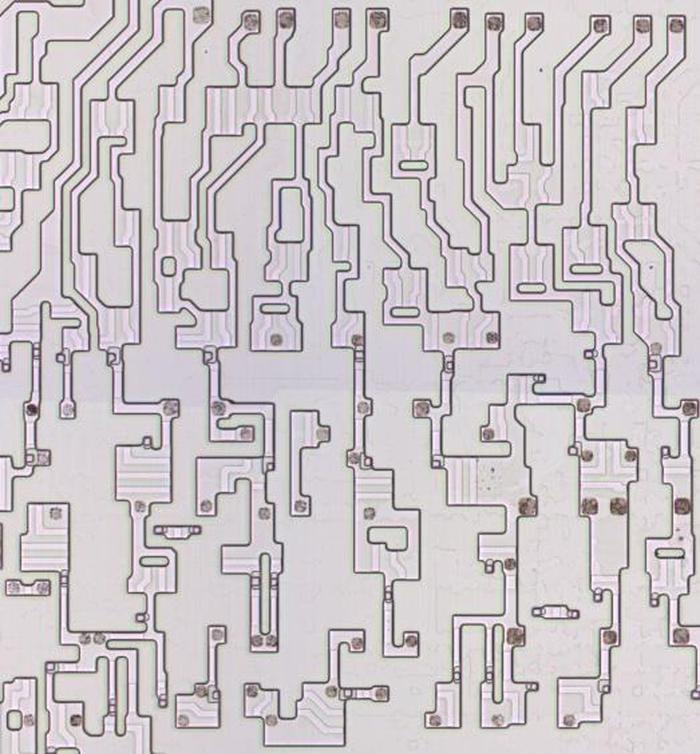

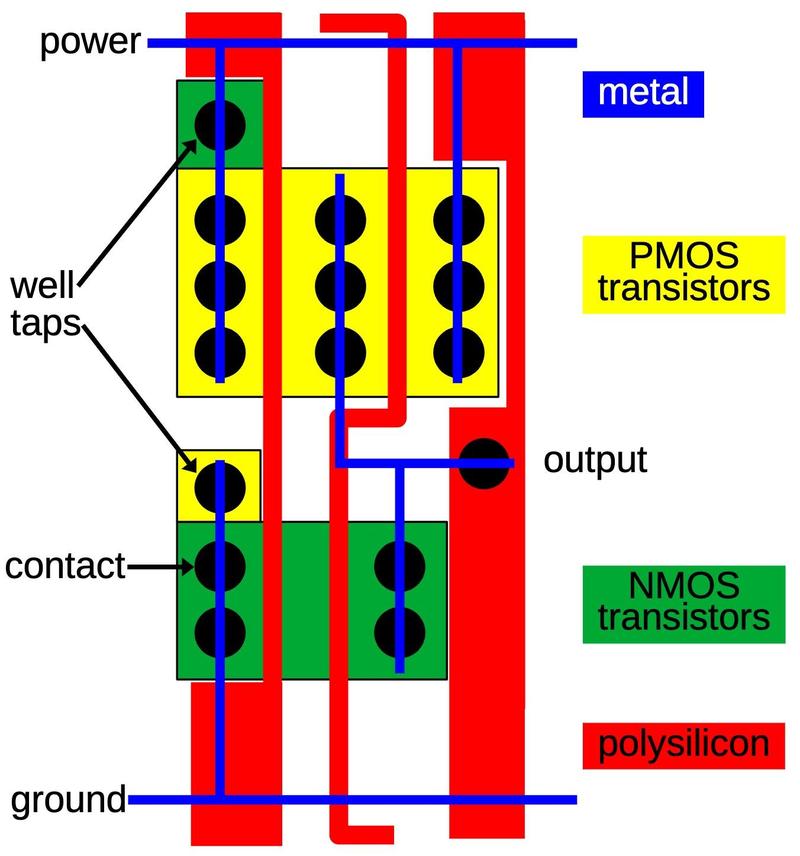

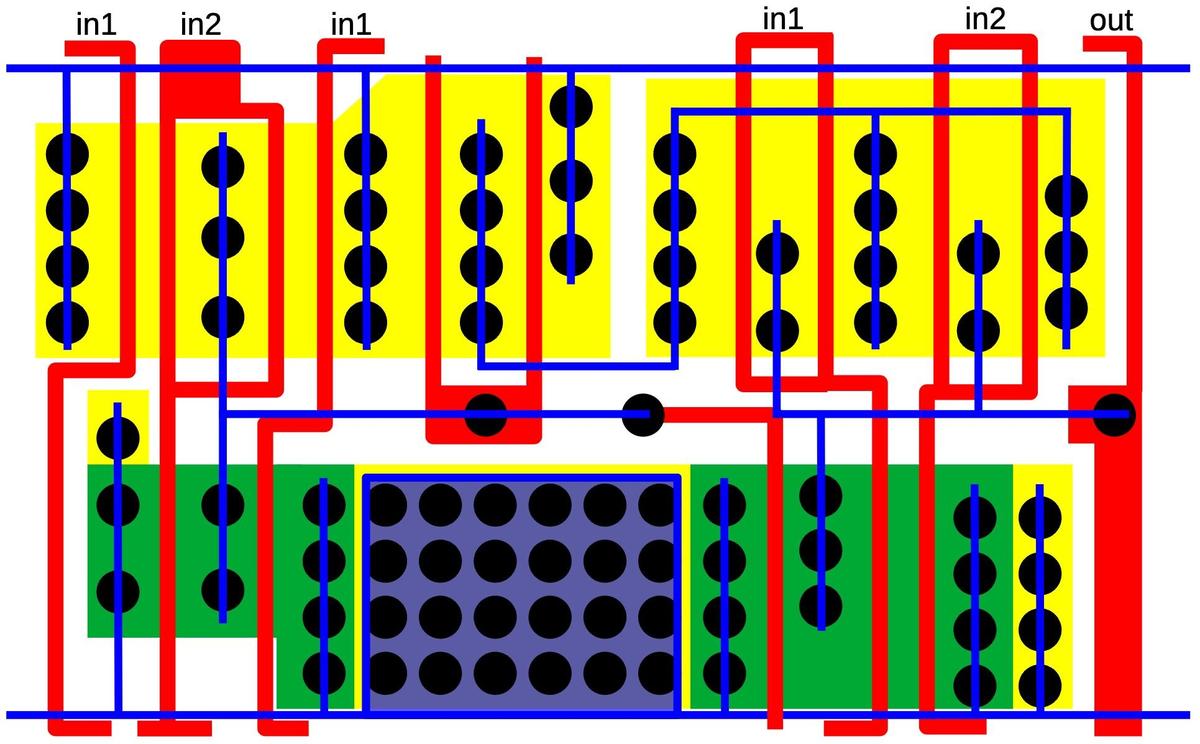

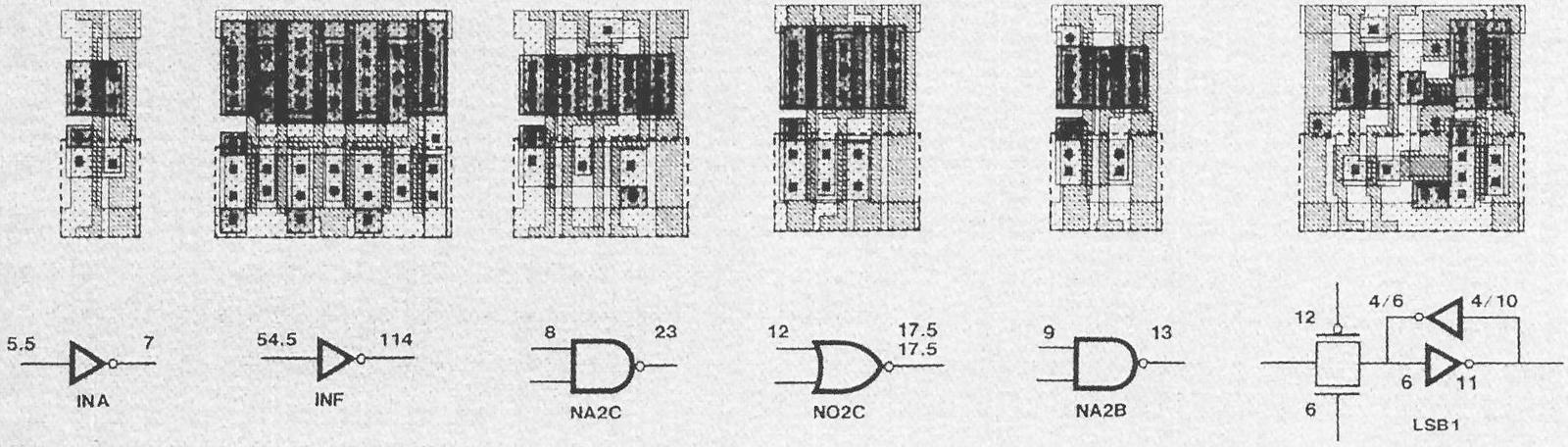

Because manual layout is slow, difficult, and error-prone, people developed automated approaches such as standard-cell.3 The idea behind standard-cell is to create a standard library of blocks (cells) to implement each type of gate, flip-flop, and other low-level component. To use a particular circuit, instead of arranging each transistor, you use the standard design from the library. Each cell has a fixed height but the width varies as needed, so the standard cells can be arranged in rows. The Pentium die photo below shows seven cells in a row. (The rectangular blobs are doped silicon while the long, thin vertical lines are polysilicon.) Compare the orderly arrangement of these transistors with the Z80 transistors above.

The photo below zooms out to show five rows of standard cells (the dark bands) and the wiring in between. Because CMOS circuitry uses two types of transistors (NMOS and PMOS), each standard-cell row appears as two closely-spaced bands: one of NMOS transistors and one of PMOS transistors. The space between rows is used as a "wiring channel" that holds the wiring between the cells. Power and ground for the circuitry run along the top and bottom of each row.

The fixed structure of standard cell design makes it suitable for automation, with the layout generated by "automatic place and route" software. The first step, placement, consists of determining an arrangement of cells that minimizes the distance between connected cells. Running long wires between cells wastes space on the die, since you end up with a lot of unnecessary metal wiring. But more importantly, long paths have higher capacitance, slowing down the signals. Once the cells are placed in their positions, the "routing" step generates the wiring to connect the cells. Placement and routing are both difficult optimization problems that are NP-complete.

Intel started using automated place and route techniques for the 386 processor, since it was much faster than manual layout and dramatically reduced the number of errors. Placement was done with a program called Timberwolf, developed by a Berkeley grad student. As one member of the 386 team said, "If management had known that we were using a tool by some grad student as a key part of the methodology, they would never have let us use it." Intel developed custom software for routing, using an iterative heuristic approach. Standard-cell design is still used in current processors, but the software is much more advanced.
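To make the placement step concrete, here is a minimal sketch of placement by simulated annealing, the technique TimberWolf is known for. Everything in it is an assumption for illustration: the cell names, the netlist, the single-row layout, and the cooling schedule are hypothetical, and the cost is simply the horizontal span of each net rather than anything Intel's tools computed.

```python
import math
import random

def wire_length(order, nets):
    """Total horizontal span of all nets, with cells placed left to right in `order`."""
    pos = {cell: i for i, cell in enumerate(order)}
    return sum(max(pos[c] for c in net) - min(pos[c] for c in net) for net in nets)

def anneal_placement(cells, nets, steps=5000, t_start=5.0, t_end=0.01, seed=1):
    """Swap random pairs of cells, sometimes accepting worse layouts while 'hot'."""
    rng = random.Random(seed)
    order = list(cells)
    cost = wire_length(order, nets)
    best_order, best_cost = order[:], cost
    for step in range(steps):
        # Temperature decays geometrically from t_start toward t_end.
        t = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]
        new_cost = wire_length(order, nets)
        delta = new_cost - cost
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            cost = new_cost
            if cost < best_cost:
                best_order, best_cost = order[:], cost
        else:
            order[i], order[j] = order[j], order[i]  # reject: undo the swap
    return best_order, best_cost

# Hypothetical cells and two-pin nets, for illustration only.
cells = ["inv1", "nand1", "nand2", "latch1", "buf1", "inv2"]
nets = [("inv1", "buf1"), ("nand1", "inv2"), ("nand2", "latch1"),
        ("buf1", "nand2"), ("inv2", "latch1")]

initial_cost = wire_length(cells, nets)
placed, final_cost = anneal_placement(cells, nets)
print("initial wire length:", initial_cost)
print("after annealing:", final_cost, placed)
```

A real placer works in two dimensions across many rows and also has to account for cell widths, timing, and routing congestion, so this only conveys the flavor of the optimization.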

A brief overview of CMOS

Before looking at the standard cell circuits in detail, I'll give a quick overview of how CMOS circuits are implemented.

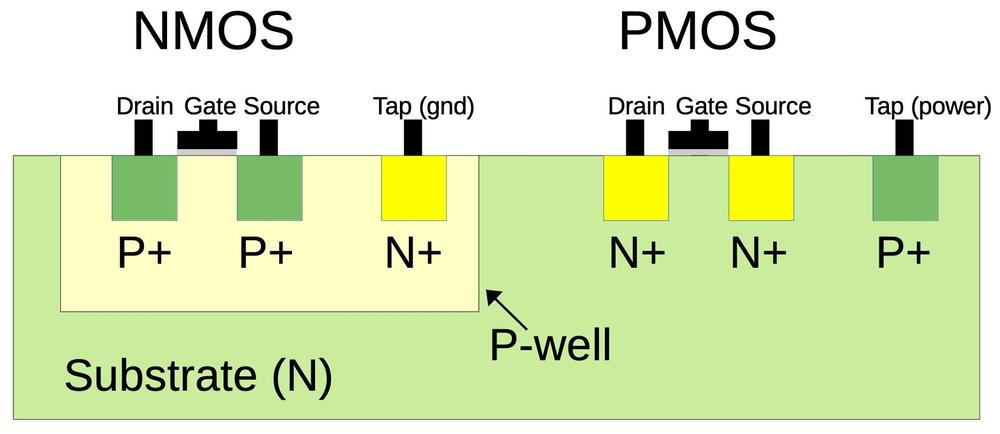

Modern processors are built from CMOS circuitry, which uses two types of transistors: NMOS and PMOS.

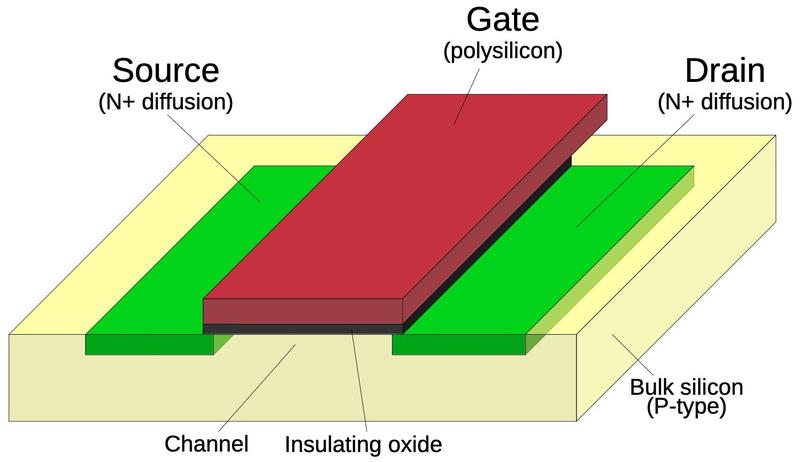

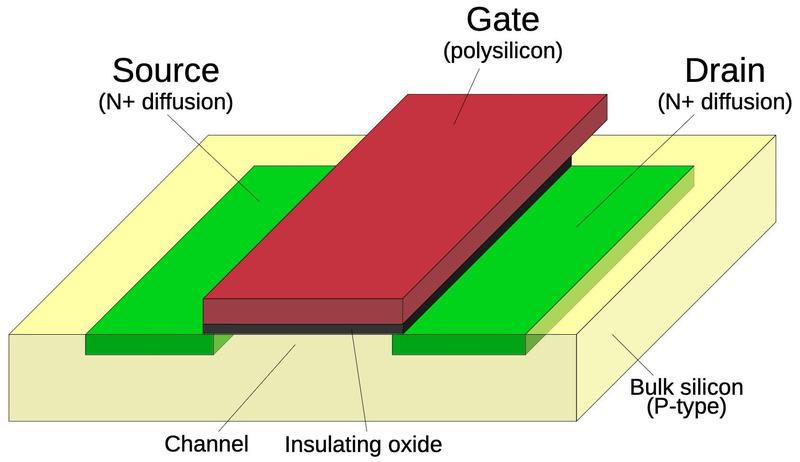

The diagram below shows how an NMOS transistor is constructed. The transistor can be considered a switch between the source and drain, controlled by the gate. The source and drain regions (green) consist of silicon doped with impurities to change its semiconductor properties, forming N+ silicon. The gate consists of a layer of polysilicon (red), separated from the silicon by a very thin insulating oxide layer. Whenever polysilicon crosses active silicon, a transistor is formed.

The NMOS and PMOS transistors are opposite in their construction and operation. A PMOS transistor swaps the N-type and P-type silicon, so it consists of P+ regions in a substrate of N silicon. In operation, an NMOS transistor turns on when the gate is high, while a PMOS transistor turns on when the gate is low.4 An NMOS transistor is best at pulling its output low, while a PMOS transistor is best at pulling its output high. In a CMOS circuit, the transistors work as a team, pulling the output high or low as needed; the "C" in CMOS indicates this "Complementary" approach. NMOS and PMOS transistors are not entirely symmetrical, however, due to the underlying semiconductor physics. Instead, PMOS transistors need to be larger than NMOS transistors, which helps to distinguish PMOS transistors from NMOS transistors on the die.

The layers of circuitry in the Pentium

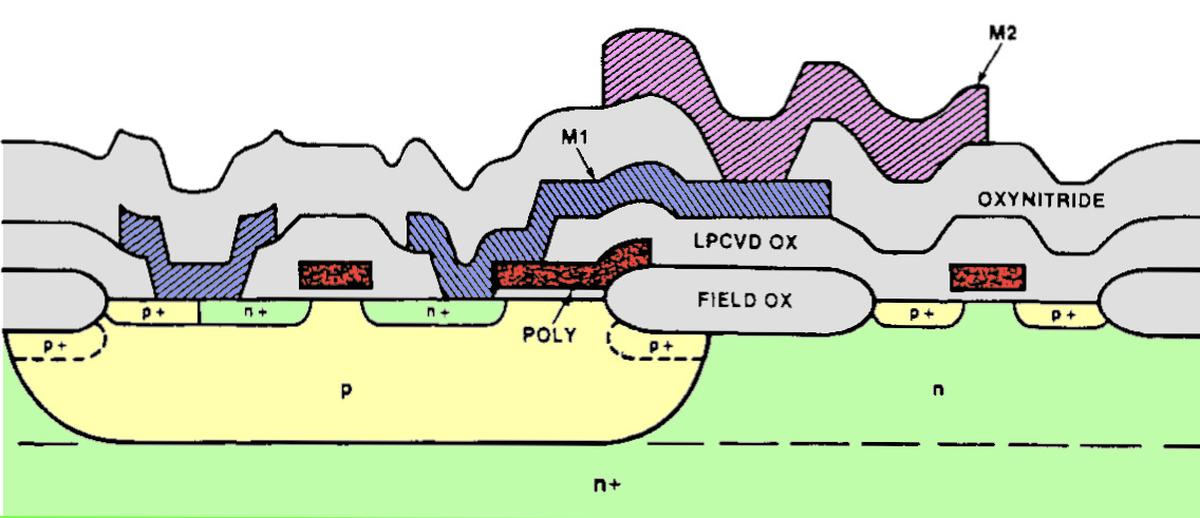

The construction of the Pentium is more complicated than the diagram above, with four layers of metal wiring that connect the transistors.5 Starting at the surface of the silicon die, the Pentium's transistors are similar to the diagram, with regions of silicon doped to change their semiconductor properties. Polysilicon wiring is created on top of the silicon. The most important role of the polysilicon is that when it crosses doped silicon, a transistor is formed, with the polysilicon as the gate. However, polysilicon is also used as wiring over short distances.

Above the silicon, four layers of metal connect the components: multiple metal layers allow signals to crisscross the chip without running into each other. The metal layers are numbered M1 through M4, with M1 on the bottom. A few rules control the wiring: a metal layer can connect with the layer above or below through a tungsten plug called a "via". Only the bottom metal, M1, can connect to the silicon or polysilicon, through a "contact". The layers usually alternate between horizontal wiring and vertical wiring (at least locally). Thus, a signal from a transistor may travel through M1, bounce up to M2 and M3 to cross other signals, and then go back down to M1 to connect to another transistor. As you can see, automated place and route software has a complicated task, producing millions of complicated wiring paths as densely as possible.
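The wiring rules above can be written down as a small checker. This is only a sketch of the rules as stated in the text: the layer names and the idea of describing a route as a list of layers are my own assumptions, not a real design-rule format.

```python
METALS = ["M1", "M2", "M3", "M4"]   # bottom to top
BOTTOM_LAYERS = {"silicon", "poly"}

def can_connect(a, b):
    """True if layers a and b can be joined directly by a via or a contact."""
    if a in METALS and b in METALS:
        # A via only joins a metal layer to the layer directly above or below.
        return abs(METALS.index(a) - METALS.index(b)) == 1
    # Silicon and polysilicon reach the metal only through a contact to M1.
    return "M1" in (a, b) and ({a, b} - {"M1"}) <= BOTTOM_LAYERS

def valid_route(path):
    """Check every layer-to-layer hop along a signal's path."""
    return all(can_connect(a, b) for a, b in zip(path, path[1:]))

# A signal leaves a transistor, climbs to M3 to cross other wires, and comes back down.
print(valid_route(["silicon", "M1", "M2", "M3", "M2", "M1", "poly"]))  # True
# Silicon cannot connect straight to M2; it must go through M1.
print(valid_route(["silicon", "M2"]))  # False
```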

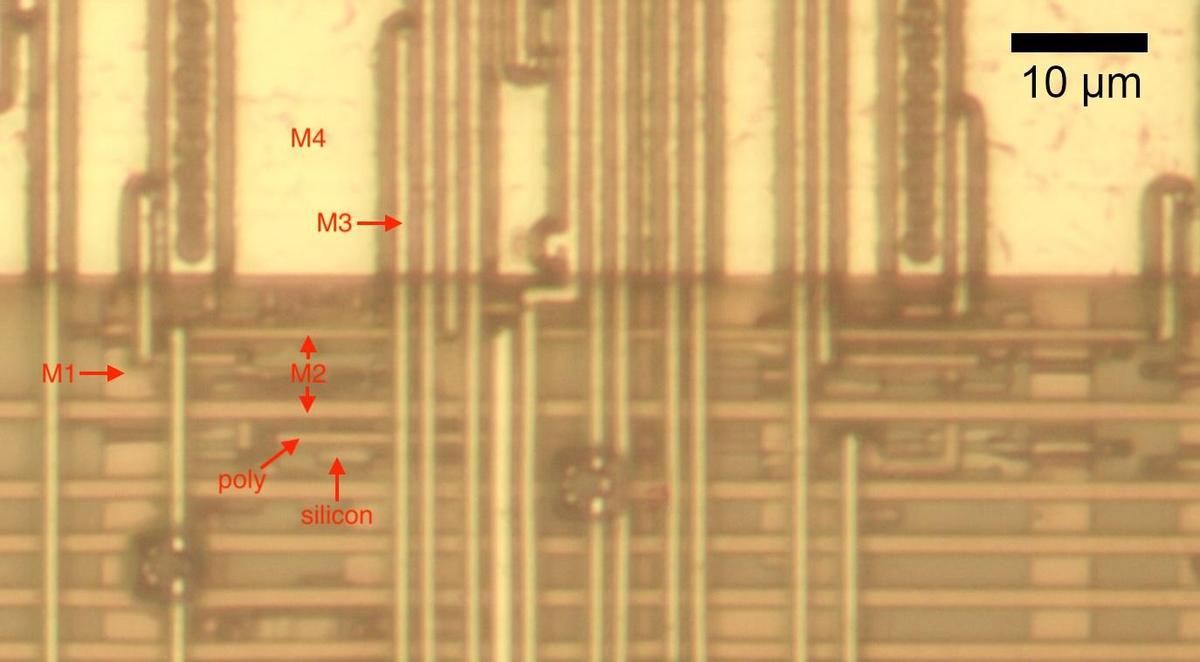

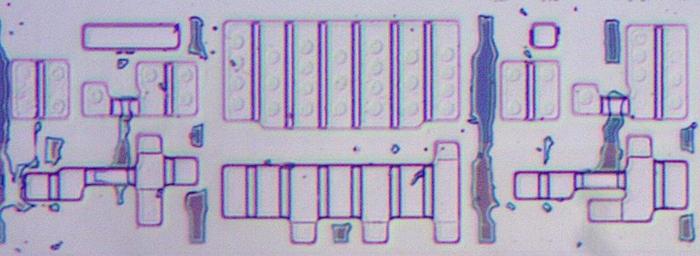

The diagram below shows how the layers appear on the chip. (This photo shows one of the rare spots on the chip where all the layers are visible.) The M4 metal layer on top of the chip is the thickest, so it is mostly used for power, ground, and clock signals rather than data. An M4 ground wire covers the top of this photo. The next layer down is M3. In this part of the chip, M3 lines run vertically. (Due to optical effects, the vertical M3 lines may look like they are on top of M4, but they are below.) The horizontal M2 metal lines are lower and appear brown rather than golden, due to the oxide layers that cover them. The bottom metal layer is M1. The vertical M1 lines are thick in this part of the chip because they provide power to the circuitry.

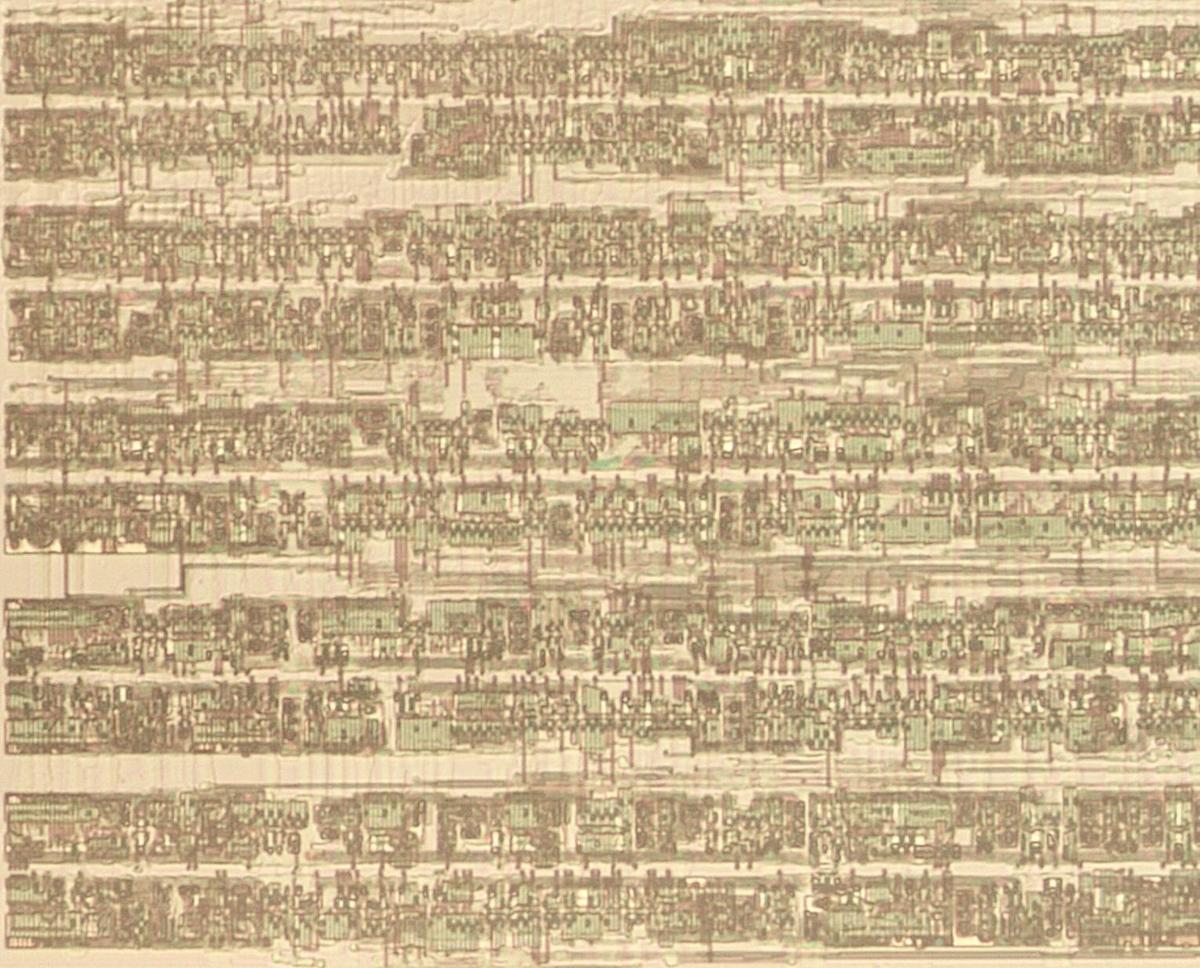

The silicon and polysilicon are mostly obscured in the above photo. By removing all the metal layers, I obtained the image below. This image shows the same region as the image above, but it is hard to see the correlation because the metal layers almost completely obscure the silicon. The orderly columns of transistors reveal the standard-cell design. The irregular dark regions are doped silicon, which forms the chip's transistors. The dark or shiny horizontal bands are polysilicon. I will explain below how these regions form gates and other circuits.

Inverter

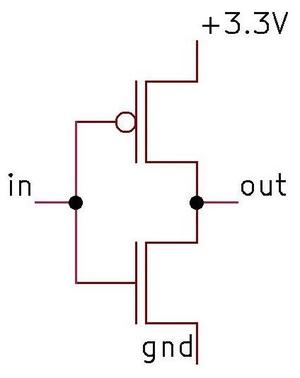

The fundamental CMOS gate is an inverter, shown in the schematic below. The inverter is built from one PMOS transistor (top) and one NMOS transistor (bottom). If the gate input is a "1", the bottom transistor turns on, pulling the output to ground (0). A "0" input turns on the top transistor, pulling the output high (1). Thus, this two-transistor circuit implements an inverter.10

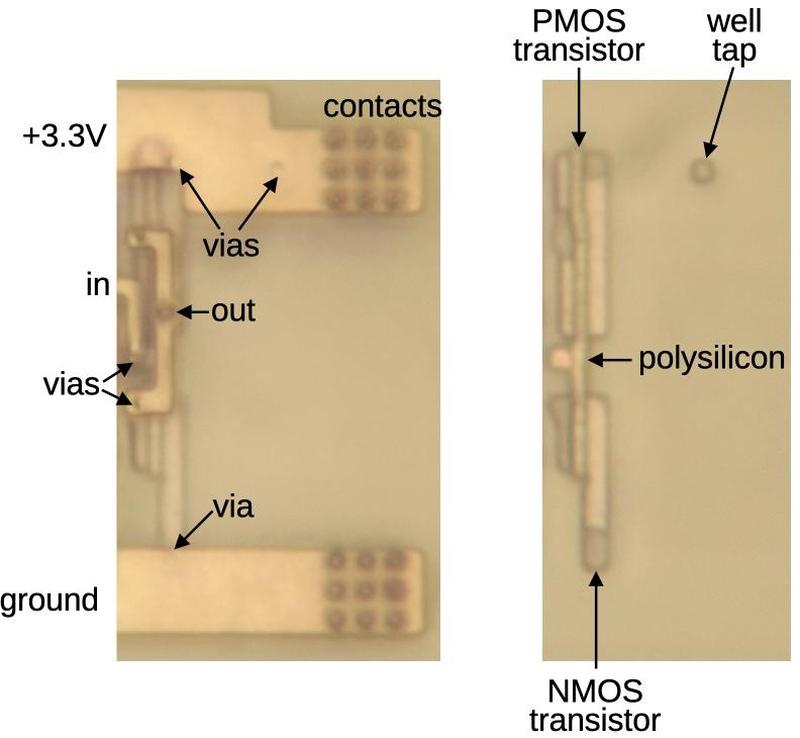

The diagram below shows two views of how a standard-cell inverter appears on the Pentium die, with and without metal. The inverter consists of two transistors, just like the schematic above. The input is connected to the two polysilicon gates of the transistors. The metal output wire is connected to the two transistors (the left sides, specifically).

In more detail, the image on the left includes the bottom (M1) metal layer, but I removed the other metal layers. Two thick metal lines at the top and bottom provide power and ground to the standard cells. The multiple dark circles are vias between the M1 metal layer and the metal layer on top (M2), providing a path for power and ground that eventually reaches the top (M4) metal layer and then the chip's pins. (The power and ground wires are thick to provide sufficient current to the circuitry while minimizing voltage drops and noise.) The small, lighter circles are contacts that connect the M1 metal layer to the underlying silicon or polysilicon. The input to the gate is provided from the M2 metal, which connects to the M1 layer at the indicated via. The smaller black dots at the top and bottom of this metal strip are contacts, connections to the underlying silicon.

For the image on the right, I removed all four metal layers, revealing the polysilicon and doped silicon. Recall that a transistor is constructed from regions of doped silicon with a stripe of polysilicon between the regions, forming the transistor's gate. The diagram shows the two transistors that form the inverter. When combined with the metal wiring, they form the inverter schematic shown earlier. The final feature is the "well tap". The PMOS transistors are constructed in a "well" of N-doped silicon. The well must be kept at a positive voltage, so periodic "taps" connect the well to the +3.3V supply. As mentioned earlier, the PMOS transistor is larger than the NMOS transistor, which allowed me to figure out the transistor types in the photo.

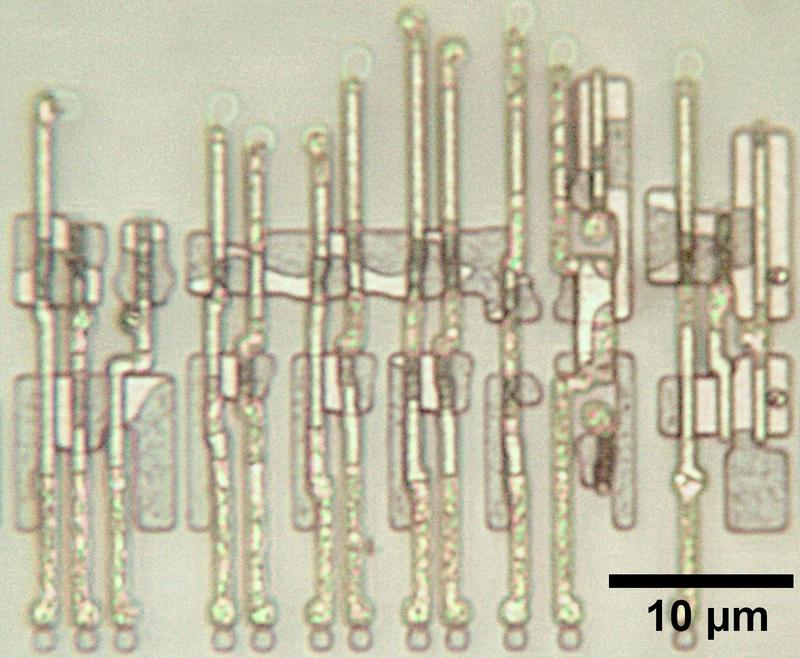

By the way, the chip is built with a 600 nm process, so the width of the polysilicon lines is approximately 600 nm. For comparison, the wavelength of visible light is 400 to 700 nm, with 600 nm corresponding to orange light. This explains why the microscope photos are somewhat fuzzy; the features are the size of the wavelength of light.6
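As a rough check on why the photos are fuzzy, the sketch below applies the standard Abbe diffraction limit, d = λ / (2·NA), where NA is the numerical aperture of the lens. The specific numbers are assumptions for illustration, not measurements of my setup: 550 nm for green light, 0.9 as a typical dry objective, and 1.25 as a typical oil-immersion objective.

```python
def abbe_limit_nm(wavelength_nm, numerical_aperture):
    """Smallest resolvable feature spacing under the Abbe diffraction limit."""
    return wavelength_nm / (2 * numerical_aperture)

FEATURE_NM = 600          # polysilicon line width from the text
WAVELENGTH_NM = 550       # assumed: green light, mid-visible
ASSUMED_LENSES = {"dry objective (NA 0.9)": 0.9, "oil immersion (NA 1.25)": 1.25}

for name, na in ASSUMED_LENSES.items():
    limit = abbe_limit_nm(WAVELENGTH_NM, na)
    print(f"{name}: limit {limit:.0f} nm, feature is {FEATURE_NM / limit:.1f}x the limit")
```

Under these assumptions the 600 nm lines are only two to three times the resolution limit, so they are visible but far from crisp.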

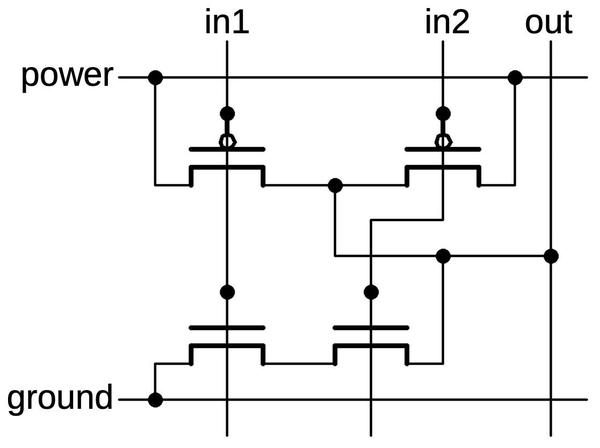

NAND gate

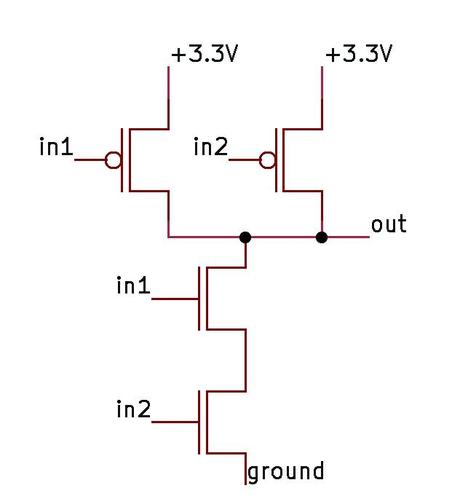

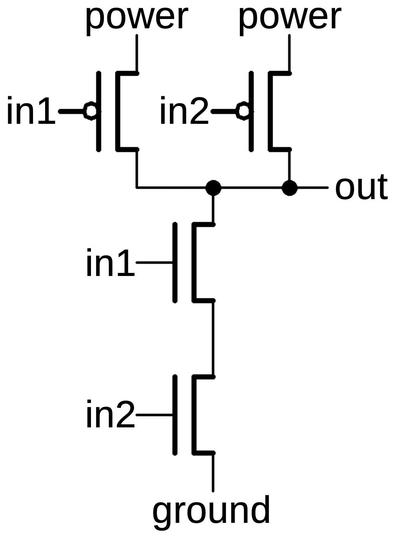

Another common gate in the Pentium is the NAND gate. The schematic below shows a NAND gate with two PMOS transistors above and two NMOS transistors below. If both inputs are high, the two NMOS transistors turn on, pulling the output low. If either input is low, a PMOS transistor turns on, pulling the output high. (Recall that NMOS and PMOS are opposites: a high voltage turns an NMOS transistor on while a low voltage turns a PMOS transistor on.) Thus, the CMOS circuit below produces the desired output for the NAND function.
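The NAND behavior described above can be modeled at the switch level. This is a simplified sketch that treats each transistor as an ideal on/off switch; the function name is just for illustration.

```python
def nand(a, b):
    """Switch-level model of the two-input CMOS NAND gate."""
    pull_down = (a == 1) and (b == 1)   # two NMOS transistors in series to ground
    pull_up = (a == 0) or (b == 0)      # two PMOS transistors in parallel to power
    # Exactly one network conducts, so the output is never floating or shorted.
    assert pull_up != pull_down
    return 1 if pull_up else 0

truth_table = {(a, b): nand(a, b) for a in (0, 1) for b in (0, 1)}
print(truth_table)  # {(0, 0): 1, (0, 1): 1, (1, 0): 1, (1, 1): 0}
```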

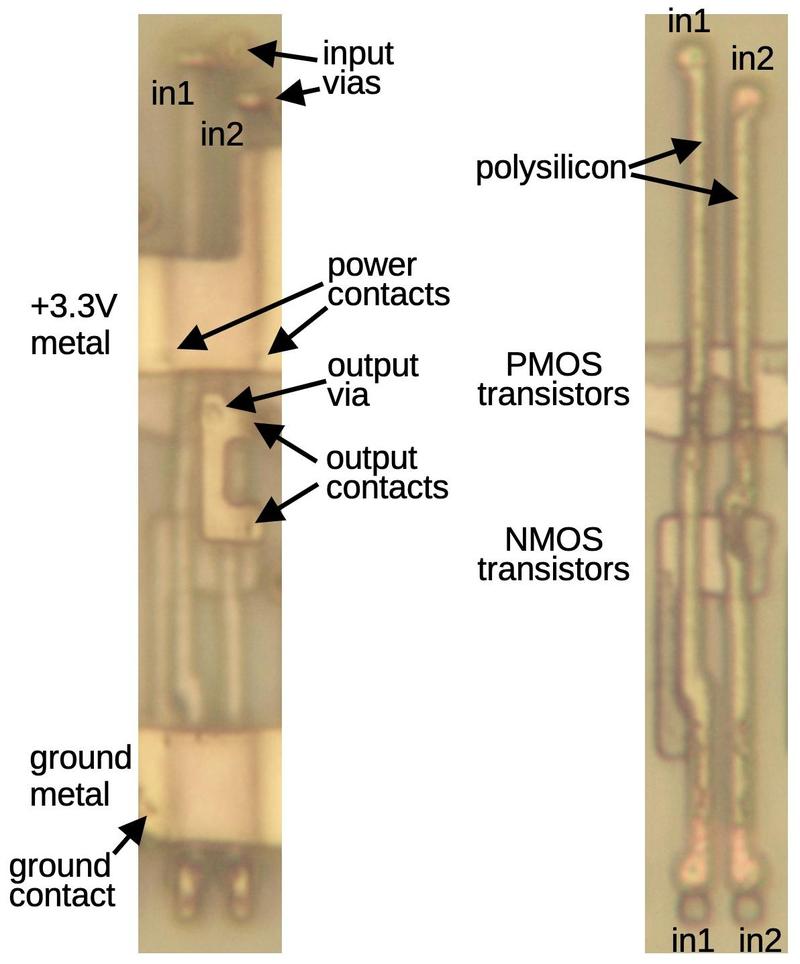

The implementation of the gate as a standard cell, below, follows the schematic. The left photo shows the circuit with one layer of metal (M1). A thick metal line provides 3.3 volts to the gate; it has two contacts that provide power to the two PMOS transistors. The metal line for ground is similar, except only one NMOS transistor is grounded. The thinner metal in the middle has two contacts to get the transistor outputs and a via to connect the output to the M2 metal layer on top. Finally, two tiny bits of M1 metal connect the inputs from the M2 layer to the underlying polysilicon.

The right photo shows the circuit with all metal removed, showing the polysilicon and silicon. Since a transistor is formed where a polysilicon line crosses doped silicon, the two polysilicon lines create four transistors. Polysilicon functions both as local wiring and as the transistor gates. In particular, the inputs can be connected at the top or bottom of the circuit (or both), depending on what works best for wiring the circuitry. Note that the transistors are squashed together so the silicon in the middle is part of two transistors. An important asymmetry is that the output is taken from the middle of the PMOS transistors, wiring them in parallel, while the output is taken from the right side of the NMOS transistors, wiring them in series.

Zooming out a bit, the photo below shows three NAND gates. Although the underlying standard cell is the same for each one, there are differences between the gates. At the top, horizontal wiring links the inputs to M2 through vias. The length of each polysilicon line depends on the position of the metal. Moreover, in the middle of each gate, the metal connection to the output is positioned differently. Finally, note that the power wiring shifts upward in the upper right corner; this is to make room for a larger cell to the right. The point is that the standard cells aren't simply copies of each other, but are adjusted in each case to put the inputs, outputs, and power in the right location. Also note that these standard cells are not isolated, but are squeezed together so the PMOS transistors are touching. This optimization slightly increases the density.

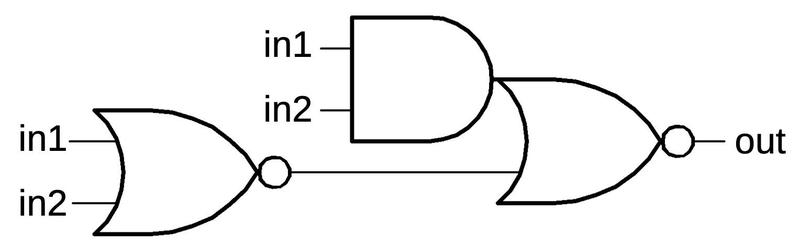

OR-NAND gate

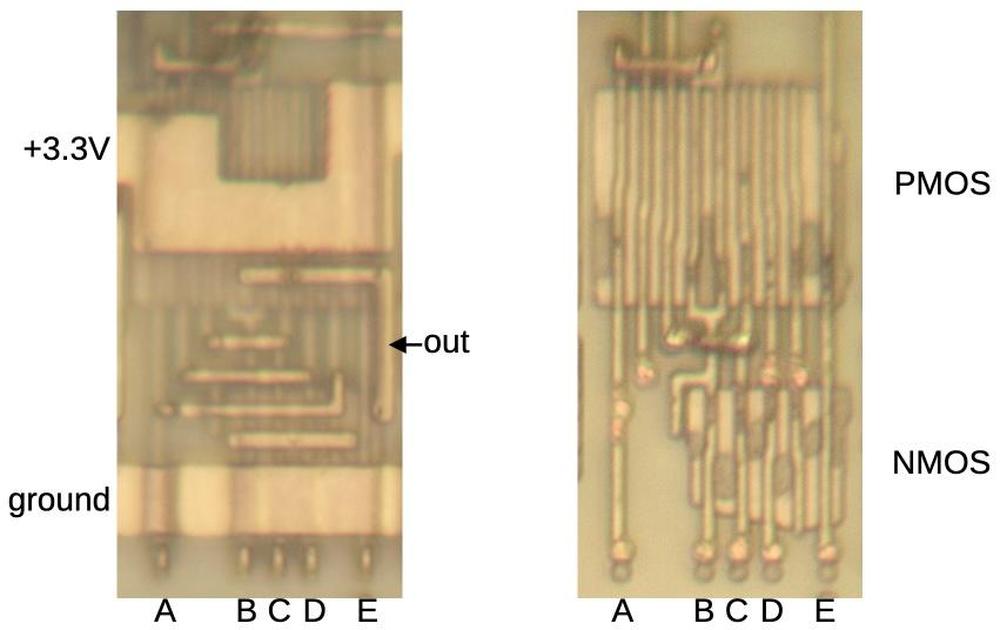

The standard cell library includes some complex gates. For instance, the gate below is a 5-input OR-NAND gate, computing ~((A+B+C+D)⋅E). In the NMOS circuit, transistors A through D are paralleled while E is in series. The PMOS circuit is the opposite, with A through D in series and E in parallel. To provide sufficient current, the PMOS circuit has two sets of transistors for A through D, so the PMOS block is much larger than the NMOS block.
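To check that the series and parallel arrangements really are complementary, the sketch below models both transistor networks as ideal switches and tries all 32 input combinations. It ignores the doubled PMOS transistors, which affect drive strength but not logic.

```python
from itertools import product

def or_nand(a, b, c, d, e):
    """Switch-level model of the 5-input OR-NAND gate, ~((A+B+C+D).E)."""
    # NMOS network: A through D in parallel, that group in series with E.
    pull_down = bool((a or b or c or d) and e)
    # PMOS network: A through D in series, that chain in parallel with E.
    pull_up = bool((not a and not b and not c and not d) or not e)
    # The networks are duals: exactly one conducts for any input.
    assert pull_up != pull_down
    return 1 if pull_up else 0

for bits in product((0, 1), repeat=5):
    a, b, c, d, e = bits
    expected = 0 if ((a or b or c or d) and e) else 1
    assert or_nand(*bits) == expected

print("all 32 input combinations match ~((A+B+C+D).E)")
```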

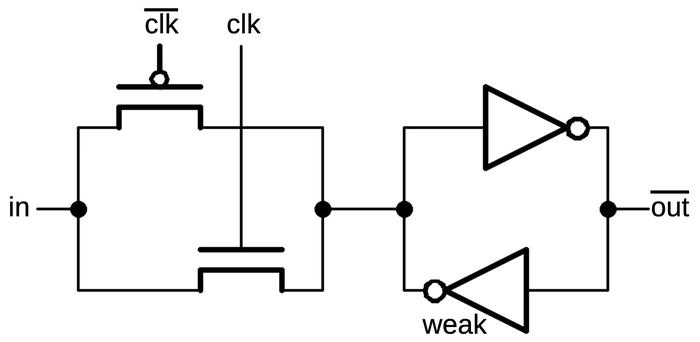

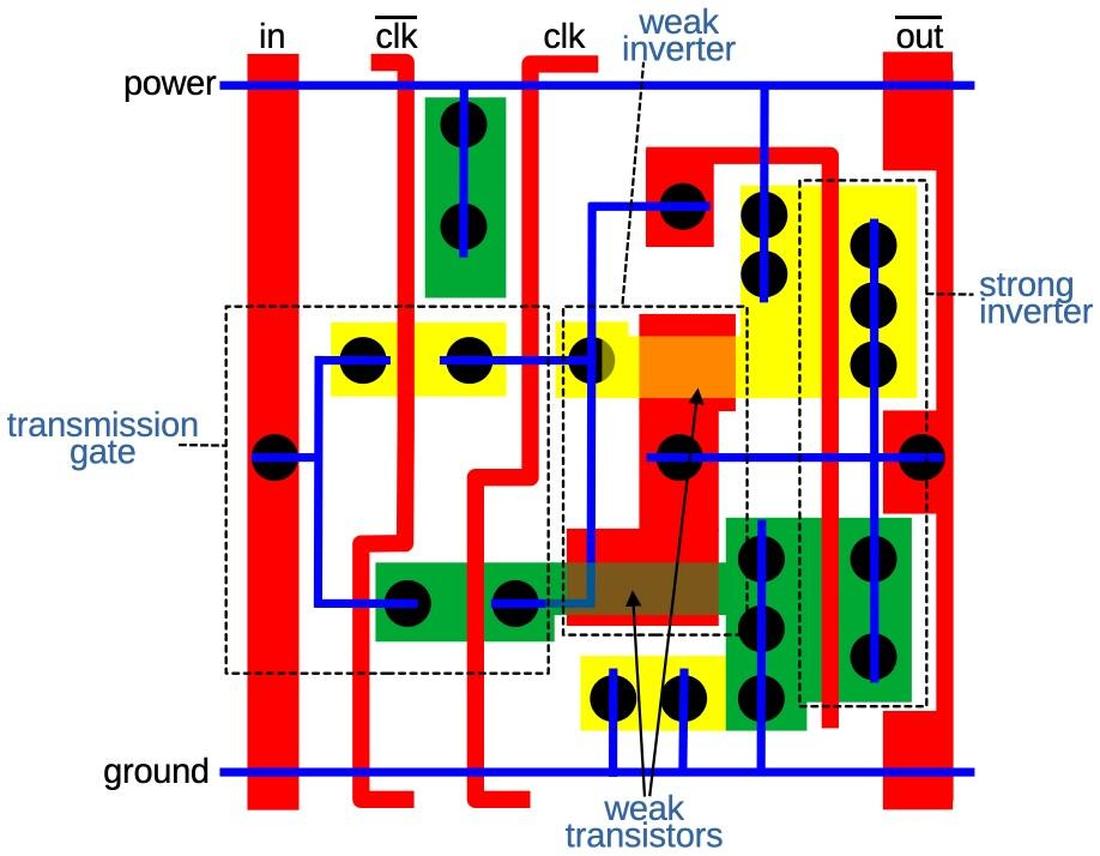

Latch

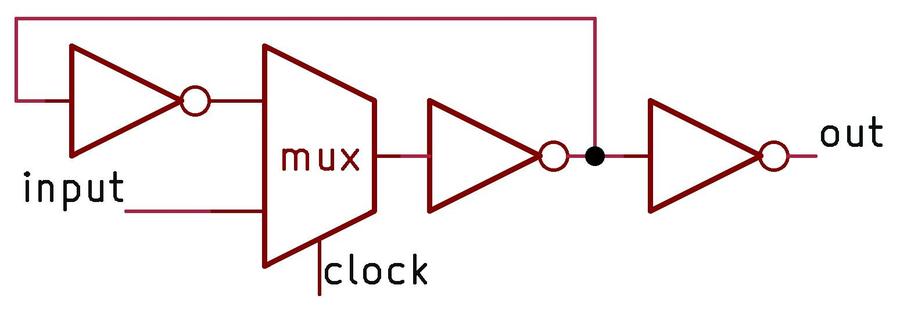

One of the key building blocks of the Pentium's circuitry is the latch. The idea of the latch is to hold one bit, controlled by the clock signal. A latch is "transparent": the latch's input immediately appears on the output while the clock is high. But when the clock is low, the latch holds its previous value. The latch is implemented with a feedback loop that passes the latch's output back into the latch. The heart of this latch circuit is the multiplexer (mux), which selects either the previous output (when the clock is low) or the new input (when the clock is high). The inverters amplify the feedback signal so it doesn't decay in the loop. An inverter also amplifies the output so it can drive other circuitry.
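In behavioral terms, the latch is just a multiplexer whose output is fed back to one of its inputs. The sketch below is a logic-level model only; it ignores the inverters, which restore signal strength but cancel out logically, and the class name is hypothetical.

```python
class Latch:
    """Transparent latch: follows the input while the clock is high, holds when low."""

    def __init__(self, value=0):
        self.q = value

    def update(self, d, clk):
        # The mux picks the new input when the clock is high,
        # otherwise it recirculates the previous output.
        self.q = d if clk == 1 else self.q
        return self.q

latch = Latch()
print(latch.update(d=1, clk=1))  # 1: transparent, input passes through
print(latch.update(d=0, clk=0))  # 1: clock low, previous value is held
print(latch.update(d=0, clk=1))  # 0: transparent again
```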

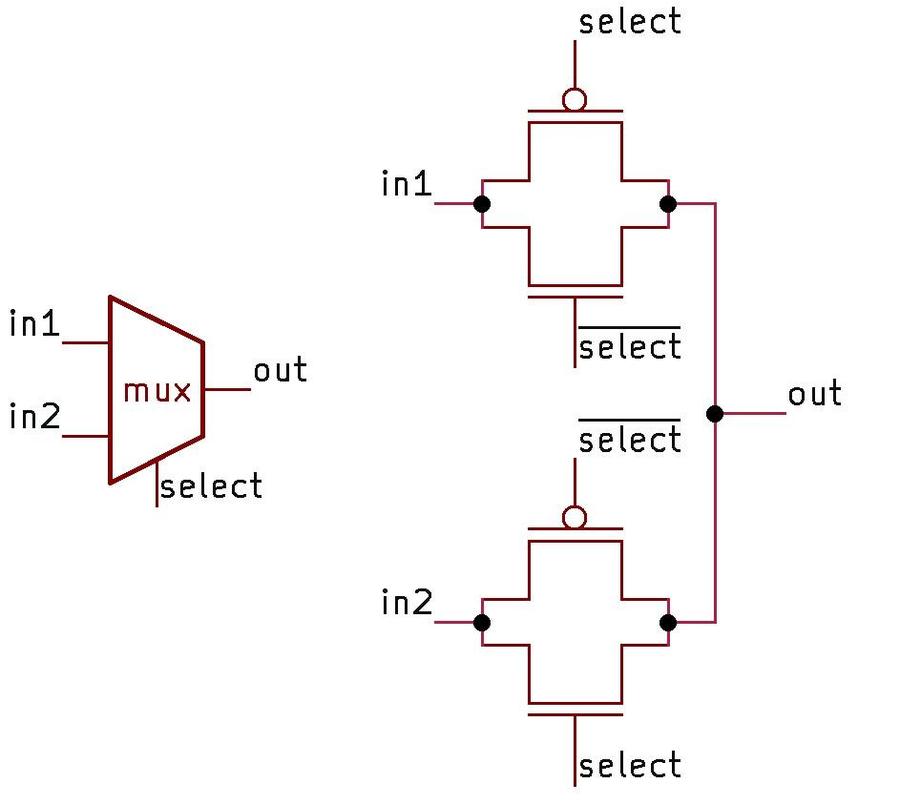

The circuit for a multiplexer is interesting since it uses "pass transistors". That is, the transistors simply pass their input through to the output, rather than pulling a signal to power or ground as in a typical logic gate. The schematic shows how this works. First, suppose that the select line is low. This will turn on the two transistors connected to the first input, allowing its level to flow to the output. Meanwhile, both transistors connected to the second input will be turned off, blocking that signal. But if the select line is high, everything switches. Now, the two transistors connected to the second input turn on, passing its level to the output. Thus, the multiplexer selects the first input if the control signal is low, and the second input if the control signal is high.
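The pass-transistor behavior can be sketched by modeling each NMOS/PMOS pair as a switch that either passes its input or leaves the output disconnected. This is an idealized model with hypothetical names; `None` stands in for a disconnected (high-impedance) path.

```python
def pass_pair(value, n_gate, p_gate):
    """An NMOS/PMOS pair passes `value` when the NMOS gate is high and the PMOS gate is low."""
    return value if (n_gate == 1 and p_gate == 0) else None

def mux(in0, in1, select):
    """Two pass-transistor pairs driven by select and its complement."""
    not_select = 1 - select              # supplied by an inverter in the real circuit
    out0 = pass_pair(in0, n_gate=not_select, p_gate=select)
    out1 = pass_pair(in1, n_gate=select, p_gate=not_select)
    # Exactly one pair conducts, so exactly one of out0/out1 is not None.
    return out0 if out0 is not None else out1

print(mux(in0=1, in1=0, select=0))  # 1: select low passes the first input
print(mux(in0=1, in1=0, select=1))  # 0: select high passes the second input
```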

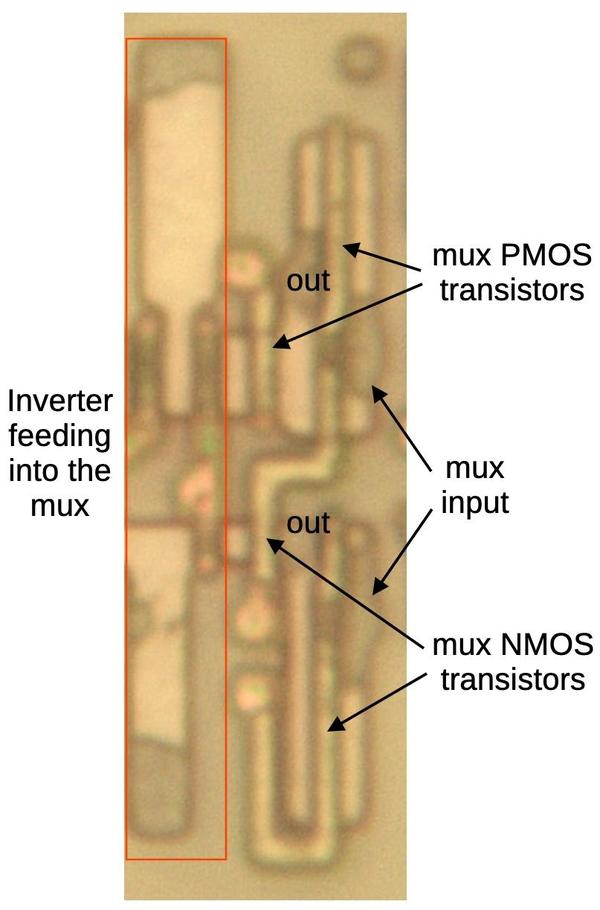

The diagram below shows a multiplexer, part of a latch. On the left, an inverter feeds into one input of the multiplexer.7 On the right is the other input to the multiplexer. The output is taken from the middle, between the pairs of the transistors.

Note that the multiplexer's circuit is opposite, in a way, to a logic gate. In a logic gate, you want either the NMOS transistor on or the PMOS transistor on, so the output is pulled low or high respectively. This is accomplished by giving the signals on the transistor gates the same polarity, so the same polysilicon line runs through both transistors. In a multiplexer, however, you want the corresponding PMOS and NMOS transistors to turn on at the same time, so they can pass the signal. This requires the signals on the transistor gates to have opposite polarity. One polysilicon line runs through the right PMOS transistor and the left NMOS transistor. The other polysilicon line runs through the left PMOS transistor and the right NMOS transistor, connected by metal wiring (not shown). The multiplexer includes an inverter to provide the necessary signal, but I cropped it out of the diagram below.

The flip-flop

The Pentium makes extensive use of flip-flops. A flip-flop is similar to a latch, except its clock input is edge-sensitive instead of level-sensitive. That is, the flip-flop "remembers" its input at the moment the clock goes from low to high, and provides that value as its output. This difference may seem unimportant, but it turns out to make the flip-flop more useful in counters, state machines, and other clocked circuits.

In the Pentium, a flip-flop is constructed from two latches: a primary latch and a secondary latch. The primary latch passes its value through while the clock is low and holds its value when the clock is high. The output of the primary latch is fed into the secondary latch, which has the opposite clock behavior. The result is that when the clock switches from low to high, the primary latch stops updating its output at the same time that the secondary starts passing this value through, providing the desired flip-flop behavior.
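The two-latch arrangement can be simulated at the logic level to see the edge-triggered behavior emerge. This is a behavioral sketch with hypothetical names, not a model of the actual transistor circuit.

```python
def latch(stored, d, enable):
    """Transparent latch: pass d through when enabled, otherwise hold."""
    return d if enable else stored

class FlipFlop:
    """Flip-flop built from a primary latch and a secondary latch."""

    def __init__(self):
        self.primary = 0
        self.secondary = 0

    def step(self, d, clk):
        # Primary is transparent while the clock is low and holds while it is high.
        self.primary = latch(self.primary, d, enable=(clk == 0))
        # Secondary has the opposite clock behavior.
        self.secondary = latch(self.secondary, self.primary, enable=(clk == 1))
        return self.secondary

ff = FlipFlop()
# (d, clk) pairs: the input drops to 0 while the clock is still high.
inputs = [(1, 0), (1, 1), (0, 1), (0, 0), (0, 1)]
outputs = [ff.step(d, clk) for d, clk in inputs]
print(outputs)  # [0, 1, 1, 1, 0]
```

The output changes only at the two low-to-high clock transitions; the input change in the third step, while the clock is high, has no effect until the next rising edge.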

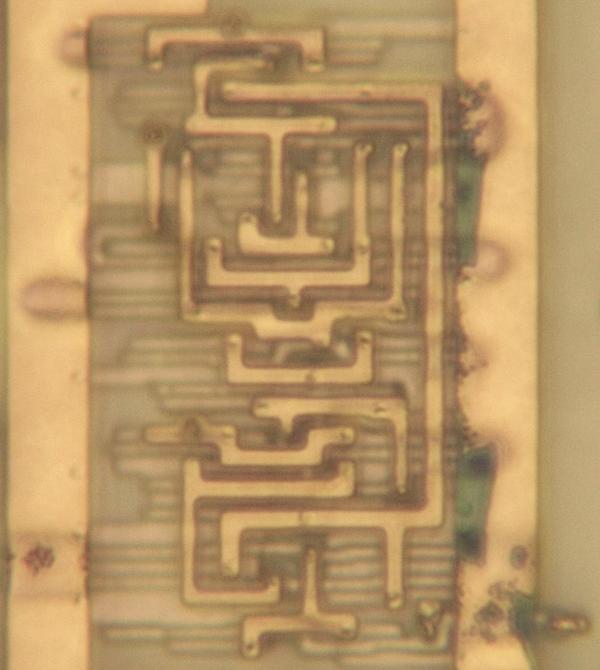

The photo above shows a standard-cell flip-flop, with an intricate pattern of metal wiring connecting the various sub-components. There are a few variants; with minor logic changes, the flip-flop can have "set" or "reset" inputs, bypassing the clock to force the output to the desired state. (Set and reset functions are useful for initializing flip-flops to a desired value, for example when the processor starts up.)

The BiCMOS buffer

Although I've been discussing CMOS circuits so far, the Pentium was built with BiCMOS, a process that allows circuits to use bipolar transistors in addition to CMOS. By adding a few extra processing steps to the regular CMOS manufacturing process, bipolar (NPN and PNP) transistors can be created. The Pentium made extensive use of BiCMOS circuits since they reduced signal delays by up to 35%. Intel also used BiCMOS for the Pentium Pro, Pentium II, Pentium III, and Xeon processors (but not the Pentium MMX). However, as chip voltages dropped, the benefit from bipolar transistors dropped too and BiCMOS was eventually abandoned.

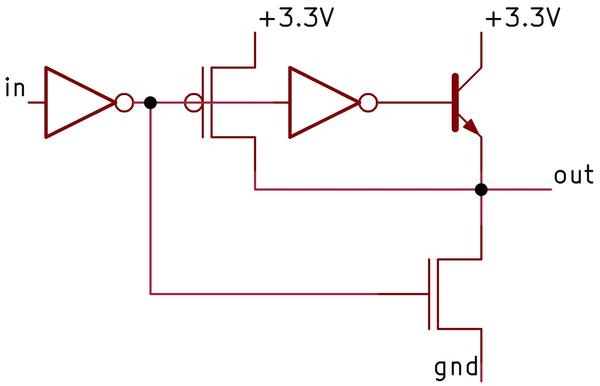

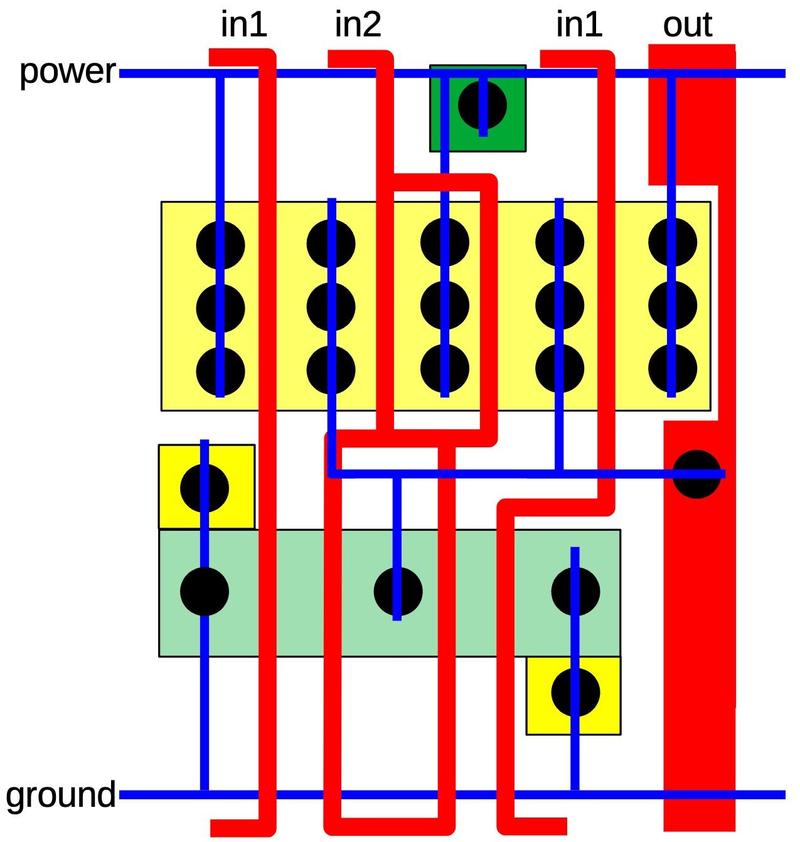

The schematic below shows a standard-cell BiCMOS buffer in the Pentium chip.8 This circuit is more complex than a CMOS buffer: it uses two inverters, an NPN pull-up transistor, an NMOS pull-down transistor, and a PMOS pull-up transistor.9

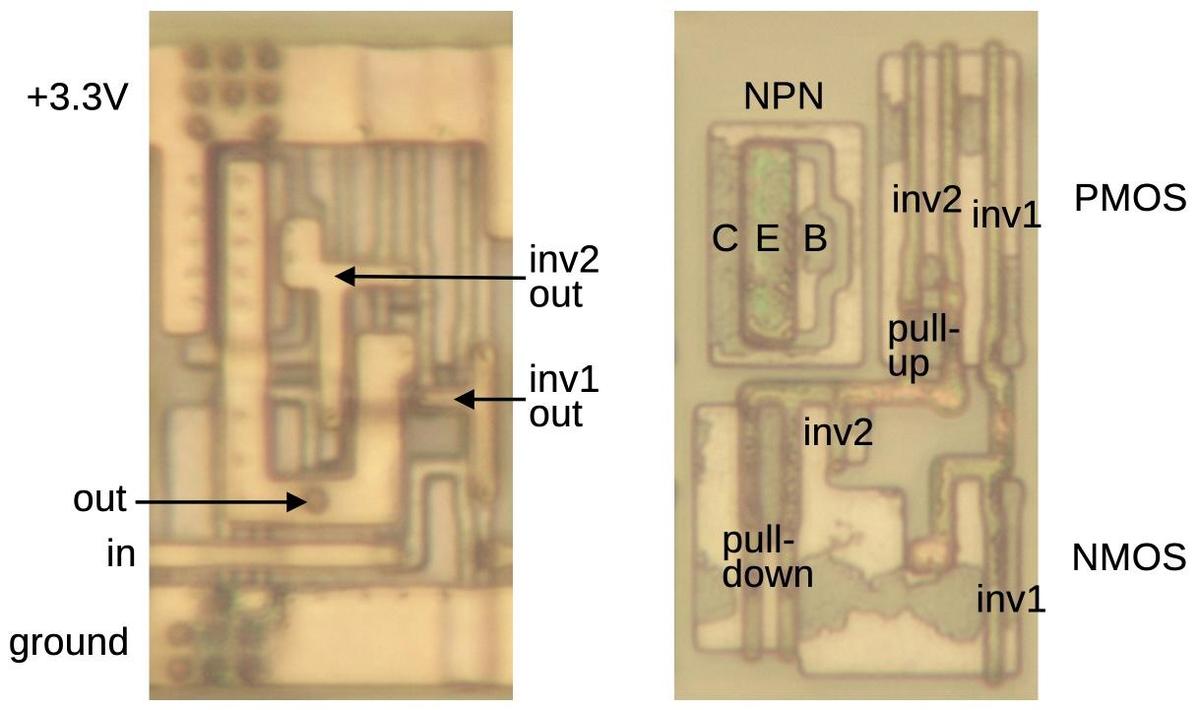

In the die images below, note the circular structure of the NPN transistor, very different from the linear structure of the NMOS and PMOS transistors and considerably larger. A sign of the buffer's high-current drive capacity is the output's thick metal wiring, much thicker than the typical signal wiring.

Conclusions

Standard-cell layout is extensively used in modern chips. Modern processors, with their nanometer-scale transistors, are much too small to study under a microscope. The Pentium, on the other hand, has features large enough that its circuits can be observed and reverse engineered. Of course, with 3.3 million transistors, the Pentium is too much for me to reverse engineer in depth, but I still find it interesting to study small-scale circuits and see how they were implemented. This post presented a small sample of the standard cells in the Pentium. The full standard-cell library is much larger, with dozens, if not hundreds, of different cells: many types of logic gates in a variety of sizes and drive strengths. But the fundamental design and layout principles are the same as the cells described here.

One unusual feature of the Pentium is its use of BiCMOS circuitry, which had a peak of popularity in the 1990s, right around the era of the Pentium. Although changing tradeoffs made BiCMOS impractical for digital circuitry, BiCMOS still has an important role in analog ICs, especially high-frequency applications. The Pentium in a sense is a time capsule with its use of BiCMOS.

I hope that you have enjoyed this look at some of the Pentium's circuits. I find it reassuring to see that even complex processors are made up of simple transistor circuits and you can observe and understand these circuits if you look closely.

For more on standard-cell circuits, I wrote about standard cells in an IBM chip and standard cells in the 386 (the 386 article has a lot of overlap with this one). Follow me on Twitter @kenshirriff or RSS for updates. I'm also on Mastodon occasionally as @[email protected].

Notes and references

-

In this blog post, I'm focusing on the "P54C" version of the original Pentium processor. Intel produced many different versions of the Pentium, and it can be hard to keep them straight. Part of the problem is that "Pentium" is a brand name, with multiple microarchitectures, lines, and products. At the high level, the Pentium (1993) was followed by the Pentium Pro (1995), Pentium II (1997), Pentium III (1999), Pentium 4 (2000), and so on. The original Pentium used the P5 microarchitecture, a superscalar microarchitecture that was advanced but still executed instructions in order like traditional microprocessors. The Pentium Pro was a major jump, implementing a microarchitecture called P6 that broke instructions into micro-operations and executed them out of order using dataflow techniques. The next microarchitecture version was NetBurst, first used with the Pentium 4. NetBurst provided a deep pipeline and introduced hyper-threading, but it was disappointingly slow and was replaced by the Core microarchitecture. The Core microarchitecture is based on the P6 and is Intel's current microarchitecture.

I'll focus now on the original Pentium, which went through several substantial revisions. The first Pentium product was the 80501 (codenamed P5), running at 60 or 66 MHz and using 5 volts. These chips were built with an 800 nm process and contained 3.1 million transistors.

The power consumption of these chips was disappointing, so Intel improved the chip, producing the 80502. These chips, codenamed P54C, used 3.3 volts and ran at 75-120 MHz. The chip's architecture remained essentially the same but support was added for multiprocessing, boosting the transistor count to 3.3 million. The P54C had a much more advanced clock circuit, allowing the external bus speed to stay low (50-66 MHz) while the internal clock speed—and thus performance—climbed to 100 MHz. The chips were built with a smaller 600 nm process with four layers of metal, compared to the previous three. Visually, the die of the P54C is almost the same as the P5, with the additional multiprocessing logic at the bottom and the clock circuitry at the top. For this article, I examined the P54C, but the standard cells should be similar in other versions.

Next, Intel moved to the 350 nm process, producing a smaller, faster Pentium chip, codenamed the P54CS; the die looks almost identical to the P54C (but smaller), with subtle changes to the bond pads. Another variant was designed for mobile use: the Pentium processor with "Voltage Reduction Technology" reduced power consumption by using a 2.9- or 3.1-volt supply for the core and a 3.3-volt supply to drive the I/O pins. These were built first with the 600 nm process (75-100 MHz) and then the 350 nm process (100-150 MHz).

The biggest change to the original Pentium was the Pentium MMX, with part number 80503 and codename P55C. This chip extended the x86 instruction set with 57 new instructions for vector processing. It was built on a 350 nm process before moving to 280 nm, and had 4.5 million transistors. More obscure variants of the original Pentium include the P54CQS, P54CS, P54LM, P24T, and Tillamook, but I won't get into them. ↩

-

Circuits that had a high degree of regularity, such as the arithmetic/logic unit (ALU) or register storage were typically constructed by manually laying out a block to implement the circuitry for one bit and then repeating the block as needed. Because a circuit was repeated 32 times for the 32-bit processor, the additional effort was worthwhile. ↩

-

An alternative layout technique is the gate array, which doesn't provide as much flexibility as a standard cell approach. In a gate array (sometimes called a master slice), the chip had a fixed array of transistors (and often resistors). The chip could be customized for a particular application by designing the metal layer to connect the transistors as needed. The density of the chip was usually poor, but gate arrays were much faster to design, so they were advantageous for applications that didn't need high density or produced a relatively small volume of chips. Moreover, manufacturing was much faster because the silicon wafers could be constructed in advance with the transistor array and warehoused. Putting the metal layer on top for a particular application could then be quick. Similar gate arrays used a fixed arrangement of logic gates or flip-flops, rather than transistors. Gate arrays date back to 1967. ↩

-

The behavior of MOS transistors is complicated, so the description above is simplified, just enough to understand digital circuits. In particular, MOS transistors don't simply switch between "on" and "off" but have states in between. This allows MOS transistors to be used in a wide variety of analog circuits. ↩

-

The earliest Pentiums had three layers of metal wiring, but Intel moved to a four-layer process with the P54C die, the version that I'm examining. ↩

-

To get this level of magnification with my microscope, I had to use an oil immersion lens. Instead of looking at the chip in air, as with a normal lens, I had to put a drop of special microscope oil on the chip. I then carefully lowered the lens until it dipped into the oil (making sure I didn't crash the lens into the chip). The purpose of the oil is that its index of refraction is almost the same as glass, much higher than air. This gives the lens a higher "numerical aperture", allowing the lens to resolve smaller details. ↩

-

For completeness, I'll mention that the inverter feeding the multiplexer isn't exactly an inverter. Specifically, the inverter's two transistors are not tied together to produce an output. Instead, the inverter's NMOS transistor provides an input to the multiplexer's NMOS transistor and likewise, the PMOS transistor provides an input to the PMOS transistor. The omission of this connection does not affect the circuit's behavior, but it makes calling the circuit an inverter and a multiplexer a bit of an abstraction. ↩

-

Intel called this gate "BiNMOS" rather than "BiCMOS" because it uses a bipolar transistor and an NMOS transistor to drive the output, rather than two bipolar transistors. The Pentium's BiCMOS circuitry is described in a conference paper, showing a second NPN transistor to protect the first one. I don't see the second transistor on the die so the two transistors may be implemented in one silicon structure. Reference: R. F. Krick et al., “A 150 MHz 0.6 µm BiCMOS superscalar microprocessor,” IEEE Journal of Solid-State Circuits, vol. 29, no. 12, Dec. 1994, doi:10.1109/4.340418. ↩

-

The Pentium contains multiple types of BiCMOS standard cells, which I'll show in this footnote. The cell below is an inverter. It is similar to the BiCMOS buffer described earlier, except it lacks the first inverter in the circuit. To make room for the NPN transistor on the left, the PMOS transistors are shifted to the right. As a result, they don't line up with the PMOS transistors in other cells. This is a break from the traditional orderliness of standard cells.

A BiCMOS inverter with PMOS on the left and NMOS on the right. The input is at the bottom and the output is in the middle.

The BiCMOS inverter below is similar, except it uses two NPN transistors, providing more output drive. I removed the M1 metal layer to provide a better view of the transistors.

A BiCMOS inverter with two NPN transistors. The PMOS transistors are in the lower left and the NMOS transistors are in the lower right.

Another interesting BiCMOS circuit is the D flip-flop with enable and BiCMOS output, shown below. This is similar to the earlier flip-flop except it has an enable input, allowing it to either load a new value triggered by the clock, or to hold its earlier value. This allows the flip-flop to remember a value for more than one clock cycle. The additional functionality is implemented by another multiplexer, selecting either the old value or the new value. (This multiplexer is, in a way, one level higher than the multiplexer in each latch.) The transistor for the BiCMOS output is in the upper right, poking out from under the metal. (This circuit might be implemented as two independent cells, one for the flip-flop and one for the driver; I'm not sure.)

A D flip-flop in the Pentium.

-

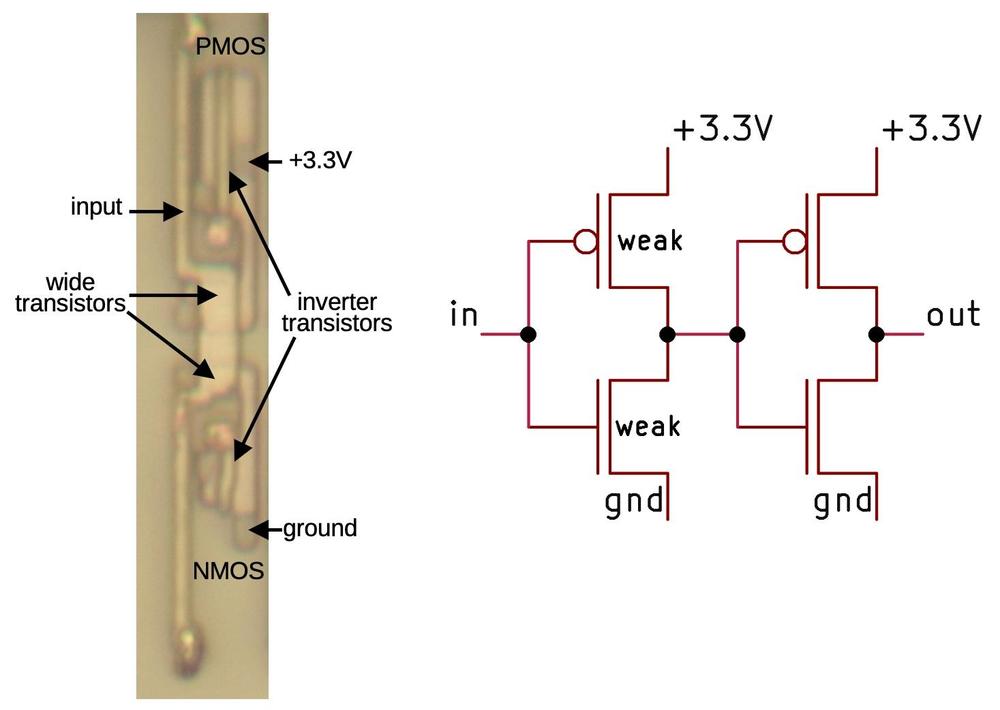

One puzzling inverter variant is used in a gate I'll call the "slow buffer". This buffer consists of two inverters, so it passes its input through to the output, buffered. The strange part is that the first inverter uses transistors with unusually long gates (that is, wide polysilicon stripes), which makes these transistors much weaker than regular transistors. As a result, the first inverter will be slow to switch states. My guess is that this circuit is used to delay signals, for example, to keep a signal aligned with another signal that is delayed by multiple logic gates.

The buffer consists of two inverters. The first inverter uses wide, weak transistors.

You might expect that larger transistors would be stronger, not weaker. The problem is that these transistors are larger in the wrong dimension. If you make the gate wider, the effect is similar to multiple transistors in parallel, providing more current. But if you make the gate longer (as in this case), the effect is similar to multiple transistors in series, so the resistances add and the total current is reduced. In most cases, transistors are constructed with the smallest gate length possible, which is determined by the manufacturing process, so the transistors here are unusual. This chip was manufactured with a 600 nm process, so the smallest gate length is approximately 600 nm. The gate width (the normal direction for variation) varies dramatically depending on the circuit, optimized to provide maximum performance. ↩
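The width and length effect can be illustrated with the textbook square-law model, in which a MOS transistor's saturation current is proportional to W/L. All the numbers below are assumptions chosen for illustration (the process constant, threshold voltage, and transistor dimensions); I have not measured these transistors.

```python
def saturation_current(width_nm, length_nm, k_prime=100e-6, v_gs=3.3, v_th=0.7):
    """Square-law estimate: I = 1/2 * k' * (W/L) * (Vgs - Vth)^2, in amps.

    k_prime (A/V^2) and v_th (V) are assumed values, not Pentium process data.
    """
    return 0.5 * k_prime * (width_nm / length_nm) * (v_gs - v_th) ** 2

# Hypothetical dimensions: same width, but one gate is five times longer.
normal = saturation_current(width_nm=3000, length_nm=600)
long_gate = saturation_current(width_nm=3000, length_nm=3000)

print(f"minimum-length gate: {normal * 1e3:.2f} mA")
print(f"long gate:           {long_gate * 1e3:.2f} mA")
print(f"ratio: {normal / long_gate:.1f}x")
```

In this simplified model, lengthening the gate by a factor of five cuts the drive current by the same factor, which is the kind of weakening that would slow the first inverter.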