The Intel 8086 processor (1978) has a complicated instruction set with instructions ranging from one to six bytes long. This raises the question of how the processor knows the length of an instruction.1 The answer is that the 8086 uses an interesting combination of lookup ROMs and microcode to determine how many bytes to use for an instruction. In brief, the ROMs perform enough decoding to figure out if it needs one byte or two. After that, the microcode simply consumes instruction bytes as it needs them. Thus, nothing in the chip explicitly "knows" the length of an instruction. This blog post describes this process in more detail.

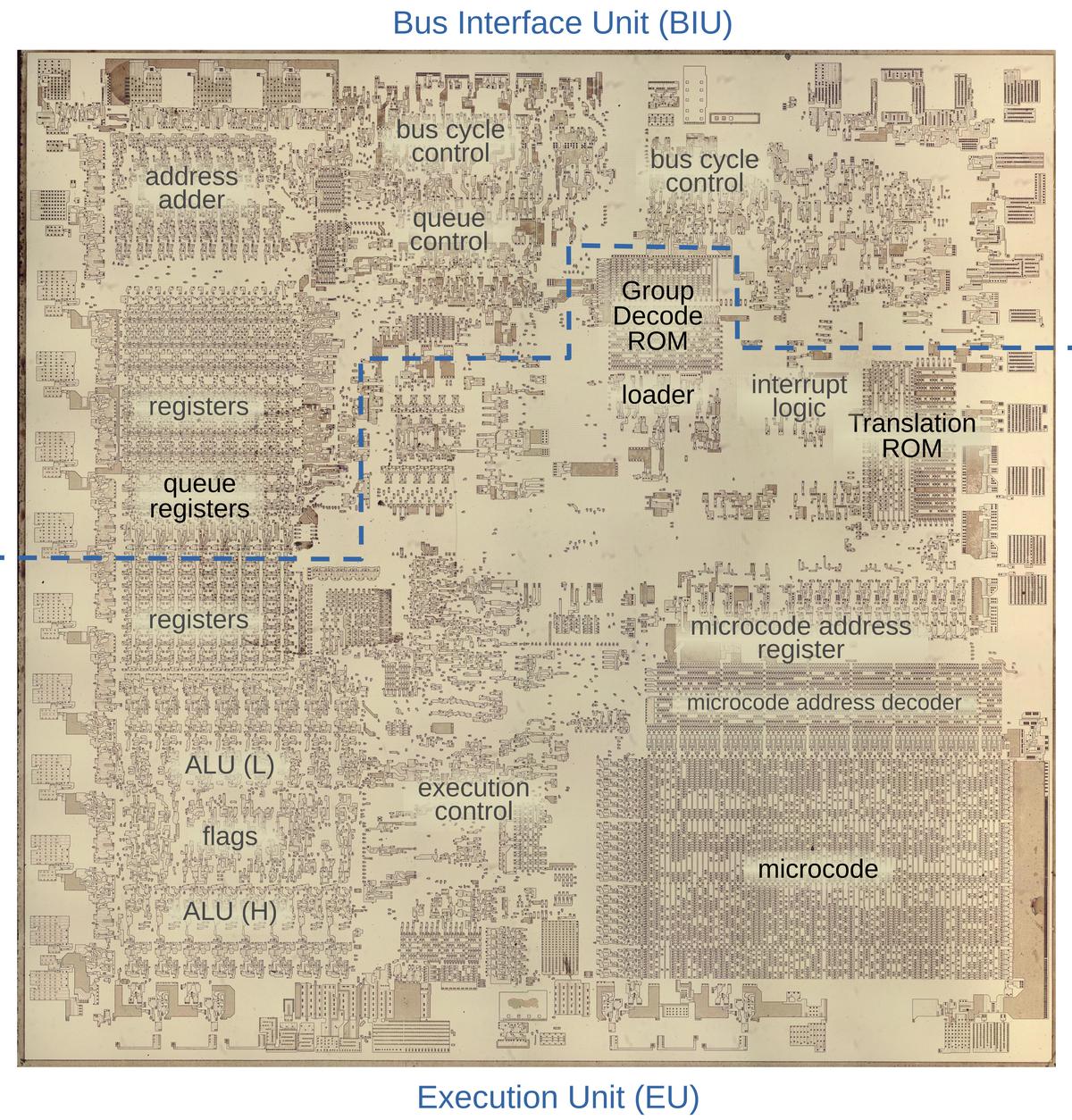

The die photo below shows the chip under a microscope. I've labeled the key functional blocks; the ones that are important to this post are darker. Architecturally, the chip is partitioned into a Bus Interface Unit (BIU) at the top and an Execution Unit (EU) below. The BIU handles bus and memory activity as well as instruction prefetching, while the Execution Unit (EU) executes the instructions.

The prefetch queue, the loader, and the microcode

The 8086 uses a 6-byte instruction prefetch queue to hold instructions, and this queue will play an important role in this discussion.3 Earlier microprocessors read instructions from memory as they were needed, which could cause the CPU to wait on memory. The 8086, instead, reads instructions from memory before they are needed, storing them in the instruction prefetch queue. (You can think of this as a primitive instruction cache.) To execute an instruction, the 8086 takes bytes out of the queue one at a time. If the queue runs empty, the processor waits until more instruction bytes are fetched from memory into the queue.
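As a rough illustration of this behavior, the queue can be modeled as a small FIFO. This is only a sketch: the class and method names are made up for this example, memory is a plain `bytes` object, and it ignores bus timing and bus width entirely (it moves one byte per `prefetch` call).

```python
from collections import deque


class PrefetchQueue:
    """Toy model of a 6-byte instruction prefetch queue (names are hypothetical)."""

    CAPACITY = 6  # the 8086's queue holds six bytes

    def __init__(self, memory, start=0):
        self.memory = memory      # bytes-like object standing in for RAM
        self.fetch_addr = start   # next address the bus side will prefetch
        self.queue = deque()
        self.stalls = 0           # how often execution found the queue empty

    def prefetch(self):
        """Bus side: fetch one byte ahead if there is room. Returns True on success."""
        if len(self.queue) >= self.CAPACITY or self.fetch_addr >= len(self.memory):
            return False
        self.queue.append(self.memory[self.fetch_addr])
        self.fetch_addr += 1
        return True

    def next_byte(self):
        """Execution side: take one byte, waiting on memory if the queue is empty."""
        if not self.queue:
            self.stalls += 1
            if not self.prefetch():
                raise EOFError("ran off the end of the toy memory")
        return self.queue.popleft()
```

Calling `prefetch()` repeatedly fills the queue to six bytes and then does nothing, while `next_byte()` on an empty queue counts a stall before returning the byte.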

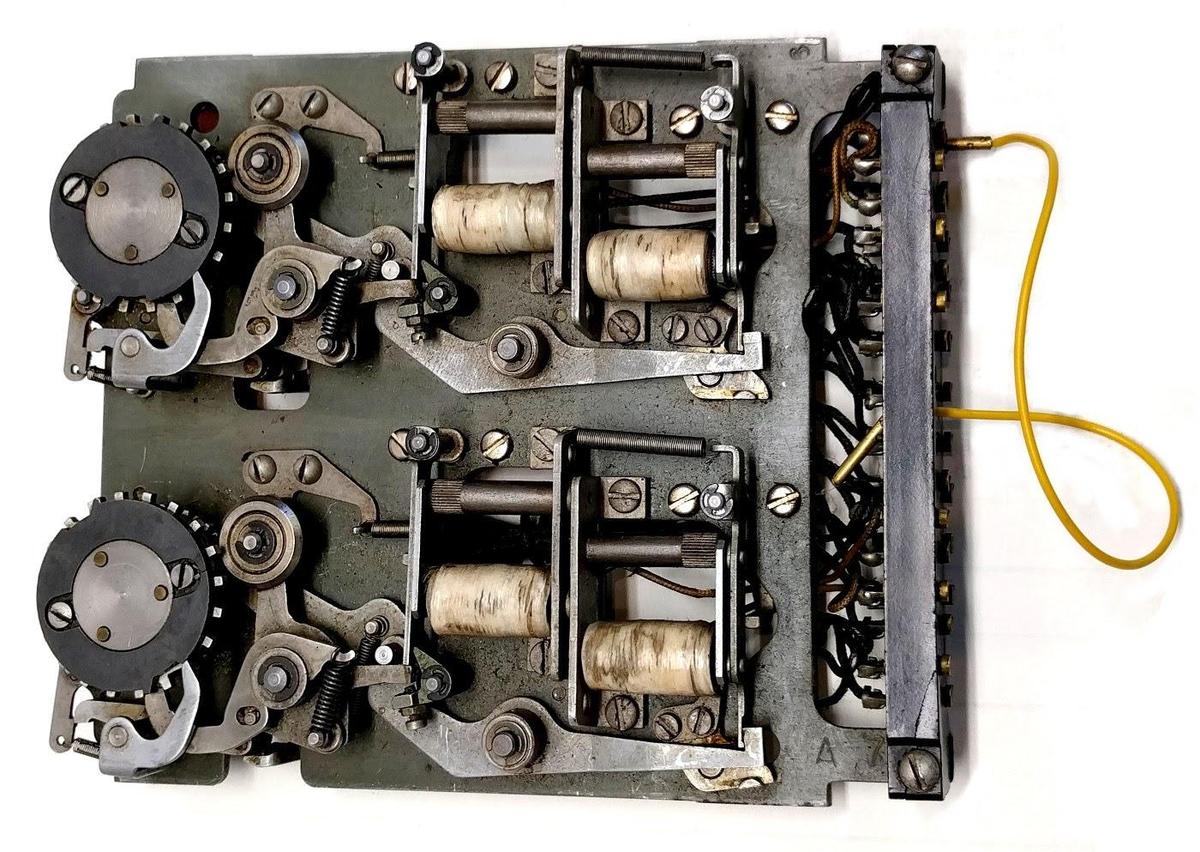

A circuit called the loader handles the interaction between the prefetch queue and instruction execution. The loader is a small state machine that provides control signals to the rest of the execution circuitry. The loader gets the first byte of an instruction from the prefetch queue and issues a signal FC (First Clock) that starts execution of the instruction.

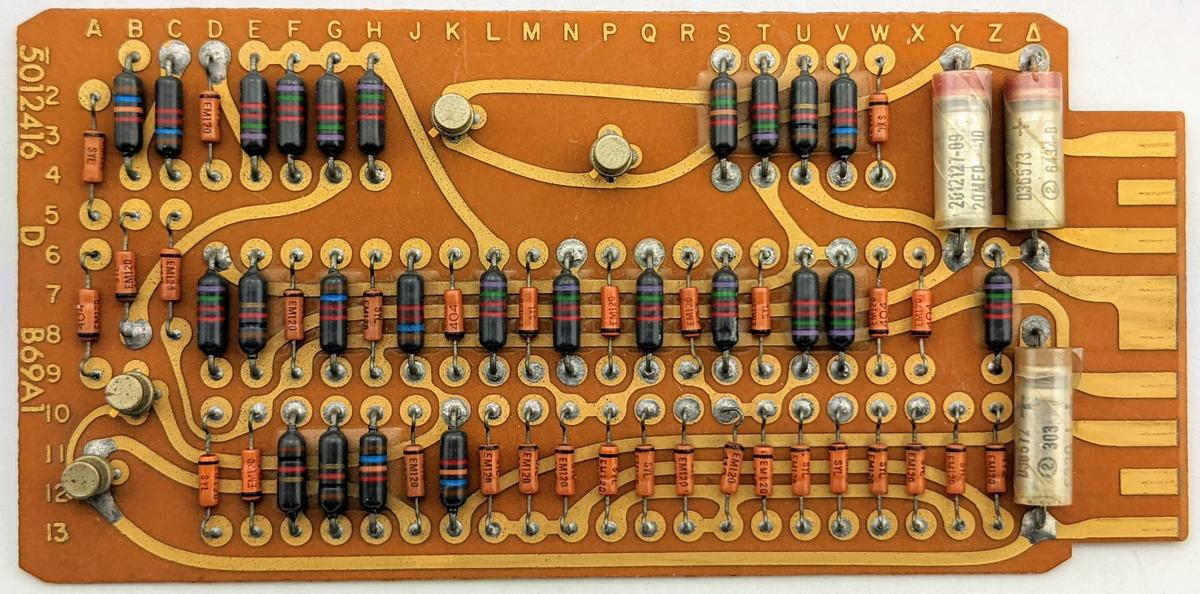

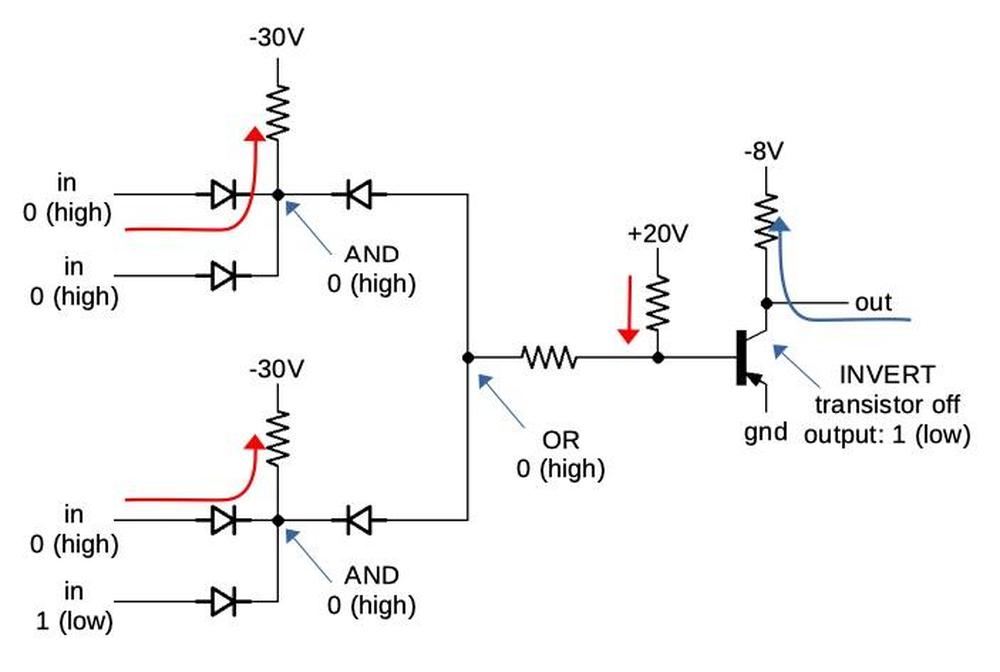

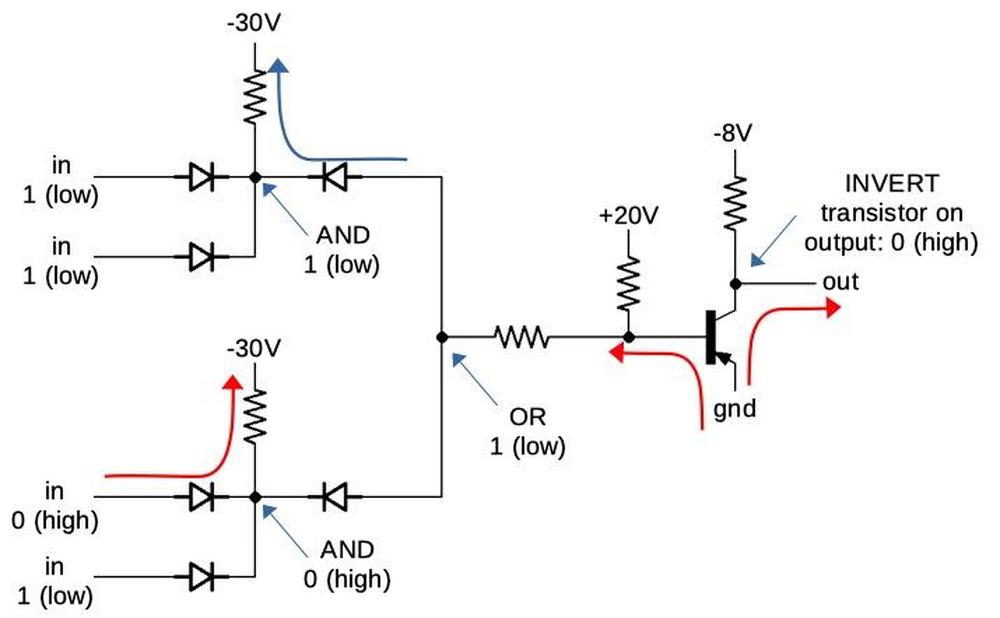

At this point, the Group Decode ROM performs the first stage of instruction decoding, classifying the instruction into various categories based on the opcode byte. Most of the 8086's instructions are implemented in microcode. However, a few instructions are so simple that they are implemented with logic circuits. For example, the CLC (Clear Carry) instruction clears the carry flag directly. The Group Decode ROM categorizes these instructions as 1BL (one-byte, implemented in logic). The loader responds by issuing an SC (Second Clock) signal to wrap up execution and start the next instruction. Thus, these simple instructions take two clock cycles.

The 8086 has various prefix bytes that can be put in front of an instruction to change its behavior. For instance, a segment prefix changes the memory segment that the instruction uses. A LOCK prefix locks the bus during the next instruction. The Group Decode ROM detects a prefix and outputs a prefix signal. This causes the prefix to be handled in logic, rather than microcode, similar to the 1BL instructions. Thus, a prefix also takes one byte and two clock cycles.

The remaining instructions are handled by microcode.2 Let's start with a one-byte instruction such as INC AX, which increments the AX register. As before, the loader gets the instruction byte from the prefetch queue. The Group Decode ROM determines that this instruction is implemented in microcode and can start after one byte, so the microcode engine starts running.

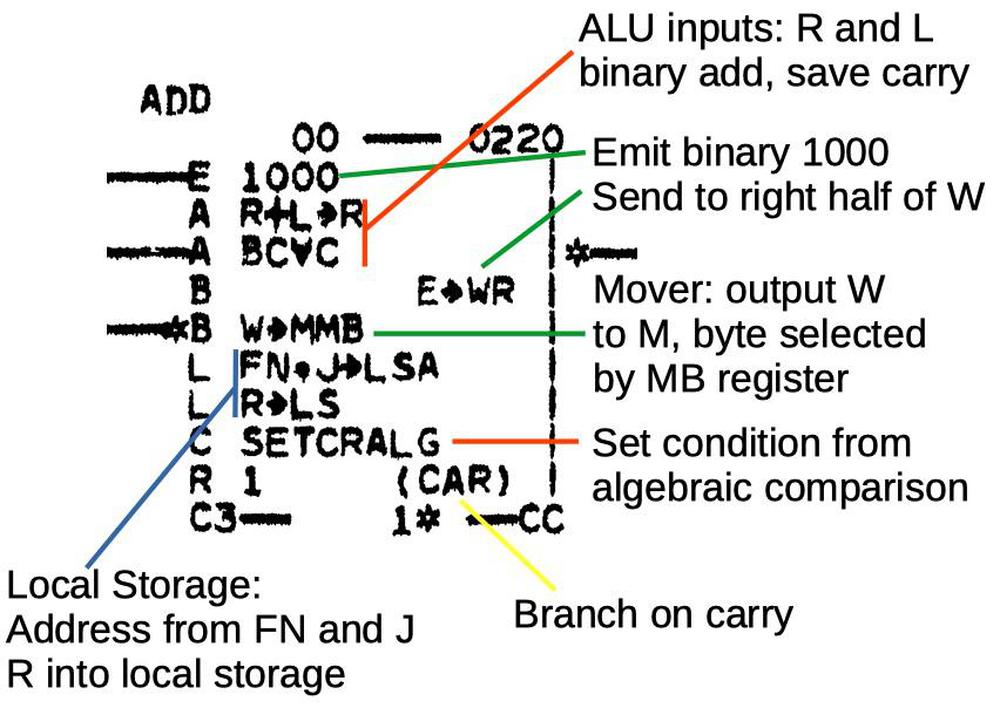

The microcode below handles the increment and decrement instructions. It moves the appropriate register, indicated by M, to the ALU's temporary B register. It puts the incremented or decremented result (Σ) back into the register (M). RNI tells the loader to run the next instruction. With two micro-instructions, this instruction takes two clock cycles.

| Move | Action | Flags | Comment |
|---|---|---|---|
| M → tmpB | XI tmpB, NX | | INC/DEC: get value from M, set up ALU |
| Σ → M | WB,RNI | F | put result in M, run next instruction |

But what happens with an instruction that is more than one byte long, such as adding an immediate value to a register? Let's look at ADD AX,1234, which adds 1234 to the AX register. As before, the loader reads one byte and then the microcode engine starts running. At this point, the 8086 doesn't "realize" that this is a 3-byte instruction. The first line of the microcode below gets one byte of the immediate operand: Q→tmpBL loads a byte from the instruction prefetch queue into the low byte of the temporary B register. Similarly, the second line loads the second byte. The next line puts the register value (M) in tmpA. The last line puts the sum back into the register and runs the next instruction. Since this instruction takes two bytes from the prefetch queue, it is three bytes long in total. But nothing explicitly indicates this instruction is three bytes long.

| Move | Action | Flags | Comment |
|---|---|---|---|
| Q → tmpBL | JMPS L8 2 | | alu A,i: get byte from queue |
| Q → tmpBH | | | get byte from queue |
| M → tmpA | XI tmpA, NX | | get value from M, set up ALU |
| Σ → M | WB,RNI | F | put result in M, run next instruction |

You can also add a one-byte immediate value to a register, such as ADD AL,12. This uses the same microcode as above. However, in the first line, JMPS L8 is a conditional jump that skips the second micro-instruction if the data length is 8 bits. Thus, the microcode only consumes one byte from the prefetch queue, making the instruction two bytes long. In other words, what makes this instruction two bytes instead of three is the bit in the opcode which triggers the conditional jump in the microcode.
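The way the instruction length "falls out" of the microcode can be sketched in Python. This is a simplified model, not the actual microcode engine: the function name `add_immediate` and its arguments are invented for illustration, flags are ignored, and the prefetch queue is just a `deque` holding the bytes that follow the opcode.

```python
from collections import deque


def add_immediate(queue, reg_value, is_8bit):
    """Follow the four micro-instructions for ADD AL/AX,immediate.

    `queue` holds the bytes after the opcode. Returns (result, bytes_consumed).
    """
    consumed = 0

    tmp_b = queue.popleft()            # Q -> tmpBL: low byte of the immediate
    consumed += 1
    if not is_8bit:                    # JMPS L8 skips this step for 8-bit data
        tmp_b |= queue.popleft() << 8  # Q -> tmpBH: high byte of the immediate
        consumed += 1

    tmp_a = reg_value                  # M -> tmpA: register operand
    mask = 0xFF if is_8bit else 0xFFFF
    result = (tmp_a + tmp_b) & mask    # Σ -> M: the sum goes back to the register
    return result, consumed


# ADD AX,1234h: the opcode is followed by the bytes 34h 12h (low byte first).
# The trailing 90h stands in for the start of the next instruction.
result, used = add_immediate(deque([0x34, 0x12, 0x90]), 0x0001, is_8bit=False)
print(hex(result), 1 + used)   # total length = opcode byte + bytes consumed
```

Nothing in the function is told the instruction length; the total is simply the opcode byte plus however many bytes were popped from the queue, which is two for the 16-bit form and one for the 8-bit form.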

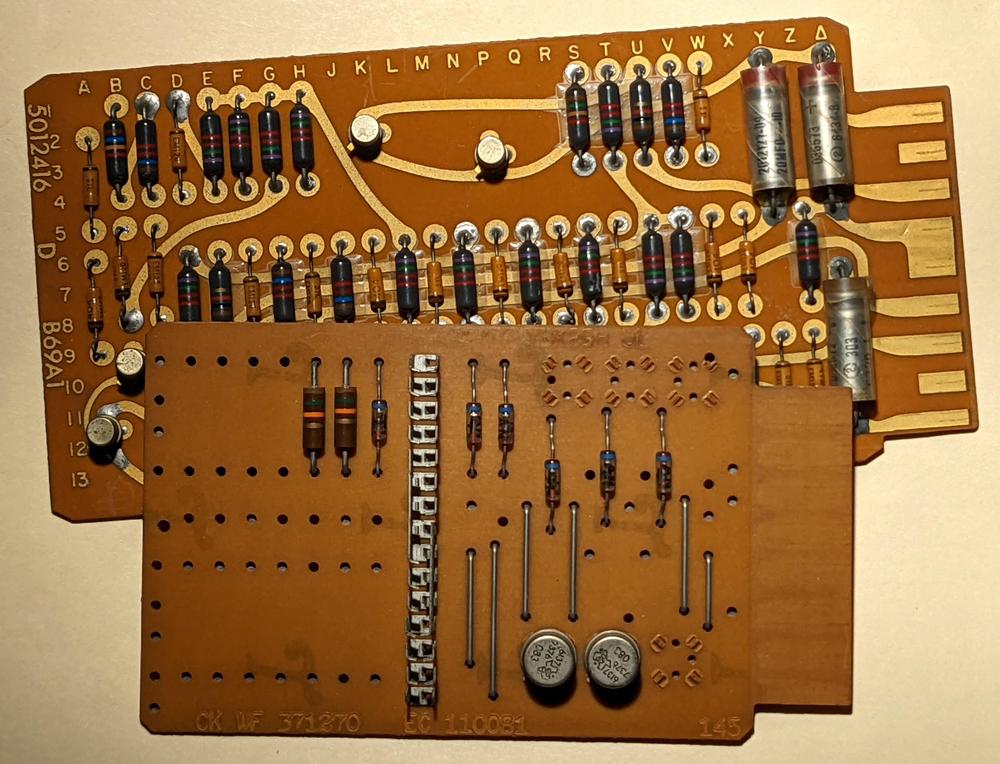

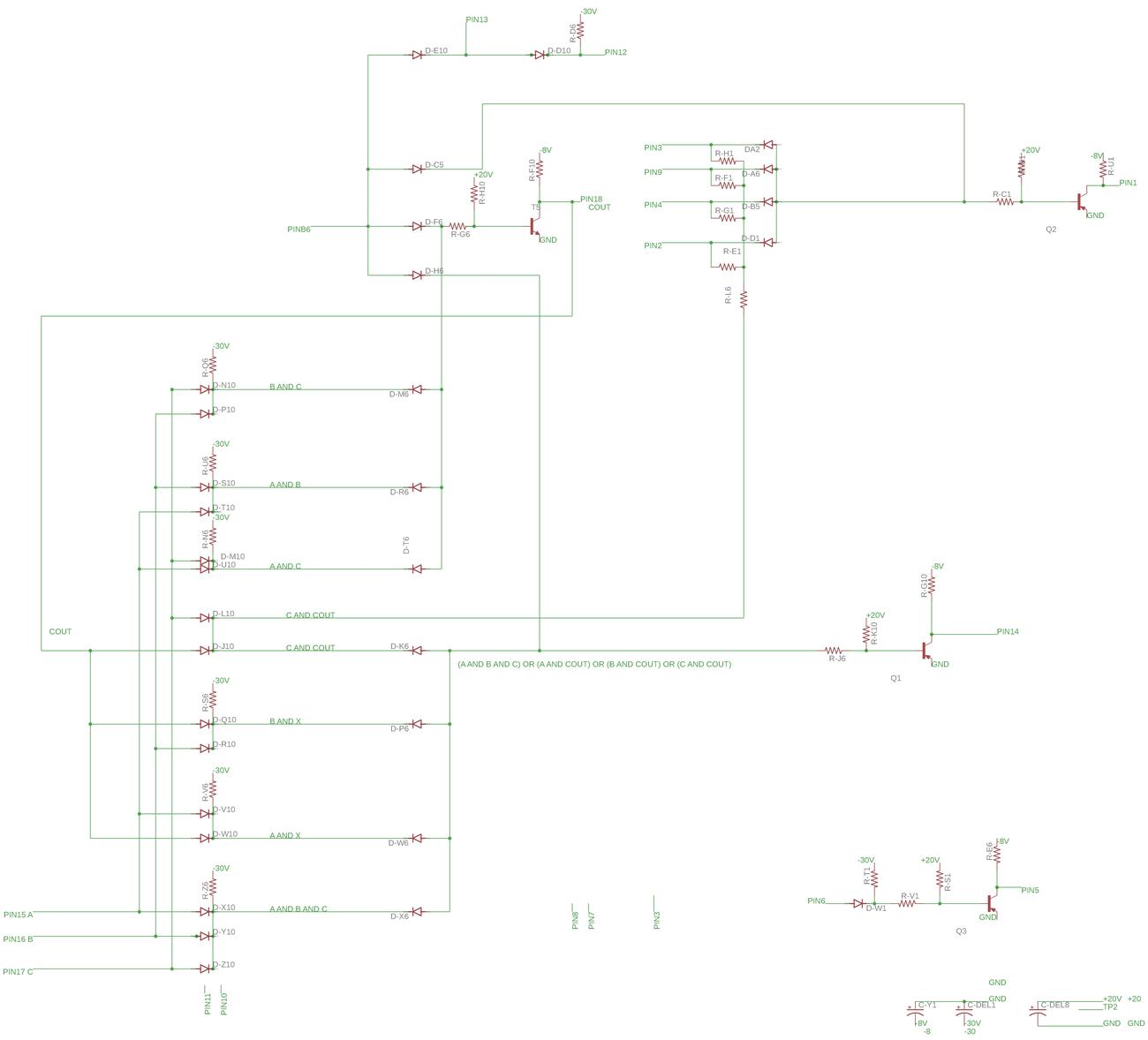

The 8086 has another class of instructions, those with a ModR/M byte following the opcode. The Group Decode ROM classifies these instructions as 2BR (two-byte ROM), indicating that the second byte must be fetched before processing by the microcode ROM. For these instructions, the loader fetches the second byte from the prefetch queue before triggering the SC (Second Clock) signal to start microcode execution.
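To make the categories described so far concrete, here is a minimal Python sketch of the Group Decode ROM's role. It is a lookup over a tiny, hand-picked subset of opcodes, not a model of the real ROM, which classifies every opcode and produces more signals than this. The names `CATEGORY`, `group_decode`, and `loader_bytes` are hypothetical, and the "microcode" label is just this sketch's name for instructions whose microcode starts after one byte.

```python
# Tiny illustrative subset; the real Group Decode ROM classifies every opcode.
CATEGORY = {}
for op in (0x26, 0x2E, 0x36, 0x3E, 0xF0):   # segment overrides ES/CS/SS/DS, LOCK
    CATEGORY[op] = "prefix"
CATEGORY[0xF8] = "1BL"                       # CLC, handled in logic
for op in (0x00, 0x01, 0x02, 0x03):          # ADD forms that take a ModR/M byte
    CATEGORY[op] = "2BR"
for op in (0x04, 0x05, 0x40):                # ADD AL,imm8 / ADD AX,imm16 / INC AX
    CATEGORY[op] = "microcode"


def group_decode(opcode):
    """Return the category for an opcode covered by this sketch."""
    try:
        return CATEGORY[opcode]
    except KeyError:
        raise ValueError(f"opcode {opcode:#04x} is outside this sketch") from None


def loader_bytes(opcode):
    """Bytes the loader takes from the queue before execution proceeds."""
    return 2 if group_decode(opcode) == "2BR" else 1
```

Note that `loader_bytes` only ever returns 1 or 2. Any bytes beyond that are not the loader's concern; they are pulled from the queue later by the microcode.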

The ModR/M byte indicates the addressing mode that the instruction should use, such as register-to-register or memory-to-register. The ModR/M byte can change the instruction length by specifying an address displacement of one or two bytes. A second ROM called the Translation ROM selects the appropriate microcode for the addressing mode. For example, if the addressing mode includes an address displacement, the microcode below is used:

| Move | Action | Comment |
|---|---|---|
| Q → tmpBL | JMPS MOD1 12 | [i]: get byte(s) |
| Q → tmpBH | | |
| Σ → tmpA | BX EAFINISH | 12: add displacement |

This microcode fetches two displacement bytes from the prefetch queue (Q). However, if the ModR/M byte specifies a one-byte displacement, the MOD1 condition causes the microcode to jump over the second fetch. Thus, this microcode uses one or two additional instruction bytes depending on the value of the ModR/M byte.
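For reference, the number of displacement bytes implied by a ModR/M byte can be written as a small function. This sketch follows the standard 8086 ModR/M encoding (the top two bits are the mod field, the bottom three are r/m) rather than anything specific to the microcode above, and the function name is made up for this example.

```python
def displacement_bytes(modrm):
    """Number of displacement bytes that follow a given 8086 ModR/M byte."""
    mod = (modrm >> 6) & 0b11   # top two bits select the addressing mode
    rm = modrm & 0b111          # bottom three bits select the register/memory form

    if mod == 0b01:
        return 1                # 8-bit displacement
    if mod == 0b10:
        return 2                # 16-bit displacement
    if mod == 0b00 and rm == 0b110:
        return 2                # special case: direct 16-bit address
    return 0                    # register operand or memory with no displacement
```

In the 8086 itself there is no such function; the count is implicit in which microcode runs and whether the MOD1 jump is taken.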

To summarize, nothing in the 8086 "knows" how long an instruction is. The Group Decode ROM makes part of the decision, classifying instructions as a prefix, 1-byte logic, 2-byte ROM, or otherwise, causing the loader to fetch one or two bytes. The microcode then consumes instruction bytes as needed. In the end, the length of an 8086 instruction is determined by how many bytes are taken from the prefetch queue by the time it ends.

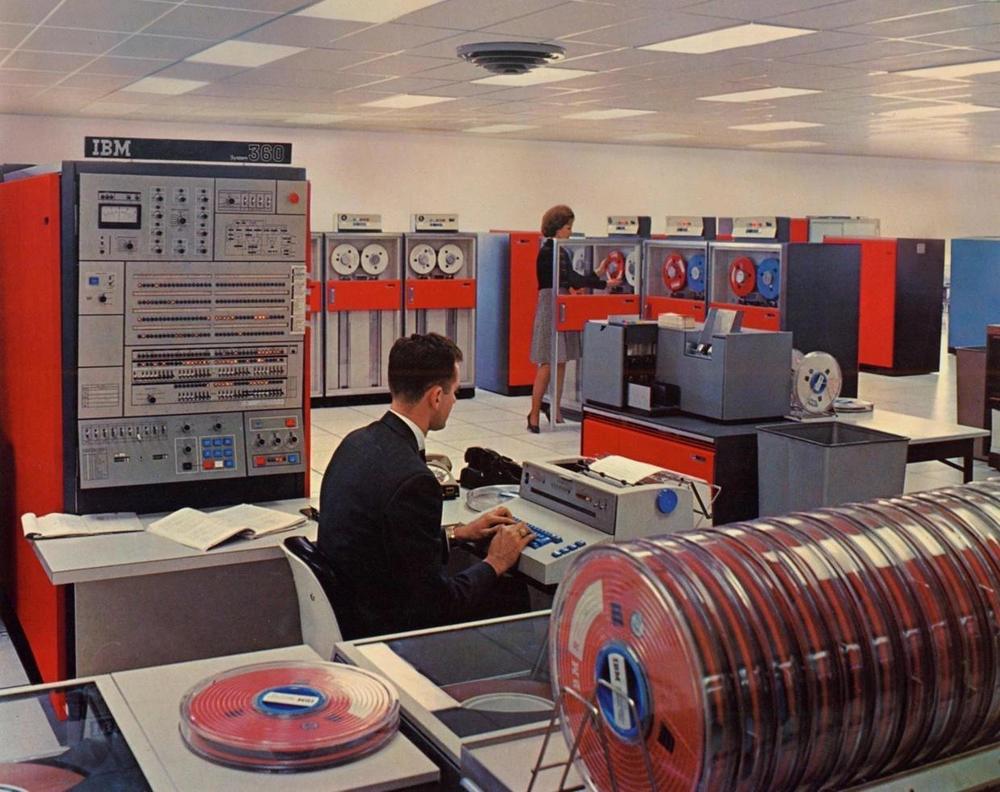

Some other systems

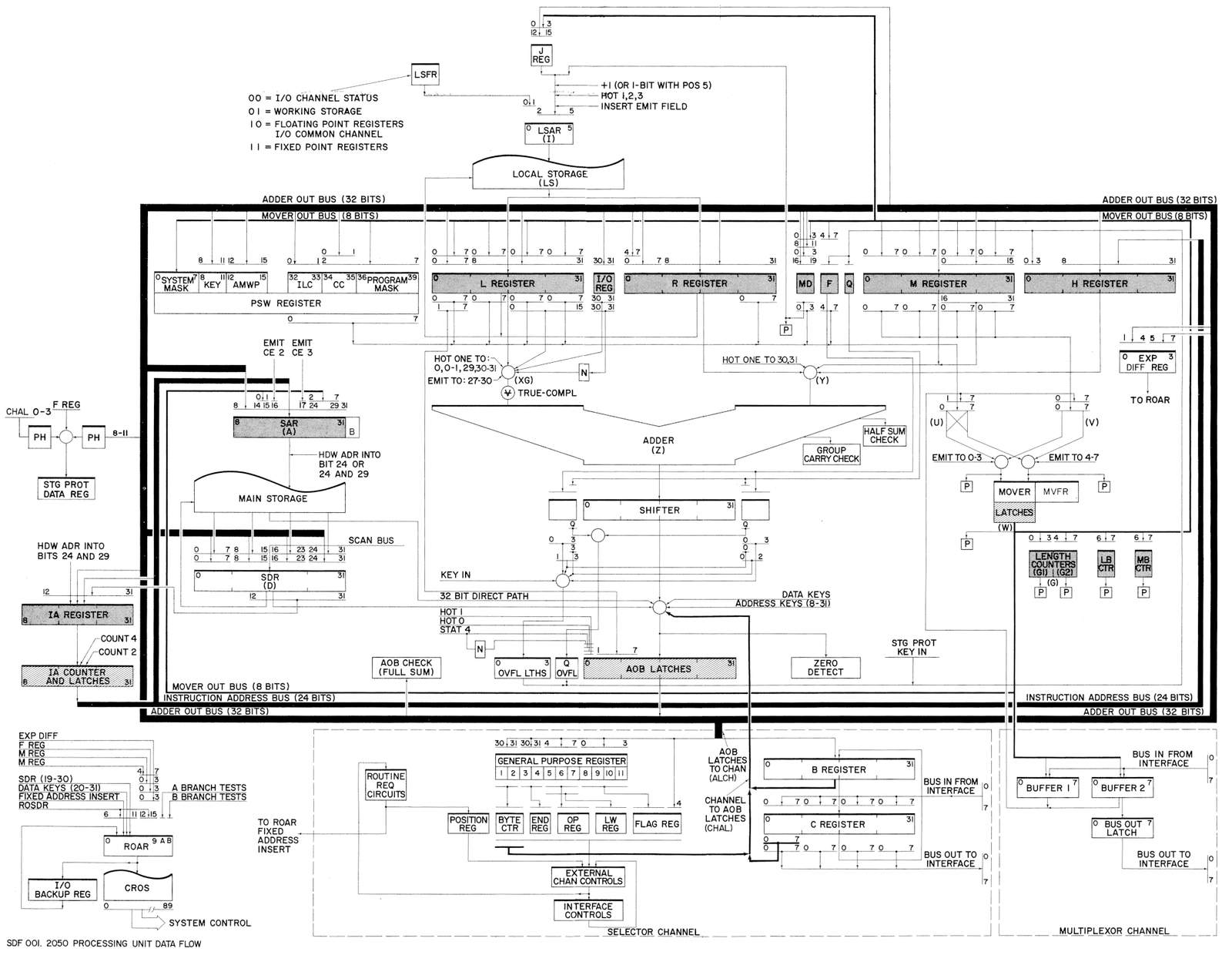

It's interesting to see how other processors deal with instruction length. For example, RISC processors (Reduced Instruction Set Computers) typically have fixed-length instructions. For instance, the ARM-1 processor used 32-bit instructions, making instruction decoding very simple.

Early microprocessors such as the MOS Technology 6502 (1975) didn't use microcode, but were controlled by state machines. The CPU fetched instruction bytes from memory as needed, as it moved through various execution states. Thus, as with the 8086, the length of an instruction wasn't explicit, but was however many bytes it used.

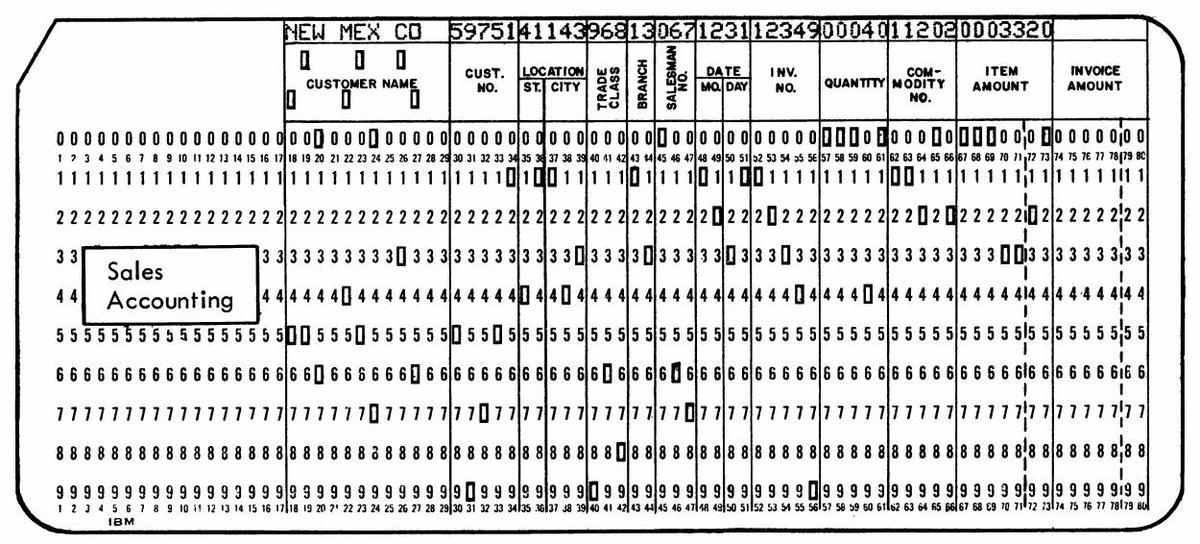

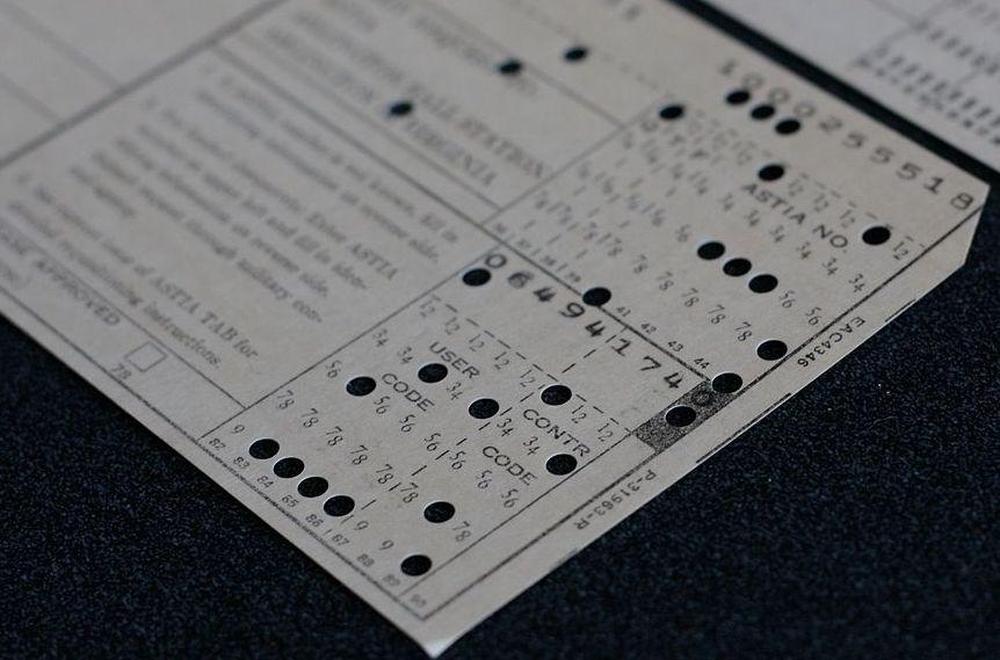

The IBM 1401 computer (1959) took a completely different approach with its variable-length words. Each character in memory had an associated "word mark" bit, which you can think of as a metadata bit. Each machine instruction consisted of a variable number of characters with a word mark on the first one. Thus, the processor could read instruction characters until it hit a word mark, which indicated the start of the next instruction. The word mark explicitly indicated to the processor how long each instruction was.
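The word-mark scheme is easy to sketch in Python. Here memory is modeled as a string of characters plus a parallel list of word-mark bits; this representation, the function name, and the sample characters are all assumptions made for illustration, not how the 1401 hardware stored anything.

```python
def split_instructions(chars, word_marks):
    """Split a character stream into instructions using word marks.

    A word mark on a character means "an instruction starts here".
    """
    instructions = []
    current = ""
    for ch, marked in zip(chars, word_marks):
        if marked and current:
            # A word mark ends the previous instruction and starts a new one.
            instructions.append(current)
            current = ""
        current += ch
    if current:
        instructions.append(current)
    return instructions


# Two made-up instructions, 7 and 4 characters long.
chars = "A123456B789"
marks = [1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0]
print(split_instructions(chars, marks))
```

Unlike the 8086, the boundary information here lives in the data itself, so the splitter never needs to understand what any instruction does.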

Perhaps the worst approach for variable-length instructions was the Intel iAPX 432 processor (1981), which had instructions with variable bit lengths, from 6 to 321 bits long. As a result, instructions weren't aligned on byte boundaries, making instruction decoding even more inconvenient. This was just one of the reasons that the iAPX 432 ended up overly complicated, years behind schedule, and a commercial failure.

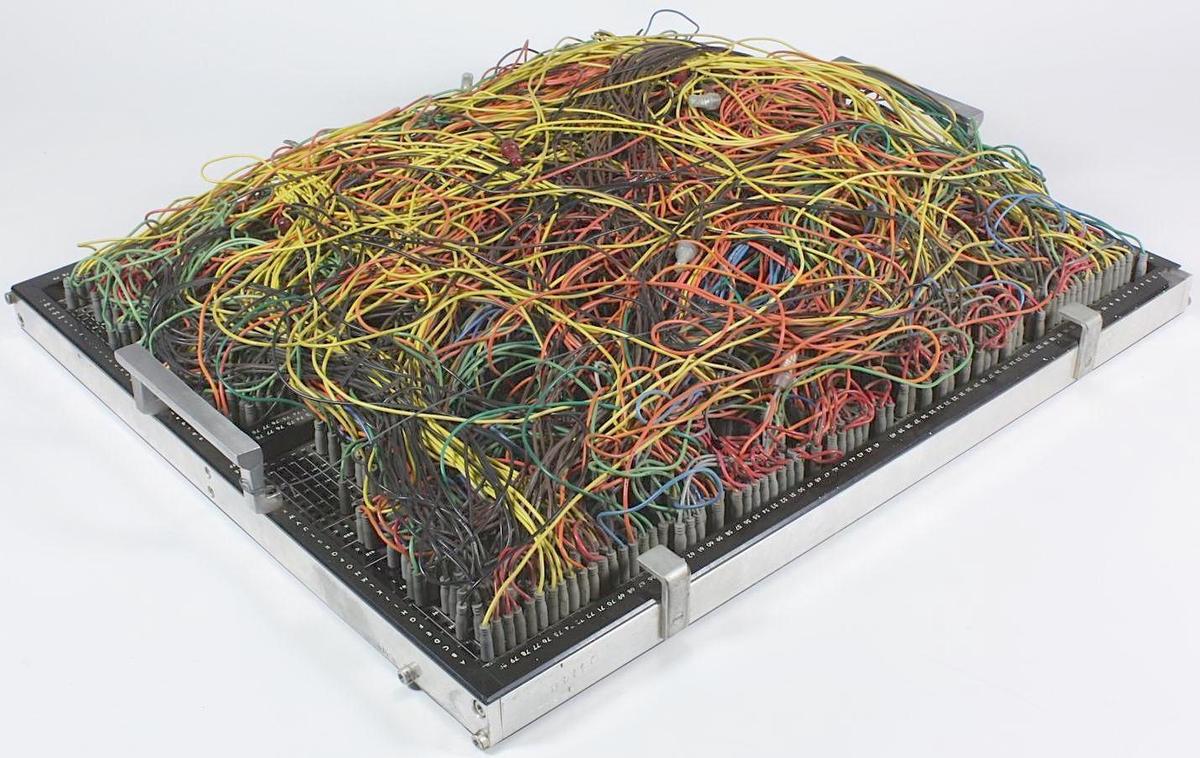

The 8086's variable-length instructions led to the x86 architecture, with instructions from 1 to 15 bytes long. This is particularly inconvenient with modern superscalar processors that run multiple instructions in parallel. The problem is that the processor must break the instruction stream into individual instructions before they execute. The Intel P6 microarchitecture used in the Pentium Pro (1995) has instruction decoders to decode the instruction stream into micro-operations.4 It starts with an "instruction length block" that analyzes the first bytes of the instruction to determine how long it is. (This is not a straightforward task to perform rapidly on multiple instructions in parallel.) The "instruction steering block" uses this information to break the byte stream into instructions and steer instructions to the decoders.

The AMD K6 3D processor (1999) had predecode logic that associated 5 predecode bits with each instruction byte: three pointed to the start of the next instruction, one indicated the length depended on a D bit, and one indicated the presence of a ModR/M byte. This logic examined up to three bytes to make its decisions. Instructions were split apart and assigned to decoders based on the predecode bits. In some cases, the predecode logic gave up and flagged the instruction as "unsuccessfully predecoded", for instance an instruction longer than 7 bytes. These instructions were handled by a slower path.

Conclusions

The 8086 processor has instructions with a variety of lengths, but nothing in the processor explicitly determines the length. Instead, an instruction uses as many bytes as it needs. (That sounds sort of tautological, but I'm not sure how else to put it.) The Group Decode ROM makes an initial classification, the Translation ROM determines the addressing mode, and the microcode consumes bytes as needed.

While this approach gave the 8086 a flexible instruction set, it created a problem in the long run for the x86 architecture, requiring complicated logic to determine instruction length. One benefit of RISC-based processors such as the Apple M1 is that they have (mostly) constant instruction lengths, making instruction decoding faster and simpler.

I've written multiple posts on the 8086 so far and plan to continue reverse-engineering the 8086 die, so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @kenshirriff@oldbytes.space.

Notes and references

- I was inspired to investigate instruction length based on a Stack Overflow question. ↩

-

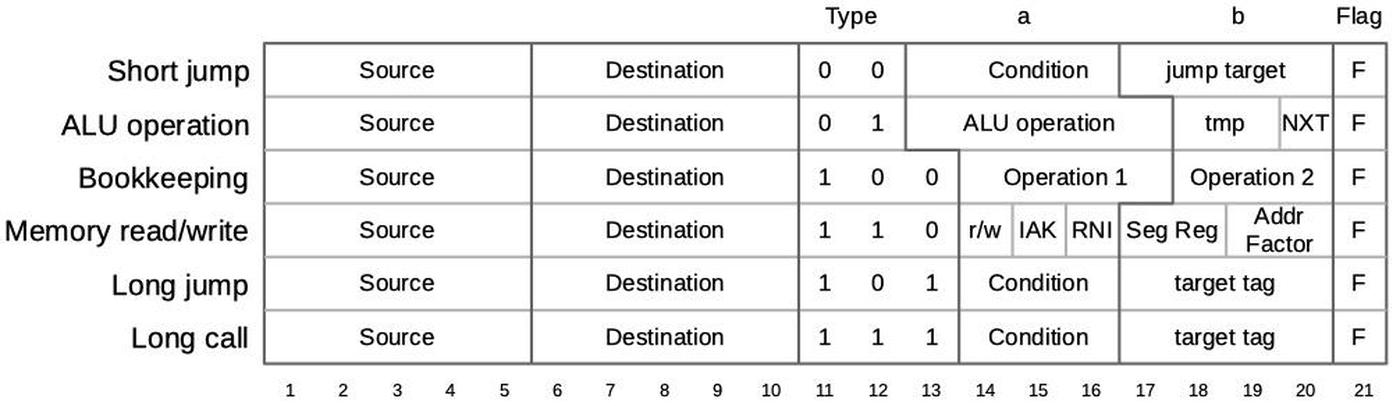

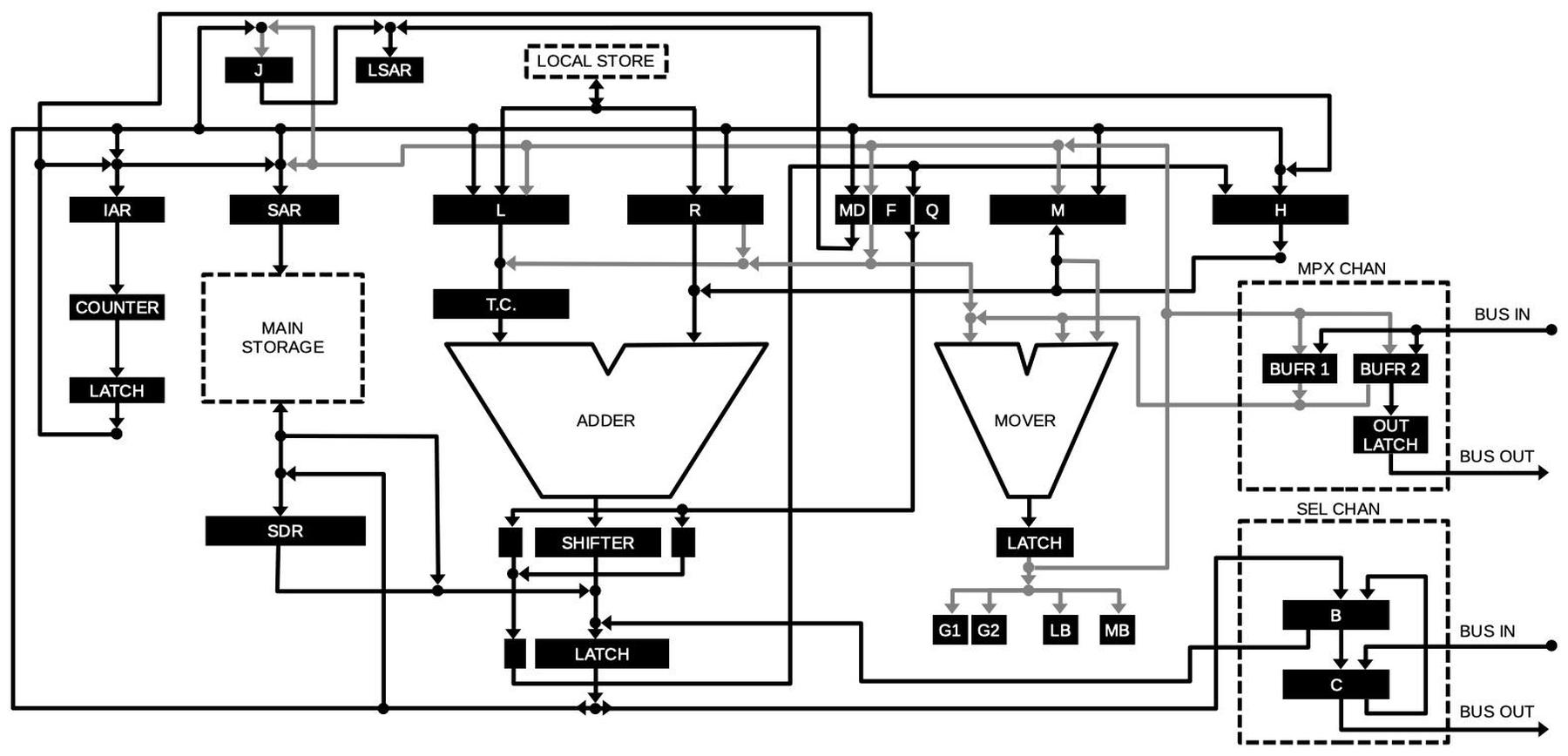

I'll just give a brief overview of microcode here. Each micro-instruction is 21 bits long, as shown below. A micro-instruction specifies a move between a source register and destination register. It also has an action that depends on the micro-instruction type. For more details, see my post on the 8086 microcode pipeline.

The encoding of a micro-instruction into 21 bits. Based on NEC v. Intel: Will Hardware Be Drawn into the Black Hole of Copyright? -

The 8088 processor, used in the original IBM PC, has a smaller 4-byte prefetch queue. The 8088 is almost the same as the 8086, except it has an 8-bit external bus instead of a 16-bit external bus. This makes memory accesses (including prefetches) slower, so a smaller prefetch queue works better. ↩

- The book Modern Processor Design discusses the P6 microarchitecture in detail. The book The Anatomy of a High-Performance Microprocessor discusses the AMD K6 3D processor in even more detail; see chapter 2. ↩