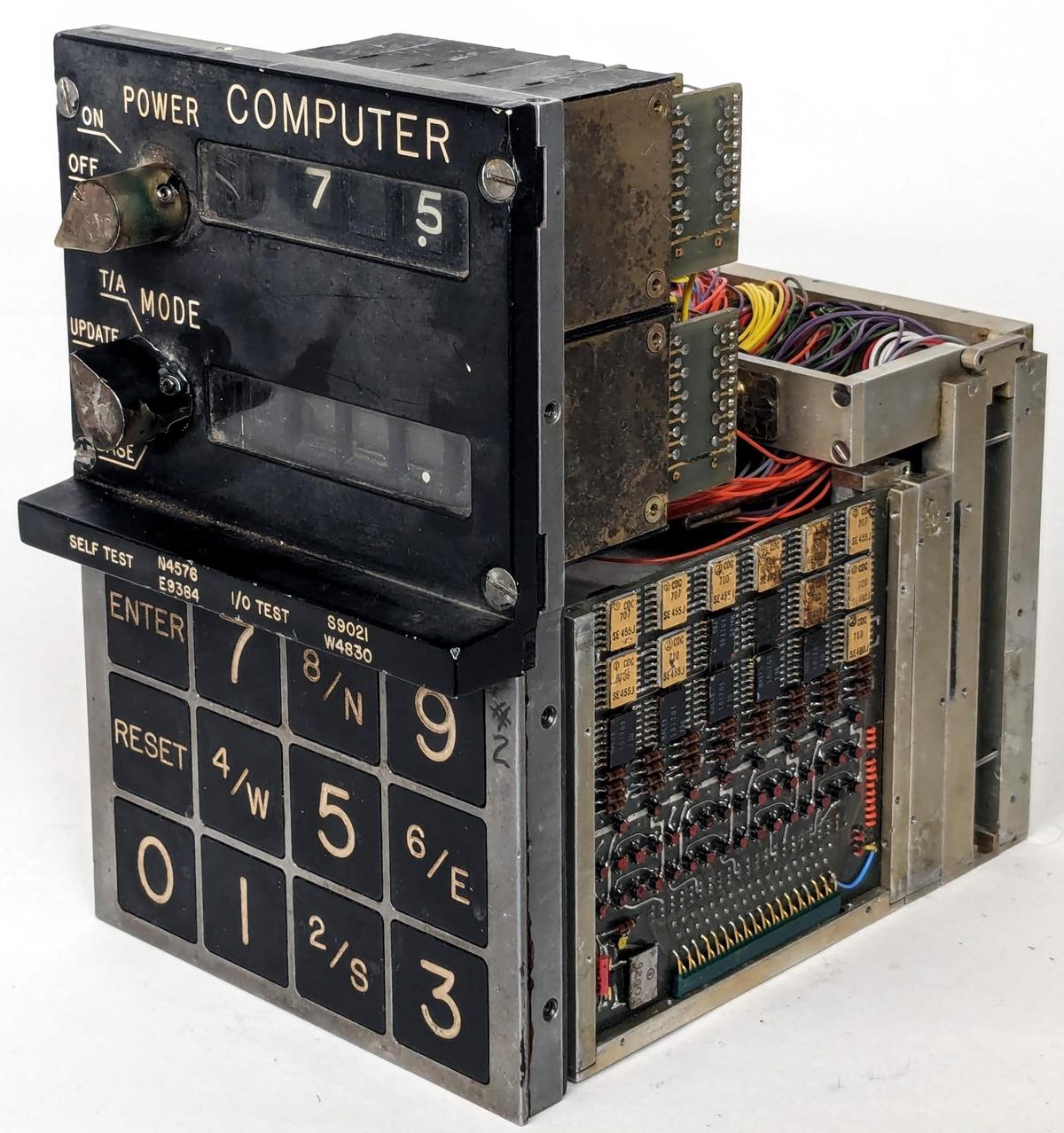

I recently obtained an aerospace computer from the early 1970s, apparently part of a navigation system. Aerospace computers are an interesting but mostly neglected area of computer hardware, so I'm always delighted to examine one up close. In an era when most computers were large mainframes, aerospace computers packed dense electronics into a small package, using surface-mounted components and multi-layer printed circuit boards, technologies that wouldn't reach the mainstream for another decade. This blog post examines the circuitry and components inside this computer, including an unusual electromechanical display. Although I was unable to determine who manufactured this system or even its exact function, it illustrates how hundreds of integrated circuits and a core memory stack can be crammed into a compact package.

The keyboard

The device has a simple numeric keyboard with a few unexpected features. The numeric keypad can also be used for direction entry, as four of the keys have N, S, E, and W on them. The keys are large, roughly the size of the Apollo spacecraft's DSKY buttons. My theory is that these buttons are designed for operation with gloves, perhaps in a fighter plane where the pilot wears a pressure suit. The buttons are hinged at the top, so they don't push straight in, but pivot when pressed.

Numeric keypads typically use one of two layouts: a telephone-style keypad has the digits 123 at the top, while a calculator-style keypad has the digits 789 at the top. Interestingly, this device uses a calculator layout, while most aviation devices have a telephone layout. The Apollo DSKY also used a calculator layout, which could be a hint at a NASA connection for this device.

Above the keyboard are four codes for self-test: N4576, E9384, S9021, and W4830. Entering these codes on the keyboard presumably triggered the appropriate test of the system when the switch was in test mode.

The display

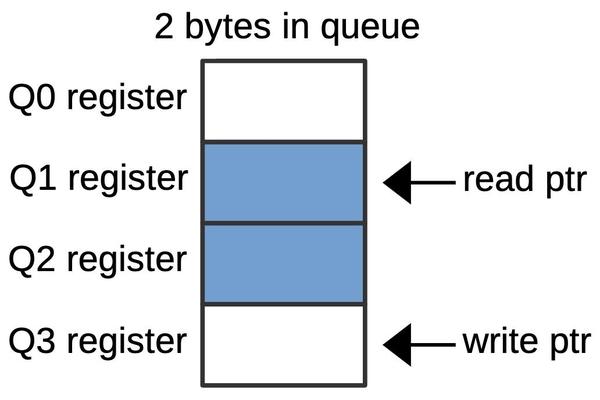

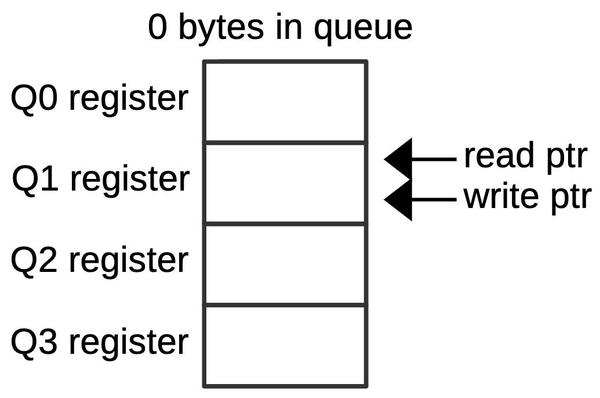

The computer's display is simple, showing a latitude and longitude. Each value has one decimal position, providing 0.1° of accuracy. The latitude and longitude are prefixed with a compass direction: North/South for latitude and East/West for longitude.

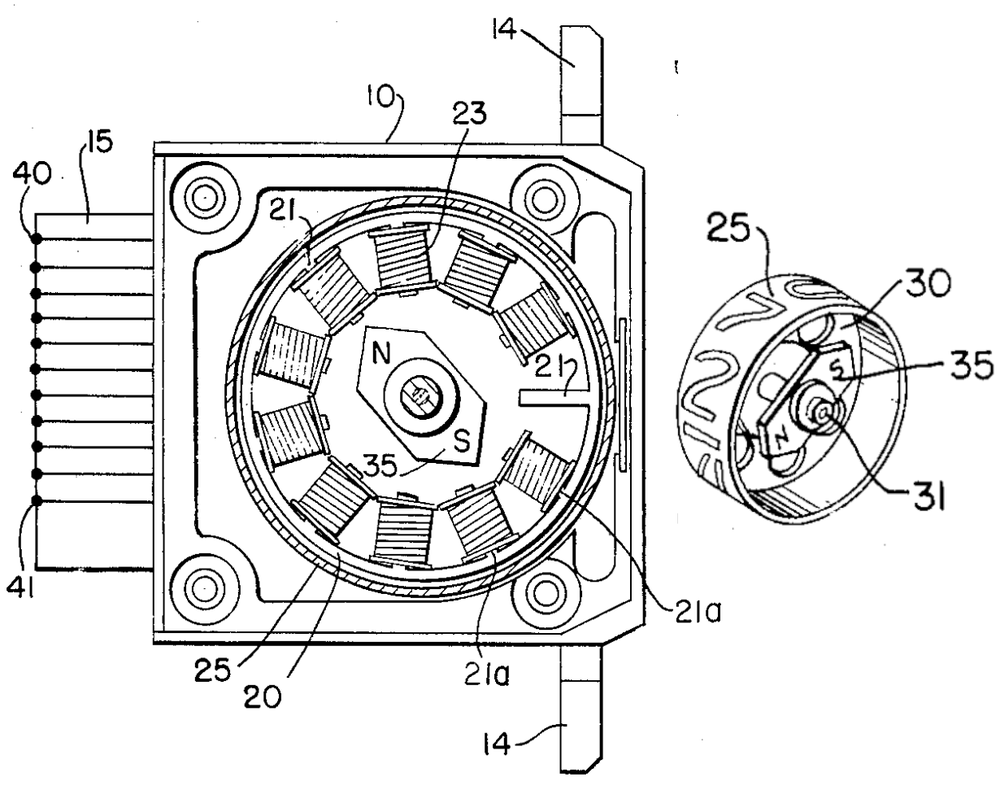

The display is constructed from an unusual type of electromechanical indicator, with an indicator module for each digit. Each digit position has a rotating wheel with 11 positions (ten digits and a blank). When the indicator module for a position is energized, the wheel spins to the specified position, showing the selected digit. The two leftmost indicators are slightly different as they show a compass direction instead of a digit: N, S, E, or W. Moreover, the direction indicators can also show the compass direction with a diagonal slash through it, as seen above. Perhaps the slashed direction indicates a problem with the value.

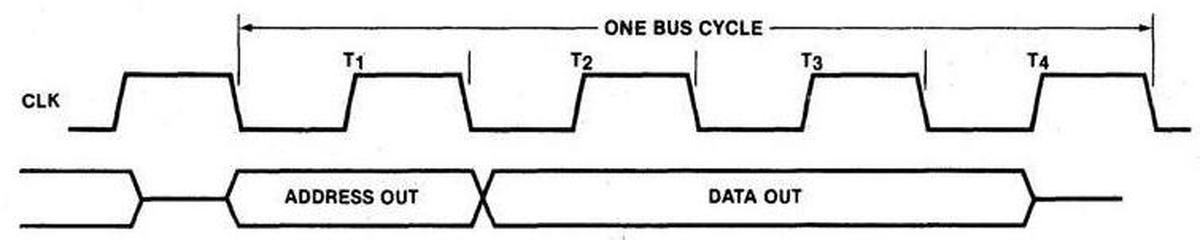

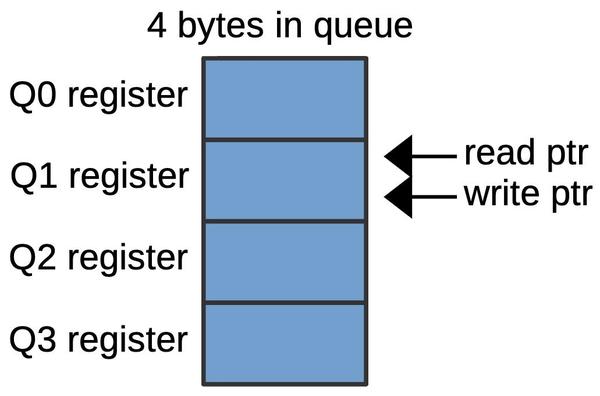

The diagram below shows how a digit indicator operates. Each digit position has an electromagnet with a wire to energize it. The dial wheel has an attached permanent magnet (indicated by N and S). Energizing one of the electromagnets causes the dial to spin to that position, aligning the permanent magnet on the dial with the electromagnet. This mechanism forms a reliable indicator with just one moving part. The displayed digit is clearer than a seven-segment display since the digit uses a real font rather than being created from segments.

Looking at the back of the keyboard/display unit shows the wiring of the display indicators. Each indicator has a common connection and ten wires to energize one of the electromagnets.1 The electromagnets are connected in a matrix, with all the "1" wires connected, the "2" wires connected, and so forth. To rotate an indicator to a particular digit, a common wire and an electromagnet wire are energized. For instance, powering the common wire of the second indicator and the "5" electromagnet wire causes the second indicator to rotate to the "5" position. The wiring has a three-dimensional structure with ten bare wires running between the boards, one for each digit value. A yellow wire hangs off each bare wire, linking it to the connector on the left. Each indicator has ten diodes on a circuit board to block "sneak" paths that would energize unselected electromagnets.

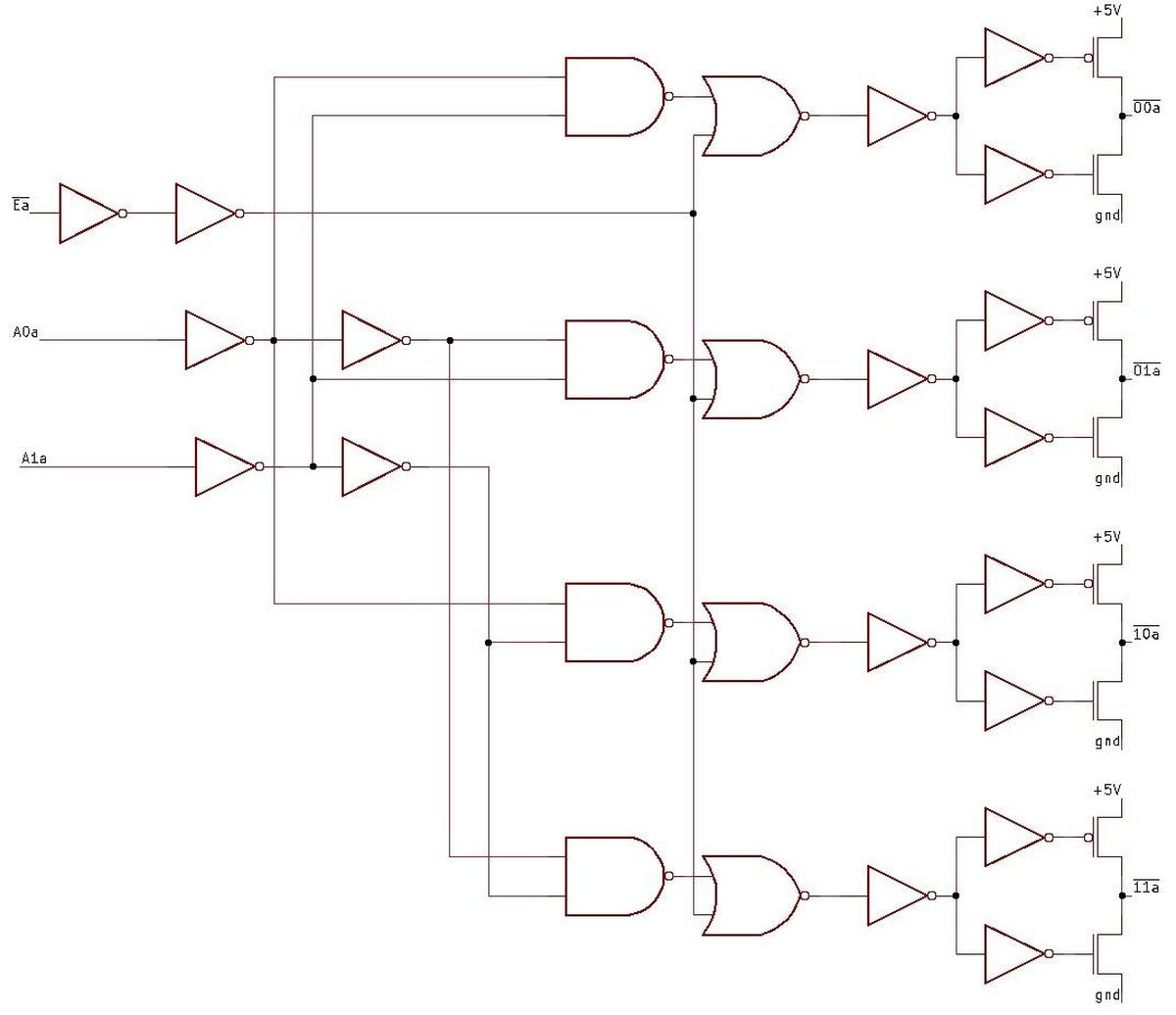

This matrix circuit reduces the amount of wiring required: although there are 100 electromagnets in total, just 20 wires are sufficient to control them. The driver circuitry, however, is a bit more complex as it must scan through the ten digit positions, activating the right pair of driver wires at the right time. Some of the logic circuitry described below must implement this scanning, as well as the driver circuitry to energize the indicators.
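The matrix addressing can be sketched in a few lines of Python. This is only an illustration of the idea: I don't know how the real driver logic sequences the display, and the function names and the zero-based numbering of the wires are my own assumptions. The two compass indicators are treated as ordinary positions here.

```python
# Hypothetical model of the 10x10 indicator matrix; all names are illustrative.
NUM_INDICATORS = 10  # one "common" wire per indicator
NUM_VALUES = 10      # one shared wire per electromagnet position ("0" to "9")


def scan_display(values):
    """Return the (common_wire, value_wire) pairs to energize, in scan order.

    Only one pair is driven at a time, so a shared value wire never
    selects an electromagnet in an indicator that is not being updated.
    """
    if len(values) != NUM_INDICATORS:
        raise ValueError("expected one value per indicator")
    pulses = []
    for position, value in enumerate(values):
        if not 0 <= value < NUM_VALUES:
            raise ValueError(f"value out of range: {value}")
        pulses.append((position, value))
    return pulses


def wire_count():
    """Compare matrix wiring with one dedicated wire per electromagnet."""
    matrix = NUM_INDICATORS + NUM_VALUES
    direct = NUM_INDICATORS * NUM_VALUES
    return matrix, direct
```

With zero-based numbering, the second indicator showing "5" corresponds to the pair `(1, 5)`, and `wire_count()` gives the 20 wires versus 100 electromagnets mentioned above.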

The display and keyboard have many similarities to the Delco Carousel Inertial Navigation System (INS) shown below. (The Delco Carousel was used in many military and civilian aircraft, from the C-141 cargo plane to the Boeing 747 passenger plane.) Both devices have two digital displays, one for latitude North/South and one for longitude East/West. Also note the numeric keypads with four keys assigned to the four compass directions. The controls of the Carousel INS system are considerably more complicated, though. The Carousel has a knob position "TK/GS" (track/ground speed), which may correspond to the "T/G" position on my device.

Note that the display on my unit has just four digits of accuracy, with one digit after the decimal point. A tenth of a degree would provide an accuracy of about ±7 miles, which is low for a navigation device. In comparison, the Delco Carousel has six digits of accuracy (± 100 feet perhaps). This suggests that the device does not provide INS navigation, but some other guidance with lower accuracy.
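The 7-mile figure follows from a quick back-of-the-envelope calculation, sketched below. It assumes the usual approximation that one degree of latitude spans about 60 nautical miles; the constant and function names are mine.

```python
# Rough estimate; assumes one degree of latitude is about 60 nautical miles.
NAUTICAL_MILES_PER_DEGREE = 60.0
STATUTE_MILES_PER_NAUTICAL_MILE = 1.15078


def step_in_statute_miles(step_degrees):
    """North-south distance covered by one step of the latitude display."""
    return (step_degrees * NAUTICAL_MILES_PER_DEGREE
            * STATUTE_MILES_PER_NAUTICAL_MILE)


tenth_degree = step_in_statute_miles(0.1)
print(f"0.1 degree of latitude is about {tenth_degree:.1f} statute miles")
```

The same step in longitude covers less ground away from the equator, since lines of longitude converge toward the poles.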

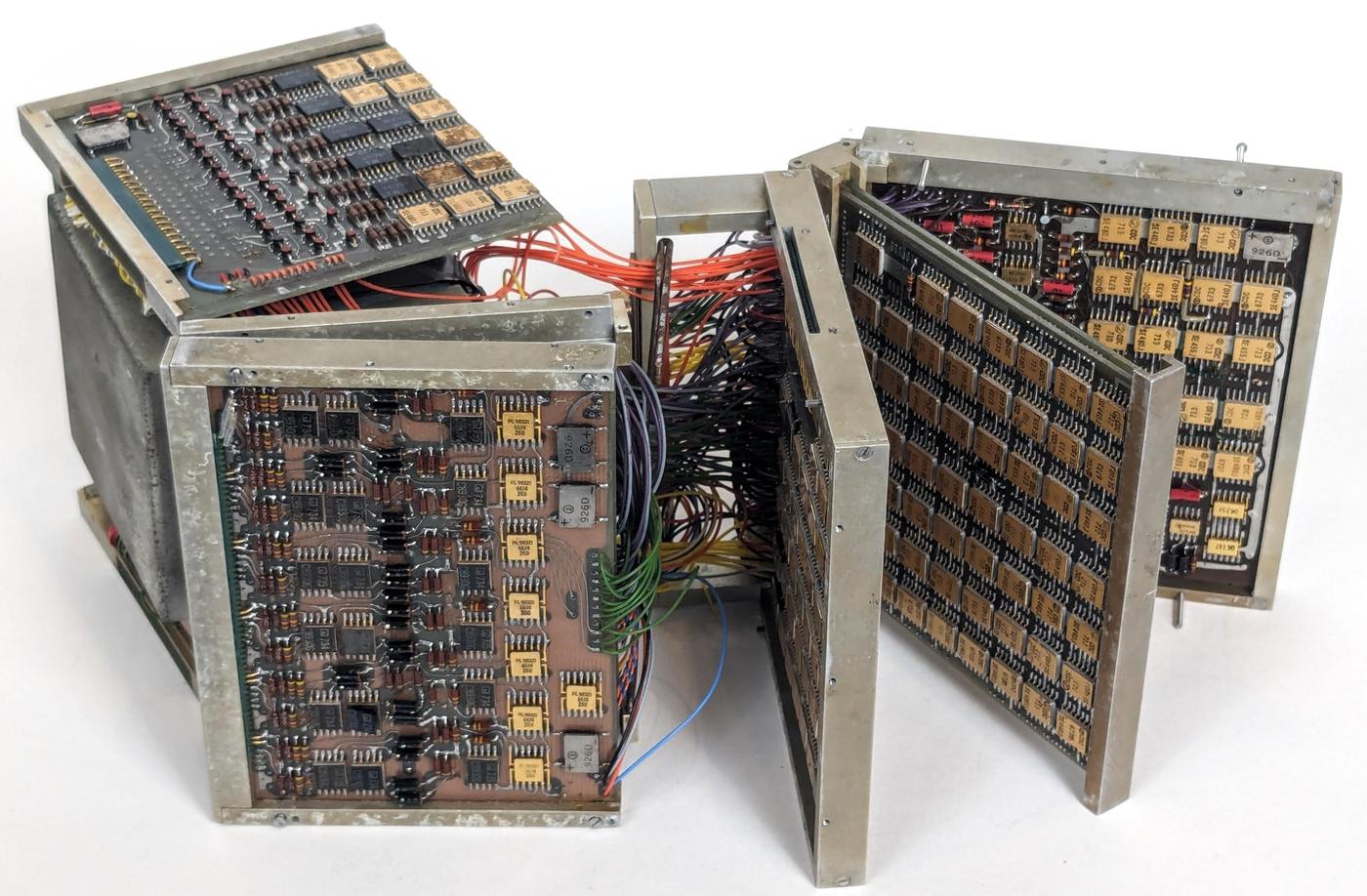

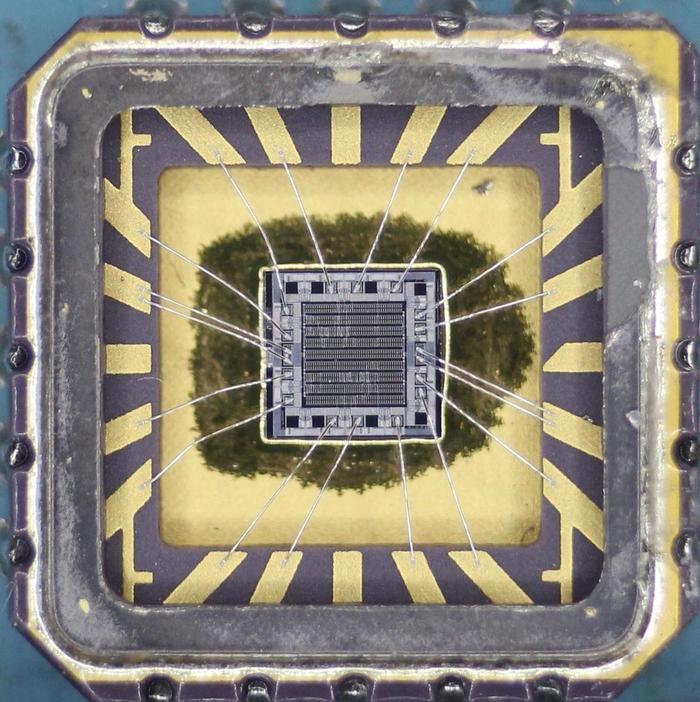

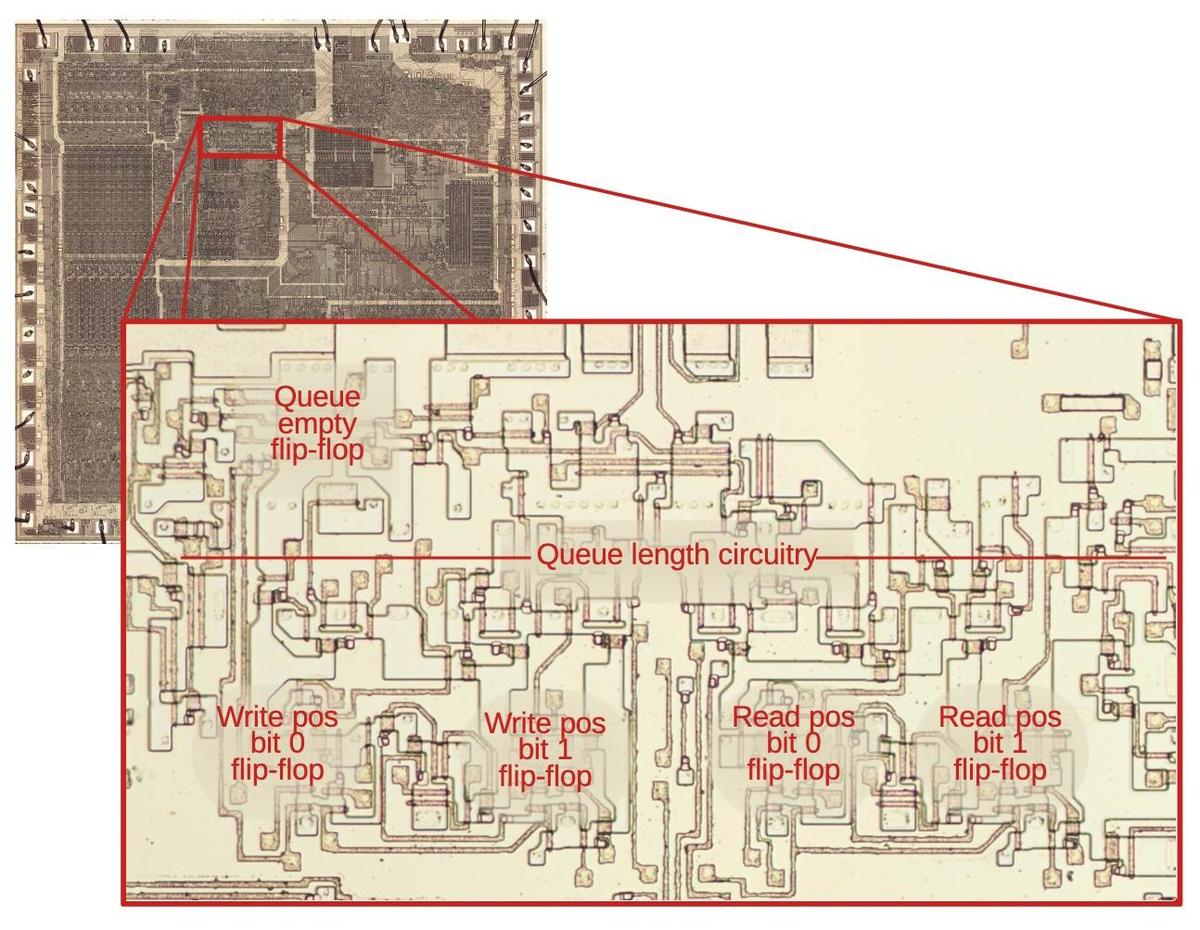

Packaging the electronics

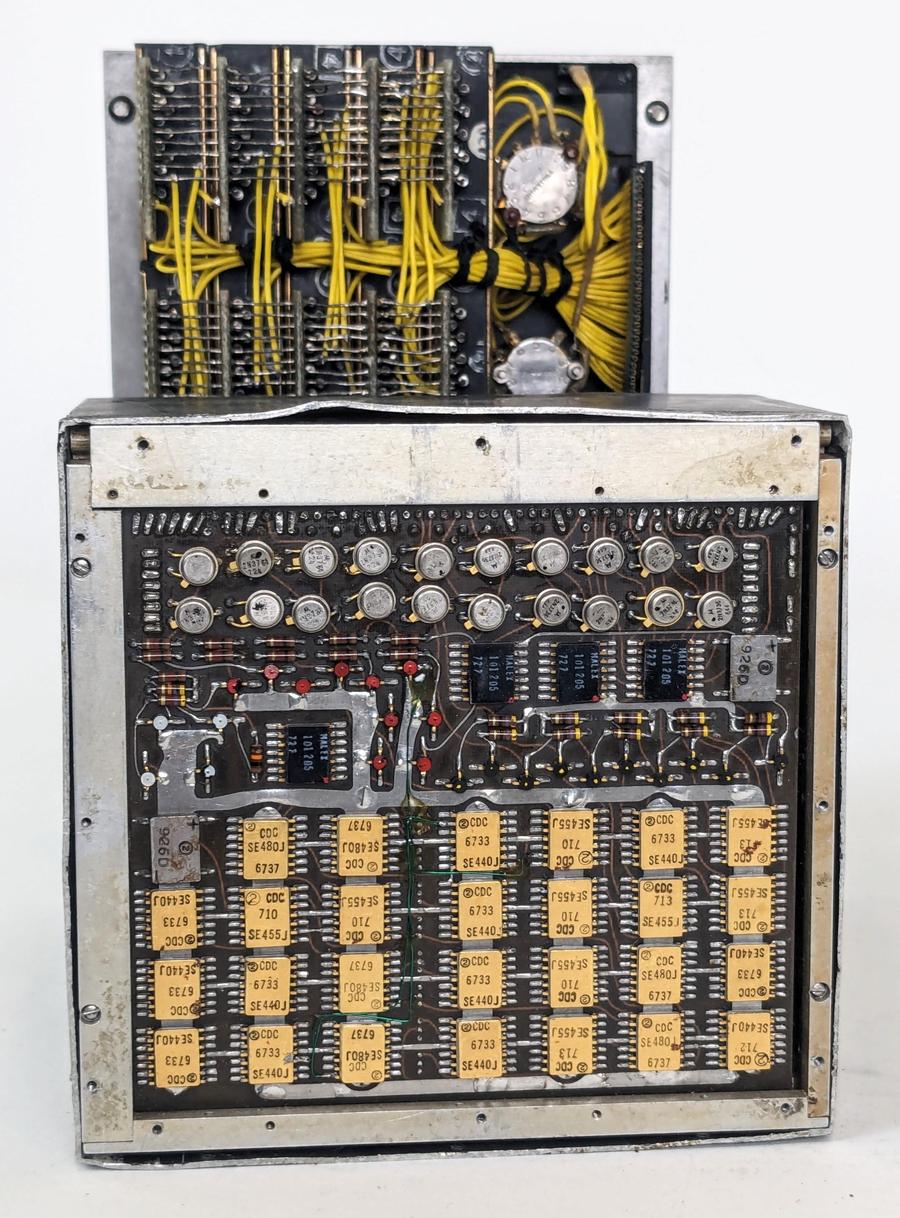

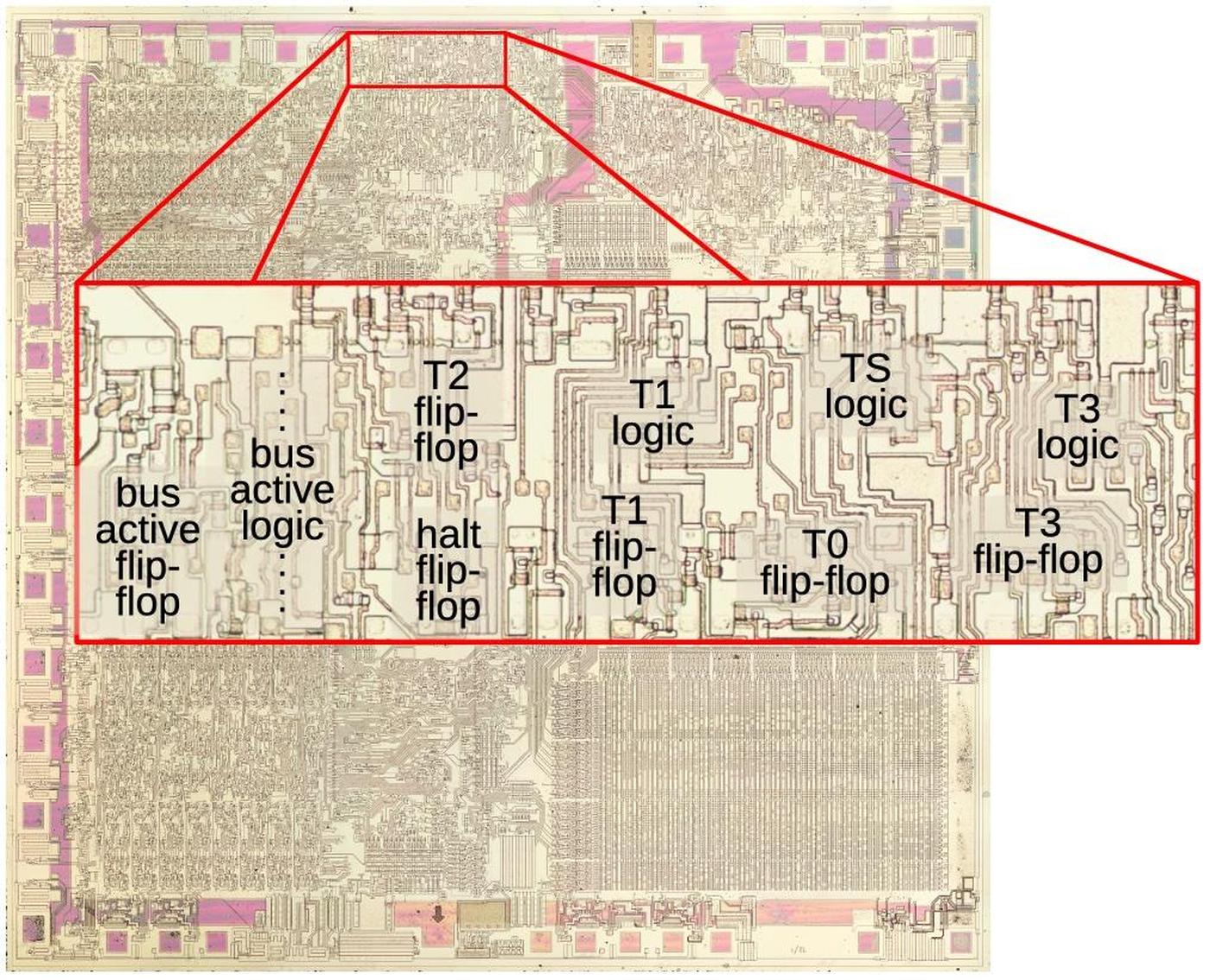

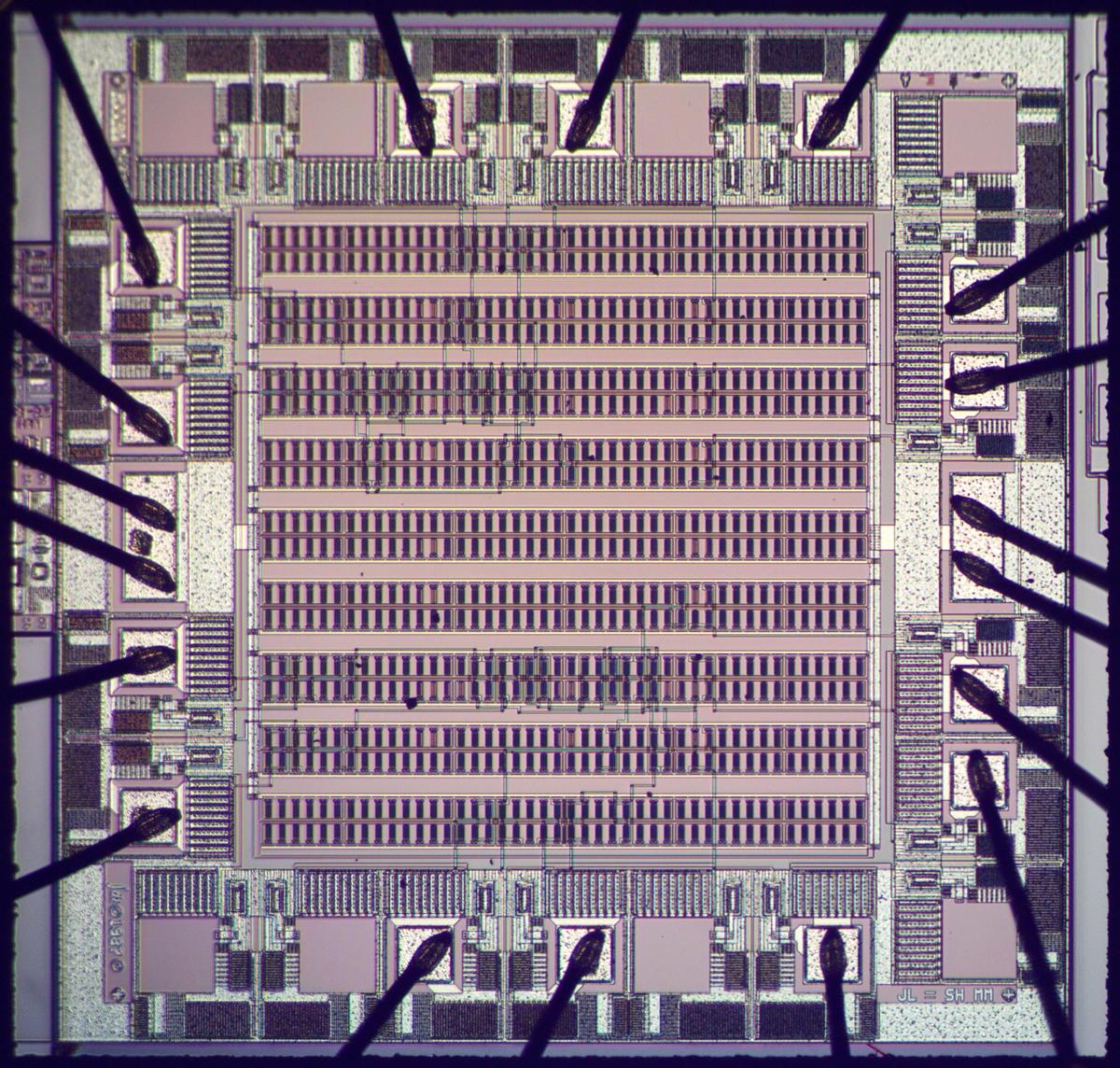

The unit contains 14 circuit boards, crammed with TTL integrated circuits, along with a core memory stack. The photo below shows how circuit boards surround the core memory stack. The mechanical design of the unit is advanced, allowing the boards to be opened up like a book. This provides compact packaging while allowing access to the boards.

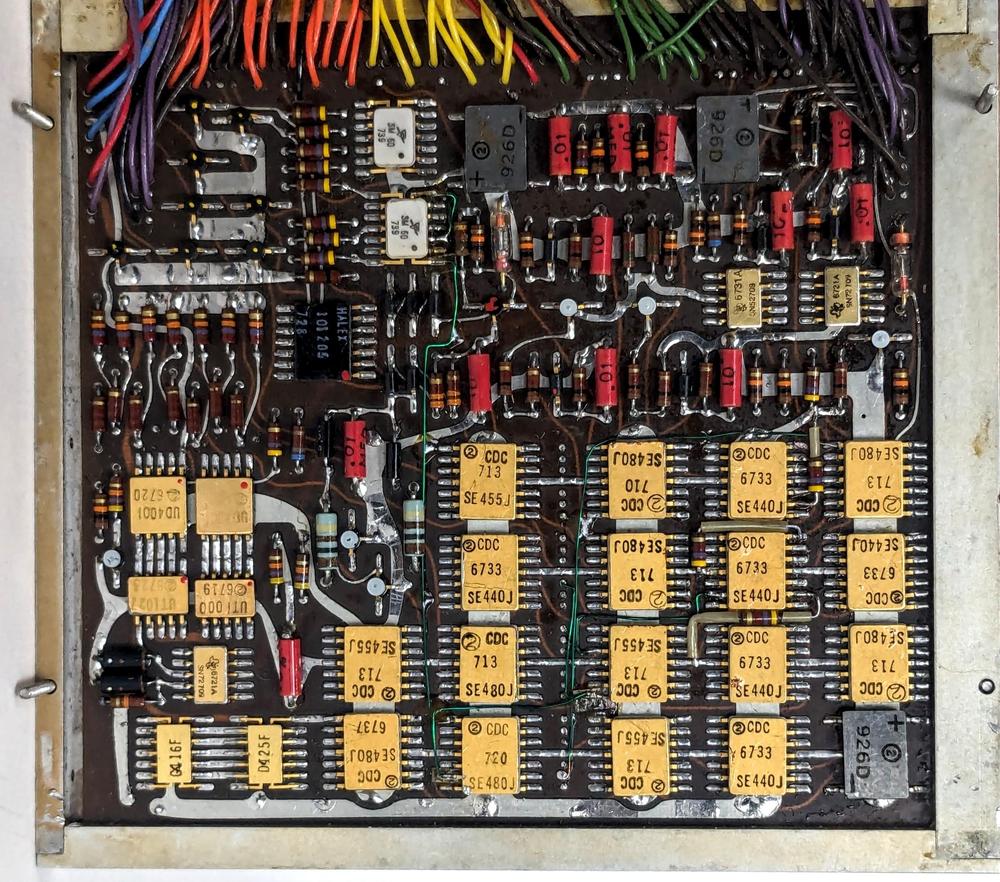

The circuit boards are four-layer printed circuit boards, more advanced than the common two-layer boards of the time. The boards use a mixture of surface-mounted and through-hole components. The flat-pack ICs and the tiny round transistors are surface mounted, which was rare at the time. On the other hand, the resistors, capacitors, diodes, and larger transistors use standard through-hole components. At the time, most electronics used through-hole components, although aerospace systems often used surface-mounted components for higher density. It wasn't until the late 1980s that surface-mount technology became commonplace.

The boards are mounted in solid metal frames, providing both structural integrity and heat conduction for cooling. Most of the frames hold two boards, mounted back-to-back for higher density.

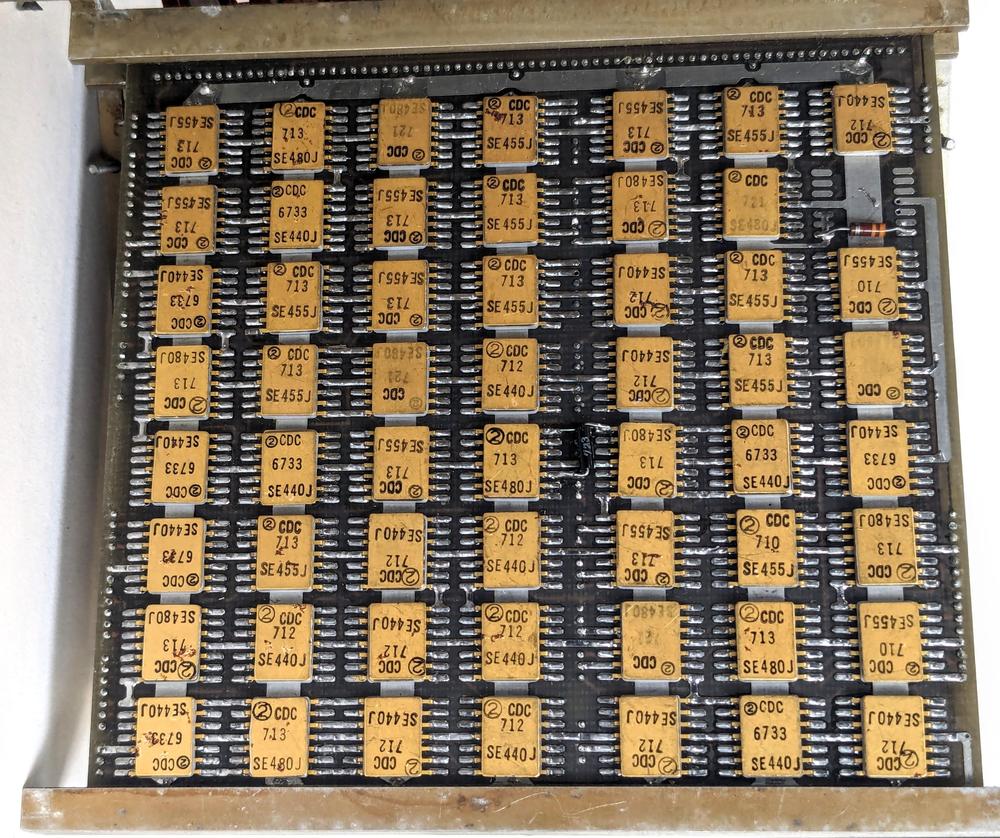

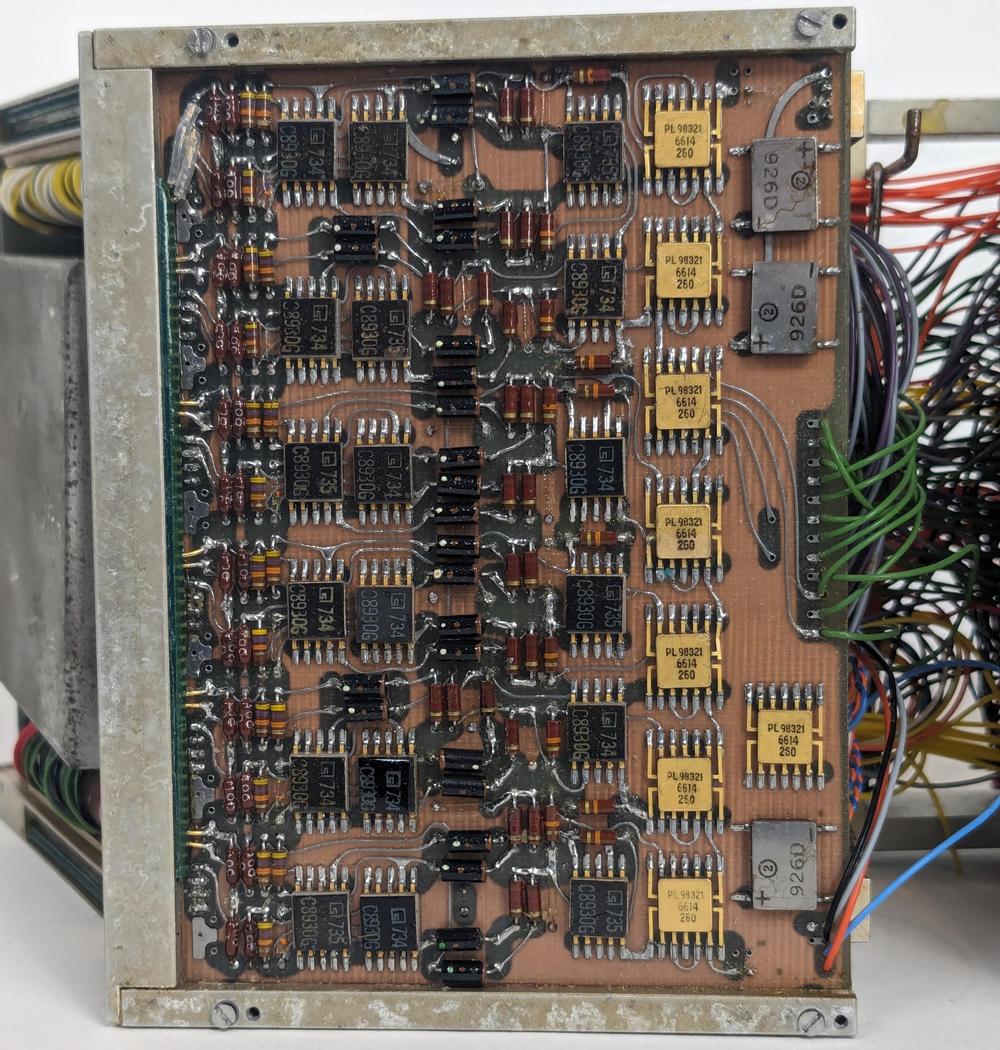

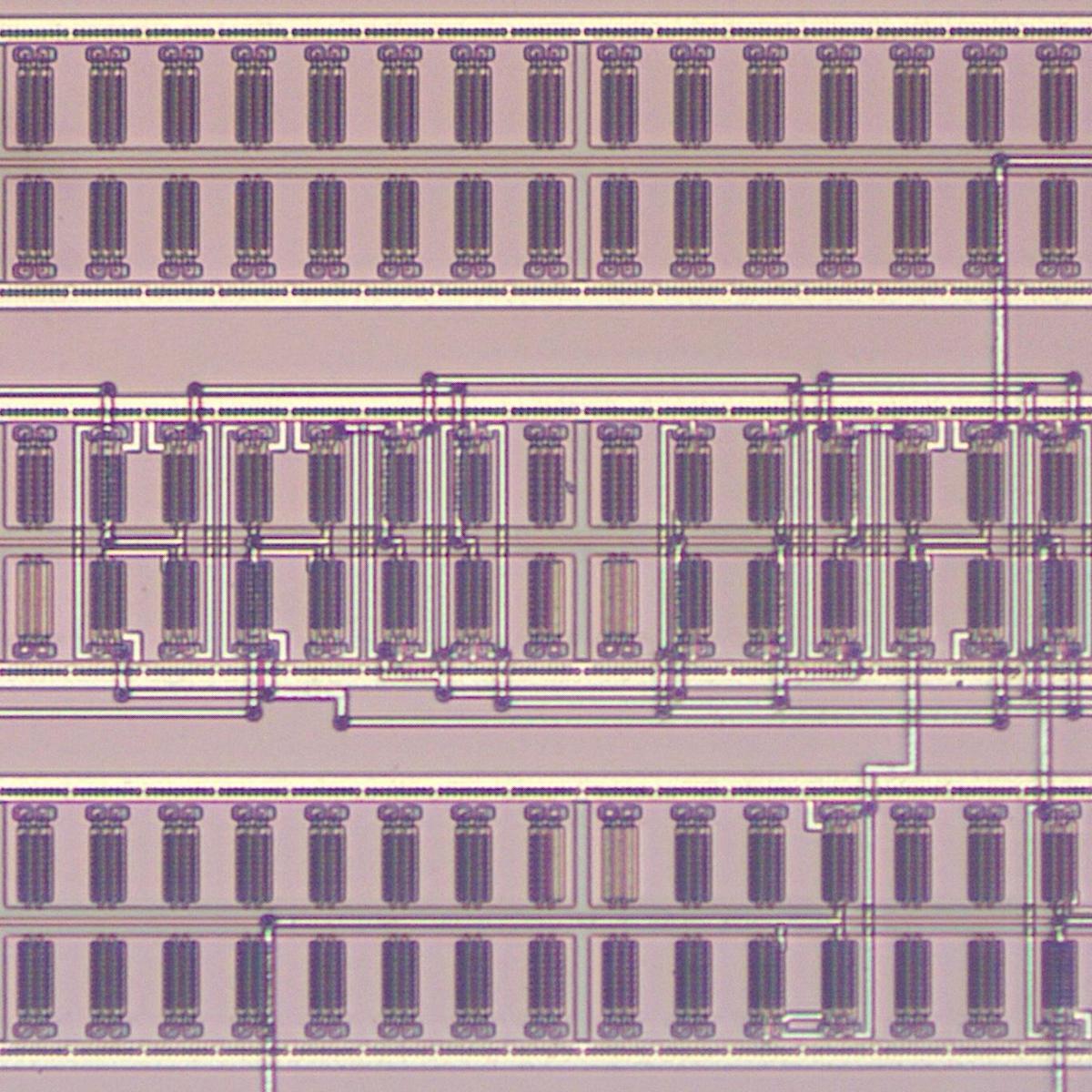

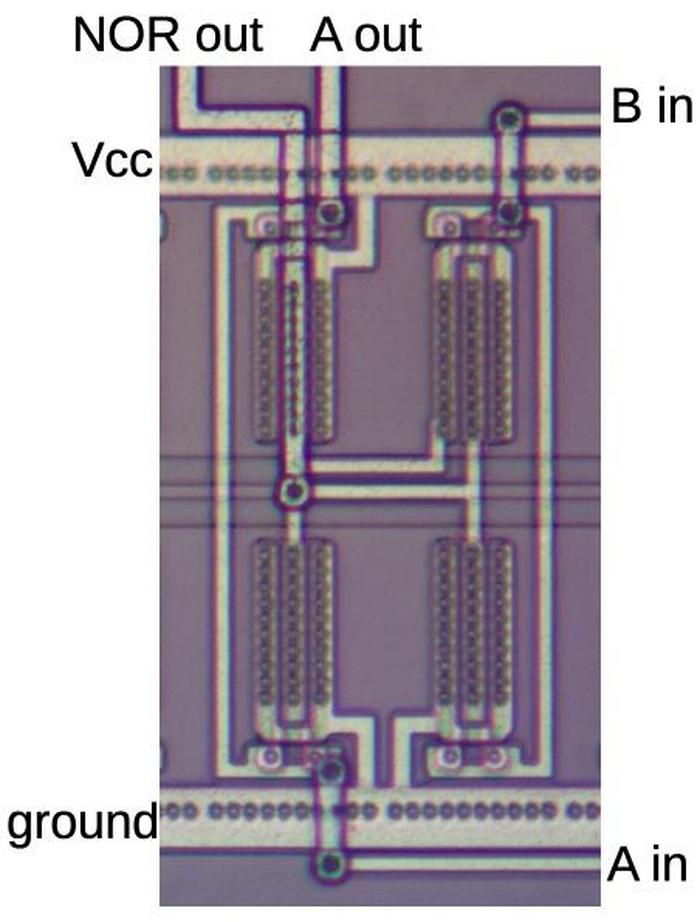

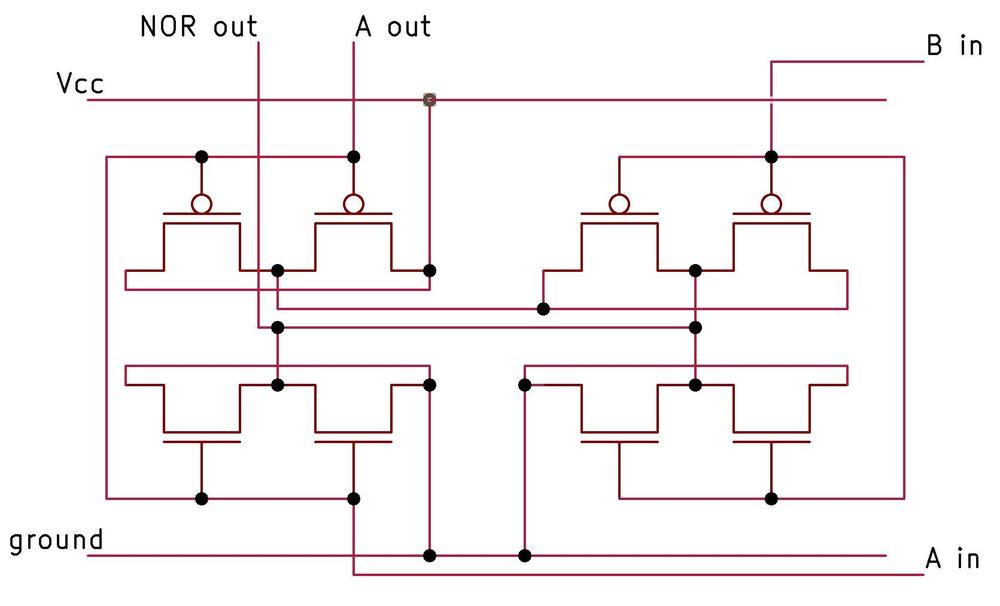

The logic boards

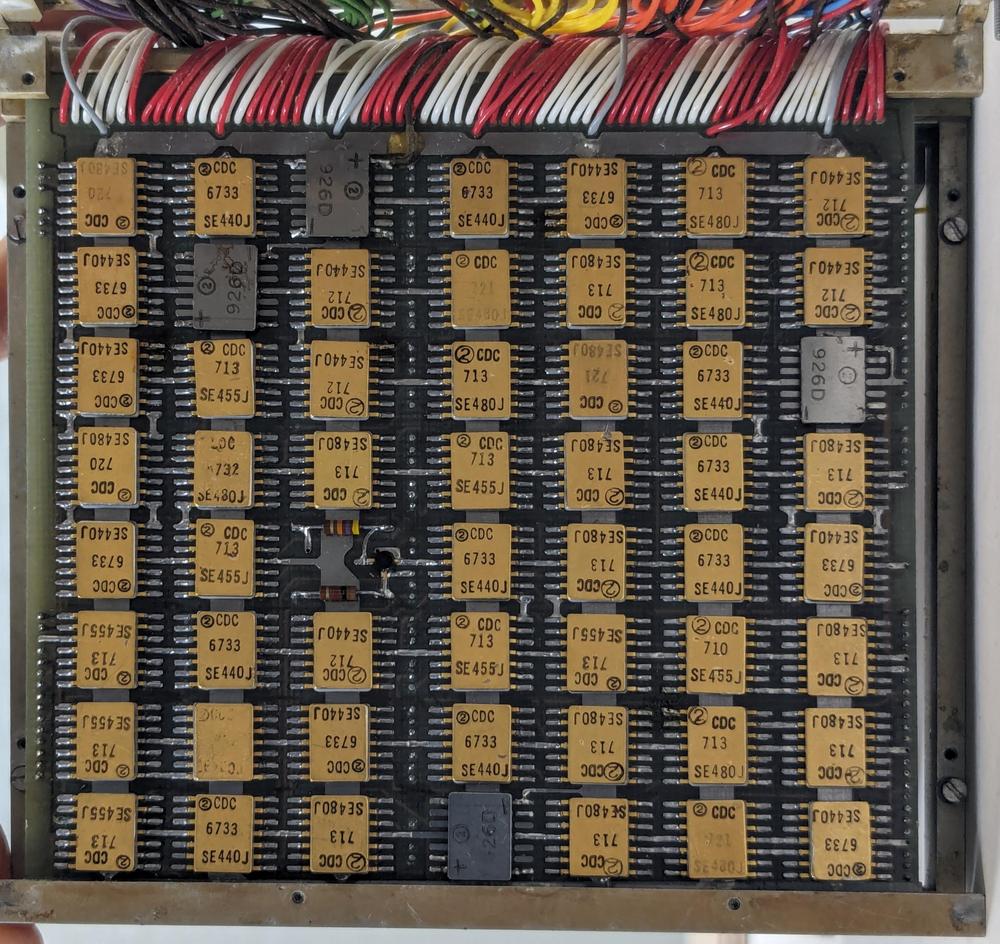

Four of the circuit boards are logic boards, packed with flat-pack integrated circuits. The board below holds 55 integrated circuits, showing the high density that is possible with flat packs.

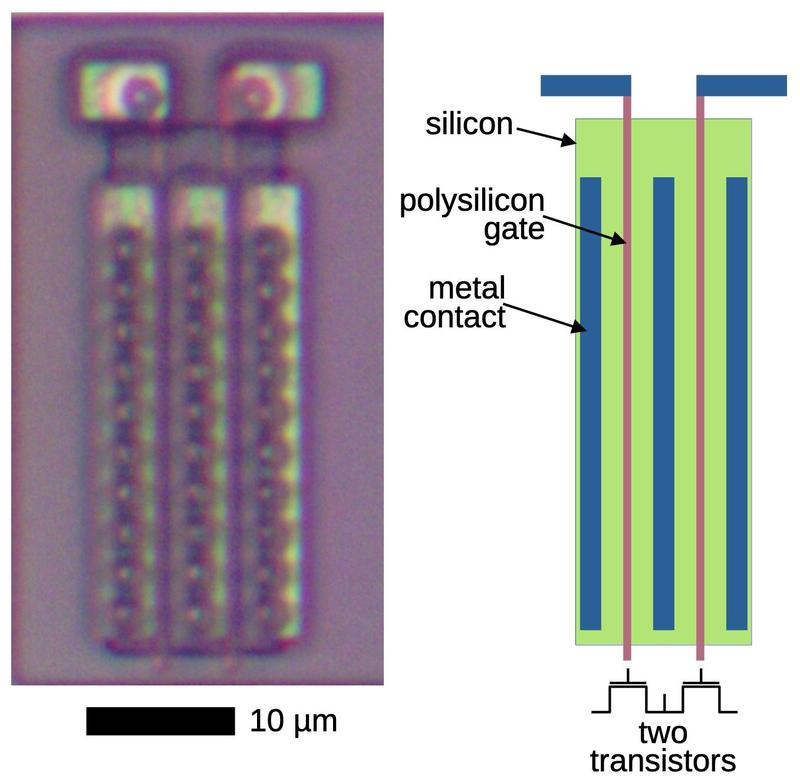

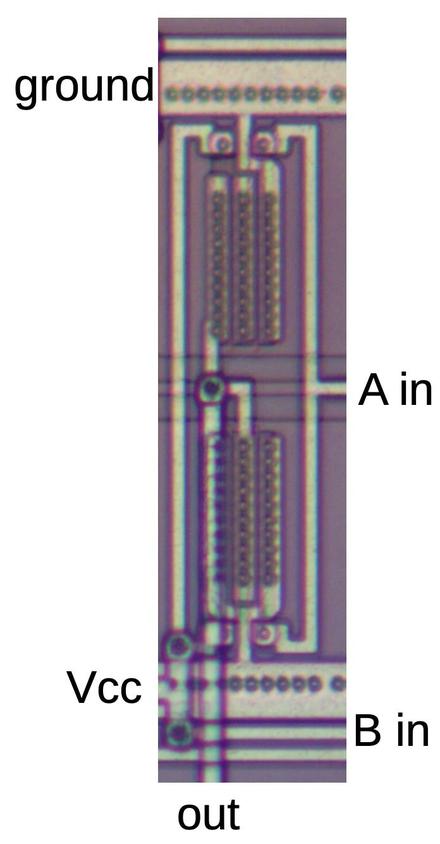

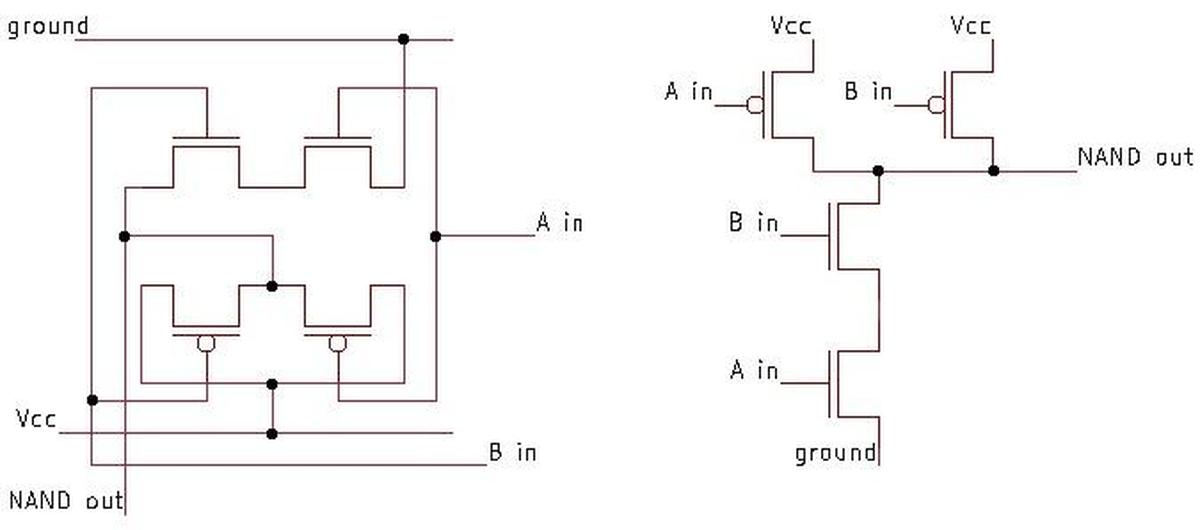

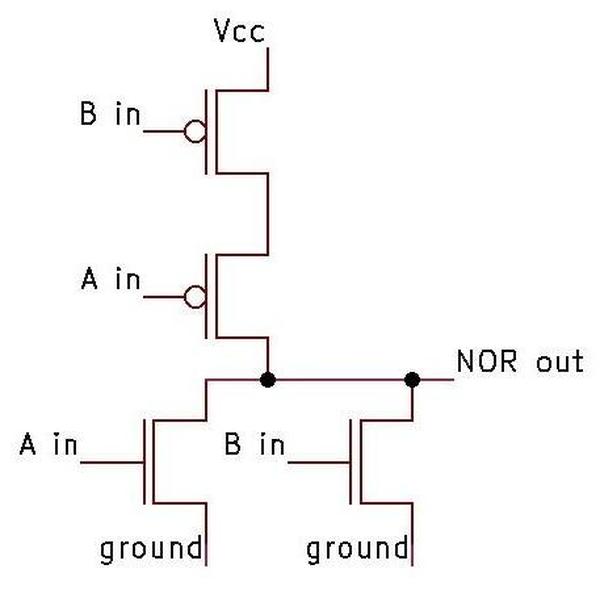

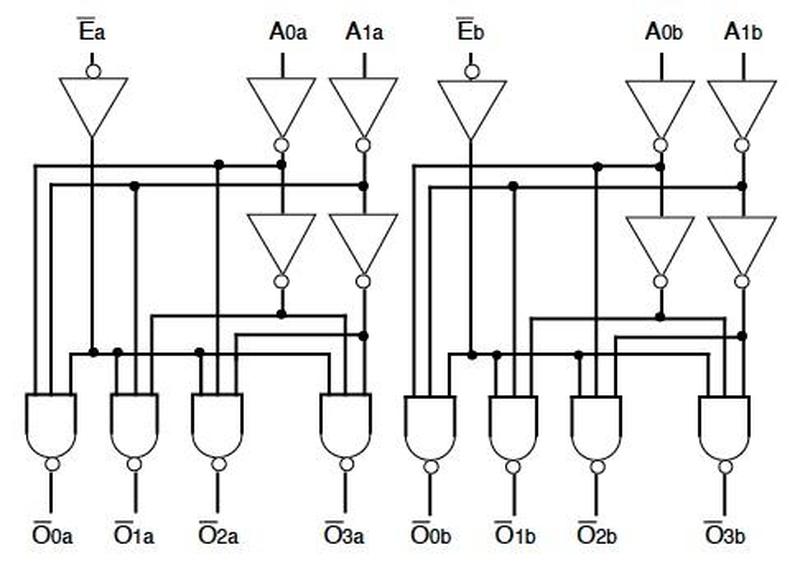

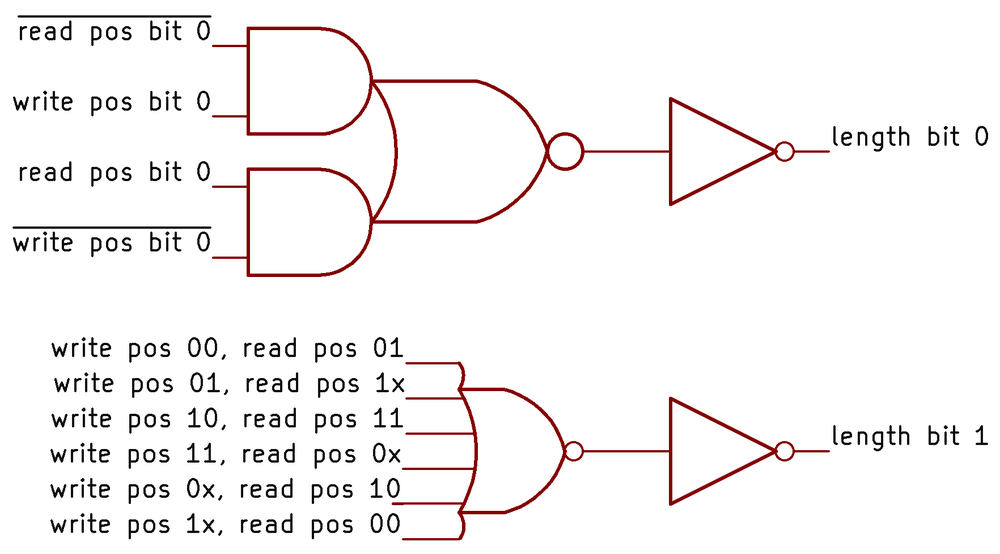

The logic ICs are Signetics 400-series chips, an early type of TTL (Transistor-Transistor Logic) chip. Just three types of these ICs are used: SE440J "Dual exclusive OR" (really AND-OR-INVERT but XOR if provided with particular inputs), SE455J "Dual 4-input buffer/driver" (4-input NAND or NOR gates depending on polarity), and SE480J "Quad 2-input NAND/NOR". These integrated circuits cost $15.45 each in 1966 (about $150 each in current dollars).2

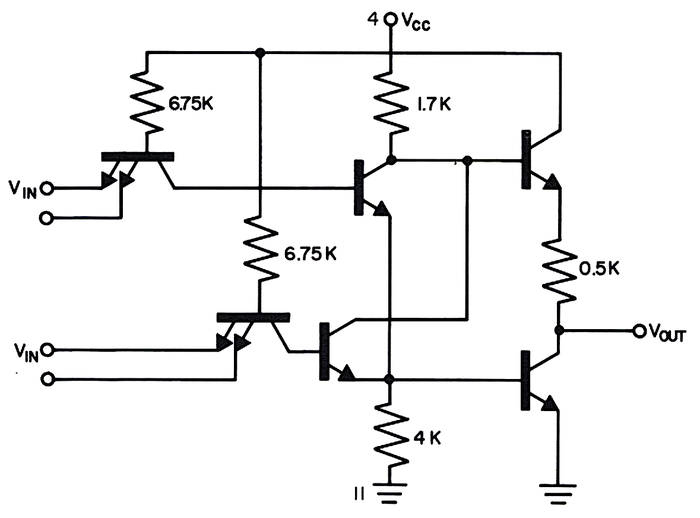

The schematic below shows the circuit that implements AND-OR-INVERT (or exclusive or) in the SE440J. The multiple-emitter transistors on the inputs may appear unusual, but this is the standard way to implement TTL gates. It is important to note that this chip only contains 12 transistors, so the density is low. (Since the chip contains two of these gates, this circuit is duplicated.) In the mid-1960s, integrated circuits only contained a few transistors (the Apollo Guidance Computer's ICs had just 6 transistors), but by the time this unit was built in the early 1970s, some chips had thousands of transistors, tracking Moore's Law. Thus, this unit illustrates both how aviation computers could be built from simple integrated circuits and how the dramatic improvements in IC technology rapidly obsoleted these computers.
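To see how an AND-OR-INVERT gate can act as exclusive OR, here is a small logic sketch. It assumes a generic 2-wide, 2-input AND-OR-INVERT gate with the complemented inputs supplied from outside; I haven't confirmed that the SE440J's pins are arranged this way, and the function names are hypothetical.

```python
from itertools import product


def and_or_invert(a, b, c, d):
    """Generic 2-wide, 2-input AND-OR-INVERT: NOT((a AND b) OR (c AND d))."""
    return int(not ((a and b) or (c and d)))


def xor_from_aoi(x, y):
    """Feed the gate x, y and their complements to obtain exclusive OR."""
    return and_or_invert(x, y, 1 - x, 1 - y)


# Check all four input combinations against Python's built-in XOR.
for x, y in product((0, 1), repeat=2):
    assert xor_from_aoi(x, y) == x ^ y
```

The gate output is low only when both inputs are high or both are low, which is exactly the exclusive-OR truth table.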

The Signetics 400-series seems to have been obscure and short-lived, probably killed off by the wild success of 7400-series TTL chips. I was able to find only a few announcements and datasheets for these chips. The only users of these chips that I could find were NASA projects from the late 1960s.3 Signetics 400-series chips were used in the Mariner Mars and Venus probes, in the Data Automation Subsystem (DAS). The Voyager Mars probes also used them. The SE455J gates were also used to interface the Apollo Guidance Computer to a core-rope simulator. JPL used the SE455J in a core memory system. NASA used the SE455J, SE480J, and other Signetics chips in its design for the MICROMIN computer. None of these systems appear to be related to the navigation system, but they illustrate that NASA was using these specific Signetics chips at the time in multiple designs.

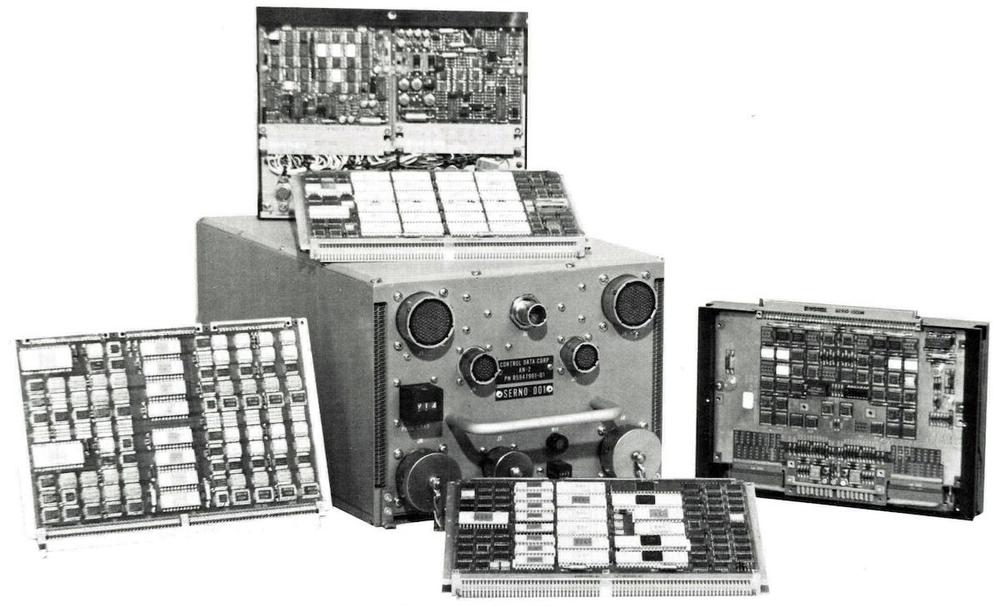

The chips are labeled "CDC", raising the possibility that these chips were built by Control Data Corporation (CDC) under license from Signetics. The Aerospace Division of CDC was active at the time, building various compact computer systems. For instance, the CDC 480 computer (1976) was a 16-bit computer based on the Am2900 bit-slice chip. Also known as the AN/AYK-14, this system was used on numerous aircraft including the F-18. An earlier CDC aerospace computer is the AN/AWG-9 Airborne Missile Control System (1965), a 24-bit computer in a compact 1.1 cubic foot package. Used on the F-14 fighter plane, this computer guided the Phoenix air-to-air missile. Based on CDC's activity in aerospace computers at the time, the mystery computer could be a CDC system, although this hypothesis is based solely on integrated circuits labeled "CDC".

The photo below shows another logic board. This one has numerous red and white wires attached, linking it to the rest of the system. Curiously, this board has a single transistor, with two associated resistors, in the middle of the board.

Analog boards

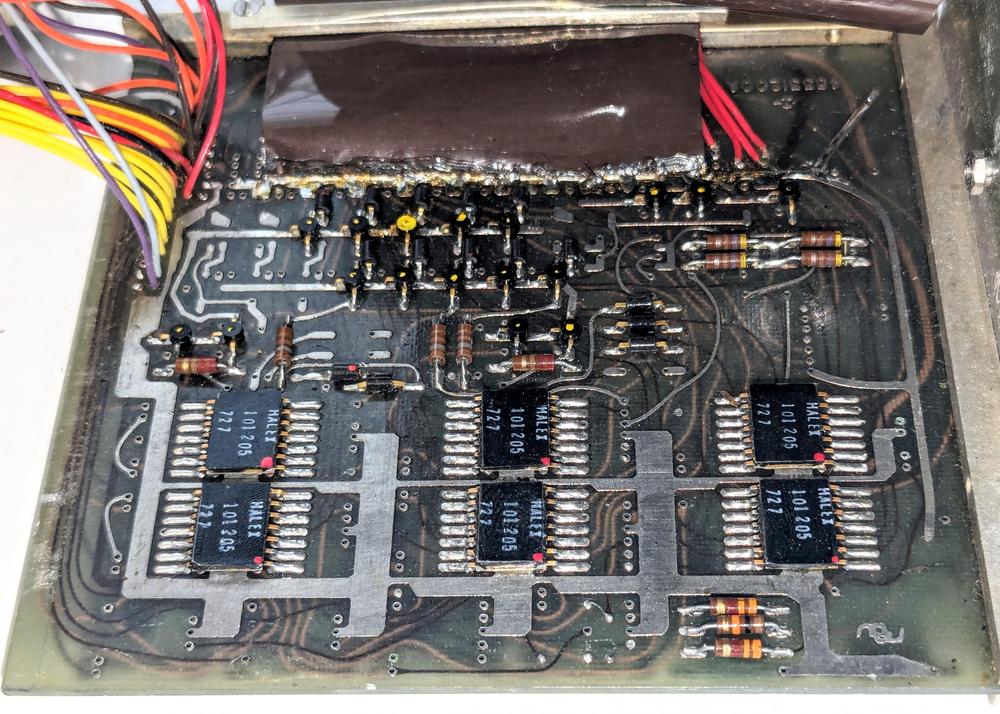

The computer contains not only logic boards but also boards full of analog circuitry to interface with the core memory, keyboard, and display. The board below contains 17 of the logic ICs seen earlier. However, it also uses many resistors, capacitors (red cylinders), transistors (white circles), inductors (white banded cylinders), and glass diodes. The board also has some analog integrated circuits. In particular, it has three TI SN52709 op-amps, the smaller 10-pin packages. The board also contains some integrated circuits that I couldn't identify: UT1000, UT1027, UD4001, and D245F. The SM 60 ICs in white packages have a logo that I don't recognize. The op-amps could function as sense amplifiers for the core memory, or this board could provide other analog interfacing.

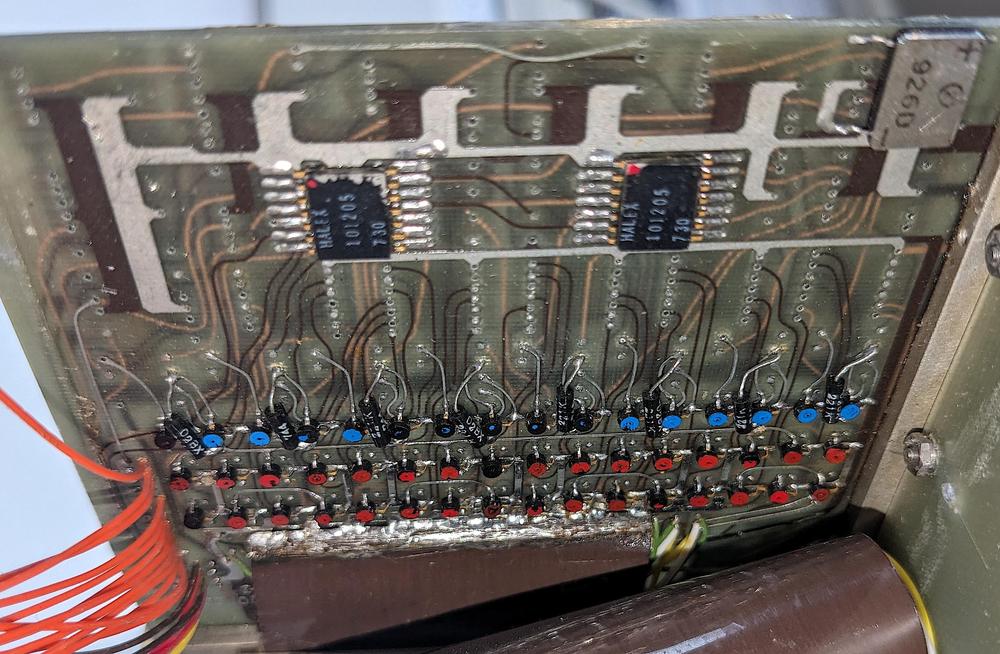

The board has multiple gray four-pin packages labeled "926D". Based on the + and - markings, these packages are probably bridge rectifiers, maybe providing power for the circuits. Many of the other boards have these rectifiers. The analog boards also contain a few Halex flat-pack devices labeled "HALEX 101205 727". Halex manufactured thin-film resistors in flat packs, so these are probably resistor networks. NASA used Halex resistor networks in some devices.4

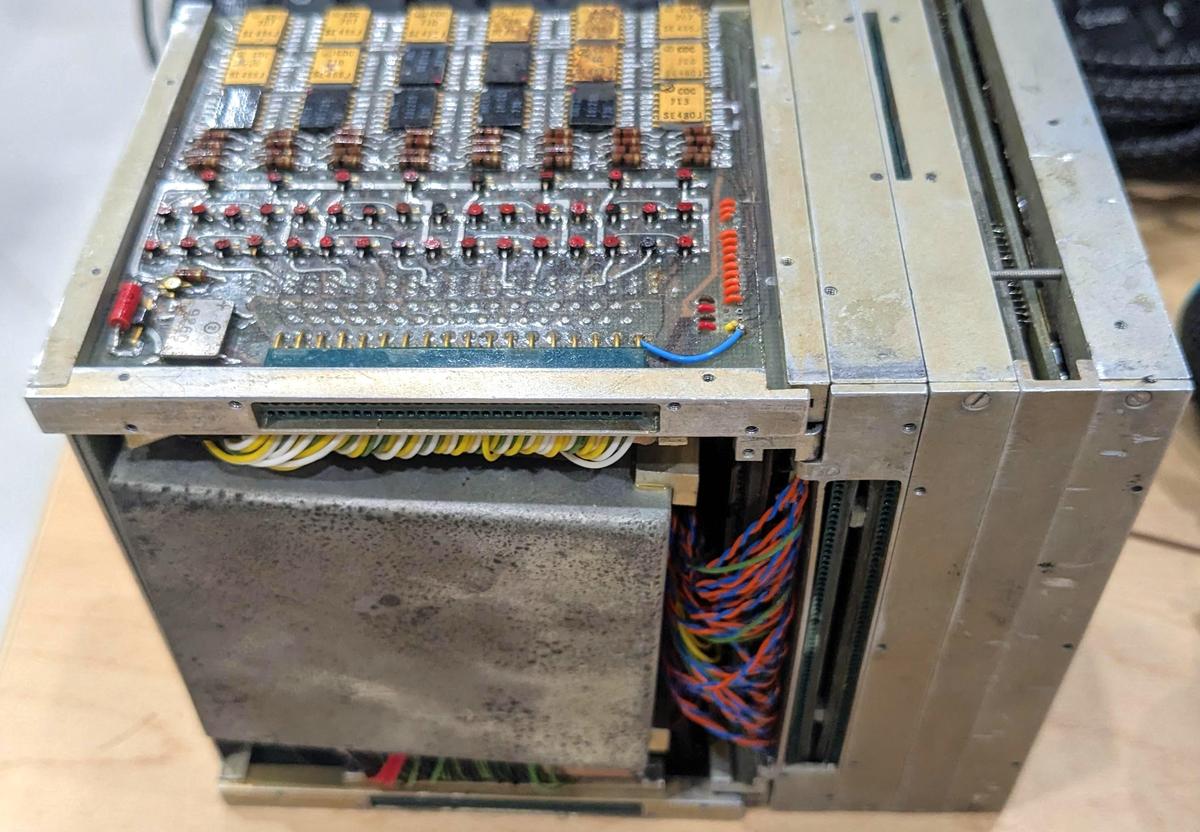

The analog board shown below sits next to the core memory stack. It uses a different set of flat-pack components: Signetics C8930G and PL 98321. Unfortunately, I could not identify these ICs. This board, unlike the previous boards, has a copper ground plane in the second layer of the circuit board; this layer is visible in the photo as the copper-colored background occupying most of the board.

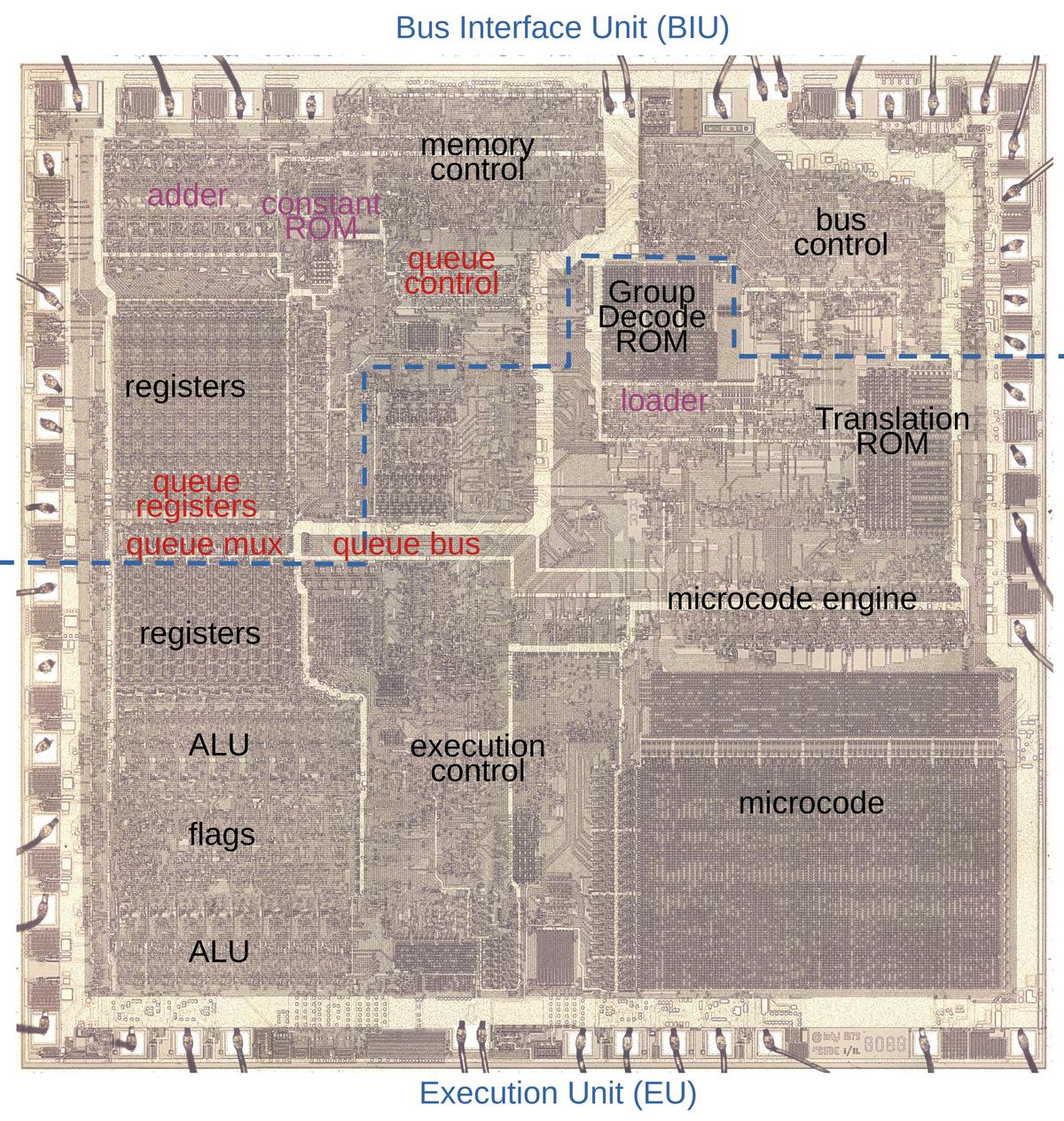

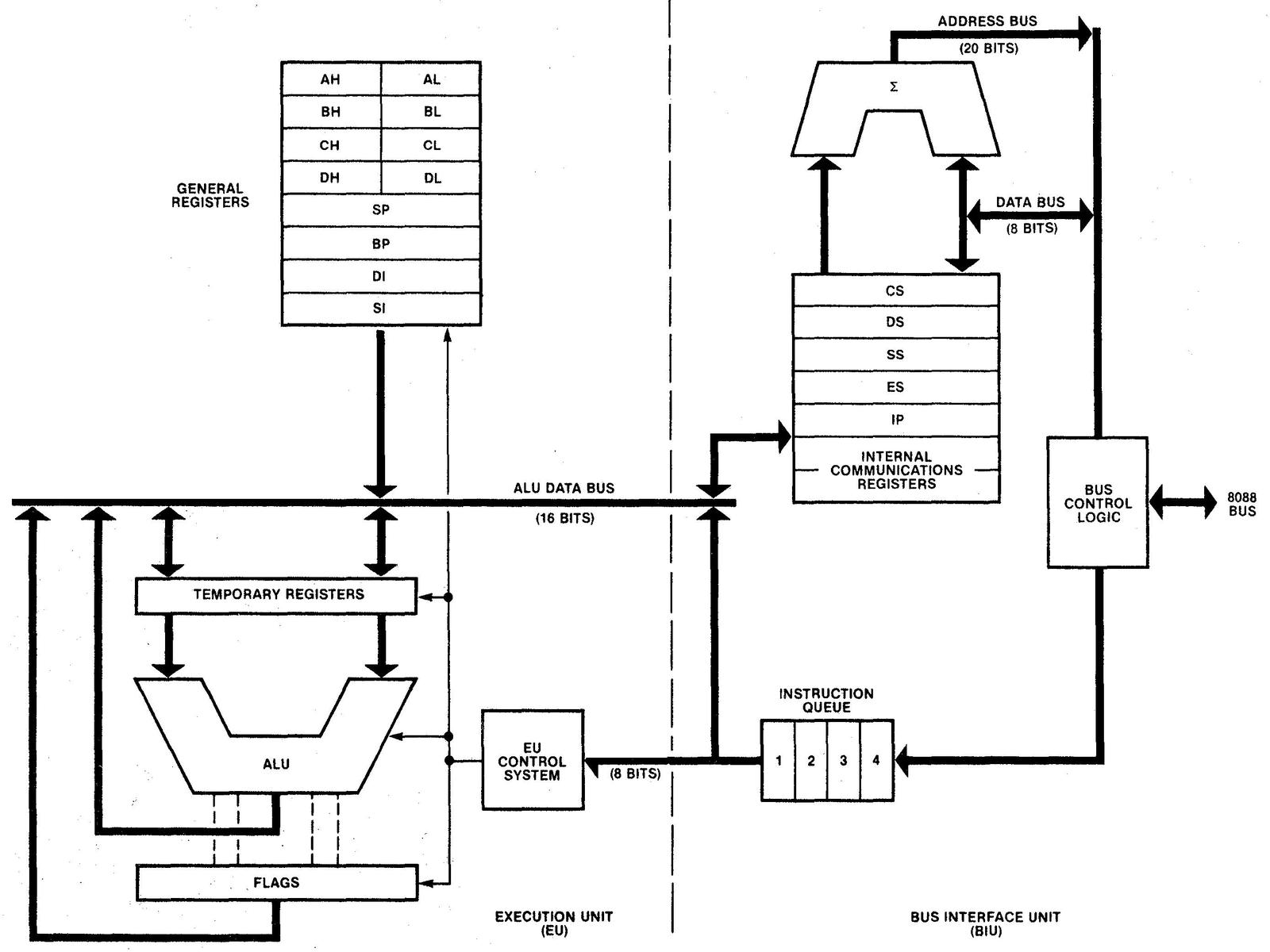

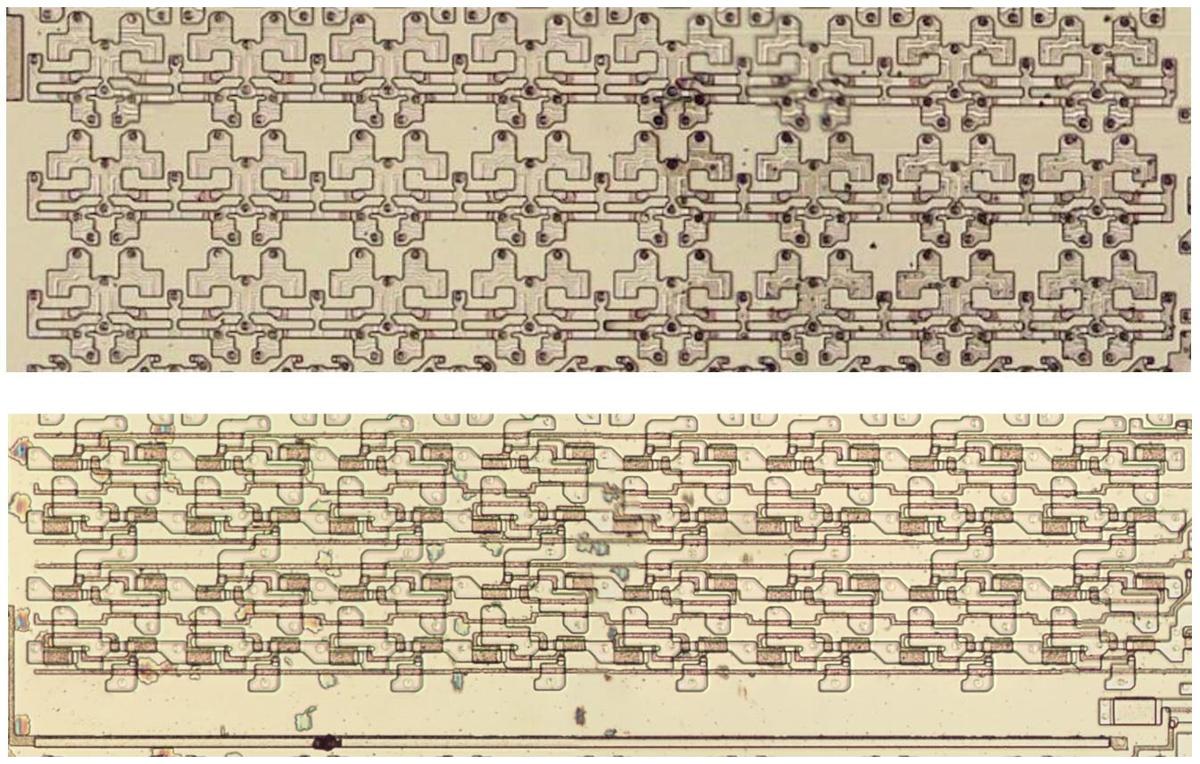

Core memory

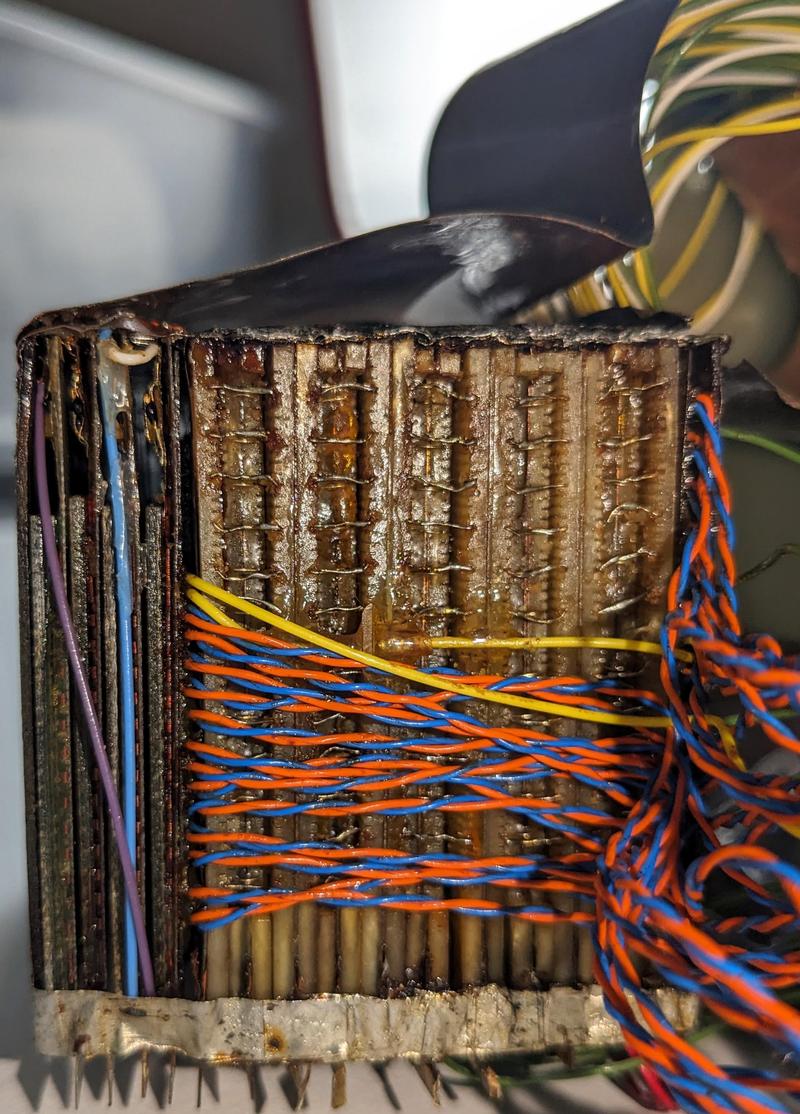

The unit is built around a core memory stack, as was common in the era before semiconductor memory took over. Magnetic core memory consists of a grid of tiny ferrite cores with wires threaded through them, forming a core plane. Typically, a core memory unit consists of multiple planes, one for each bit in the word, stacked to form a three-dimensional block of memory.

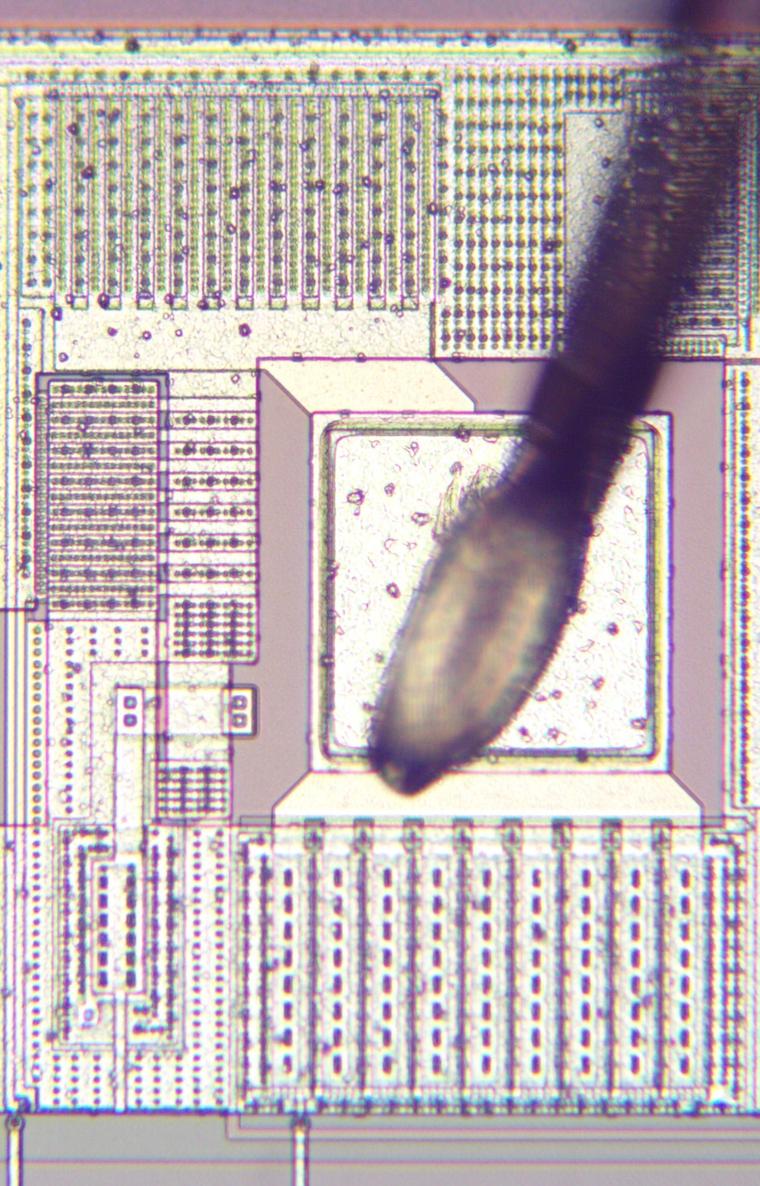

The photo below shows a closeup of the stack. It appears to have 20 planes, suggesting a 20-bit processor. Soldered wires connect the planes together to provide continuous wiring through the stack. The soldering on these wires looks somewhat haphazard, suggesting that this was not a production unit.

The photo below shows the other side of the core memory stack, with similar wiring between the planes. At the right are a few layers of a different type, connected with 26 wires. The tape measure shows that the core memory stack is compact, about 6 cm on a side (2¼").

Some of the boards are drivers for the core memory stack. The board below has 48 small round transistors, colored either blue or red. Note the green, white, and yellow wires in the lower right, mostly hidden under the brown ground ribbon. These wires are connected to the core memory stack.

The board below also has numerous wires to the core stack, underneath the brown ground ribbon, so it is presumably another driver board. This board has some round driver transistors with yellow dots. Curiously, in the upper left there are a few circuit board pads where transistors could be mounted but are missing. Perhaps with the additional components the board would support a system with more of something: a larger keyboard? more memory?

Looking at the back of the unit, you can see the display indicator wiring at the top and a circuit board at the bottom. This board contains 20 transistors in metal cans, specifically Motorola 2N3736 NPN transistors. The core memory stack has 20 planes, matching the 20 transistors on this board, so the board probably implements the core memory "inhibit drivers", controlling the bit written to each plane. The board also has numerous tiny surface-mount transistors in white, red, and black packages. Close examination shows a few thin green "bodge" wires on this board, indicating that rework was performed on the board to fix a circuit problem, another piece of evidence that this unit is a prototype.
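The role of the inhibit drivers is easier to see in a toy model. The sketch below assumes the textbook coincident-current scheme, where reading is destructive and a write follows a read of the same word; I have not confirmed that this unit works this way, and the class name, method names, and word count are hypothetical.

```python
WORD_BITS = 20  # one plane per bit, matching the 20 planes seen in the stack


class CoreStack:
    """Toy model of a coincident-current core stack (assumed, not confirmed)."""

    def __init__(self, words):
        # planes[bit][address] holds the state of one core (0 or 1).
        self.planes = [[0] * words for _ in range(WORD_BITS)]

    def read(self, address):
        """Destructive read: sense each plane's core, leaving it at 0."""
        value = 0
        for bit, plane in enumerate(self.planes):
            value |= plane[address] << bit
            plane[address] = 0
        return value

    def write(self, address, value):
        """Write to a word that was cleared by a preceding read.

        The select currents try to set the addressed core in every plane
        to 1; an inhibit current cancels that in planes that must hold 0.
        """
        for bit, plane in enumerate(self.planes):
            inhibit = ((value >> bit) & 1) == 0
            if not inhibit:
                plane[address] = 1
```

In this model, each of the 20 planes needs its own inhibit signal, which is consistent with finding 20 driver transistors on the board.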

The core memory stack is enclosed by two sheet metal boxes, which I removed for the photos. The stack also has two flexible ground planes attached to it. The designers clearly wanted to ensure that the memory was well shielded, to a degree that I haven't seen in other systems.

Conclusions

Despite my research, this aerospace computer remains a mystery. I was unable to identify who manufactured it or even its exact function. One hypothesis is a NASA connection since NASA was extensively using these Signetics chips at the time. Moreover, this computer was obtained in the Houston area. Another hypothesis, based on the "CDC" label on the chips, is that this computer was built by Control Data's Aerospace Division. If you have any leads on this mysterious aviation computer, please contact me.

This system may have been a prototype. It has no part numbers, manufacturer name, or identifying plate.5 Moreover, the soldering on the core memory stack doesn't seem to be flight quality. Finally, the boards don't have conformal coating, which is typically used for spaceflight systems. However, the mechanical design looks advanced for a prototype, with dense boards that fold together like a book.

This unit clearly has a navigation role, but seems to be too inaccurate for an inertial navigation system (INS). It contains many integrated circuits, but not enough to form a full computer. I hypothesize that this unit contains the circuitry to drive the core memory and the display, and handle keyboard input. Looking at the underside of the unit (below), there are three connectors. I suspect these connectors were plugged into a larger box that held the computer itself.

The date codes on the integrated circuits range from 1966 to 1973, so the computer was probably manufactured in 1973. The seven-year range for date codes is a bit surprising, since integrated circuit technology changed a lot during these years. I suspect that the Signetics 400-series ICs had older date codes because this line didn't catch on so there was a lot of old stock rather than newly-manufactured parts. I also suspect that this system was designed around 1969, based on the multiple NASA systems using these chips then, suggesting that the design and manufacturing of this unit was a multi-year project.

Despite the lingering mysteries of this device, it provides an interesting example of aerospace computers at the beginning of the 1970s. Even though integrated circuits were primitive at the time, with just a few transistors per chip, aerospace computers used these chips and high-density packaging to build computers that were compact, reliable, and low power. These miniature computers controlled aircraft, missiles, and spacecraft, worlds away from the room-filling mainframes that attracted most of the attention.

Thanks to Usagi Electric for providing the aerospace computer. Eric Schlaepfer and Marc Verdiell helped with the analysis. Thanks to Don Straney for his research and comments. Various commenters on Reddit and Twitter provided suggestions. Follow me on Twitter @kenshirriff or RSS for updates. I'm also on Mastodon as oldbytes.space@kenshirriff.

Notes and references

- The indicators have a blank position, so there are 11 electromagnets. However, only the ten electromagnets associated with digits are used in the device. The N/S/E/W indicators have a square box in one of the positions, which probably is not used.

- Signetics had multiple temperature ranges for the 400-series low-power ICs. The RE prefix indicated ultra high reliability aerospace components rated for a temperature range of -55°C to +125°C. The SE prefix on the chips in this unit indicated military airborne chips with the same temperature range. A NE or ST prefix indicated military prototype or industrial chips with a smaller temperature range (0°C to +70°C). A SP prefix indicated the commercial temperature rating, from +15°C to +55°C. A J suffix indicated a flat pack and an A suffix indicated a dual in-line pack (DIP).

- NASA computers are the only documented systems that I could find that used these Signetics chips. One possible conclusion is that NASA was the only organization to use these chips. However, it is likely that other companies used these chips but didn't document them as thoroughly as NASA. That is, detailed circuitry for military aerospace computers is unlikely to be on the Internet.

- Halex also made hybrid microcircuits, such as flip-flops, so these packages could be more complex than resistor networks. However, I think a resistor network is more likely.

-

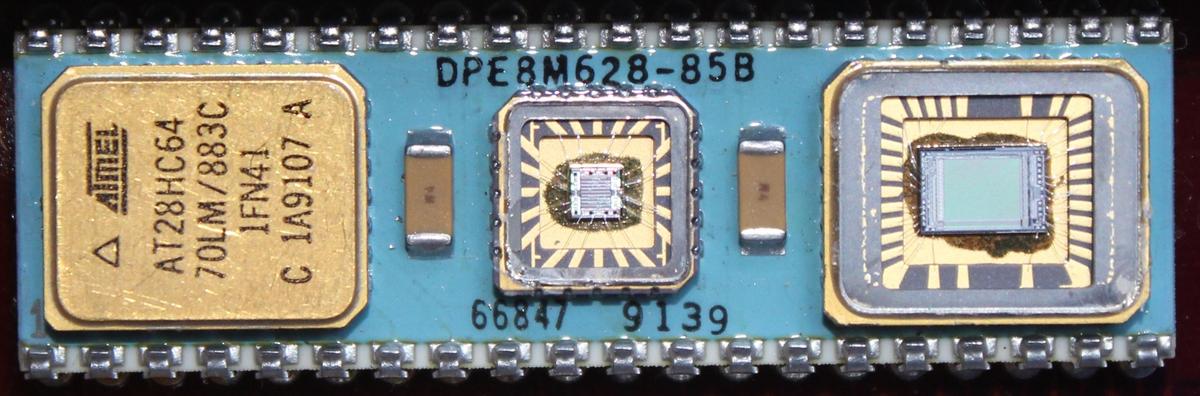

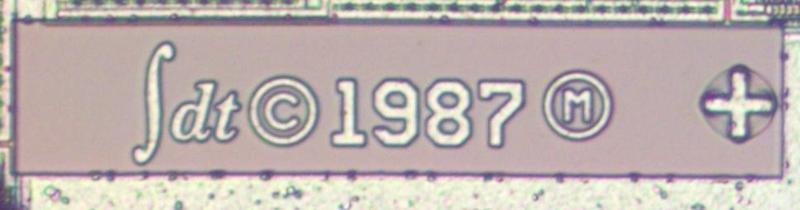

One of the circuit boards had the number "45333000" on it, along with a symbol like "+I-", as shown below.

Closeup of a circuit board showing a number, maybe identifying the board.One board also had a mysterious symbol that resembles "mw". I couldn't match these symbols to any manufacturers, and it is unclear if they are logos, fiducials, or other symbols.

Closeup of a circuit board showing the "mw" mark.