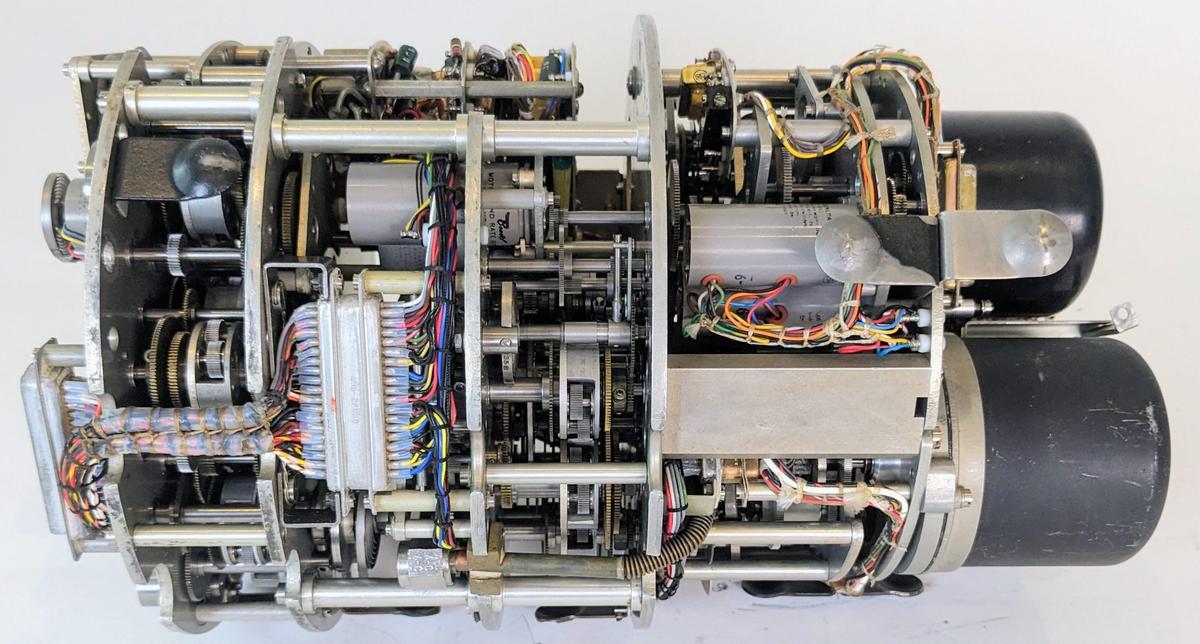

The Bendix Central Air Data Computer (CADC) is an electromechanical analog computer that uses gears and cams for its mathematics. It was a key part of military planes such as the F-101 and the F-111 fighters, computing airspeed, Mach number, and other "air data". This article reverse-engineers the two pressure transducers, on the right in the photo below. It is part 3 of my series on the CADC.[1]

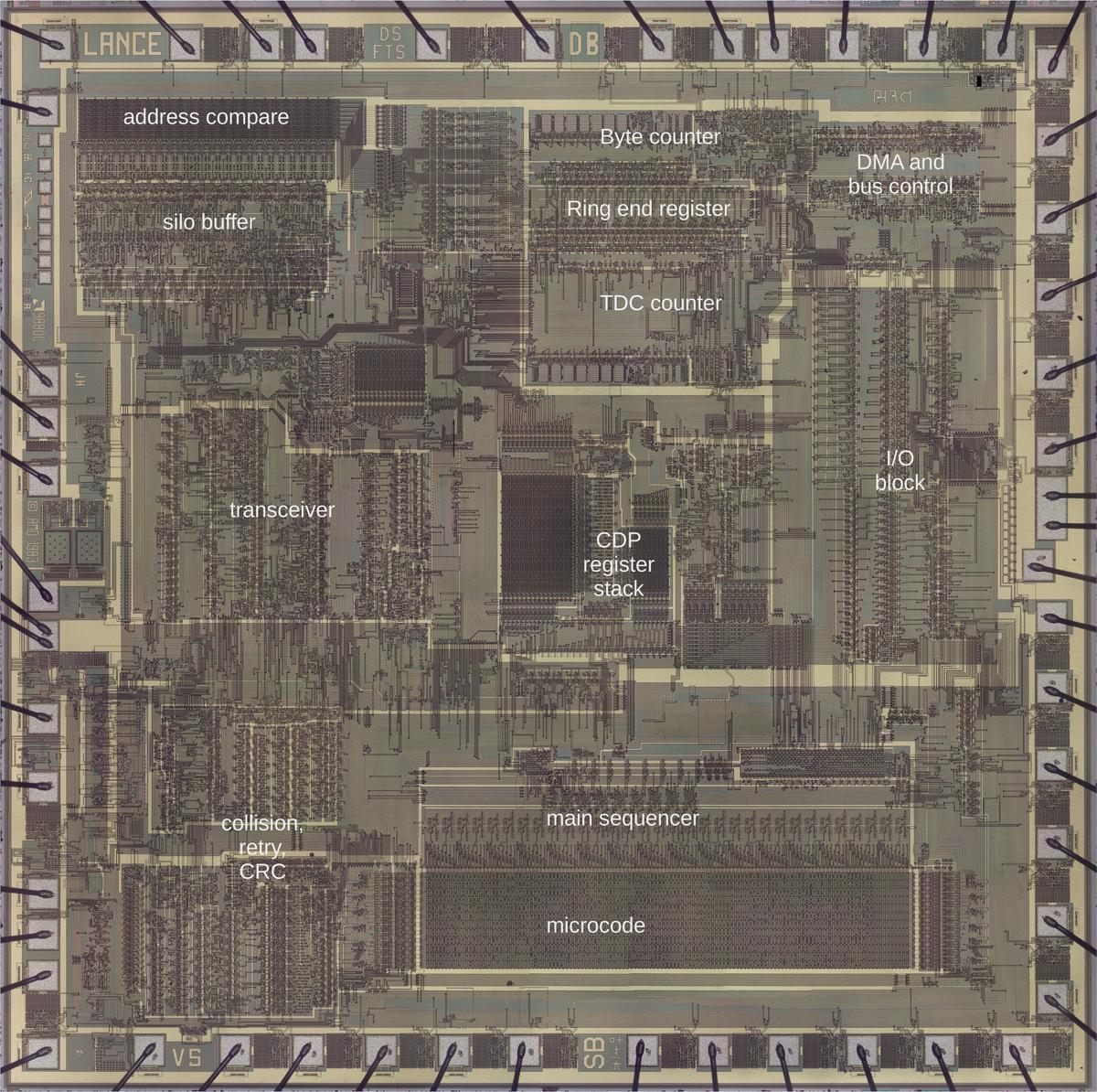

Aircraft have determined airspeed from air pressure for over a century. A port in the side of the plane provides the static air pressure,[2] the air pressure outside the aircraft. A pitot tube points forward and receives the "total" air pressure, a higher pressure due to the speed of the airplane forcing air into the tube. The airspeed can be determined from the ratio of these two pressures, while the altitude can be determined from the static pressure.
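The article doesn't give the formulas for this step, so here is a hedged illustration using the standard textbook relations, not the CADC's own mechanism. It assumes subsonic, isentropic flow of air (γ = 1.4) and the standard atmosphere below about 36,000 feet; the function names are hypothetical. Note that converting Mach number into true airspeed also requires the air temperature.

```python
import math

P0_INHG = 29.92126  # standard sea-level pressure (1013.25 hPa) in inches of mercury


def subsonic_mach(pt: float, ps: float) -> float:
    """Mach number from total and static pressure, valid only below Mach 1.

    Inverts the isentropic relation Pt/Ps = (1 + 0.2*M**2) ** 3.5 for air (gamma = 1.4).
    """
    ratio = pt / ps
    return math.sqrt(5.0 * (ratio ** (2.0 / 7.0) - 1.0))


def pressure_altitude_ft(ps: float) -> float:
    """Standard-atmosphere pressure altitude in feet, valid up to about 36,000 ft."""
    return 145366.45 * (1.0 - (ps / P0_INHG) ** 0.190284)


# A pressure ratio of 1.05**3.5 corresponds to Mach 0.5,
# and a static pressure of 20.58 inHg is roughly 10,000 ft.
print(subsonic_mach(1.05 ** 3.5, 1.0), pressure_altitude_ft(20.58))
```

The pressures only need to be in the same units for the Mach calculation, since only their ratio matters; the altitude formula expects inches of mercury.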

But as you approach the speed of sound, the fluid dynamics of air change and the calculations become very complicated. With the development of supersonic fighter planes in the 1950s, simple mechanical instruments were no longer sufficient. Instead, an analog computer calculated the "air data" (airspeed, air density, Mach number, and so forth) from the pressure measurements. This computer then transmitted the air data electrically to the systems that needed it: instruments, weapons targeting, engine control, and so forth. Since the computer was centralized, such a system was called a Central Air Data Computer or CADC, manufactured by Bendix and other companies.

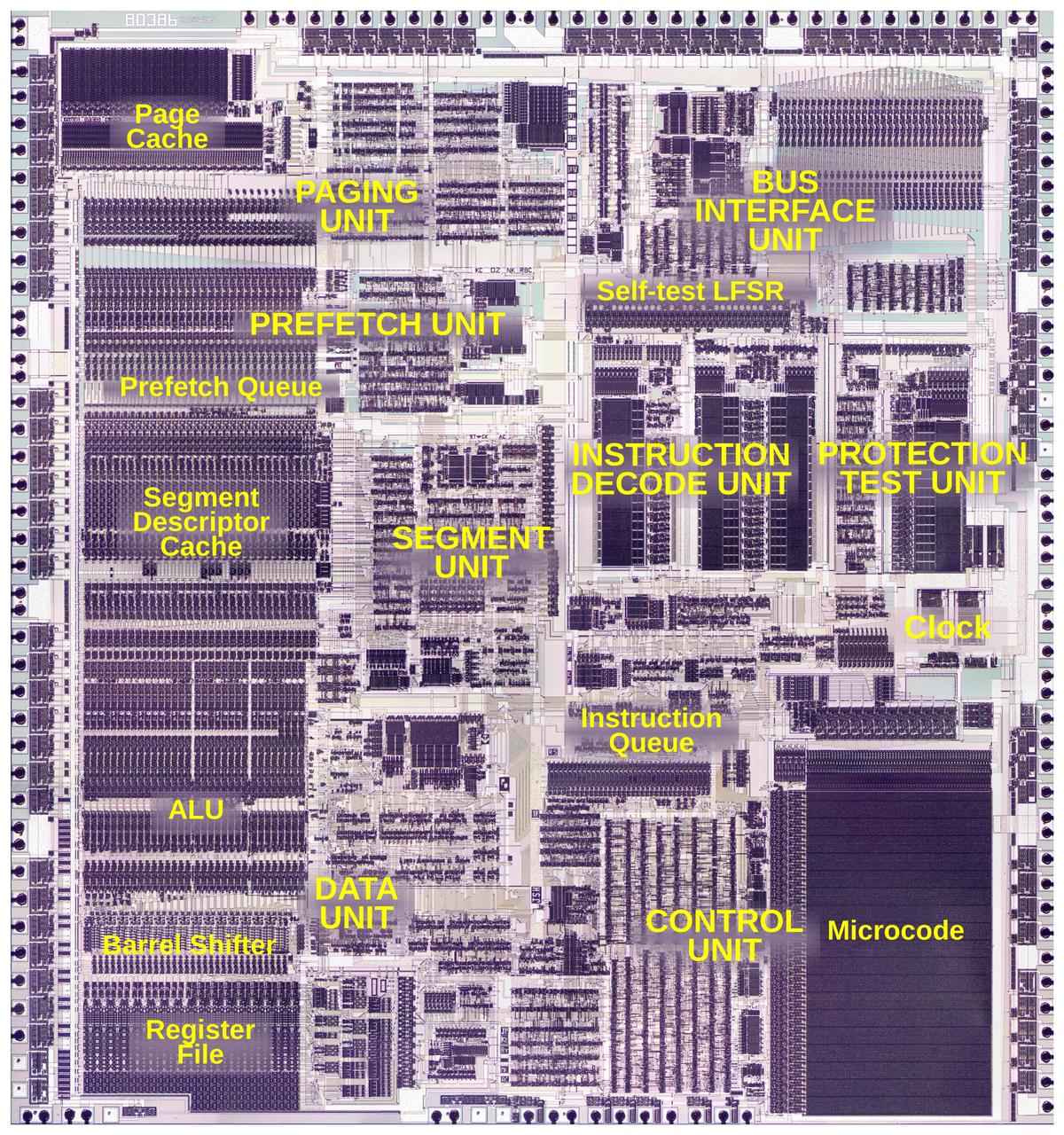

Each value in the Bendix CADC is indicated by the rotational position of a shaft. Compact electric motors rotated the shafts, controlled by magnetic amplifier servo loops. Gears, cams, and differentials performed computations, with the results indicated by more rotations. Devices called synchros converted the rotations to electrical outputs that controlled other aircraft systems. The CADC is said to contain 46 synchros, 511 gears, 820 ball bearings, and a total of 2,781 major parts (but I haven't counted). These components are crammed into a compact cylinder: 15 inches long and weighing 28.7 pounds.

The equations computed by the CADC are impressively complicated. For instance, one equation computes the Mach number \( M \) from the total pressure \( P_t \) and the static pressure \( P_s \):[3]

\[\frac{P_t}{P_s} = \frac{166.9215M^7}{(7M^2-1)^{2.5}}\]
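To make the equation concrete, here is a minimal Python sketch that evaluates it and then inverts it numerically. This form (the Rayleigh pitot-tube formula) applies to supersonic flight, \( M \geq 1 \); at \( M = 1 \) it gives the same ratio, about 1.893, as the subsonic relation \( (1 + 0.2M^2)^{3.5} \). The bisection search is my choice for illustration only; the CADC solves the problem mechanically with cams and differentials, and the function names are hypothetical.

```python
def pt_over_ps_supersonic(m: float) -> float:
    """Pressure ratio Pt/Ps from the equation above; meaningful for M >= 1."""
    return 166.9215 * m**7 / (7.0 * m**2 - 1.0) ** 2.5


def mach_from_ratio(ratio: float, lo: float = 1.0, hi: float = 5.0, tol: float = 1e-10) -> float:
    """Invert the formula by bisection; the ratio rises monotonically with M for M >= 1."""
    if not pt_over_ps_supersonic(lo) <= ratio <= pt_over_ps_supersonic(hi):
        raise ValueError("pressure ratio outside the searched Mach range")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if pt_over_ps_supersonic(mid) < ratio:
            lo = mid  # the Mach number is above mid
        else:
            hi = mid  # the Mach number is at or below mid
    return 0.5 * (lo + hi)


ratio = pt_over_ps_supersonic(1.98)
print(ratio, mach_from_ratio(ratio))  # the second value recovers Mach 1.98
```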

It seems incredible that these functions could be computed mechanically, but three techniques make this possible. The fundamental mechanism is the differential gear, which adds or subtracts values. Second, logarithms are used extensively, so multiplications and divisions become additions and subtractions performed by a differential, while square roots are calculated by gearing down by a factor of 2. Finally, specially shaped cams implement functions: logarithm, exponential, and other one-variable functions.[4] By combining these mechanisms, complicated functions can be computed mechanically.
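The logarithm trick can be sketched numerically. This is an illustration, not the CADC's actual scaling: it assumes each shaft's rotation is proportional to log10 of the value it represents (in the hardware, gear ratios set the base and scale). Adding or subtracting two encoded shafts plays the role of a differential, and halving a rotation plays the role of a 2:1 gear reduction.

```python
import math

# Assumption for illustration: a shaft's rotation is proportional to log10(value).


def to_shaft(value: float) -> float:
    return math.log10(value)


def from_shaft(rotation: float) -> float:
    return 10.0 ** rotation


def multiply(a: float, b: float) -> float:
    # A differential adding two log-encoded shafts yields log(a * b).
    return from_shaft(to_shaft(a) + to_shaft(b))


def divide(a: float, b: float) -> float:
    # Subtracting the shafts (reversing one input) yields log(a / b).
    return from_shaft(to_shaft(a) - to_shaft(b))


def square_root(a: float) -> float:
    # A 2:1 gear reduction halves the rotation: log(sqrt(a)) = log(a) / 2.
    return from_shaft(to_shaft(a) / 2.0)


print(multiply(3, 7), divide(21, 3), square_root(16))  # approximately 21, 7, 4
```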

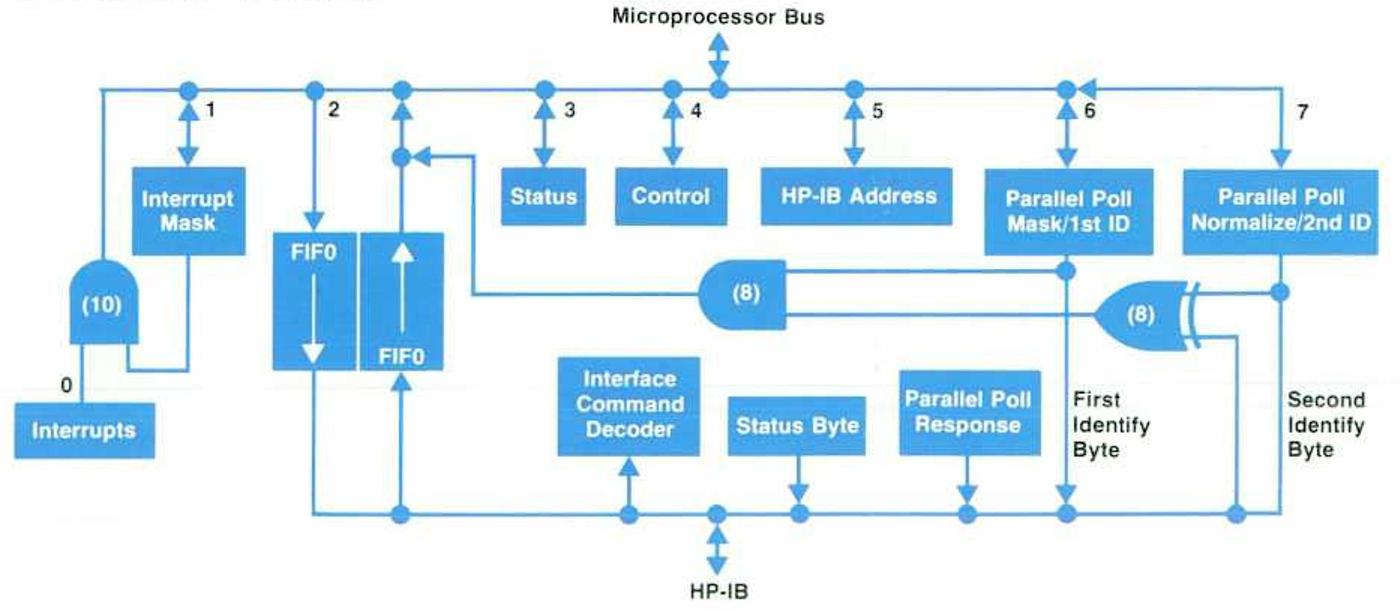

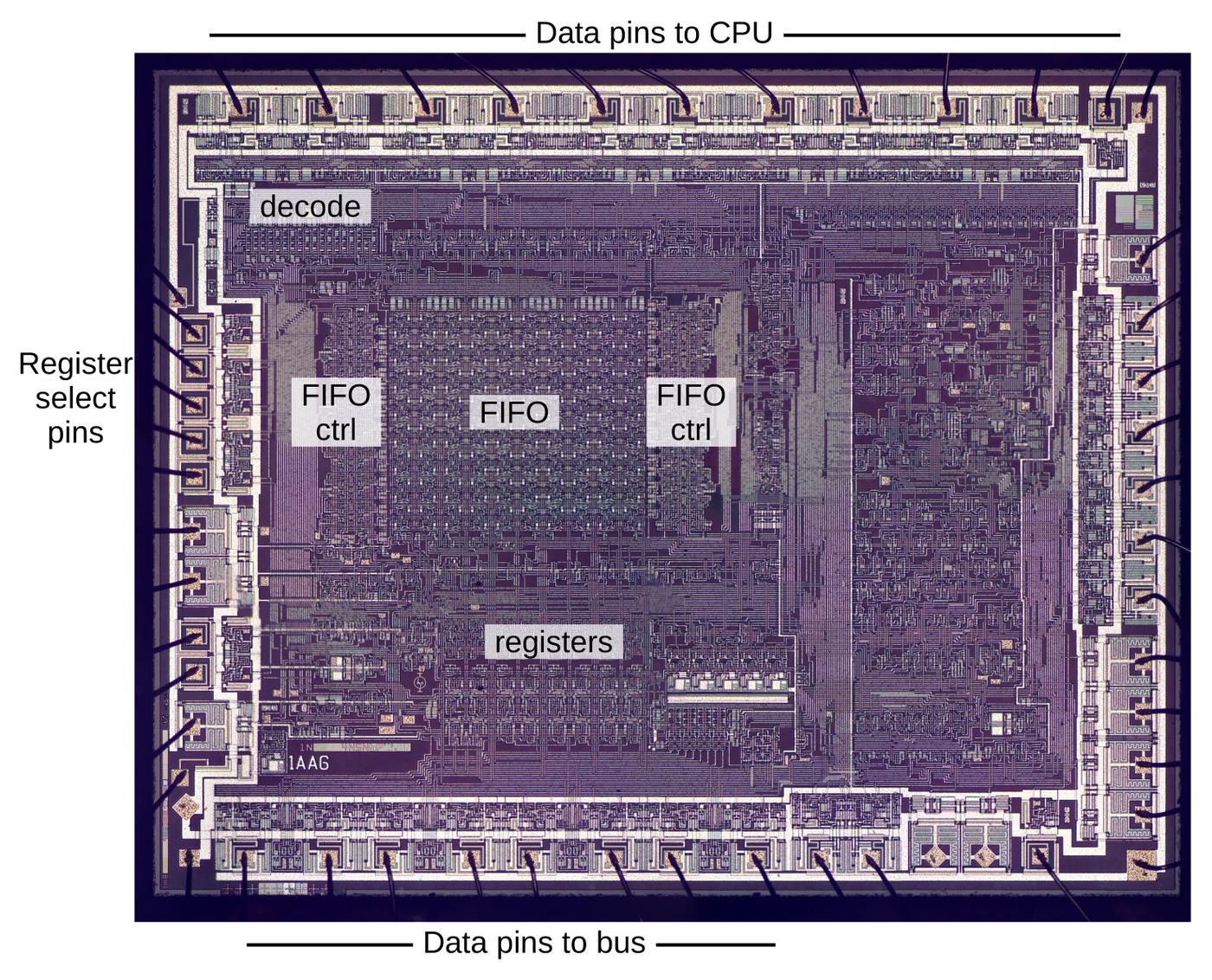

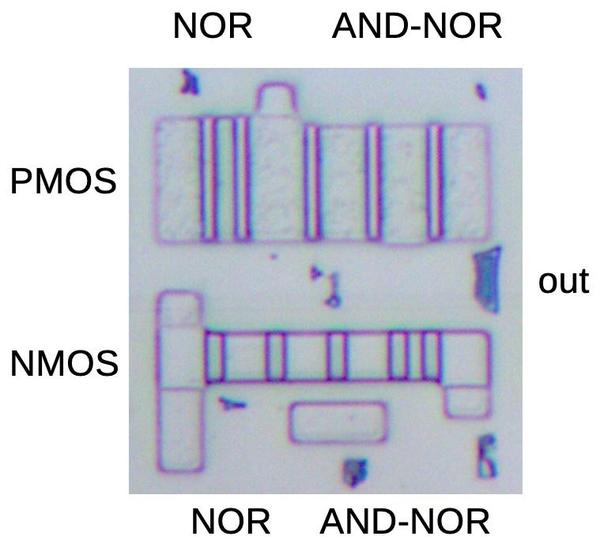

The pressure transducers

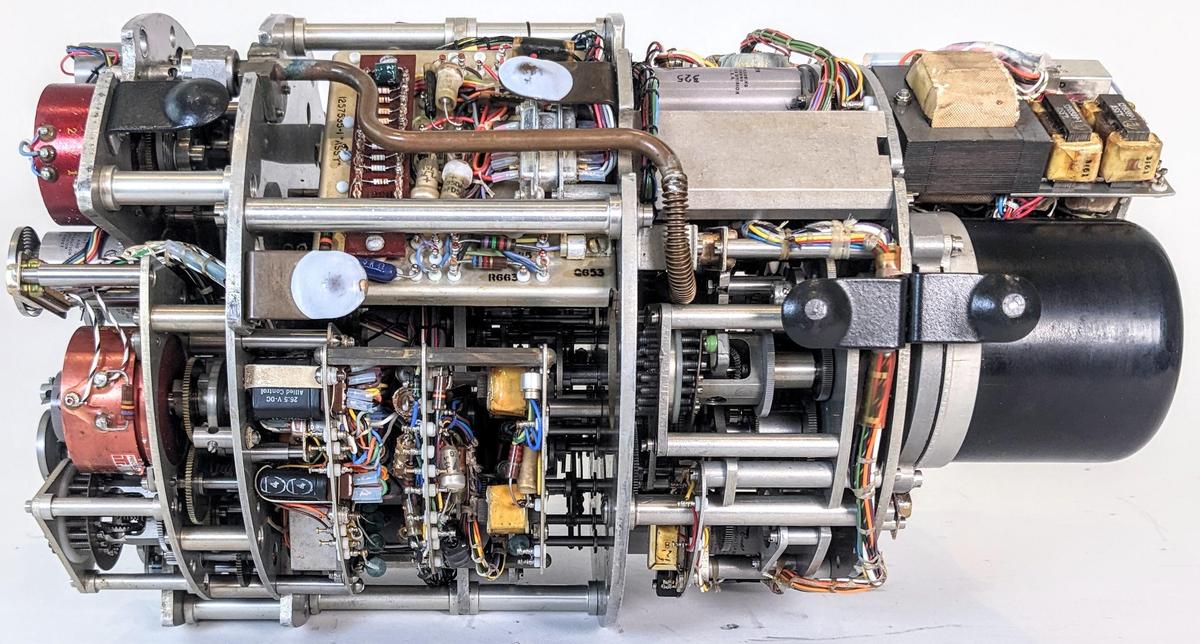

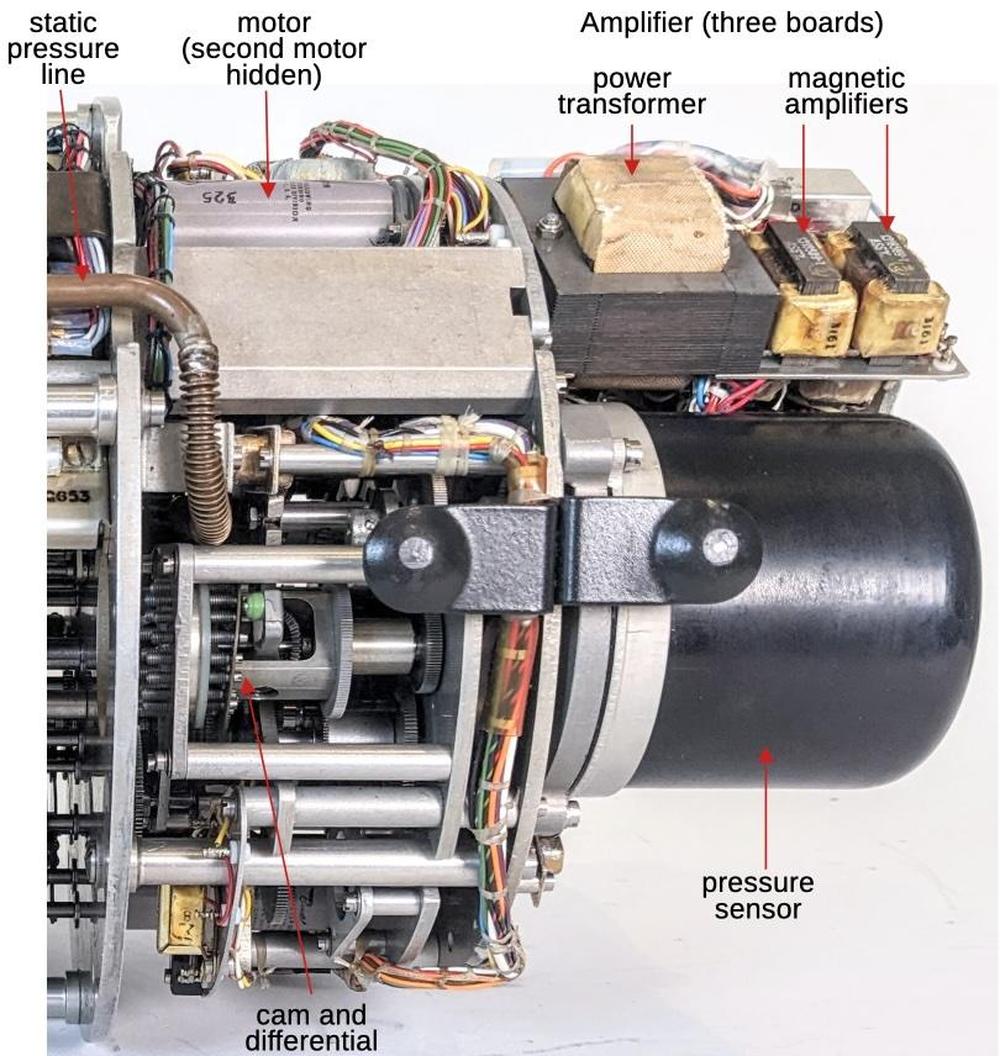

In this article, I'm focusing on the pressure transducers and how they turn pressures into shaft rotations. The CADC receives two pressure inputs: the total pressure \( P_t \) from the pitot tube, and the static pressure \( P_s \) from the static pressure port.[5] The CADC has two independent pressure transducer subsystems, one for total pressure and one for static pressure. The two pressure transducers make up the right half of the CADC. The copper pressure tube for the static pressure is visible on top of the CADC below. This tube feeds into the black-domed pressure sensor at the right. The gears, motors, and other mechanisms to the left of the pressure sensor domes generate shaft rotations that are fed into the remainder of the CADC for calculations.

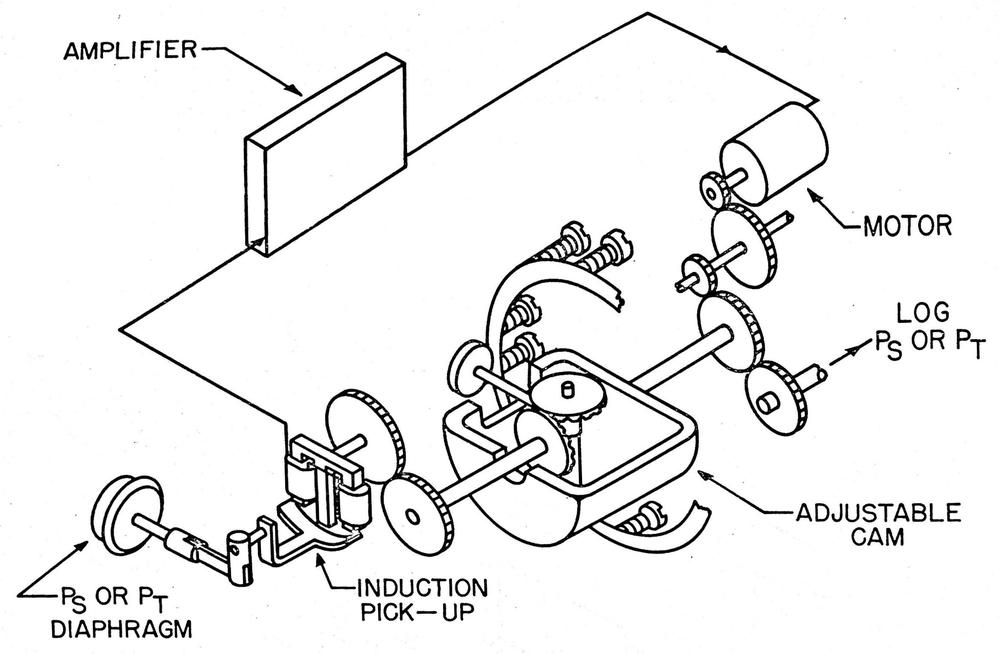

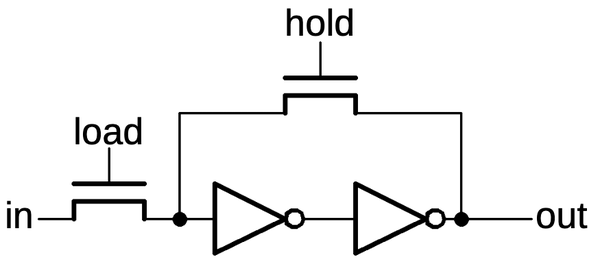

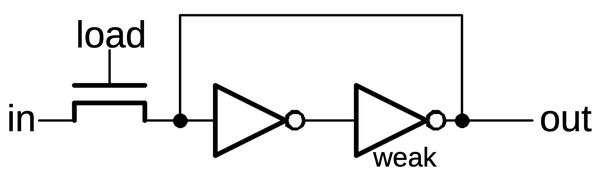

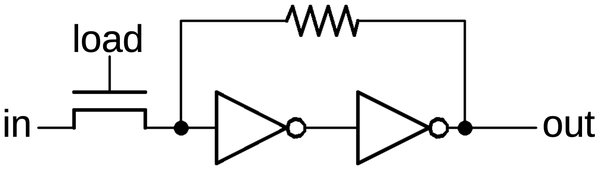

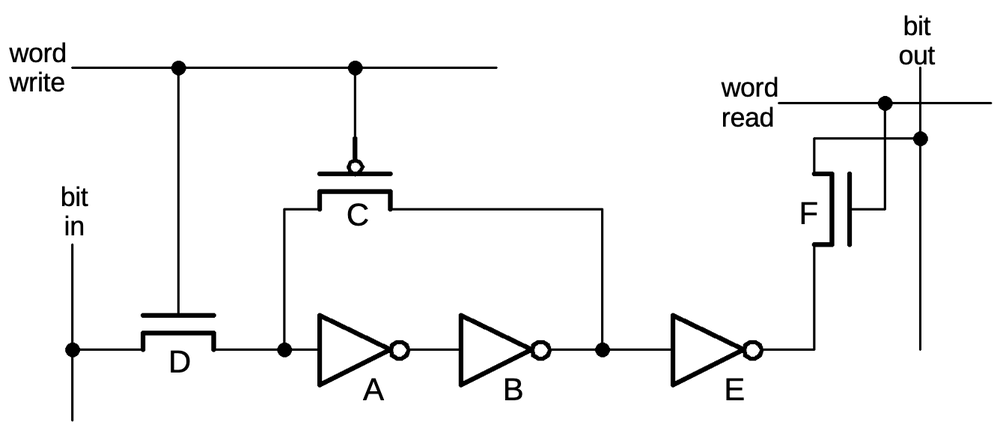

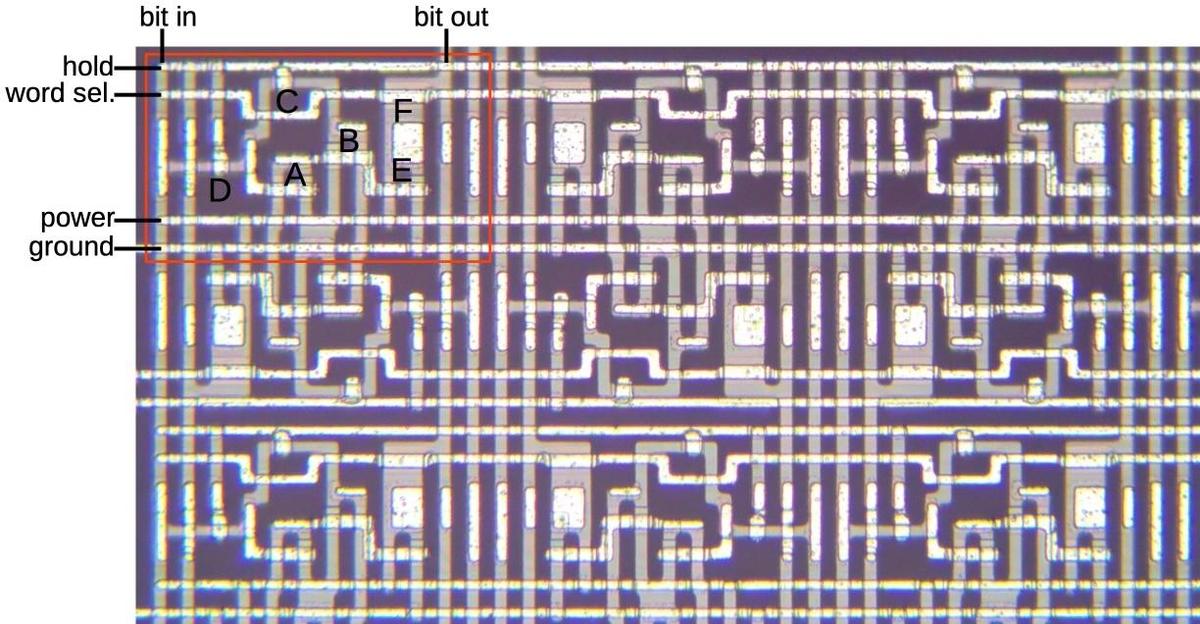

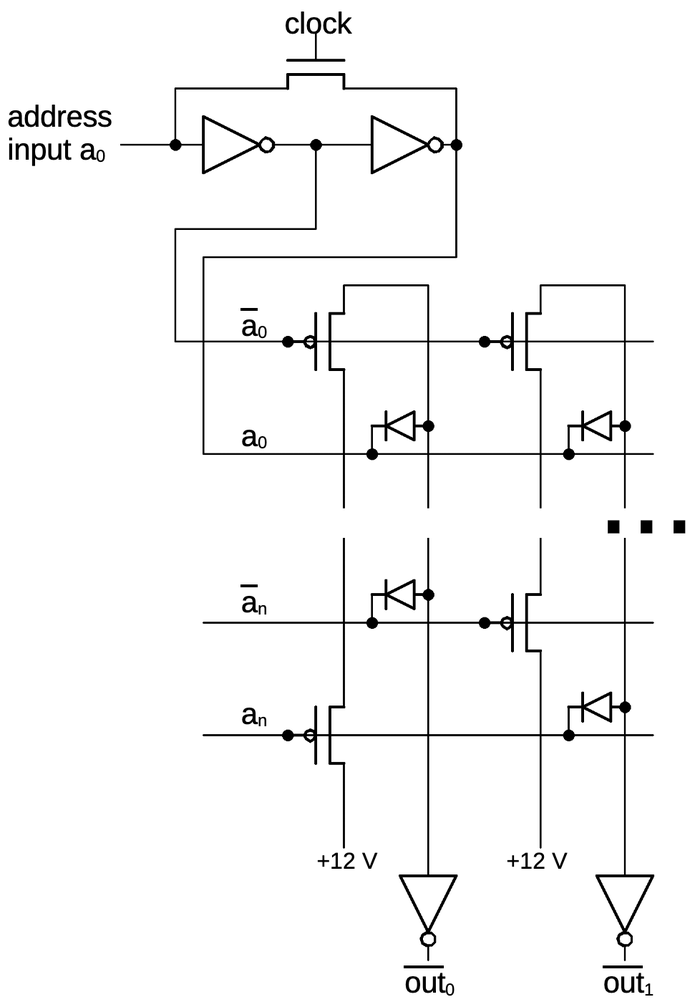

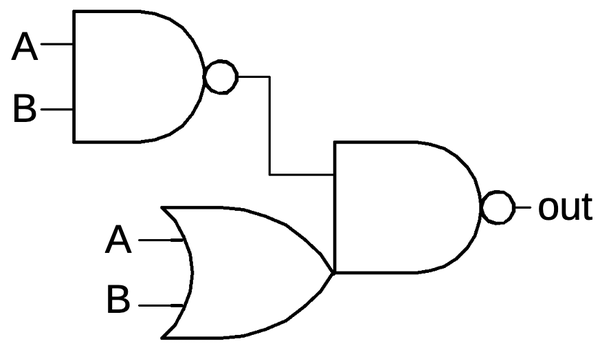

The pressure transducer has a tricky job: it must measure tiny pressure changes, but it must also provide a rotational signal that has enough torque to rotate all the gears in the CADC. To accomplish this, the pressure transducer uses a servo loop that amplifies small pressure changes into accurate rotations. The diagram below provides an overview of the process. The pressure input causes a small movement in the bellows diaphragm. This produces a small shaft rotation that is detected by a sensitive inductive pickup. This signal is amplified and drives a motor with enough power to drive the output shaft. The motor is also geared to counteract the movement of the bellows. The result is a feedback loop in which the motor's rotation tracks the air pressure while providing much more torque. An adjustable cam corrects for any error produced by irregularities in the diaphragm response. This complete mechanism is implemented twice, once for each pressure input.

To summarize, as the pressure moves the diaphragm, the induction pick-up produces an error signal. The motor is driven in the appropriate direction until the error signal becomes zero. At this point, the output shaft rotation exactly matches the input pressure. The advantage of the servo loop is that the diaphragm only needs to move the sensitive inductive pickup, rather than driving the gears of the CADC, so the pressure reading is more accurate.
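The closed loop can be illustrated with a toy discrete-time model. Everything here is an assumption for illustration: positions are in arbitrary normalized units, and on each tick the motor turns the shaft by a fixed fraction (the hypothetical `gain`) of the remaining error. It ignores motor inertia, the bellows' spring behavior, and the two-motor arrangement; it only shows that driving the error to zero makes the shaft settle at the input value.

```python
def track_pressure(targets: list[float], gain: float = 0.3, ticks_per_target: int = 50) -> list[float]:
    """Toy first-order servo loop in arbitrary normalized units.

    Each tick, the pickup senses the mismatch between the pressure (target) and the
    fed-back shaft position, and the motor turns the shaft by gain * error.
    Returns the shaft position at the end of each pressure step.
    """
    shaft = 0.0
    settled = []
    for target in targets:
        for _ in range(ticks_per_target):
            error = target - shaft  # what the inductive pickup detects
            shaft += gain * error   # the motor turns to reduce the error
        settled.append(shaft)
    return settled


print(track_pressure([10.0, 12.5, 12.4]))  # each value ends very close to its target
```

In this model, any `gain` between 0 and 2 converges; smaller values settle more slowly but more smoothly.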

In more detail, the process starts with connections from the aircraft's pitot tube and static pressure port to the CADC. The front of the CADC (below) has connections for the total pressure and the static pressure. The CADC also has five round military connectors for electrical connections between the CADC and the rest of the aircraft. (The outputs from the CADC are electrical, with synchros converting the shaft rotations into electrical representations.) Finally, a tiny time clock at the upper right keeps track of how many hours the CADC has been in operation, so it can be maintained according to schedule.

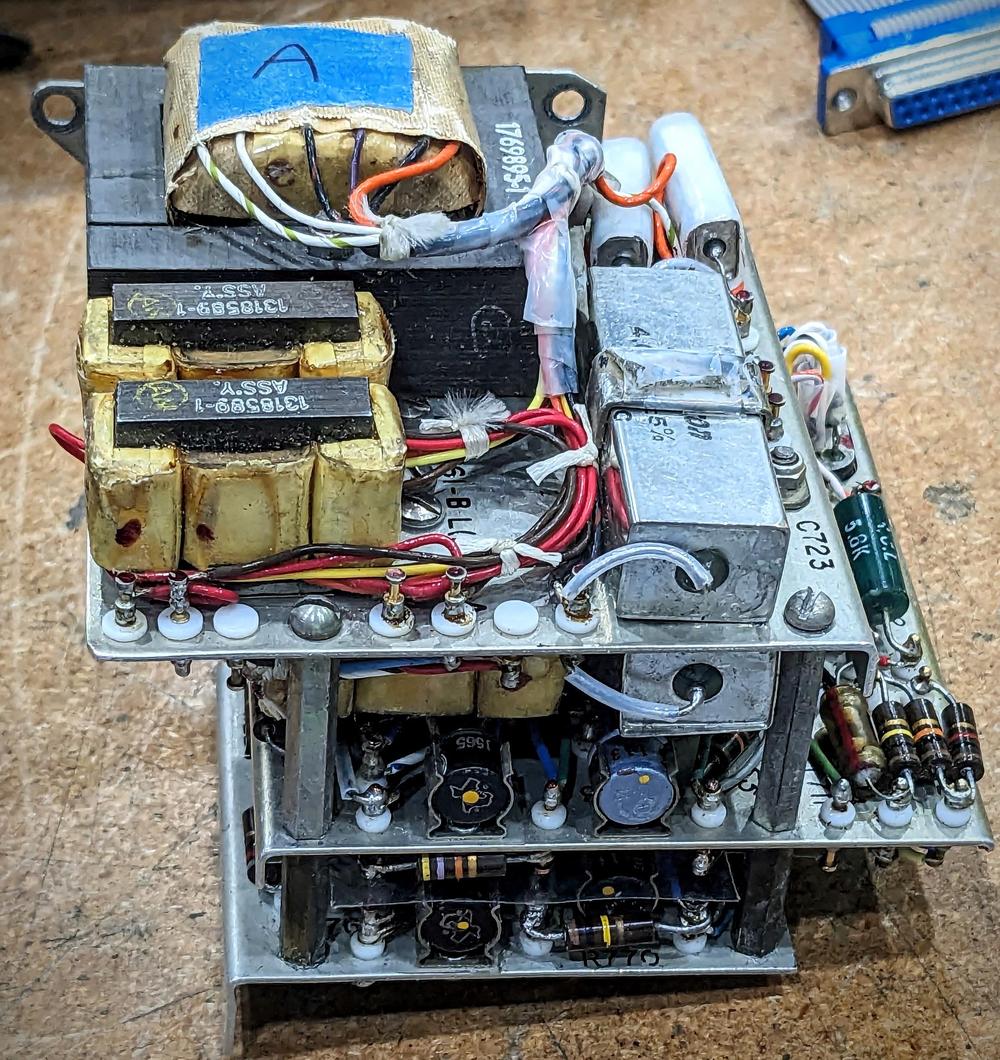

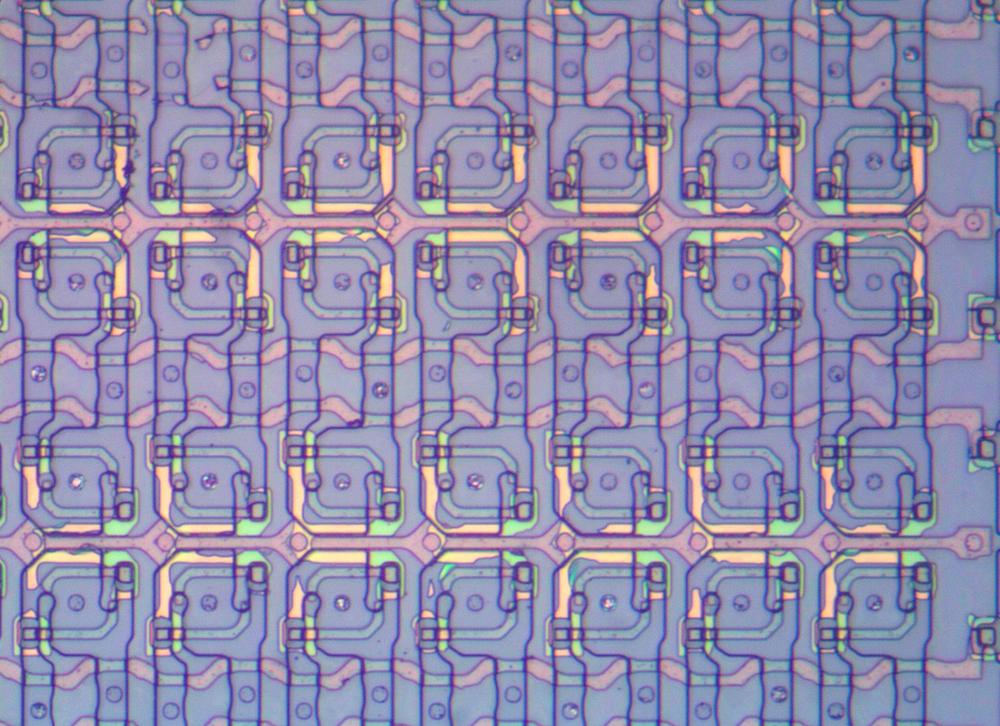

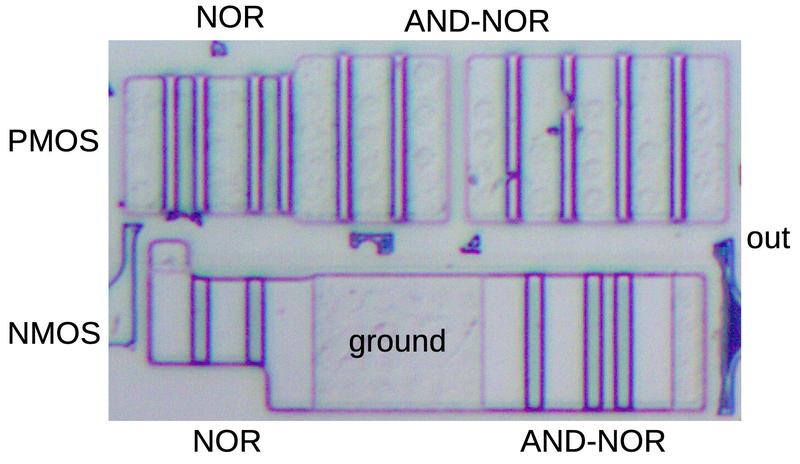

The photo below shows the main components of the pressure transducer system. At the upper left, the pressure line from the CADC's front panel goes to the pressure sensor, airtight under a black dome. The error signal from the sensor goes to the amplifier, which consists of three boards. The amplifier's power transformer and magnetic amplifiers are the most visible components. The amplifier drives the motors to the left. There are two motors controlled by the amplifier: one for coarse adjustments and one for fine adjustments. By using two motors, the CADC can respond rapidly to large pressure changes, while also accurately tracking small pressure changes. Finally, the output from the motor goes through the adjustable cam in the middle before providing the feedback signal to the pressure sensor. The output from the transducer to the rest of the CADC is a shaft on the left, but it is in the middle of the CADC and isn't visible in the photo.

The pressure sensor

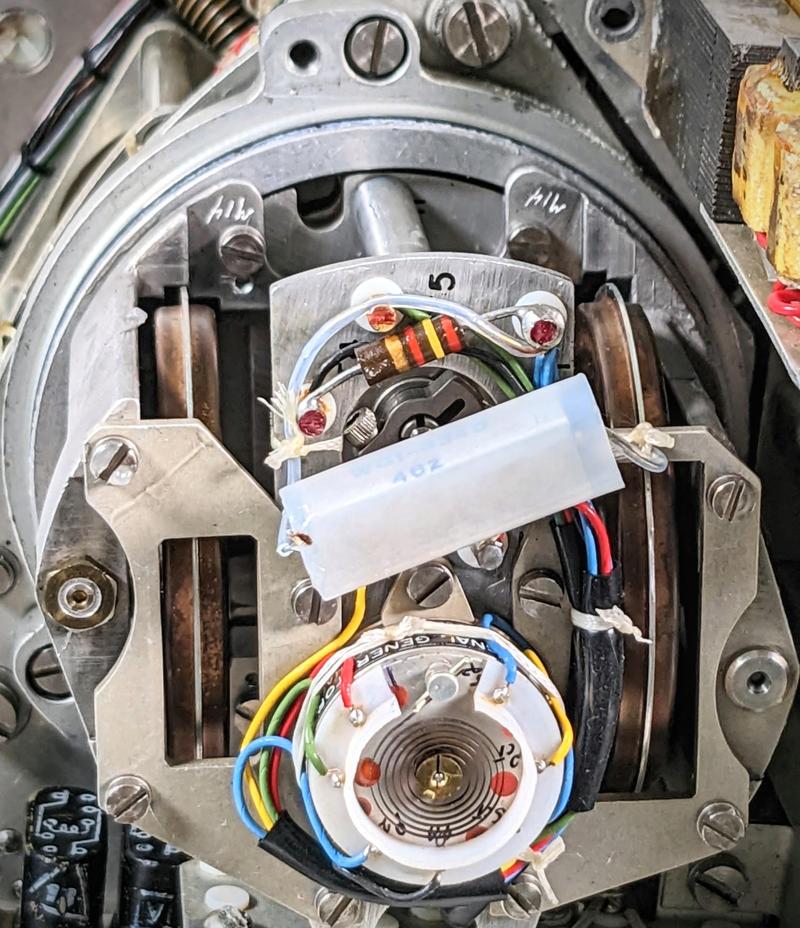

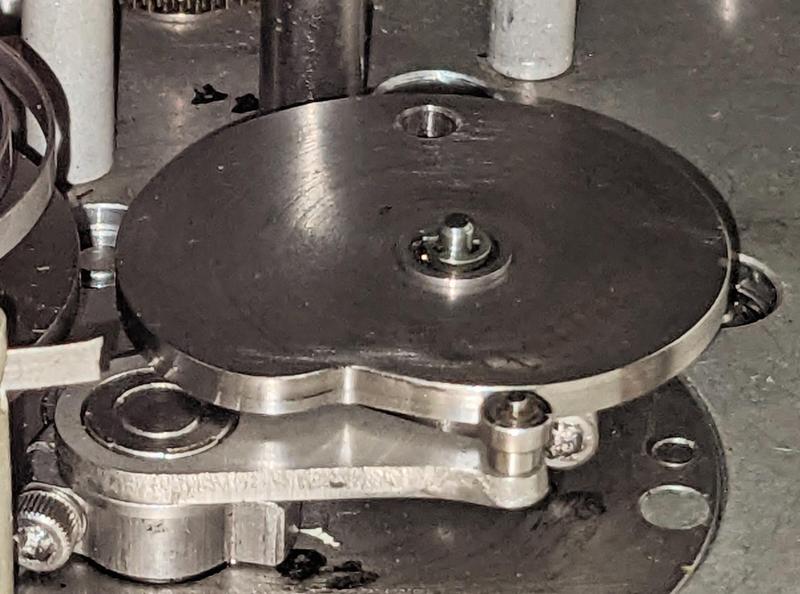

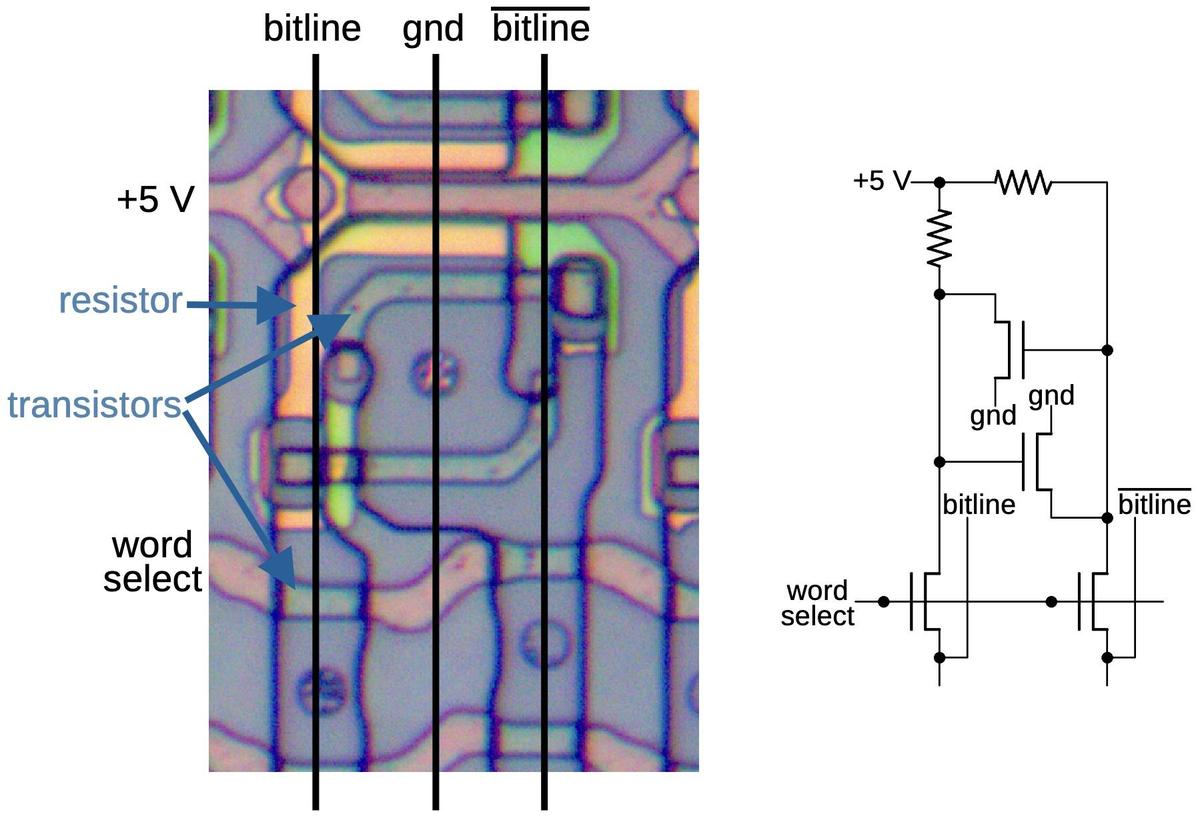

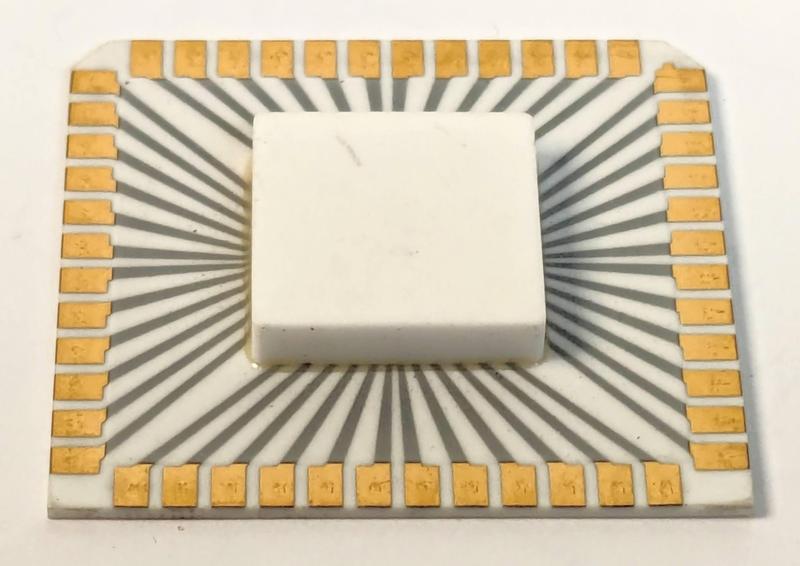

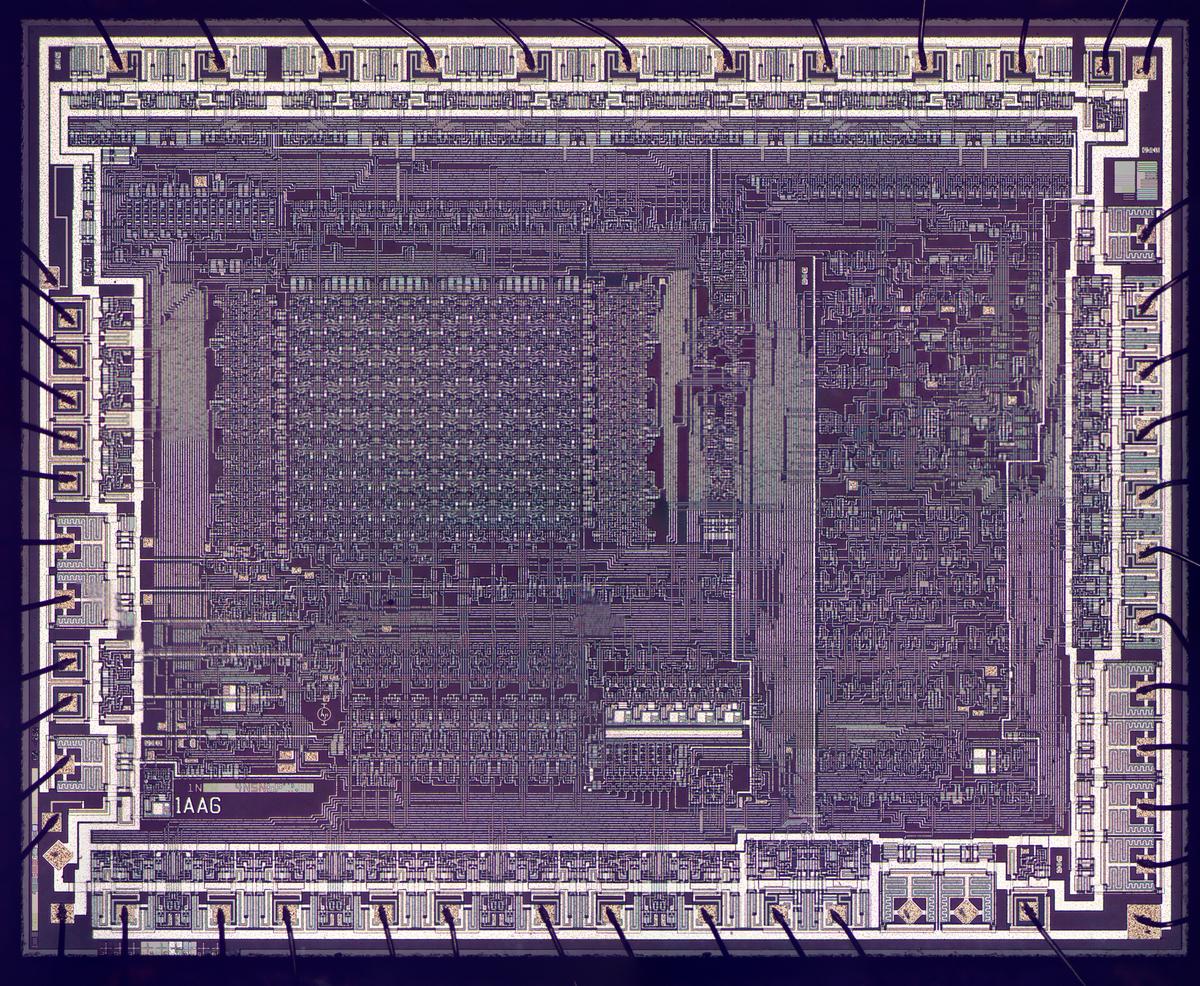

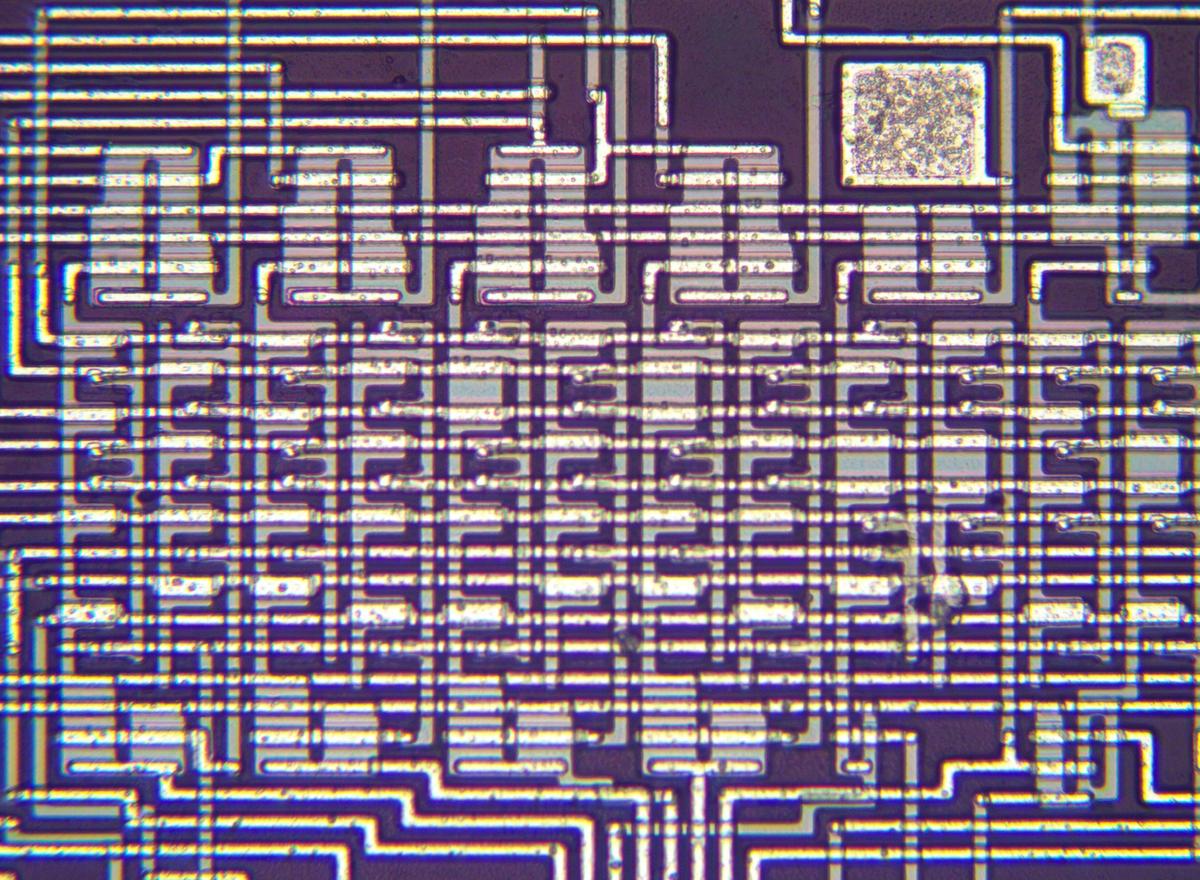

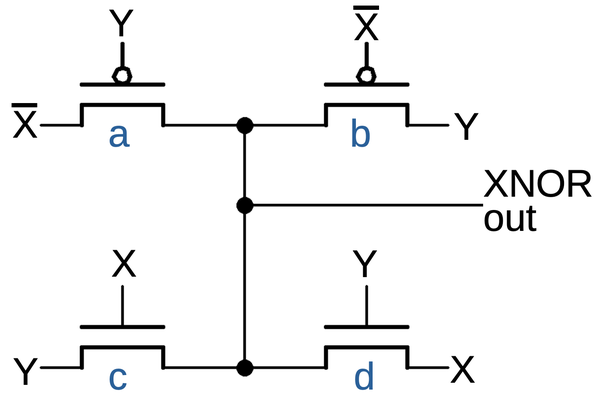

Each pressure sensor is packaged in a black airtight dome and is fed from its associated pressure line. Inside the sensor, two sealed metal bellows (below) expand or contract as the pressure changes. The bellows are connected to opposite sides of a metal shaft, which rotates as the bellows expand or contract. This shaft rotates an inductive pickup, providing the error signal. The servo loop rotates a second shaft that counteracts the rotation of the first shaft; this shaft and its gears are also visible below.

The end view of the sensor below shows the inductive pickup at the bottom, with colorful wires for the input (400 Hz AC) and the output error signal. The coil visible on the inductive pickup is an anti-backlash spring to ensure that the pickup doesn't wobble back and forth. The electrical pickup coil is inside the inductive pickup and isn't visible.

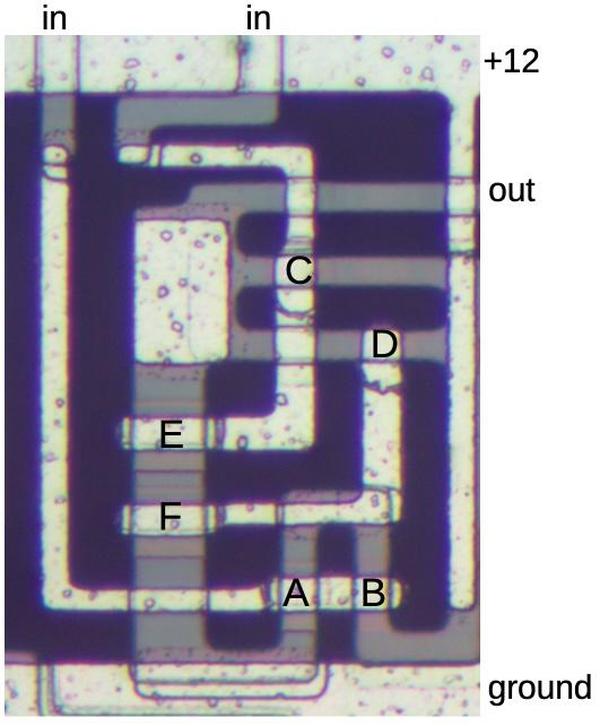

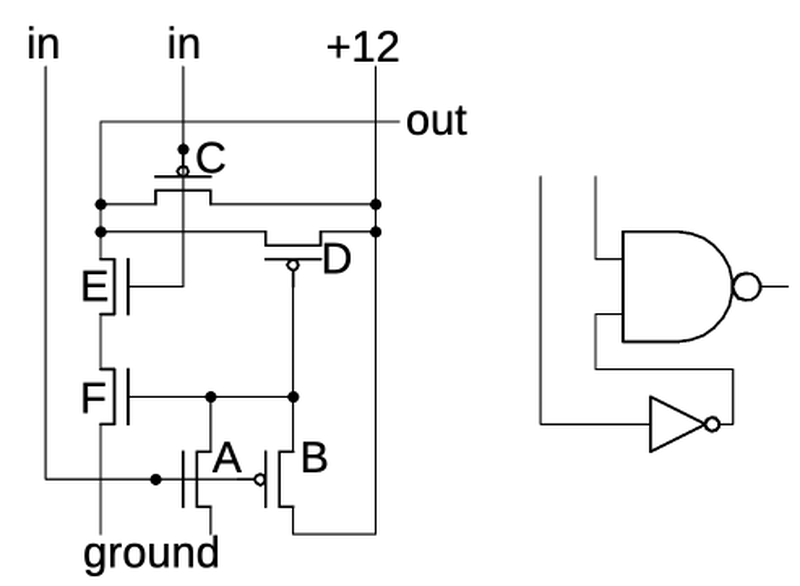

The amplifier

Each transducer's error signal is amplified by three circuit boards built around magnetic amplifiers, transformer-like amplifiers that were popular before high-power transistors came along. The photo below shows how the amplifier boards are packed next to the transducers. The boards are complex, filled with resistors, capacitors, germanium transistors, diodes, relays, and other components.

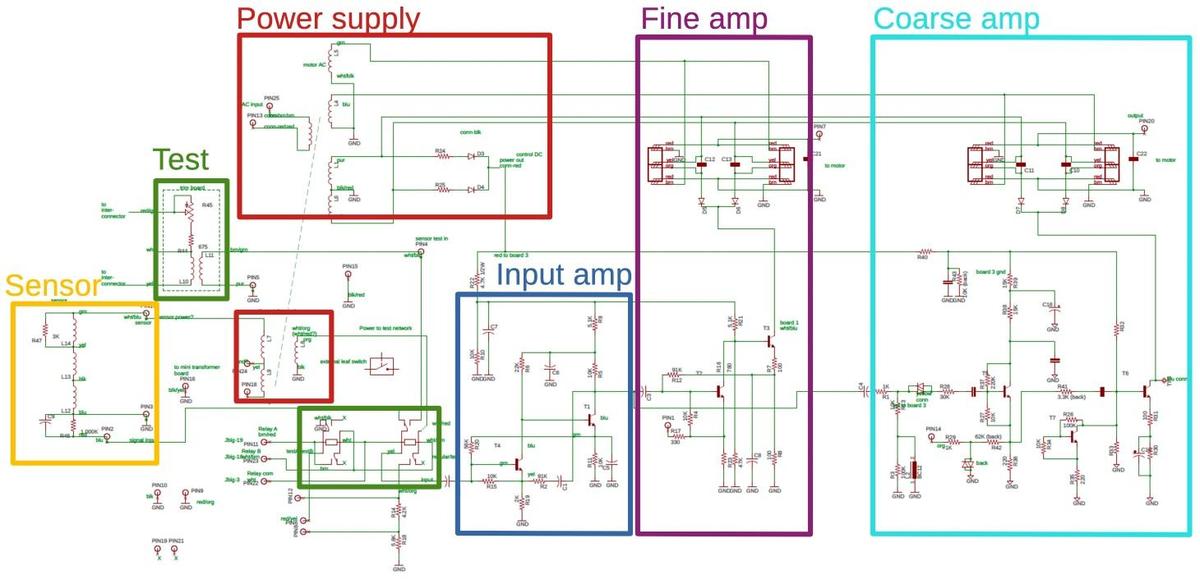

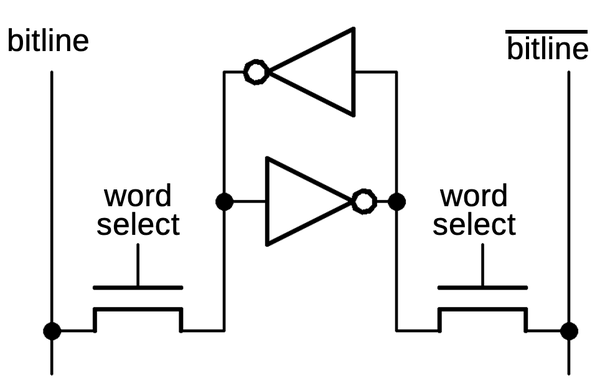

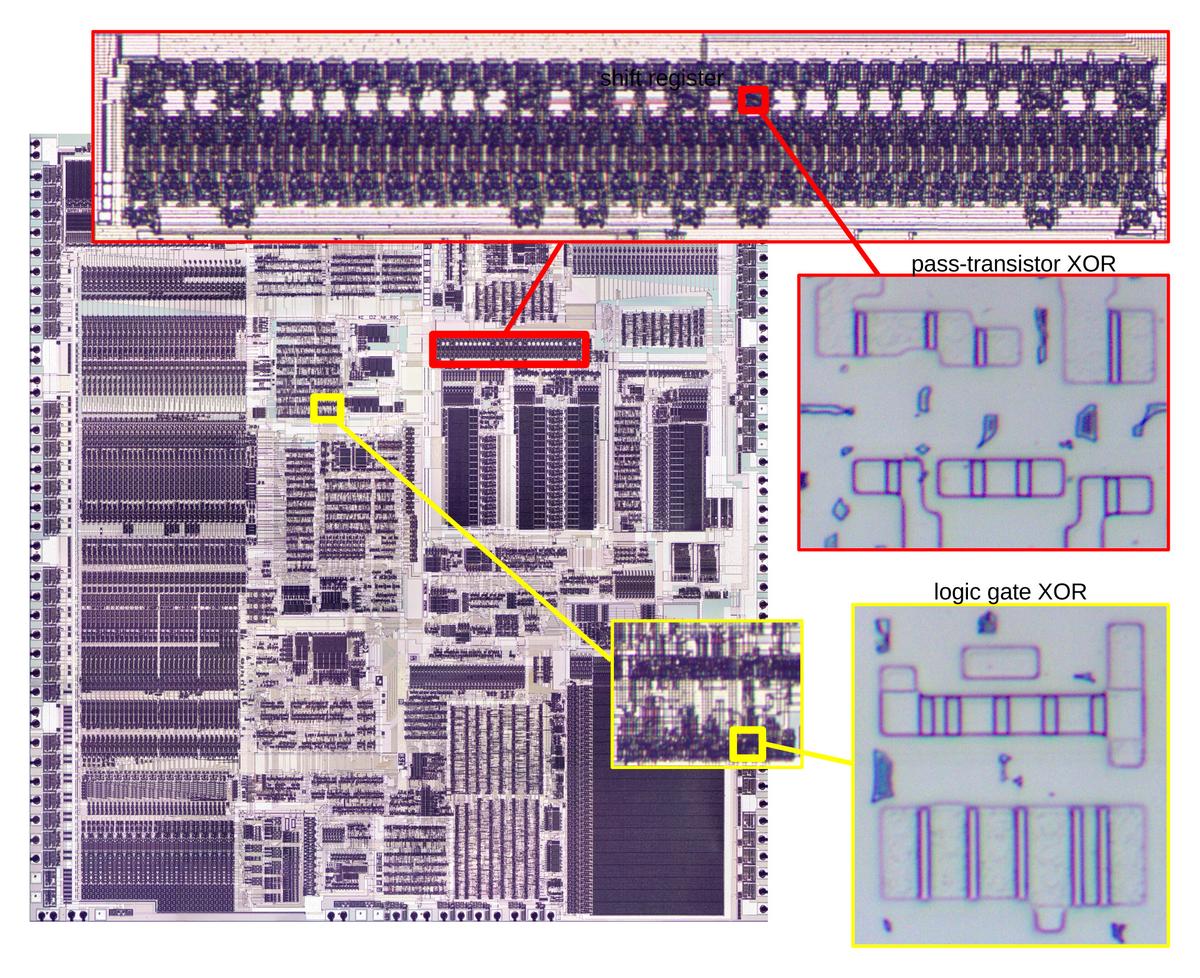

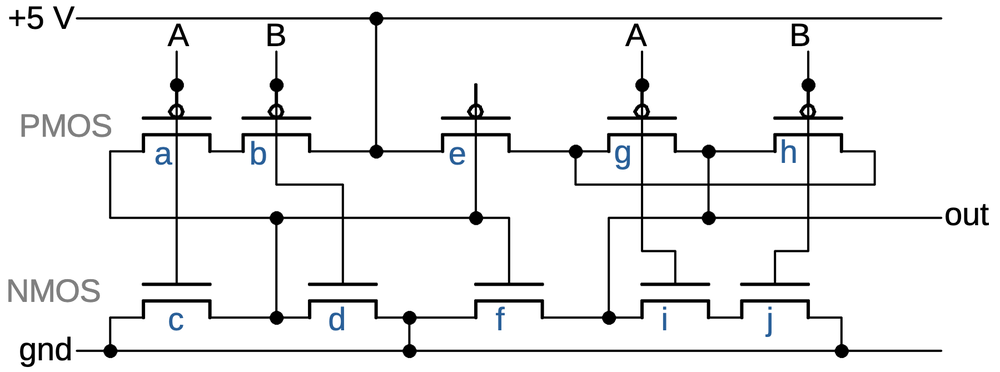

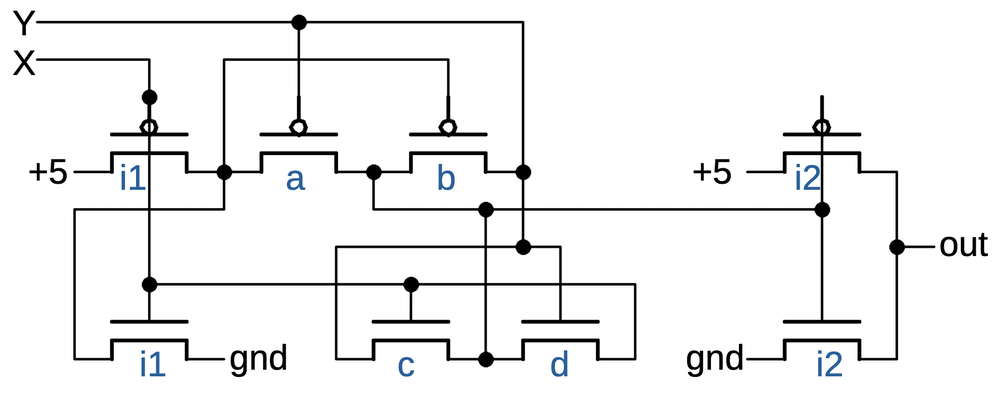

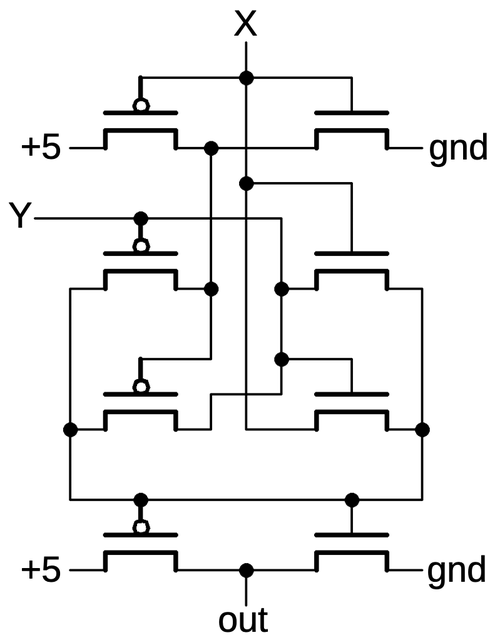

I reverse-engineered the boards and created the schematic below. I'll discuss the schematic at a high level. The process starts with the inductive sensor (yellow), which provides the error input signal to the amplifier. The first stage of the amplifier (blue) is a two-transistor amplifier and filter. From there, the signal goes to two separate output amplifiers to drive the two motors: fine (purple) and coarse (cyan).

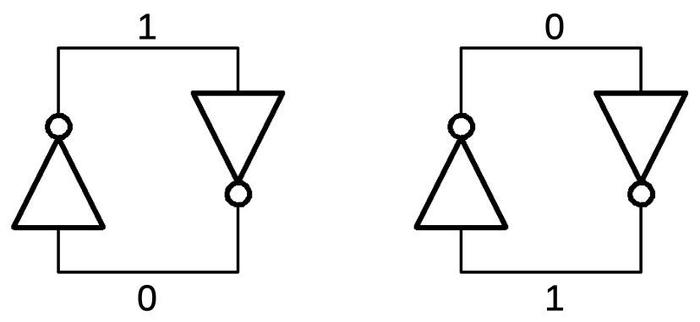

The inductive sensor provides its error signal as a 400 Hz sine wave, with a larger signal indicating more error. The phase of the signal is 0° or 180°, depending on the direction of the error. In other words, the error signal is proportional to the driving AC signal in one direction and flipped when the error is in the other direction. This is important since it indicates which direction the motors should turn. When the error is eliminated, the signal is zero.
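A signed error carried as the phase of a 400 Hz wave can be decoded by phase-sensitive detection. The sketch below is a conceptual model only: it multiplies the sensor output by the reference sine and averages, which is not how the CADC's circuitry does it (there, the transistor and magnetic amplifier windings on opposite phases of the 400 Hz power select the motor direction, as explained in note 6). The sample counts are arbitrary choices.

```python
import math

FREQ_HZ = 400.0  # excitation frequency of the sensor


def sensor_output(error: float, t: float) -> float:
    """400 Hz carrier scaled by the signed error: a negative error is the same
    sine wave shifted by 180 degrees."""
    return error * math.sin(2 * math.pi * FREQ_HZ * t)


def demodulate(error: float, cycles: int = 4, samples: int = 400) -> float:
    """Multiply the sensor output by the reference sine and average over whole cycles.

    The average of sin^2 over whole cycles is 1/2, so doubling it recovers the
    signed error: the sign gives the direction, the magnitude gives the size.
    """
    total = 0.0
    for i in range(samples):
        t = i * cycles / (FREQ_HZ * samples)
        reference = math.sin(2 * math.pi * FREQ_HZ * t)
        total += sensor_output(error, t) * reference
    return 2.0 * total / samples


for e in (0.3, -0.3, 0.0):
    print(e, round(demodulate(e), 9))
```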

Each output amplifier consists of a transistor circuit driving two magnetic amplifiers. Magnetic amplifiers are an old technology that can amplify AC signals, allowing the relatively weak transistor output to control a larger AC output. The basic idea of a magnetic amplifier is a controllable inductor. Normally, the inductor blocks alternating current. But applying a relatively small DC signal to a control winding causes the inductor to saturate, permitting the flow of AC. Since the magnetic amplifier uses a small signal to control a much larger signal, it provides amplification.
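A rough way to see why saturating the core lets AC through: with the inductor in series with the load, the AC current is limited by the inductor's reactance, 2πfL. The component values below are hypothetical, chosen only to illustrate the effect, and the two-state model ignores the gradual transition a real magnetic amplifier has between blocking and passing.

```python
import math

SUPPLY_V = 115.0   # 115 V, 400 Hz supply (from the article); values below are hypothetical
FREQ_HZ = 400.0
LOAD_OHMS = 100.0  # hypothetical motor-winding resistance


def load_current(inductance_h: float) -> float:
    """RMS current through a resistive load in series with an inductor (|V / Z|)."""
    reactance = 2 * math.pi * FREQ_HZ * inductance_h
    return SUPPLY_V / math.hypot(LOAD_OHMS, reactance)


blocked = load_current(10.0)  # hypothetical unsaturated core: large inductance
passing = load_current(0.05)  # hypothetical saturated core: small inductance
print(blocked, passing)       # roughly 5 mA versus 0.7 A
```

A small DC control current moves the core between these two states, so it switches a much larger AC load current, which is the amplification the paragraph above describes.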

In the early 1900s, magnetic amplifiers were used in applications such as dimming lights. Germany improved the technology in World War II, using magnetic amplifiers in ships, rockets, and trains. The magnetic amplifier had a resurgence in the 1950s; the Univac Solid State computer used magnetic amplifiers (rather than vacuum tubes or transistors) as its logic elements. However, improvements in transistors made the magnetic amplifier obsolete except for specialized applications. (See my IEEE Spectrum article on magnetic amplifiers for more history of magnetic amplifiers.)

In the CADC, magnetic amplifiers control the AC power to the motors. Two magnetic amplifiers are visible on top of the amplifier board stack, while two more are on the underside; they are the yellow devices that look like transformers. (Behind the magnetic amplifiers, the power transformer is labeled "A".)

The transistor circuit generates the control signal to the magnetic amplifiers, and the output of the magnetic amplifiers is the AC signal to the motors. Specifically, the CADC uses two magnetic amplifiers for each motor: one powers the motor to spin clockwise, while the other makes the motor spin counterclockwise. The transistor circuit pulls one magnetic amplifier's control winding low; the phase of the input signal determines which magnetic amplifier is selected, and thus the motor direction. (If the error input signal is zero, neither winding is pulled low, both magnetic amplifiers block AC, and the motor doesn't turn.)[6] As a result, the motor spins in the correct direction based on the error input signal, rotating the mechanism until the mechanical output position matches the input pressure. The motors are "Motor / Tachometer Generator" units that also generate a voltage based on their speed. This speed signal is fed into the transistor amplifier to provide negative feedback, limiting the motor speed as the error becomes smaller and ensuring that the feedback loop doesn't overshoot.
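The effect of the tachometer feedback can be illustrated with a toy second-order model, in which the motor's acceleration is proportional to the error minus a term proportional to its own speed (velocity feedback, also called rate damping). The gains and time step are hypothetical, and the real motor and amplifier dynamics are more complicated. Without the speed term the shaft swings past the target; with enough of it, the shaft approaches the target without overshooting.

```python
def peak_position(tach_gain: float, gain: float = 40.0, dt: float = 0.001, t_end: float = 3.0) -> float:
    """Peak shaft position after a unit step in pressure (target = 1.0).

    Toy model: acceleration = gain * error - tach_gain * speed,
    integrated with semi-implicit Euler steps.
    """
    position, speed, peak = 0.0, 0.0, 0.0
    for _ in range(int(t_end / dt)):
        error = 1.0 - position
        accel = gain * error - tach_gain * speed  # the tachometer term opposes motion
        speed += accel * dt
        position += speed * dt
        peak = max(peak, position)
    return peak


print(peak_position(0.0), peak_position(13.0))  # large overshoot versus essentially none
```

With these values, a `tach_gain` of about 12.6 (twice the square root of `gain`) is the critically damped point; 13 is slightly beyond it.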

The other servo loops in the CADC (temperature and position error correction) have one motor driver constructed from transistors and two magnetic amplifiers. However, each pressure transducer has two motor drivers (and thus four magnetic amplifiers), one for fine adjustment and one for coarse adjustment. This allows the servo loop to track the input pressure very closely, while also adjusting rapidly to larger changes in pressure. The coarse amplifier uses back-to-back diodes to block small changes; only input voltages larger than a diode drop will pass through and energize the coarse amplifier.
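The back-to-back diodes act as a dead band in front of the coarse amplifier. The sketch below treats the error as a signed amplitude rather than the instantaneous 400 Hz waveform, and the 0.6 V diode drop is a hypothetical value, not one measured from the CADC. The fine amplifier sees the whole error, while the coarse amplifier sees only the part that exceeds one diode drop in either direction.

```python
DIODE_DROP_V = 0.6  # hypothetical forward drop of each diode


def split_error(error_v: float, drop_v: float = DIODE_DROP_V) -> tuple[float, float]:
    """Return (fine_drive, coarse_drive) for a signed error amplitude."""
    if abs(error_v) <= drop_v:
        coarse = 0.0  # small errors: the diodes don't conduct
    elif error_v > 0:
        coarse = error_v - drop_v
    else:
        coarse = error_v + drop_v
    return error_v, coarse


for e in (0.2, -0.2, 1.5, -1.5):
    print(e, split_error(e))
```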

The CADC is powered by standard avionics power of 115 volts AC, 400 hertz. Each pressure transducer amplifier has a simple power supply running off this AC, using a multi-winding power transformer. A center-tapped winding and full-wave rectifier produce DC for the transistor amplifiers. Other windings supply AC (controlled by the magnetic amplifiers) to power the motors, AC for the magnetic amplifier control signals, and AC for the sensor. The transformer ensures that the transducer circuitry is electrically isolated from other parts of the CADC and the aircraft. The power supply is indicated in red in the schematic above.

The schematic also shows test circuitry (blue). One of the features of the CADC is that it can be set to two test configurations before flight to ensure that the system is operating properly and is correctly calibrated.[7] Two relays switch the pressure transducer to one of these two test inputs. The test inputs are provided by an external board and a helical feedback potentiometer (Helipot) that provides a simulated sensor input.

Getting the amplifiers to work was a challenge. Many of the capacitors in the CADC had deteriorated and failed, as shown below. Marc went through the CADC boards and replaced the bad capacitors. However, one of the pressure transducer boards still failed to work. After much debugging, we discovered that one of the new capacitors had also failed. Finally, after replacing that capacitor a second time, the CADC was operational.

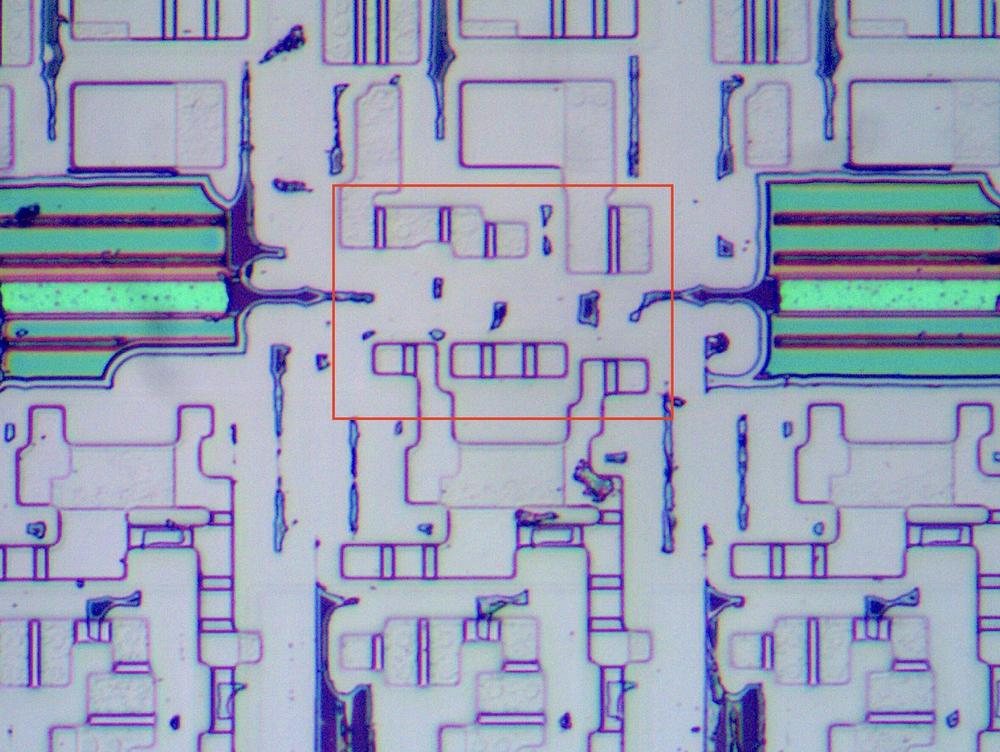

The mechanical feedback loop

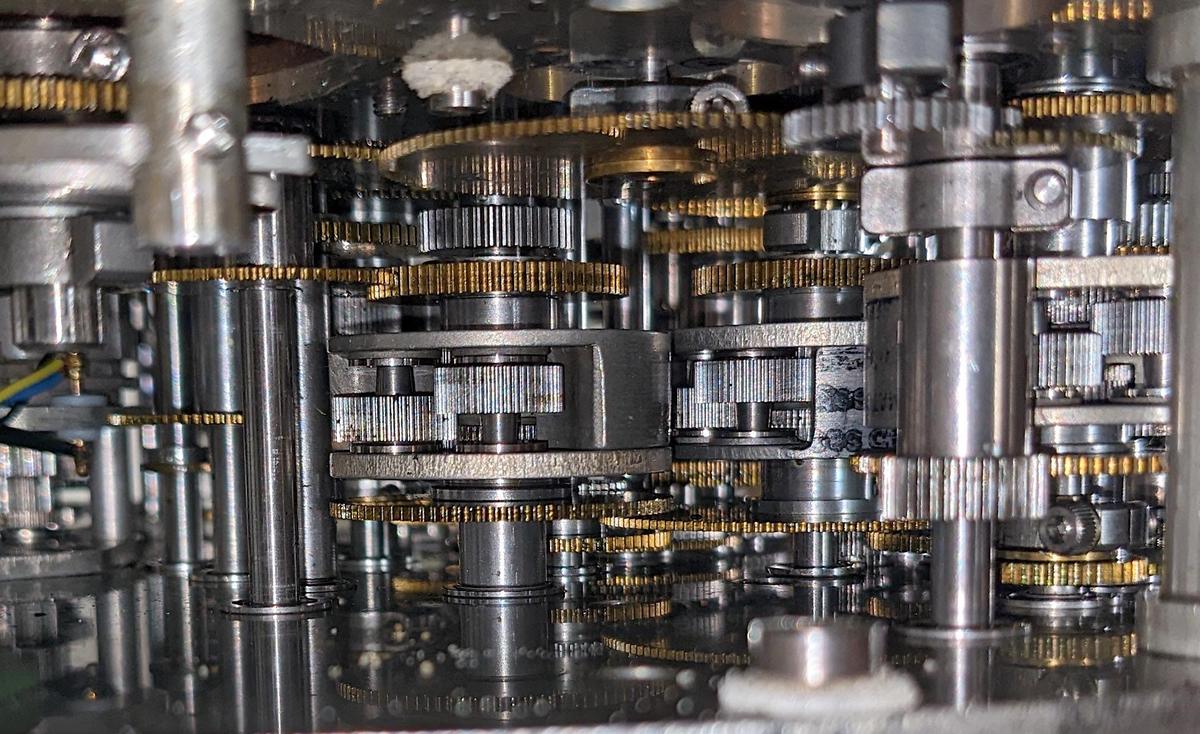

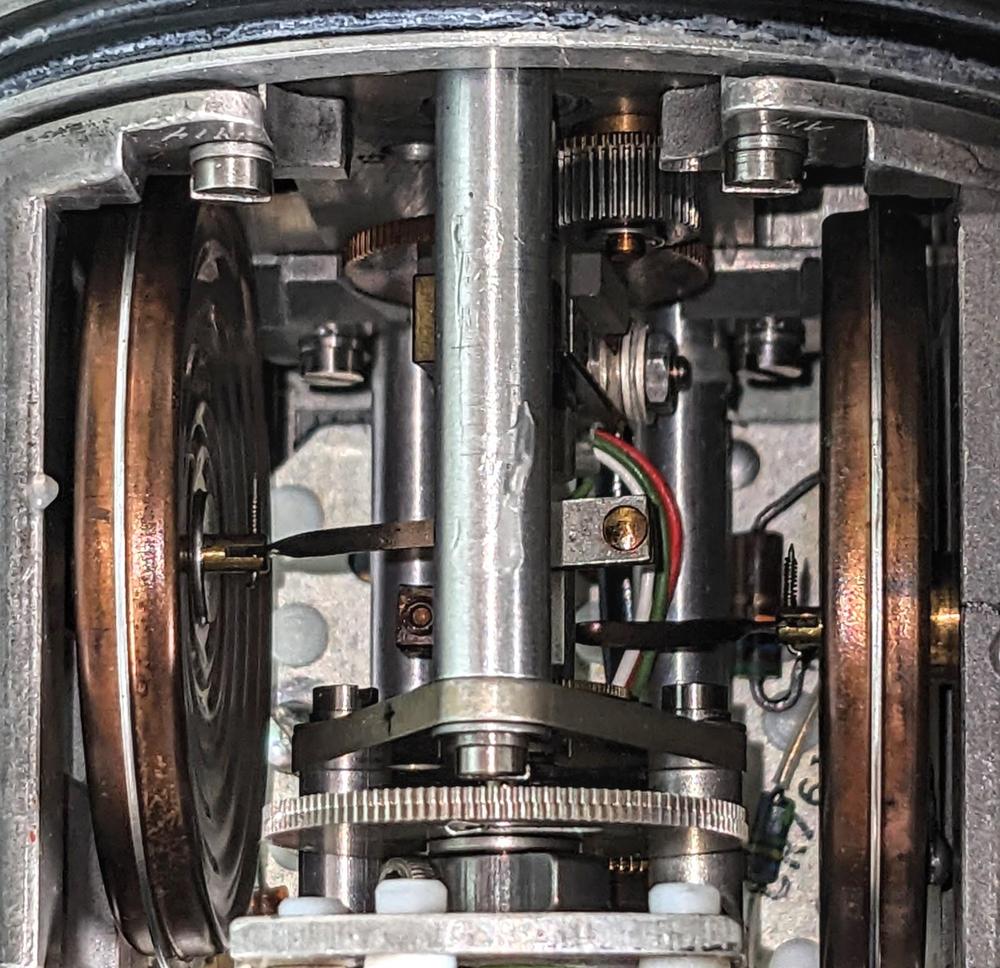

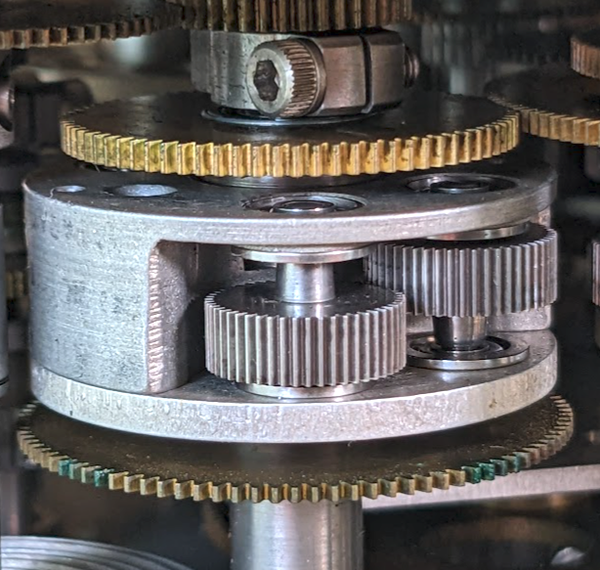

The amplifier boards energize two motors, the coarse and fine motors, that rotate the output shaft.[8] The outputs from the coarse and fine motors are combined through a differential gear assembly that sums its two input rotations.[9] While the differential functions like the differential in a car, it is constructed differently, with a spur-gear design. This compact arrangement of gears is about 1 cm thick and 3 cm in diameter. The differential is mounted on a shaft along with three co-axial gears: two gears provide the inputs to the differential and the third provides the output. In the photo, the gears above and below the differential are the input gears. The entire differential body rotates with the sum, connected to the output gear at the top through a concentric shaft. The two thick gears inside the differential body are part of its mechanism.

(Differential gear assemblies are also used for computation elsewhere in the CADC, since they perform addition or subtraction. Because most values in the CADC are expressed logarithmically, a differential computes multiplication or division when it adds or subtracts its inputs.)
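The arithmetic of combining the two motors can be sketched as follows. A spur-gear differential's body turns by the average of its two inputs (note 9 explains the factor of 2). The 10:1 reduction on the fine motor and the 2:1 step-up after the differential are hypothetical values chosen for illustration; the article does not give the CADC's actual gear ratios.

```python
def spur_differential(a_turns: float, b_turns: float) -> float:
    """Rotation of the differential body: the average of its two input rotations."""
    return (a_turns + b_turns) / 2.0


def combined_output(coarse_turns: float, fine_turns: float, fine_reduction: float = 10.0) -> float:
    """Output shaft rotation from both motors (hypothetical gear ratios).

    The fine motor is assumed to be geared down before the differential,
    and a 2:1 step-up after the differential cancels the factor of 2.
    """
    return 2.0 * spur_differential(coarse_turns, fine_turns / fine_reduction)


print(combined_output(5.0, 20.0))  # 5 turns coarse + 20 / 10 turns fine = 7.0
```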

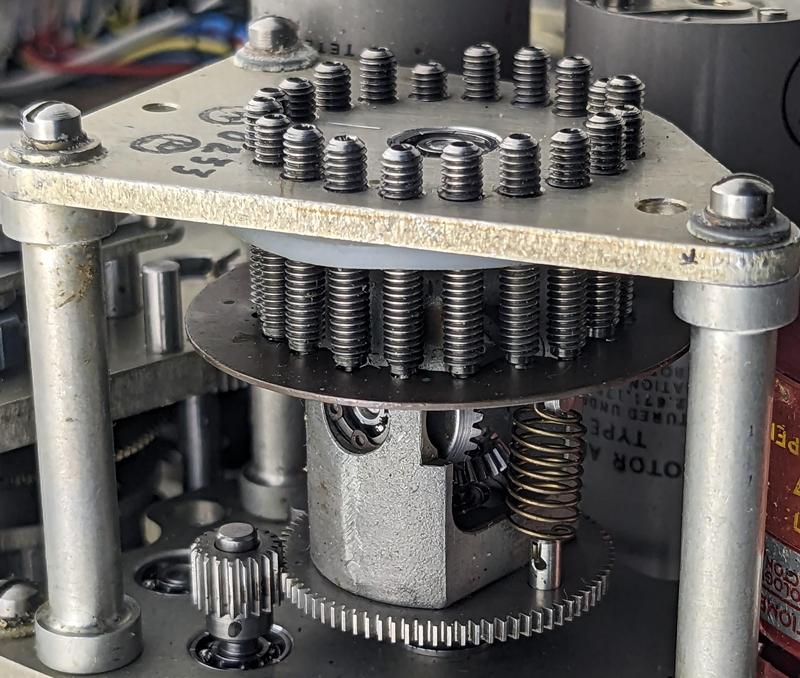

The CADC uses cams to correct for nonlinearities in the pressure sensors. The cam consists of a warped metal plate. As the gear rotates, a spring-loaded vertical follower moves according to the shape of the plate. The differential gear assembly under the plate adds this value to the original input to obtain a corrected value. (This differential implementation is different from the one described above.) The output from the cam is fed into the pressure sensor, closing the feedback loop.

At the top, 20 screws can be rotated to adjust the shape of the cam plate and thus the correction factor. These cams allow the CADC to be fine-tuned to maximize accuracy. According to the spec, the required accuracy for pressure was "40 feet or 0.15 percent of attained altitude, whichever is greater."
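A rough numerical model of the adjustable cam follows. It assumes the 20 screws set correction offsets at evenly spaced points across the input range and that the follower reads a straight line between neighboring screws; the real plate bends smoothly, so linear interpolation is only an approximation, and the function names are hypothetical. The last function simply encodes the quoted accuracy requirement.

```python
def cam_correction(x: float, offsets: list[float], x_min: float = 0.0, x_max: float = 1.0) -> float:
    """Piecewise-linear correction between evenly spaced screw settings."""
    n = len(offsets)
    if n < 2:
        raise ValueError("need at least two adjustment points")
    pos = (x - x_min) / (x_max - x_min) * (n - 1)
    pos = min(max(pos, 0.0), n - 1.0)  # clamp to the ends of the plate
    i = min(int(pos), n - 2)
    frac = pos - i
    return offsets[i] + frac * (offsets[i + 1] - offsets[i])


def corrected(x: float, offsets: list[float]) -> float:
    # The differential under the plate adds the follower's offset to the input.
    return x + cam_correction(x, offsets)


def altitude_tolerance_ft(altitude_ft: float) -> float:
    """Spec: 40 feet or 0.15 percent of attained altitude, whichever is greater."""
    return max(40.0, 0.0015 * altitude_ft)


screws = [i * 0.001 for i in range(20)]  # hypothetical screw settings
print(corrected(0.5, screws), altitude_tolerance_ft(50740.0))
```

Under this rule, the fixed 40-foot limit applies up to about 26,700 feet, and the percentage limit takes over above that.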

Conclusions

The Bendix CADC was built at an interesting point in time, when computations could be done digitally or by analog means, mechanically or electrically. Because both the inputs and the desired outputs were analog, the decision was made to use an analog computer for the CADC. Moreover, transistors were available but their performance was limited. Thus, the servo amplifiers are built from a combination of transistors and magnetic amplifiers.

Modern air data computers are digital, but they are still larger than you might expect because they need to handle physical pressure inputs. While a drone can use a tiny 5 mm MEMS pressure sensor, air data computers for aircraft have higher requirements and typically use larger vibrating cylinder pressure sensors. Even so, at 45 mm long, the modern pressure sensor is dramatically smaller than the CADC's pressure transducer with its metal-domed bellows sensor, three-board amplifier, motors, cam, and gear train. Although the mechanical Bendix CADC seems primitive, this CADC was used by the Air Force until the 1980s. I guess if the system worked, there was no reason to update it.

I plan to continue reverse-engineering the Bendix CADC,[10] so follow me on Twitter @kenshirriff or RSS for updates. I'm also on Mastodon as @oldbytes.space@kenshirriff. Thanks to Joe for providing the CADC. Thanks to Nancy Chen for obtaining a hard-to-find document for me. Marc Verdiell and Eric Schlaepfer are working on the CADC with me.

Notes and references

1. My previous posts on the CADC provide an overview and reverse-engineering of the left side. Much of the background in this article is copied from the previous articles, if it looks familiar.

2. The static air pressure can also be provided by holes in the side of the pitot tube. I couldn't find information indicating exactly how the planes with the CADC received static pressure.

3. Although the CADC's equations may seem ad hoc, they can be derived from fluid dynamics principles. These equations were standardized in the 1950s by various government organizations including the National Bureau of Standards and NACA (the precursor of NASA).

4. The CADC also uses cams to implement functions such as logarithms, exponentials, and complicated functions of one variable such as \( M/\sqrt{1 + 0.2 M^2} \). These cams have a completely different design from the corrector cams. The function cams are fixed shape, unlike the adjustable corrector cams. The function is encoded into the cam's shape during manufacturing, so implementing a hard-to-compute nonlinear function isn't a problem for the CADC. The photo below shows a cam with the follower arm in front. As the cam rotates, the follower moves in and out according to the cam's radius. The pressure transducers do not use fixed cams, so I won't discuss them more in this article.

   A cam inside the CADC implements a function.

5. The CADC also has an input for the "position error correction". This input provides a correction factor because the measured static pressure may not exactly match the real static pressure. The problem is that the static pressure is measured from a port on the aircraft. Distortions in the airflow may cause errors in this measurement. A separate box, the "compensator", determined the correction factor based on the angle of attack and fed it to the CADC as a synchro signal. The position error correction is applied in a separate section of the CADC, downstream from the transducers, so I will ignore it for this article.

6. A bit more explanation of the transistor circuit driving the magnetic amplifier. The idea is that one magnetic amplifier or the other is selected, depending on the phase of the error signal, causing the motor to turn counterclockwise or clockwise as needed. To implement this, the magnetic amplifier control windings are connected to opposite phases of the 400 Hz power. The transistor is connected to both magnetic amplifiers through diodes, so current will flow only if the transistor pulls the winding low during the half-cycle that the winding is powered high. Thus, depending on the phase of the transistor output, one winding or the other will be powered, allowing that magnetic amplifier to pass AC to the motor.

7. According to the specification, the CADC has simulated "low point" and "high point" test conditions. The low point is 11,806 feet altitude, 1064 ft/sec true airspeed, Mach 0.994, total temperature 317.1 K, and density × speed of sound of 1.774 lb·sec/ft³. The high point is 50,740 feet altitude, 1917 ft/sec true airspeed, Mach 1.980, total temperature 366.6 K, and density × speed of sound of 0.338 lb·sec/ft³.

8. The motor part number is Bendix FV101-5A1.

9. Strictly speaking, the output of the differential is the sum of the inputs divided by two. I'm ignoring the factor of 2 because the gear ratios can easily cancel it out. It's also arbitrary whether you think of the differential as adding or subtracting, since it depends on which rotation direction is defined as positive.

10. It was very difficult to find information about the CADC. The official military specification is MIL-C-25653C(USAF). After searching everywhere, I was finally able to get a copy from the Technical Reports & Standards unit of the Library of Congress. The other useful document was in an obscure conference proceedings from 1958: "Air Data Computer Mechanization" (Hazen), Symposium on the USAF Flight Control Data Integration Program, Wright Air Dev Center US Air Force, Feb 3-4, 1958, pp 171-194.