This article looks inside the 3101 chip and explains how it works. I received two 3101 chips from Evan Wasserman and used a microscope to take photos of the tiny silicon die inside.4 Around the outside of the die, sixteen black bond wires connect pads on the die to the chip's external pins. The die itself consists of silicon circuitry connected by a metal layer on top, which appears golden in the photo. The thick metal lines through the middle of the chip power the chip. The silicon circuitry has a grayish-purple color, but it is largely covered by the metal layer. Most of the chip contains a repeated pattern: this is the 16x4 array of storage cells. In the upper left corner of the chip, the digits "3101" in metal identify the chip, but "Intel" is not to be found.

Overview of the chip

The 3101 chip is controlled through its 16 external pins. To select one of the chip's 16 words of memory, the address in binary is fed into the chip through the four address pins (A0 to A3). Memory is written by providing the 4-bit value on the data input pins (D1 to D4). Four data output pins (O1 to O4) are used to read memory; these pins are inverted as indicated by the overbar. The chip has two control inputs. The chip select pin (CS) enables or disables the chip. The write enable pin (WE) selects between reading or writing the memory. The chip is powered with 5 volts across the Vcc and ground pins.
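The pin behavior described above can be sketched as a small behavioral model. This is only an illustration, not the chip's actual circuitry: the class name `Ram3101` and the `cycle` method are hypothetical, the write enable pin is assumed to be active low (the text above only says it selects between reading and writing), and returning `None` while the chip is deselected or writing is a modeling choice.

```python
class Ram3101:
    """Behavioral sketch of a 16-word x 4-bit RAM with inverted outputs."""

    WORDS = 16
    WIDTH_MASK = 0b1111

    def __init__(self):
        self.cells = [0] * self.WORDS

    def cycle(self, address, data_in=0, cs=0, we=1):
        """Apply one set of pin levels and return the 4-bit output, or None.

        cs: 0 selects the chip; 1 deselects it, so it neither reads nor writes.
        we: ASSUMED active low, so 0 means write and 1 means read.
        """
        if not 0 <= address < self.WORDS:
            raise ValueError("address must fit in four bits (A0 to A3)")
        if cs == 1:
            return None  # modeling choice: a deselected chip drives nothing
        if we == 0:
            self.cells[address] = data_in & self.WIDTH_MASK
            return None  # modeling choice: outputs are ignored during a write
        # Read: the output pins carry the complement of the stored word.
        return ~self.cells[address] & self.WIDTH_MASK


ram = Ram3101()
ram.cycle(address=5, data_in=0b1010, we=0)   # write 1010 to word 5
print(format(ram.cycle(address=5), "04b"))   # prints 0101, the inverted word
```

Because the outputs are inverted, a system using the chip would have to complement the value again (or store complemented data) to get back what it wrote.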

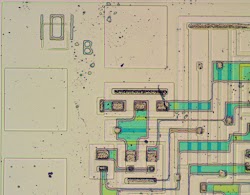

The diagram below shows how the key components of the 3101 are arranged on the die. The RAM storage cells are arranged as 16 rows of 4 bits. Each row stores a word, with bits D1 and D2 on the left and D3 and D4 on the right. The address decode logic in the middle selects which row of storage is active, based on the address signals coming from the address drivers at the top. At the bottom, the read/write drivers provide the interface between the storage cells and the data in and out pins.

Transistors

Transistors are the key components in a chip. The 3101 uses NPN bipolar transistors, different from the MOS transistors used in modern memory chips. The diagram below shows one of the transistors in the 3101 as it appears on the die. The slightly different tints in the silicon indicate regions that have been doped to form N and P type silicon with different semiconductor properties. The cross-section diagram illustrates the internal structure of the transistor. On top (black) are the metal contacts for the collector (C), emitter (E), and base (B). Underneath, the silicon has been doped to form the N and P regions that make up the transistor.

A key innovation of the 3101 was using Schottky transistors (details), which made the 3101 almost twice as fast as other memory chips.5 In the cross section, note that the base's metal contact touches both the P and N regions. You might think this shorts the two regions together, but instead a Schottky diode is formed where the metal contacts the N layer.6

The 3101 also used many multiple-emitter transistors. While multiple-emitter transistors may seem strange, they are common in bipolar integrated circuits, especially TTL logic chips. A multiple-emitter transistor simply has several emitter regions embedded in the base region. The die photo below shows one of these transistors with the collector on the left, followed by the base and two emitters.

Driving the data output pins requires larger, high-current transistors. The image below shows one of these transistors. The central rectangle is the base, surrounded by the C-shaped emitter in the middle and the large collector on the outside. Eight of these high-current transistors are also used to drive the internal address select lines.

Diodes

While examining the 3101 chip, I was surprised by the large number of diodes on the chip. Eventually I figured out that the chip used DTL (diode-transistor logic) for most of its logic rather than the TTL (transistor-transistor logic) that I was expecting. The diagram below shows one of the diodes on the chip. I believe the chip builds diodes using the standard technique of connecting an NPN transistor as a diode.

Resistors

The die photo below shows several resistors on the 3101 die. The long, narrow snaking regions of p-type silicon provide resistance. Resistors in integrated circuits are inconveniently large, but are heavily used in the 3101 for pull-up and pull-down resistors. At the right is a square resistor, which has low resistance because it is very wide.7 It is used to route a signal under the metal layer, rather than functioning as a resistor per se.

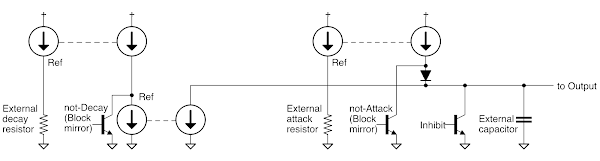

The static RAM cell

Now that I've explained the individual components of the chip, I'll explain how the circuitry is wired together for storage. The diagram below shows the cell for one bit of storage with the circuit diagram overlaid. Each cell consists of two multi-emitter transistors (outlined in red) and two resistors (at the top). The horizontal and vertical wiring connects cells together. This circuit forms a static RAM cell, basically a latch that can be in one of two states, storing one data bit.

Before explaining how this storage cell works, I'll explain a simpler latch circuit, below. This circuit has two transistors cross-connected so if one transistor is on, it forces the other off. In the diagram, the left transistor is on, which keeps the right transistor off, which keeps the left transistor on. Thus, the circuit will remain in this stable configuration. The opposite state—with the left transistor off and the right transistor on—is also stable. Thus, the latch has two stable configurations, allowing it to hold a 0 or a 1.
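The two stable states can be checked with a toy model. This is an idealized sketch, assuming each transistor is simply "on" or "off" and conducts exactly when the other one is off; the function name `settle` is hypothetical, and real transistors do not update in lockstep like this.

```python
def settle(left_on, right_on, max_steps=10):
    """Apply the cross-coupling rule until nothing changes.

    Returns the stable (left_on, right_on) pair, or None if the idealized
    model never settles within max_steps.
    """
    for _ in range(max_steps):
        # Each transistor conducts only while the other one is off.
        new_left, new_right = (not right_on), (not left_on)
        if (new_left, new_right) == (left_on, right_on):
            return left_on, right_on
        left_on, right_on = new_left, new_right
    return None


print(settle(True, False))   # (True, False): left on, right off is stable
print(settle(False, True))   # (False, True): the opposite state is stable too
print(settle(True, True))    # None: both-on is not a stable state in this model
```

In this model the both-on and both-off starting points just flip back and forth; a physical latch would instead fall into one of the two stable states because of small asymmetries, which the sketch does not capture.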

To make this circuit usable—so the bit can be read or modified—more complex transistors with two emitters are used. One emitter is used to select which cell to read or write, while the other emitter is used for the read or write data. This yields the schematic below, which matches the storage cell die photo diagram above.

Multiple storage cells are combined into a grid to form the memory. One word of memory consists of cells in the same row that share select lines. All the cells in a column store the same bit position; their data lines are tied together. (The bias line provides a voltage level to all cells in the memory.8)

Note that unlike the simplified cell, the circuit above doesn't have an explicit ground connection; to be powered, it requires a low input on either the select or data/bias lines. There are three cases of interest:

- Unselected: If the negative row select line is low, current flows out through the row select line. The data and bias lines are unaffected by this cell.

- Read: If the negative row select line is higher than the data and bias lines, current will flow out the data line if the left transistor is on, and out the bias line if the right transistor is on. Thus, the state of the cell can be read by examining the current on the data line.

- Write: If the negative row select line is higher and the data and bias lines have significantly different voltages, the transistor on the lower side will switch on, forcing the cell into a particular state. This allows a 0 or 1 to be written to the cell.

Thus, by carefully manipulating the voltages on the select lines, data lines and the bias line, one row of memory can be read or written, while the other cells hold their current value without influencing the data line. The storage cell and the associated read/write circuitry are essentially analog circuits rather than digital since the select, data, and bias voltages must be carefully controlled voltages rather than logic levels.
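The three cases can be summarized in a toy model that tracks only which line carries the cell's current. This is a rough sketch under several assumptions that are not in the article: the row select level is reduced to a boolean, the data line is taken to connect to the left transistor and the bias line to the right one (consistent with the read case above), and `WRITE_MARGIN` is a made-up threshold standing in for "significantly different voltages".

```python
from dataclasses import dataclass

WRITE_MARGIN = 0.5  # hypothetical threshold in volts, chosen for illustration


@dataclass
class Cell:
    left_on: bool  # True when the left transistor conducts

    def step(self, row_select_high, data_v, bias_v):
        """Return the line that carries the cell current: 'select', 'data' or 'bias'."""
        if not row_select_high:
            # Unselected: current leaves through the row select line and
            # the data and bias lines are unaffected.
            return "select"
        if abs(data_v - bias_v) >= WRITE_MARGIN:
            # Write: the transistor on the lower-voltage side switches on.
            self.left_on = data_v < bias_v
        # Read (or the result of the write): the conducting side sinks current.
        return "data" if self.left_on else "bias"


cell = Cell(left_on=True)
print(cell.step(False, 0.0, 0.0))  # select: the cell just holds its state
print(cell.step(True, 0.0, 0.0))   # data: read finds the left transistor on
print(cell.step(True, 1.0, 0.0))   # bias: write flips the cell to the right side
print(cell.step(True, 0.0, 0.0))   # bias: the new state is read back
```

The real circuit works with carefully controlled analog voltages, so the clean thresholds here should be read as a description of the intent rather than of the electrical behavior.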

The address decode logic

The address decode circuitry determines which row of memory cells is selected by the address lines.11 The interesting thing about this circuitry is that you can easily see how it works just by looking at the die photo. The address driver circuitry sends the four address signals along with their complements on eight metal traces through the chip. Each storage row has a four-emitter transistor. In each row you can see four black dots, which are the connections between emitters and address lines. A row will be selected if all the emitter inputs are high.9 A dot on an address line (e.g. A0) will "match" a 1, while a dot on the complemented address line (e.g. the complement of A0) will match a 0, so each row matches a unique four-bit address. In the die photo below, you can see the decoding logic counting down in binary for rows 15 down to 11;10 the remainder of the circuit follows the same pattern.
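The dot-matching scheme can be written out as a short sketch. The function names and the `/A0` notation for a complemented line are my own labels for illustration; the chip-select behavior (forcing both A0 and its complement low) follows the note on chip select, and the physical ordering of the address lines on the die is ignored.

```python
ADDRESS_BITS = 4
ROWS = 16


def dot_pattern(row):
    """For each address bit, name the trace that carries this row's dot."""
    return [f"A{b}" if (row >> b) & 1 else f"/A{b}" for b in range(ADDRESS_BITS)]


def line_levels(address, chip_selected=True):
    """Levels on the eight traces: each address signal plus its complement."""
    levels = {}
    for b in range(ADDRESS_BITS):
        bit = (address >> b) & 1
        levels[f"A{b}"] = bit
        levels[f"/A{b}"] = 1 - bit
    if not chip_selected:
        # Deselected chip: A0 and its complement are both forced low.
        levels["A0"] = levels["/A0"] = 0
    return levels


def selected_rows(address, chip_selected=True):
    """A row is selected only if all four of its emitter inputs are high."""
    levels = line_levels(address, chip_selected)
    return [row for row in range(ROWS)
            if all(levels[name] for name in dot_pattern(row))]


print(dot_pattern(11))           # ['A0', 'A1', '/A2', 'A3']
print(selected_rows(11))         # [11]
print(selected_rows(11, False))  # []
```

Since every row has a dot on either A0 or its complement, forcing both low guarantees that no row can match, which is why deselecting the chip needs no extra logic in the rows themselves.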

Some systems that used the 3101

The 64-bit storage capacity of the 3101 was too small for a system's main memory, but the chip had a role in many minicomputers. For example, the Burroughs D Machine was a military computer (and the source of the chips I examined). It used core memory for its main storage, but a board full of 3101 chips provided high-speed storage for its microcode. The Xerox Alto used four 3101 chips to provide 16 high-speed registers for the CPU, while the main memory used slower DRAM chips. Interdata used 3101 chips in many of its 16- and 32-bit minicomputers up until the 1980s.12

The 3101 was also used in smaller systems. The Diablo 8233 terminal used them as RAM.13 The Datapoint 2200 was a "programmable terminal" that held its processor stack in fast 3101 chips rather than the slow main memory which was built from Intel 1405 shift registers.

How I created the die photos

To get the die photos, I started with two chips that I received thanks to Evan Wasserman and John Culver. The pins on the chips had been crushed in the mail, but this didn't affect the die photos. The chips had two different lot numbers that indicate they were manufactured a few months apart. Strangely, the metal lids on the chips were different sizes and the dies were slightly different. For more information, see the CPU Shack writeup of the 3101.

Popping the metal lid off the chips was easy—just a tap with a hammer and chisel. This revealed the die inside.

Using a metallurgical microscope and Hugin stitching software (details), I stitched together multiple microscope photos to create an image of the die. The metal layer is clearly visible, but it obscures the silicon underneath, making it hard to determine the chip's circuitry. The photo below shows a closeup of the die showing the "3101" part number.

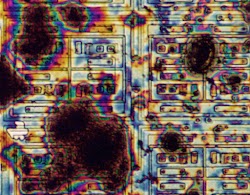

I applied acid14 to remove the metal layer. This removed most of the metal, revealing the silicon circuitry underneath. Some of the metal is still visible, but thinner, appearing transparent green. Strangely, the number 3101 turned into 101; apparently the first digit wasn't as protected by oxide as the other digits.

Below is the complete die photo of the chip with the metal layer partially stripped off. This die photo was most useful for analyzing the chip. Enough of the metal was removed to clearly show the silicon circuits, but the remaining traces of metal showed most of the wiring. The N+ silicon regions appear to have darkened in this etch cycle.

I wanted to see how the chip looked with the metal entirely removed so I did a second etch cycle. Unfortunately, this left the die looking like it had been destroyed.

I performed a third etch cycle. It turns out that the previous etch hadn't destroyed the die, but just left a thin layer of oxide that caused colored interference bands. The final etch removed the remaining oxide, leaving a nice, clean die. Only a ghost of the "101" number is visible. The contacts between the metal layer and the silicon remained after the etch; they may be a different type of metal that didn't dissolve.

Below is the full die photo with all the metal stripped off.

Conclusion

The 3101 RAM chip illustrates the amazing improvements in integrated circuits driven by Moore's Law.15 While the 3101 originally cost $99.50 for 64 bits, you can now buy 16 gigabytes of RAM for that price, two billion times as much storage. If you built a 16 GB memory from two billion 3101 chips, the chips alone would weigh about 3000 tons and use over a billion watts, half of Hoover Dam's power. A modern 16GB DRAM module, in comparison, uses only about 5 watts.
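The chip count behind this comparison is easy to reproduce. The sketch below assumes "16 gigabytes" means 16 GiB (2^34 bytes); the per-chip power figure is not a datasheet value but is simply backed out from the "billion watts" total quoted above.

```python
BITS_PER_3101 = 64
PRICE_3101_USD = 99.50

module_bits = 16 * 2**30 * 8                  # 16 GB, taken here as 16 GiB
chips_needed = module_bits // BITS_PER_3101   # 3101 chips for the same capacity
price_per_bit = PRICE_3101_USD / BITS_PER_3101

# Implied by "over a billion watts": a lower bound on power per chip.
implied_watts_per_chip = 1e9 / chips_needed

print(chips_needed)                      # 2147483648, about two billion
print(round(price_per_bit, 2))           # 1.55 dollars per bit
print(round(implied_watts_per_chip, 2))  # 0.47
```

The same ratio of about two billion applies to storage per dollar, since both the 3101 and the 16 GB of modern RAM are priced at roughly $99.50.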

As for Intel, the 3101 RAM was soon followed by many other memory products with rapidly increasing capacity, making Intel primarily a memory company that also produced processors. However, facing strong competition from Japanese memory manufacturers, Intel changed its focus to microprocessors and abandoned the DRAM business in 1985.16 By 1992, the success of the x86 processor line had made Intel the largest chip maker, justifying this decision. Even though Intel is now viewed as a processor company, it was the humble 3101 memory chip that gave Intel its start.

Thanks to Evan Wasserman and John Culver for sending me the chips. John also did a writeup of the 3101 chip, which you can read at CPU Shack.

Notes and references

-

You might wonder why Intel's first chip had the seemingly-arbitrary number 3101. Intel had a highly-structured naming system. A 3xxx part number indicated a bipolar product. A 1 for the second digit indicated RAM, while the last two digits (01) were a sequence number. Fortunately, the marketing department stepped in and gave the 4004 and 8008 processors better names. ↩

-

Memory chips started out very expensive, but prices rapidly dropped. Computer Design Volume 9 page 28, 1970, announced a price drop of the 3101 from $99.50 to $40 in small volumes. Ironically, the Intel 3101 is now a collector's item and on eBay costs much more than the original price—hundreds of dollars for the right package. ↩

-

Several sources say that the 3101 was the first solid state memory, but this isn't accurate. There were many companies making memory chips in the 1960s. For instance, Texas Instruments announced the 16-bit SN5481 bipolar memory chip in 1966 (Electronics, V39 #1, p151) and Transitron had the TMC 3162 and 3164 16-bit RAM (Electrical Design News, Volume 11, p14). In 1968, RCA made 72-bit and 288-bit CMOS memories for the Air Force (document, photo). Lee Boysel built 256-bit dynamic RAMs at Fairchild in 1968 and 1K dynamic RAMs at Four Phase Systems in 1969 (timeline and Boysel presentation). For more information on the history of memory technology, see timeline and History of Semiconductor Engineering, p215. Another source for memory history is To the Digital Age, p193. ↩

-

From my measurements, the 3101 die is about 2.39mm by 3.65mm. Feature size is about 12µm. ↩

-

If you've used TTL chips, you probably used the 74LSxx family. The "S" stands for the Schottky transistors that make these chips fast. These chips were "the single most profitable product line in the history of Texas Instruments" (ref). ↩

-

The Schottky diode in the Schottky transistor is formed between the base and collector. This diode prevents the transistor from becoming saturated, allowing it to switch faster. ↩

-

The resistance of an IC resistor is proportional to the length divided by the width. The sheet resistance of a material is measured in the unusual unit of ohms per square. You might think it should be per square nanometer or square mm or something, but since the resistance depends on the ratio of length to width, the unit cancels out. ↩

-

The bias line is shared by all the cells. For reading, it is set to a low voltage. For writing, it is set to an intermediate voltage: higher than the data 0 voltage, but lower than the data 1 voltage. The bias voltage is controlled by the write enable pin.

More advanced chips use two data lines instead of a bias line for more sensitivity. A differential amplifier compares the currents on the two data lines to distinguish the tiny change between a zero bit and a one bit. However, the 3101 uses such high currents internally that this isn't necessary; it can read the data line directly. ↩

-

If my analysis is correct, when a row is selected, the address decode logic raises both the positive row select and negative row select lines by about 0.8 volts (one diode drop). Thus, the cell is still powered by the same voltage differential, but the voltage shift makes the data and bias lines active. ↩

-

Address lines A3 and A2 are reversed in the decoding logic, presumably because it made chip layout simpler. This has no effect on the operation of the chip since it doesn't matter if the physical word order matches the binary order. ↩

-

The 3101 has a chip select pin that makes it easy to combine multiple chips into a larger memory. If this pin is high, the chip will not read or write its contents. How the 3101 implements this feature is a bit surprising. The chip select signal is fed into the address decoding circuit; if the chip is not selected, both A0 and its complement are forced low. Thus, none of the rows will match in the address decoding logic and the chip doesn't respond.

One strange thing about the address decoding logic is that each pair of address lines is driven by a NAND gate latch. There's no actual latching happening, so I don't understand why this circuit is used. ↩

-

The Interdata 7/32 (the first 32-bit minicomputer) used 3101 chips in its memory controller. (See the maintenance manual page 338.) The Interdata 16/HSALU used 3101 chips for its CPU registers. (See the maintenance manual page 259.) As late as 1982, the Interdata 3210 used 3101 chips to hold cache tags (see manual page 456). On the schematics note that part number 19-075 indicates the 3101. ↩

-

The Diablo 8233 terminal used 3101A (74S289) chips as RAM for its discrete TTL-based processor (which was more of a microcontroller) that controlled the printer. (See maintenance manual page 187.) This system was unusual since it contained both an 8080 microprocessor and a TTL-based processor. ↩

-

The metal layer of the chip is protected by a silicon dioxide passivation layer. The professional way to remove this layer is with dangerous hydrofluoric acid. Instead, I used Armour Etch glass etching cream, which is slightly safer and can be obtained at craft stores. I applied the etching cream to the die and wiped it for four minutes with a Q-tip. (Since the cream is designed for frosting glass, it only etches in spots. It must be moved around to obtain a uniform etch.) Next, I applied a few drops of hydrochloric acid (pool acid from the hardware store) to the die for a few hours. ↩

-

Moore's law not only describes the exponential growth in transistors per chip, but drives this growth. The semiconductor industry sets its roadmap according to Moore's law, making it in some sense a self-fulfilling prophecy. See chapter 8 of Technological Innovation in the Semiconductor Industry for a thorough discussion. ↩

-

Intel's 1985 Annual Report says "It was a miserable year for Intel" and discusses the decision to leave the DRAM business. ↩