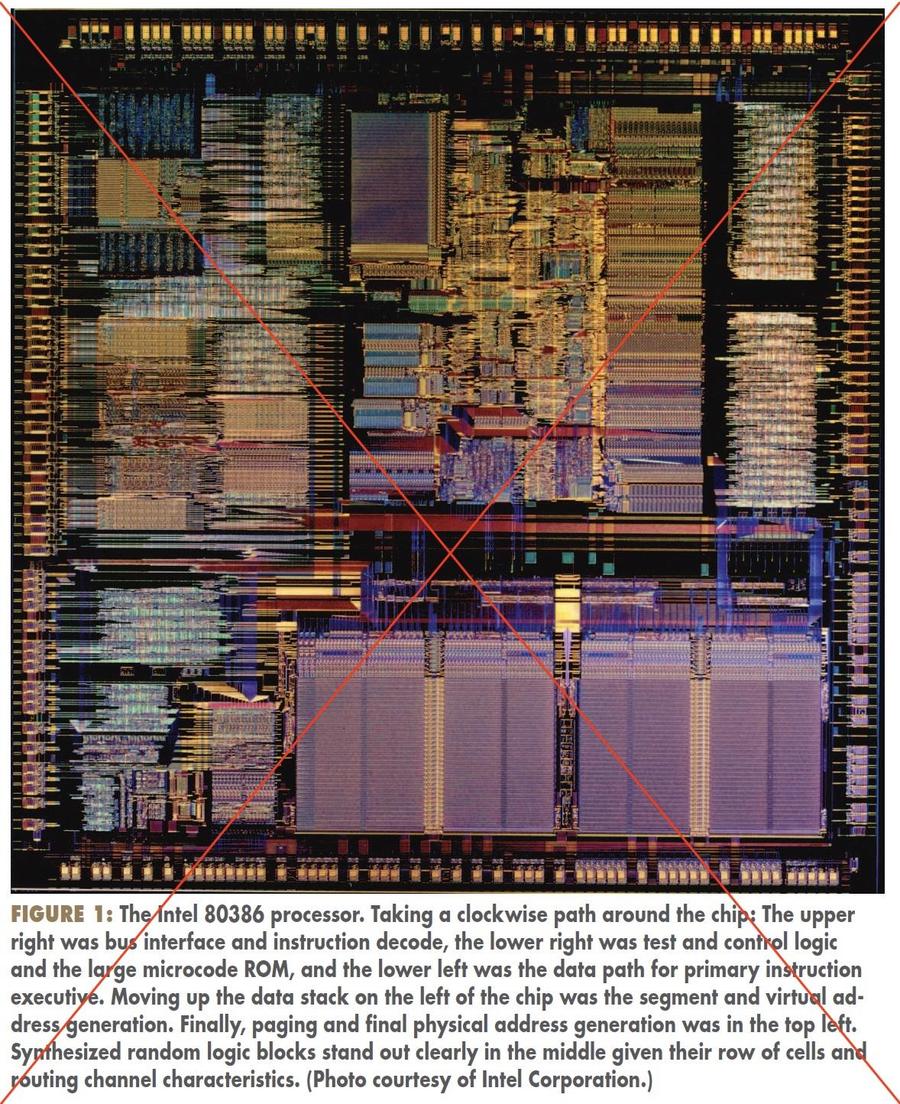

In this blog post, I look at die photos of the Intel 386 processor and explain what they reveal about the history of the processor, such as the move from the 1.5 µm process to the 1 µm process. You might expect that Intel simply made the same 386 chip at a smaller scale, but there were substantial changes to the chip's layout, even some visible to the naked eye.2 I also look at why the 386 SL had over three times as many transistors as the other 386 versions.3

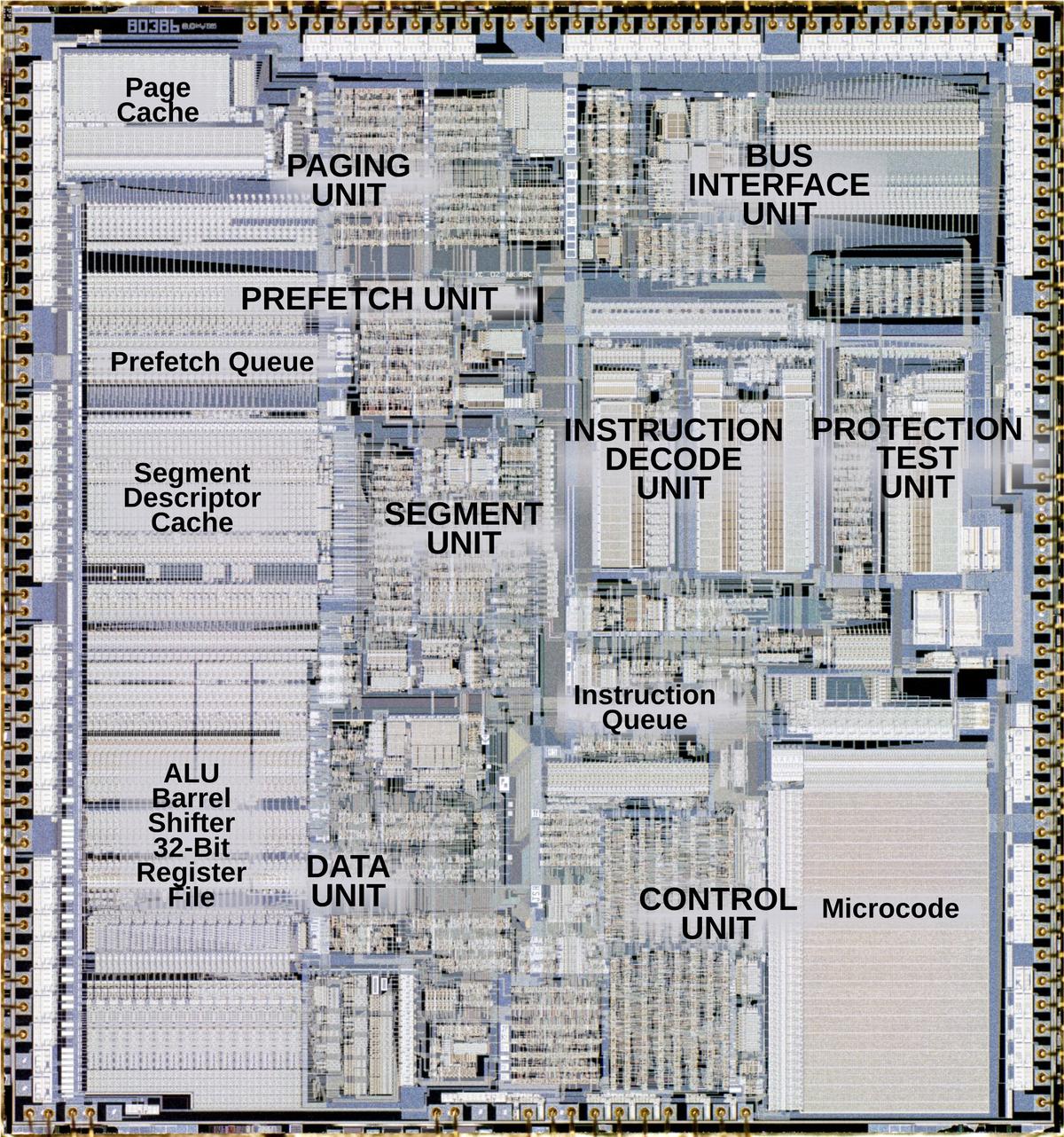

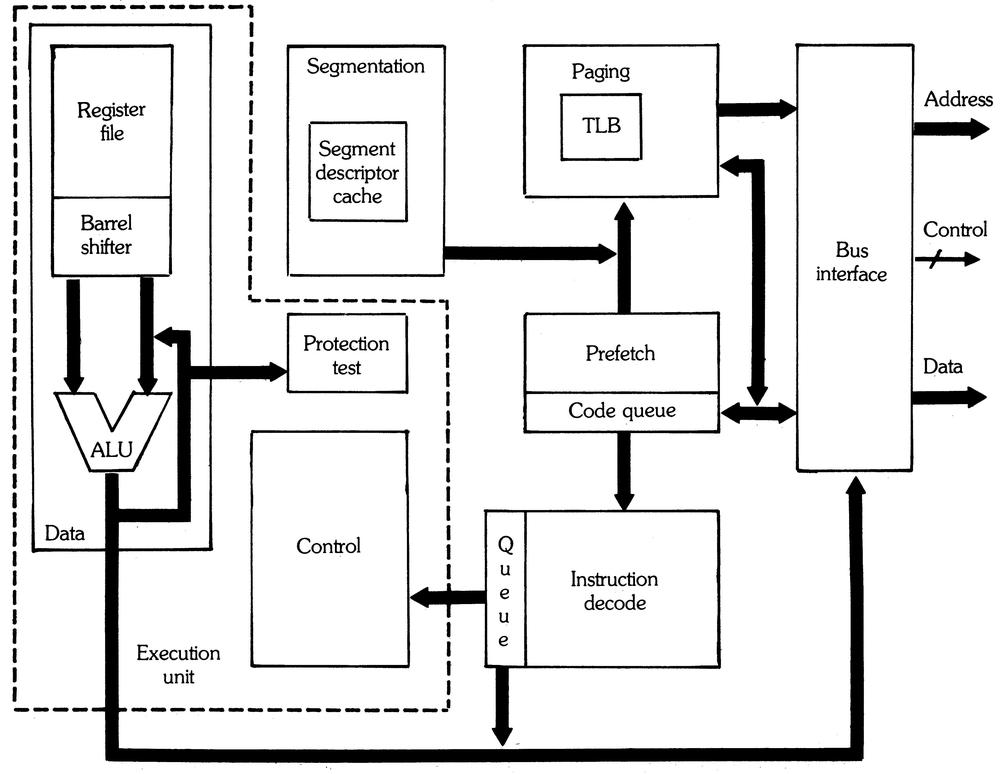

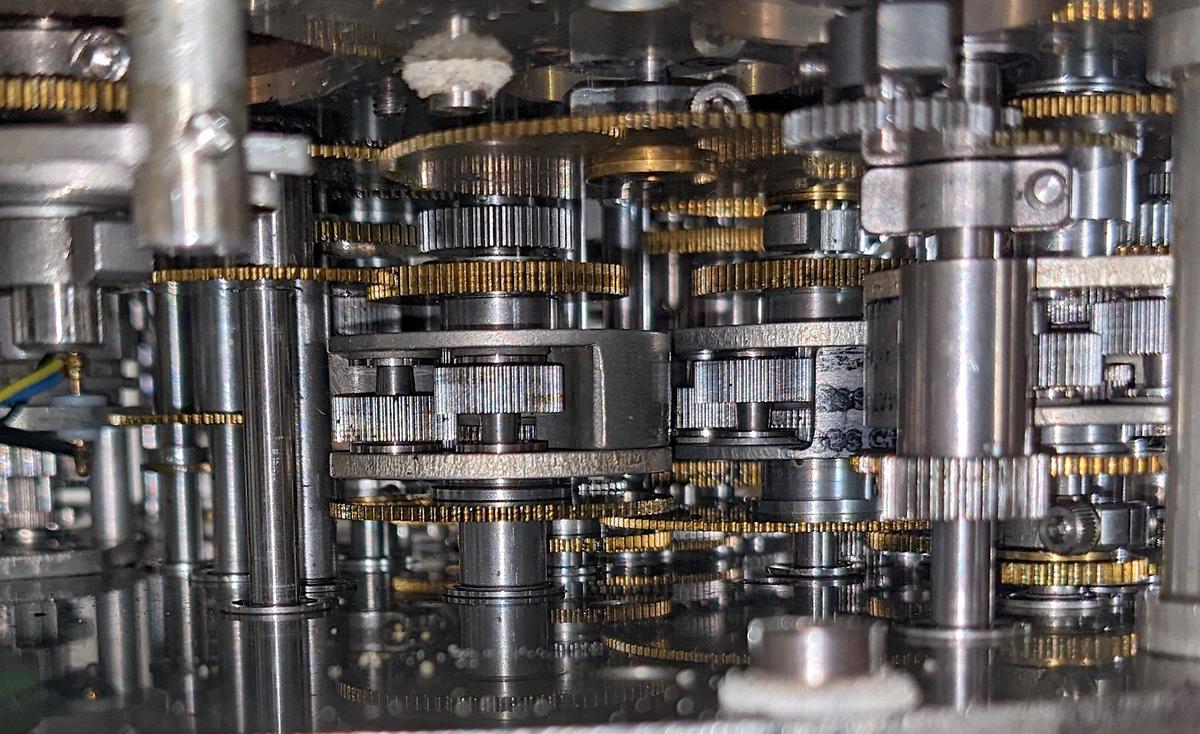

The 80386 was a major advancement over the 286: it implemented a 32-bit architecture, added more instructions, and supported 4-gigabyte segments. The 386 is a complicated processor (by 1980s standards), with 285,000 transistors, ten times the number of the original 8086.4 The 386 has eight logical units that are pipelined5 and operate mostly autonomously.6 The diagram below shows the internal structure of the 386.7

The heart of a processor is the datapath, the components that hold and process data. In the 386, these components are in the lower left: the ALU (Arithmetic/Logic Unit), a barrel shifter to shift data, and the registers. These components form regular rectangular blocks, 32 bits wide. The datapath, along with the circuitry to the left that manages it, forms the Data Unit. In the lower right is the microcode ROM, which breaks down machine instructions into micro-instructions, the low-level steps of the instruction. The microcode ROM, along with the microcode engine circuitry, forms the Control Unit.

The 386 has a complicated instruction format. The Instruction Decode Unit breaks apart an instruction into its component parts and generates a pointer to the microcode that implements the instruction. The instruction queue holds three decoded instructions. To improve performance, the Prefetch Unit reads instructions from memory before they are needed, and stores them in the 16-byte prefetch queue.8

The 386 implements segmented memory and virtual memory, with access protection.9 The Memory Management Unit consists of the Segment Unit and the Paging Unit: the Segment Unit translates a logical address to a linear address, while the Paging Unit translates the linear address to a physical address. The segment descriptor cache and page cache (TLB) hold data about segments and pages; the 386 has no on-chip instruction or data cache.10 The Bus Interface Unit in the upper right handles communication between the 386 and the external memory and devices.
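The two translation steps can be sketched in a few lines of Python. This is a simplified model, not the 386's actual circuitry: the function names and the dictionary-based page tables are hypothetical, protection checks are reduced to a single limit test, and the 10/10/12-bit split of the linear address assumes the 386's standard 4 KB pages.

```python
PAGE_OFFSET_BITS = 12   # 4 KB pages
INDEX_BITS = 10         # 1024 entries per directory and per page table


def segment_translate(base, limit, offset):
    """Segment Unit (simplified): logical address -> 32-bit linear address.

    Assumption: one limit check stands in for the full protection logic.
    """
    if offset > limit:
        raise ValueError("segment limit violation")
    return (base + offset) & 0xFFFFFFFF


def split_linear(linear):
    """Split a linear address into (directory index, table index, offset)."""
    directory = (linear >> (PAGE_OFFSET_BITS + INDEX_BITS)) & 0x3FF
    table = (linear >> PAGE_OFFSET_BITS) & 0x3FF
    offset = linear & 0xFFF
    return directory, table, offset


def page_translate(page_directory, linear):
    """Paging Unit (simplified): linear address -> physical address.

    page_directory is a hypothetical dict of dicts mapping
    directory index -> table index -> physical frame number.
    A missing entry raises KeyError, standing in for a page fault.
    """
    directory, table, offset = split_linear(linear)
    frame = page_directory[directory][table]
    return (frame << PAGE_OFFSET_BITS) | offset


# Usage: a segment based at 1 MB, with one page mapped to frame 0xABC.
linear = segment_translate(base=0x00100000, limit=0xFFFF, offset=0x1234)
tables = {0: {0x101: 0xABC}}
physical = page_translate(tables, linear)
print(hex(linear), hex(physical))
```

In the real chip, the segment descriptor cache and the TLB exist so that these lookups usually don't require extra memory accesses; the dictionaries above play no such caching role.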

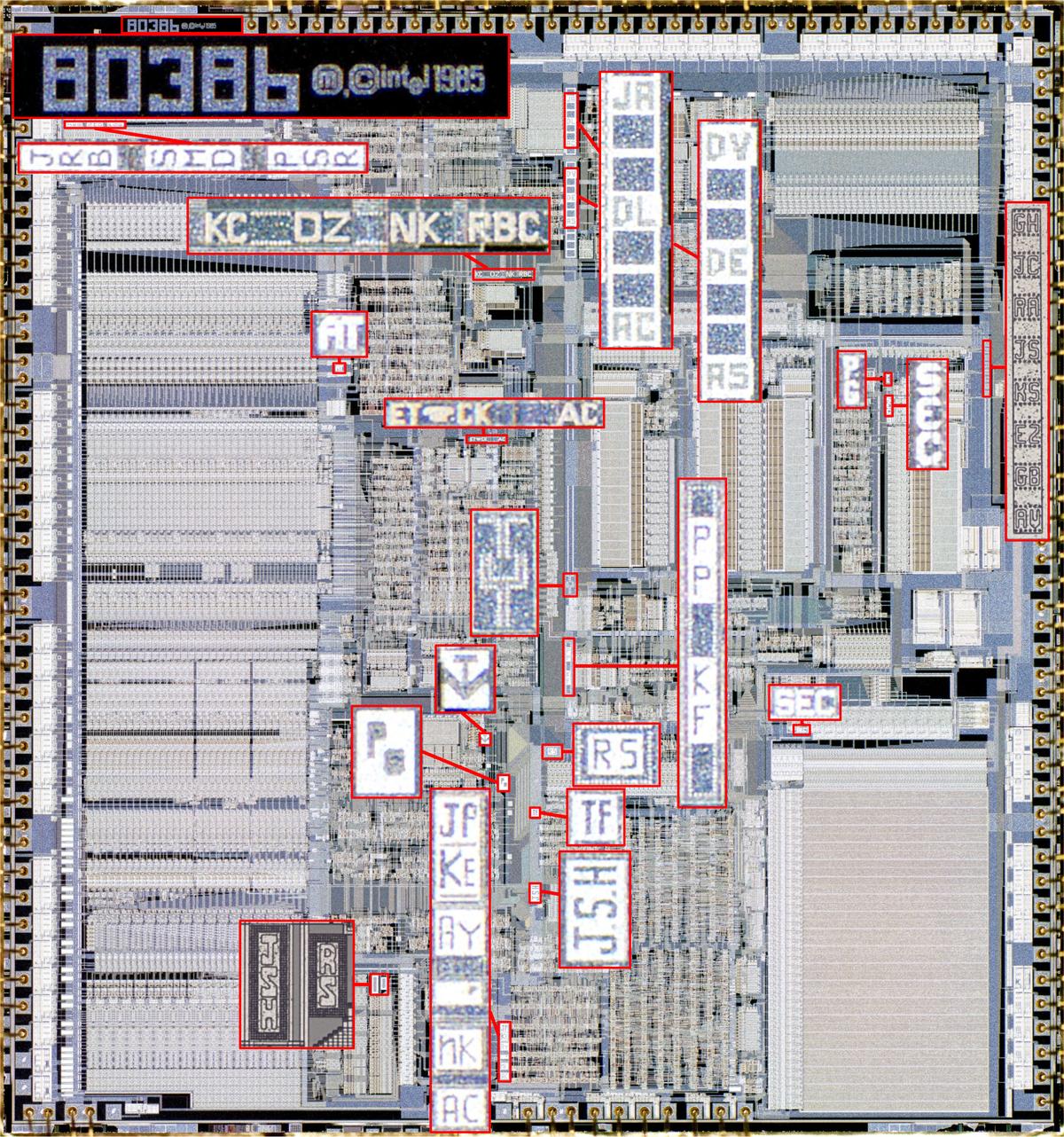

Silicon dies are often labeled with the initials of the designers. The 386 DX, however, has an unusually large number of initials. In the image below, I have enlarged the tiny initials so they are visible. I think the designers put their initials next to the unit they worked on, but I haven't been able to identify most of the names.11

The shrink from 1.5 µm to 1 µm

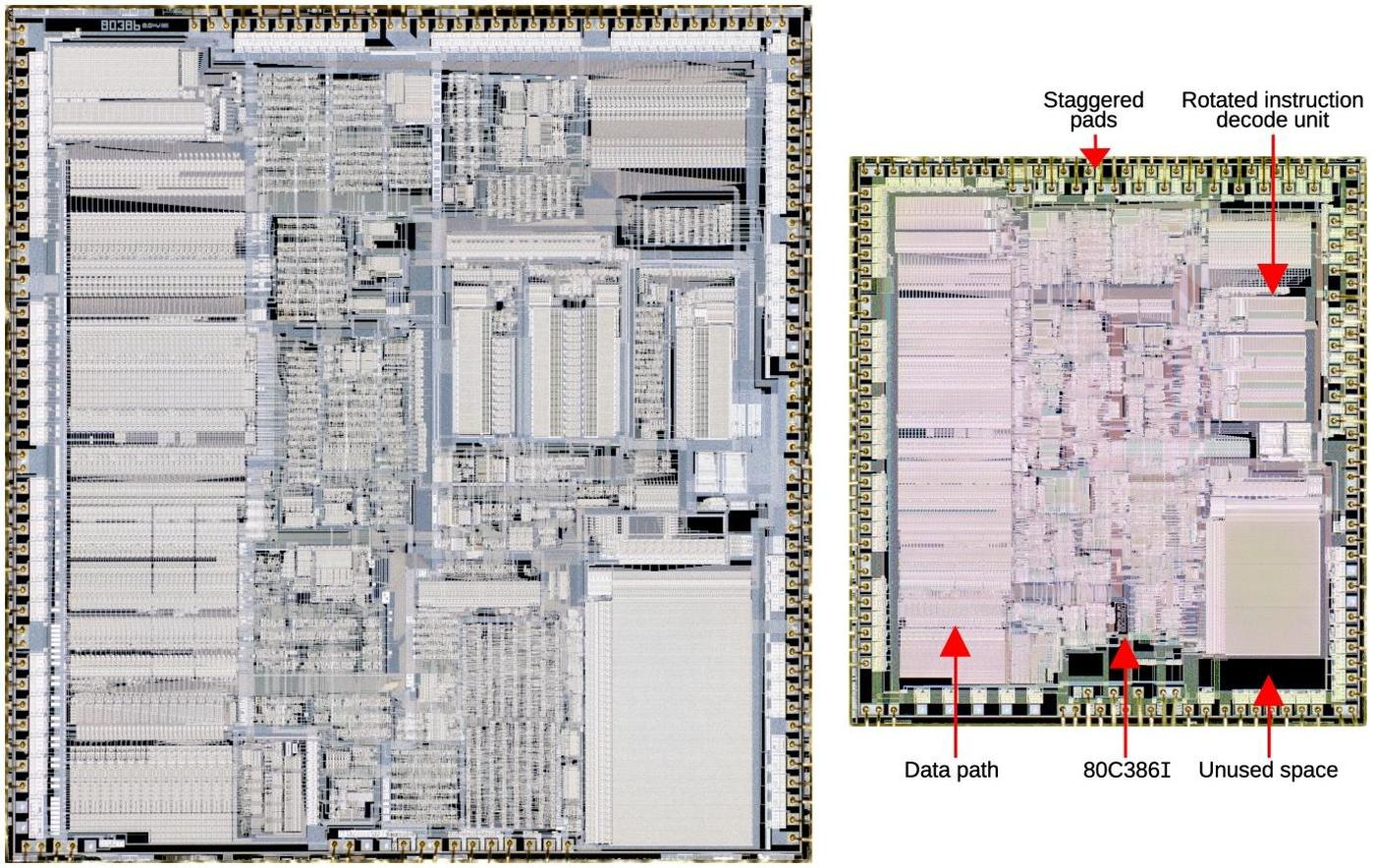

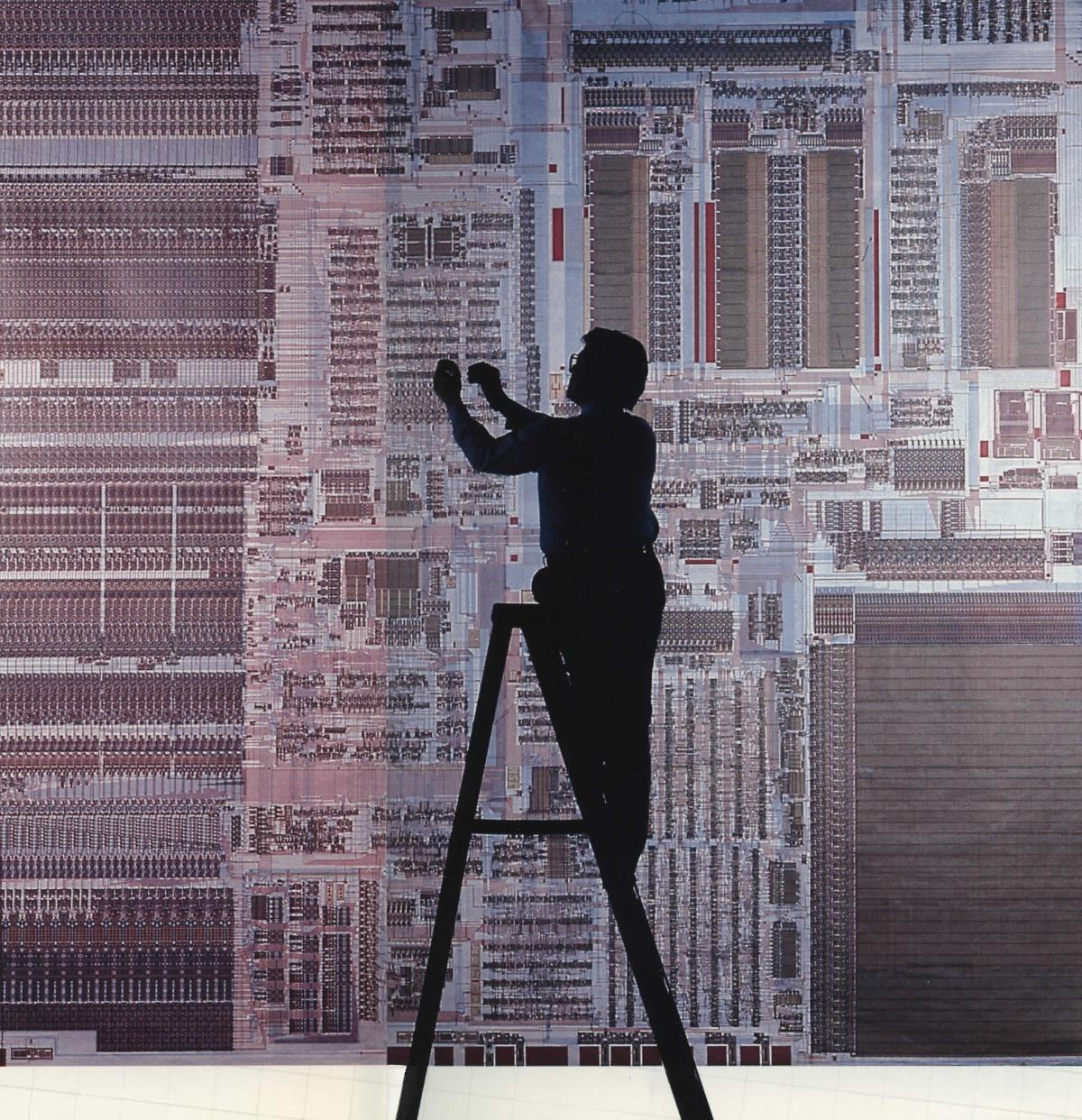

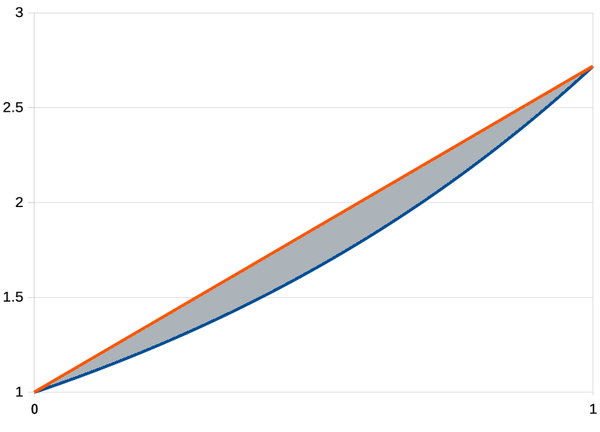

The original 386 was built on a process called CHMOS-III that had 1.5 µm features (specifically the gate channel length for a transistor). Around 1987, Intel moved to an improved process called CHMOS-IV, with 1 µm features, permitting a considerably smaller die for the 386. However, shrinking the layout wasn't a simple mechanical process. Instead, many changes were made to the chip, as shown in the comparison diagram below. Most visibly, the Instruction Decode Unit and the Protection Unit in the center-right are horizontal in the smaller die, rather than vertical. The standard-cell logic (discussed later) is considerably more dense, probably due to improved layout algorithms. The data path (left) was highly optimized in the original so it remained essentially unchanged, but smaller. One complication is that the bond pads around the border needed to remain the same size so bond wires could be attached. To fit the pads around the smaller die, many of the pads are staggered. Because different parts of the die shrank differently, the blocks no longer fit together as compactly, creating wasted space at the bottom of the die. For some reason, the numerous initials on the original 386 die were removed. Finally, the new die was labeled 80C386I with a copyright date of 1985, 1987; it is unclear what "C" and "I" indicate.

The change from 1.5 µm to 1 µm may not sound significant, but it reduced the die size by 60%. This allowed more dies on a wafer, substantially dropping the manufacturing cost.12 The strategy of shrinking a processor to a new process before designing a new microarchitecture for the process became Intel's tick-tock strategy.
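The arithmetic behind these figures can be checked with a short sketch. It uses the die dimensions and wafer size given in the notes (roughly 10 mm × 10 mm before the shrink, 6 mm × 6.5 mm after, on a 150 mm wafer). The dies-per-wafer formula is a common textbook approximation that I am assuming here; it ignores edge exclusion, scribe lanes, and yield, so it will likely come out higher than the counts quoted in the notes, although the ratio between the two dies is similar.

```python
import math


def area_reduction(old_area_mm2, new_area_mm2):
    """Fraction by which the die area shrank."""
    return 1 - new_area_mm2 / old_area_mm2


def ideal_area_scale(old_feature_um, new_feature_um):
    """Area ratio if every dimension scaled with the feature size."""
    return (new_feature_um / old_feature_um) ** 2


def gross_dies_per_wafer(wafer_diameter_mm, die_w_mm, die_h_mm):
    """Approximate die count: wafer area / die area, minus an edge-loss term.

    Assumption: simple textbook estimate, no scribe lanes or edge exclusion.
    """
    die_area = die_w_mm * die_h_mm
    radius = wafer_diameter_mm / 2
    whole = math.pi * radius ** 2 / die_area
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area)
    return int(whole - edge_loss)


old_dies = gross_dies_per_wafer(150, 10, 10)
new_dies = gross_dies_per_wafer(150, 6, 6.5)
print(f"area reduction: {area_reduction(10 * 10, 6 * 6.5):.0%}")
print(f"ideal scaled area: {ideal_area_scale(1.5, 1.0):.0%} of original")
print(f"dies per wafer: {old_dies} -> {new_dies} ({new_dies / old_dies:.1f}x)")
```

A perfect linear shrink from 1.5 µm to 1 µm would leave about 44% of the original area, while the measured die keeps about 39%, so the layout changes described above mattered as well as the process change.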

The 386 SX

In 1988, Intel introduced the 386 SX processor, the low-cost version of the 386, with a 16-bit bus instead of a 32-bit bus. (This is reminiscent of the 8088 processor with an 8-bit bus versus the 8086 processor with a 16-bit bus.) According to the 386 oral history, the cost of the original 386 die decreased to the point where the chip's package cost about as much as the die. By reducing the number of pins, the 386 SX could be put in a one-dollar plastic package and sold for a considerably reduced price. The SX allowed Intel to segment the market, moving low-end customers from the 286 to the 386 SX, while preserving the higher sales price of the original 386, now called the DX.13 In 1988, Intel sold the 386 SX for $219, at least $100 less than the 386 DX. A complete SX computer could be $1000 cheaper than a similar DX model.

For compatibility with older 16-bit peripherals, the original 386 was designed to support a mixture of 16-bit and 32-bit buses, dynamically switching on a cycle-by-cycle basis if needed. Because 16-bit support was built into the 386, the 386 SX didn't require much design work. (Unlike the 8088, which required a redesign of the 8086's bus interface unit.)
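To illustrate why a narrower bus costs performance, here is a minimal sketch that splits one memory access into bus cycles. It assumes each bus cycle moves at most one naturally aligned bus-width unit, in ascending address order; the function name is hypothetical and the model ignores the actual bus signals and timing.

```python
def split_transfer(address, size_bytes, bus_width_bytes):
    """Split an access into per-cycle (address, byte count) chunks.

    Assumption: one bus cycle can move at most one aligned unit of
    bus_width_bytes, so an access touching several units needs several cycles.
    """
    chunks = []
    end = address + size_bytes
    while address < end:
        # End of the aligned bus-width unit that contains this address.
        unit_end = (address // bus_width_bytes + 1) * bus_width_bytes
        chunk_end = min(end, unit_end)
        chunks.append((address, chunk_end - address))
        address = chunk_end
    return chunks


# An aligned 32-bit access: one cycle on a 32-bit bus, two on a 16-bit bus.
print(len(split_transfer(0x1000, 4, 4)))
print(len(split_transfer(0x1000, 4, 2)))
# A misaligned 32-bit access on a 16-bit bus touches three 16-bit units.
print(split_transfer(0x1001, 4, 2))
```

Under this model, the 16-bit bus doubles the number of cycles for aligned 32-bit data, which is the basic trade-off the 386 SX accepted in exchange for fewer pins.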

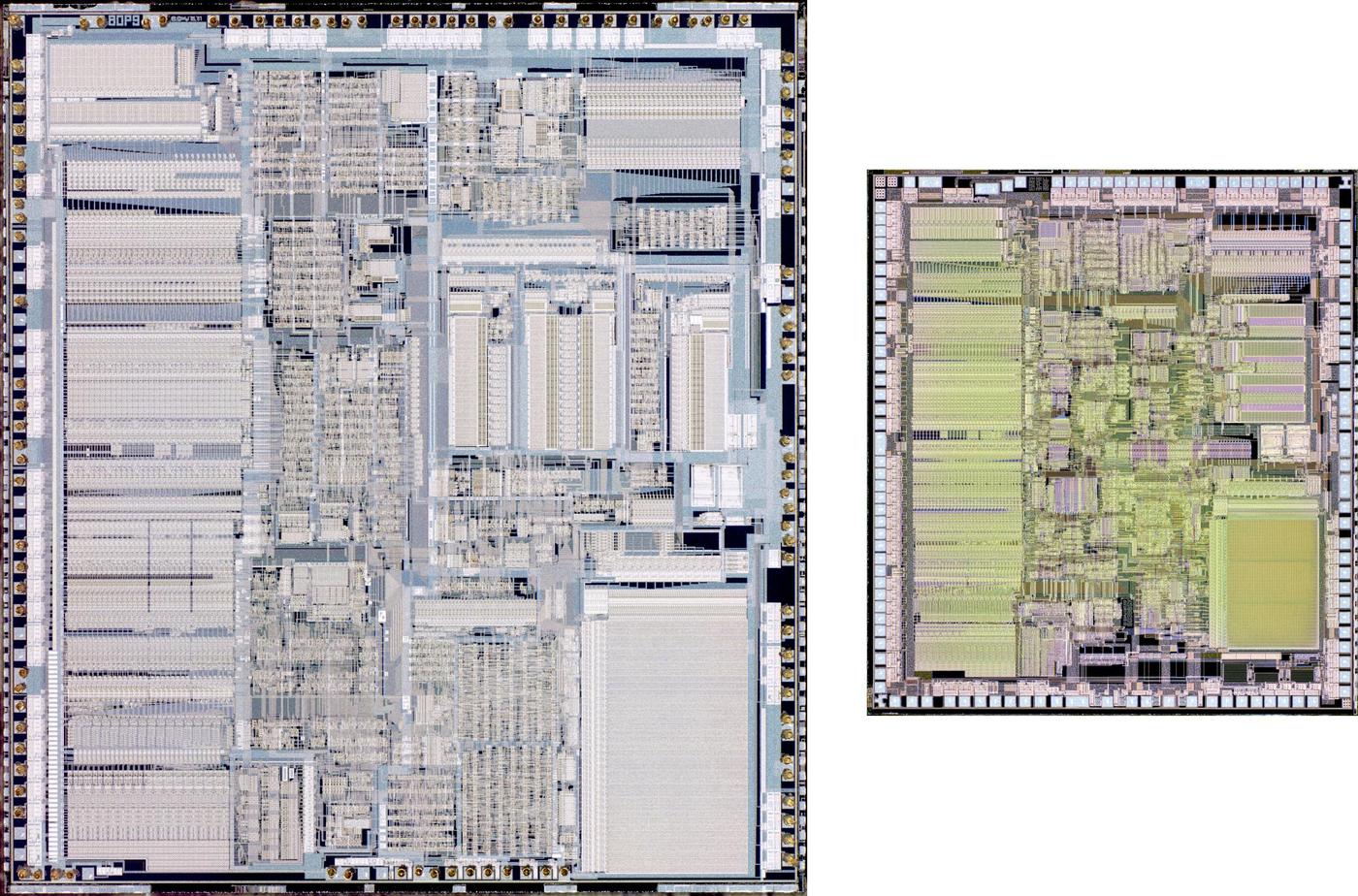

The 386 SX was built at both 1.5 µm and 1 µm. The diagram below compares the two sizes of the 386 SX die. These photos may look identical to the 386 DX photos in the previous section, but close examination shows a few differences. Since the 386 SX uses fewer pins, it has fewer bond pads, eliminating the staggered pads of the shrunk 386 DX. There are a few differences at the bottom of the chip, with wiring in much of the 386 DX's wasted space.

Comparing the two SX revisions, the larger die is labeled "80P9"; Intel's internal name for the chip was "P9", using their confusing series of P numbers. The shrunk die is labeled "80386SX", which makes more sense. The larger die is copyright 1985, 1987, while the shrunk die (which should be newer) is copyright 1985 for some reason. The larger die has mostly the same initials as the DX, with a few changes. The shrunk die has about 21 sets of initials.

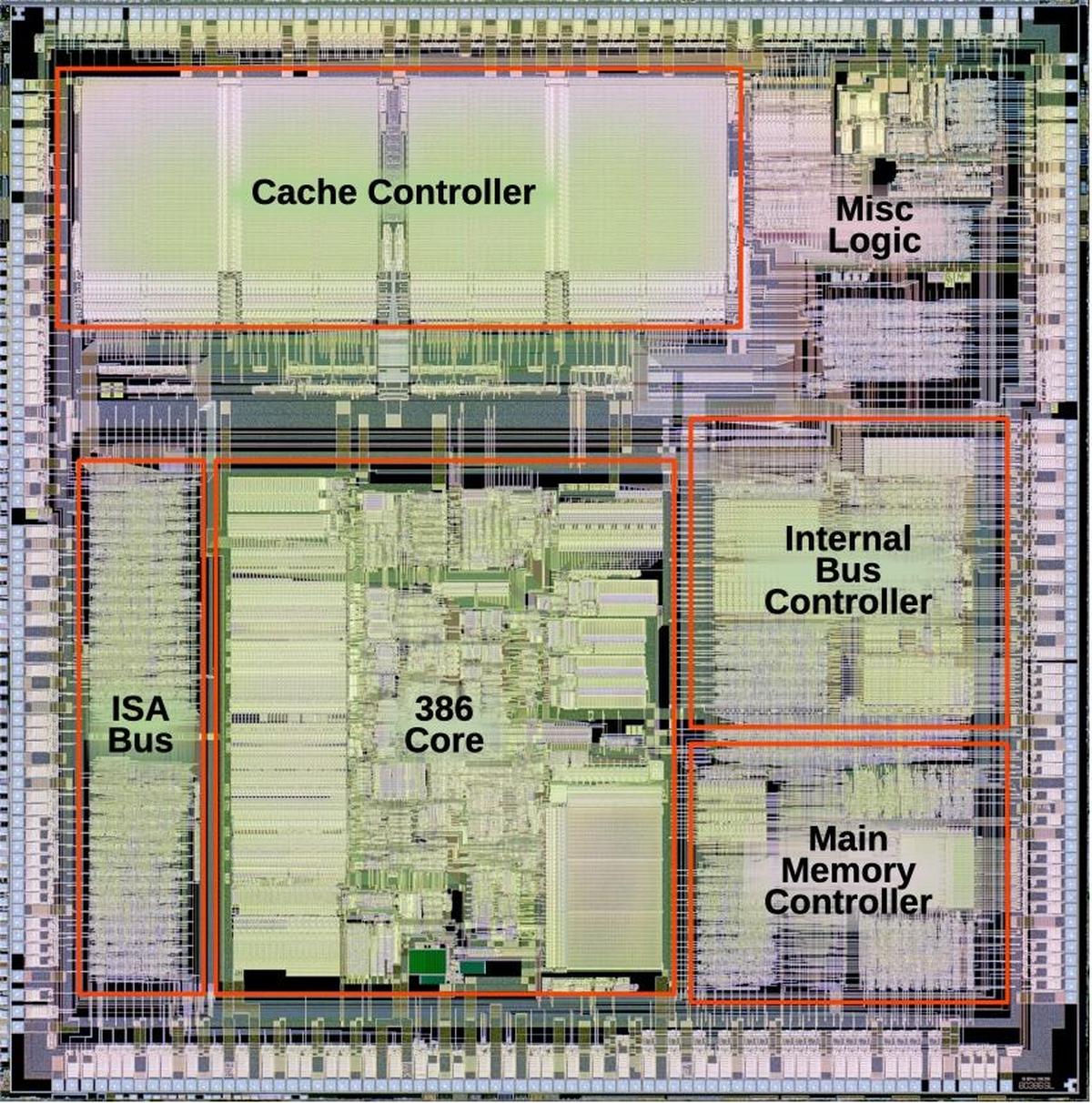

The 386 SL die

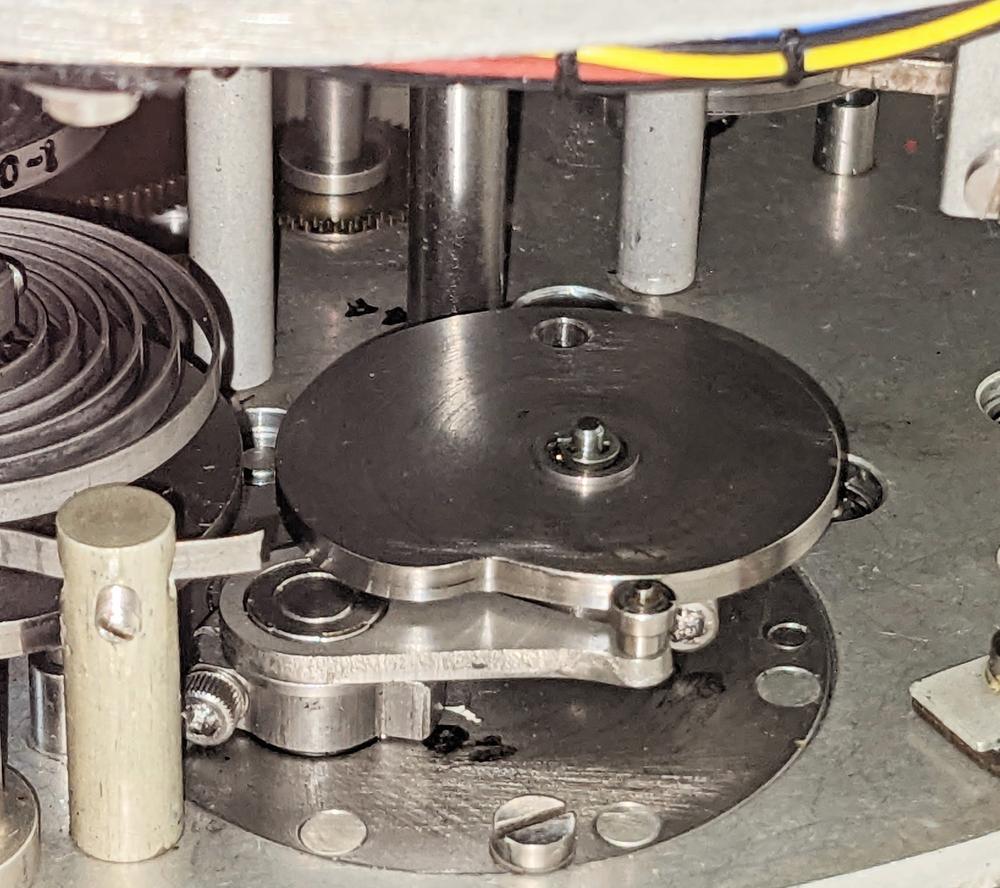

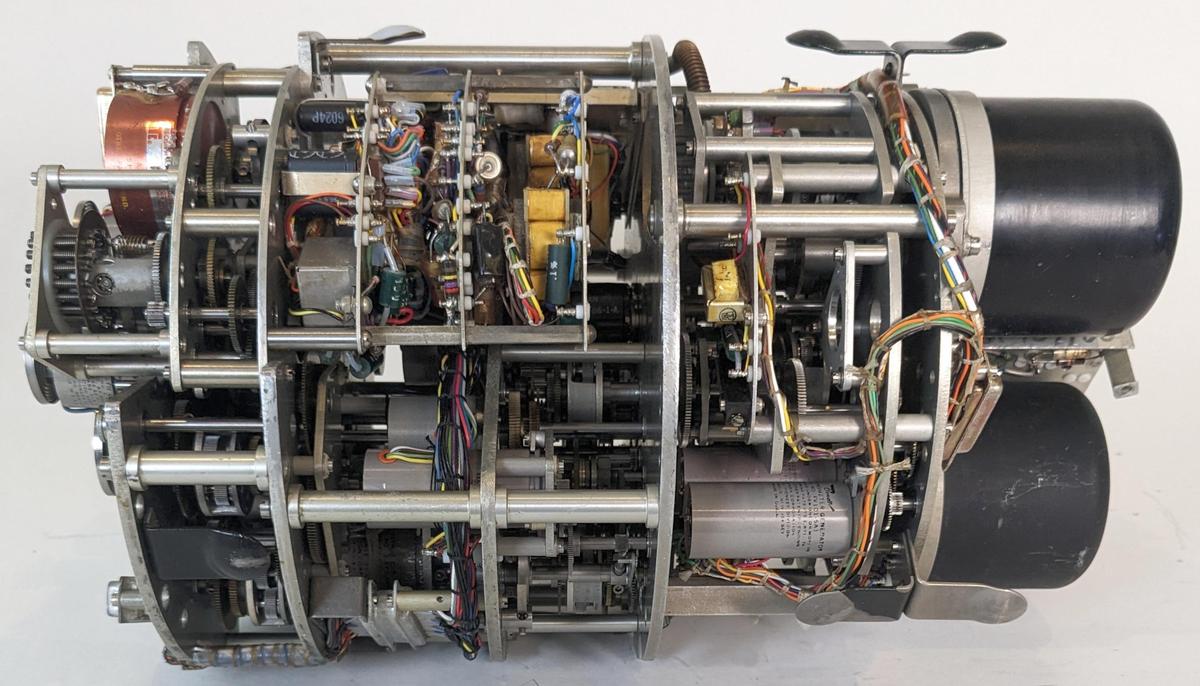

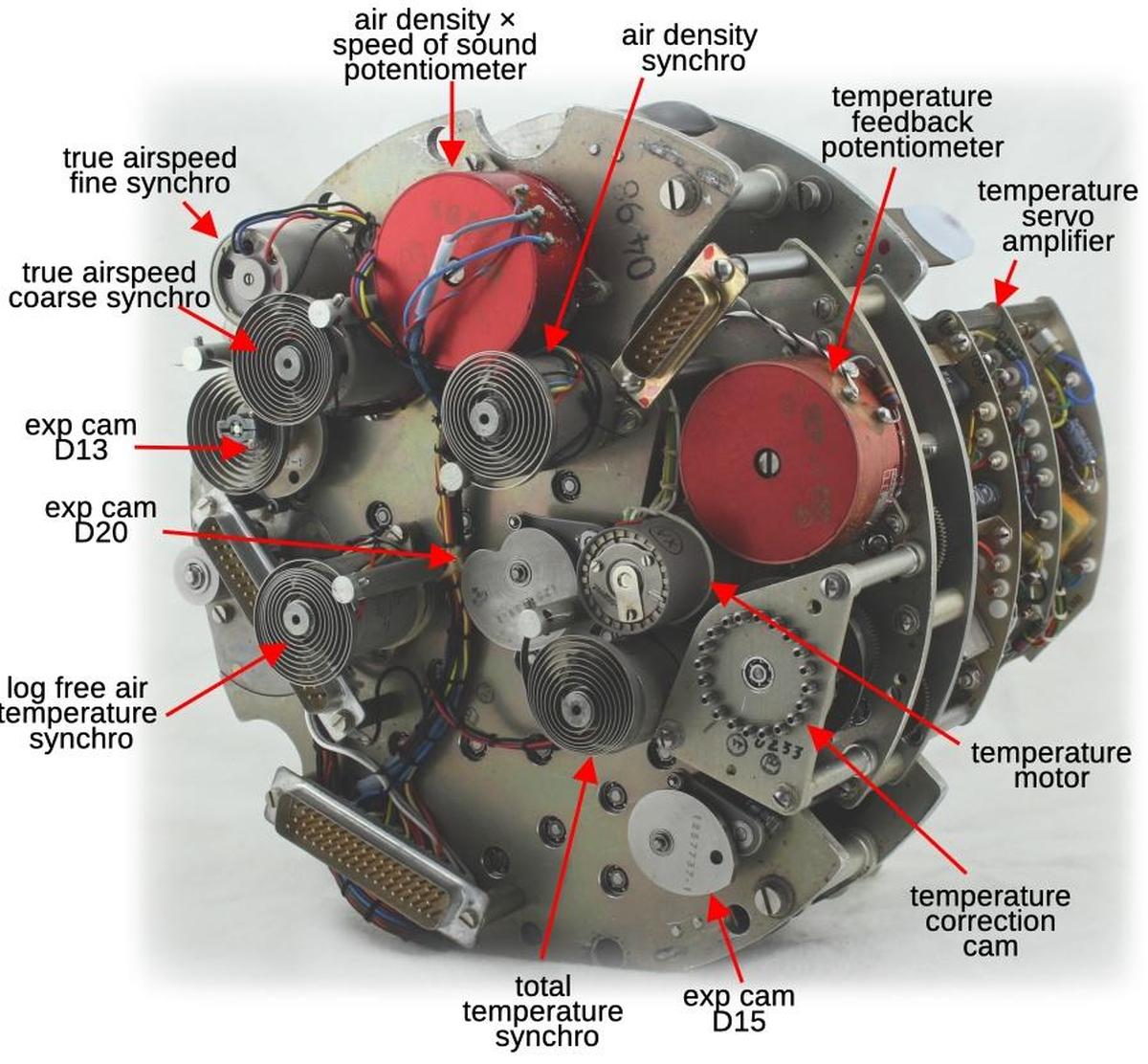

The 386 SL (1990) was a major extension to the 386, combining a 386 core and other functions on one chip to save power and space. Named "SuperSet", it was designed to corner the notebook PC market.14 The 386 SL chip included an ISA bus controller, power management logic, a cache controller for an external cache, and the main memory controller.

Looking at the die photo below, the 386 core itself takes up about 1/4 of the SL's die. The 386 core is very close to the standard 386 DX, but there are a few visible differences. Most visibly, the bond pads and pin drivers have been removed from the core. There are also some circuitry changes. For instance, the 386 SL core supports the System Management Mode, which suspends normal execution, allowing power management and other low-level hardware tasks to be performed outside the regular operating system. System Management Mode is now a standard part of the x86 line, but it was introduced in the 386 SL.

In total, the 386 SL contains 855,000 transistors,15 over 3 times as many as the regular 386 DX. The cache tag RAM takes up a lot of space and transistors. The cache data itself is external; this on-chip circuitry just manages the cache. The other new components are largely implemented with standard-cell logic (discussed below); this is visible as uniform stripes of circuitry, most clearly in the ISA bus controller.

A brief history of the 386

From the modern perspective, it seems obvious for Intel to extend the x86 line from the 286 to the 386, while keeping backward compatibility. But at the time, this path was anything but clear. This history starts in the late 1970s, when Intel decided to build a "micromainframe" processor, an advanced 32-bit processor for object-oriented programming that had objects, interprocess communication, and memory protection implemented in the CPU. This overly ambitious project fell behind schedule, so Intel created a stopgap processor to sell until the micromainframe processor was ready. This stopgap processor was the 16-bit 8086 processor (1978).

In 1981, IBM decided to use the Intel 8088 (an 8086 variant) in the IBM Personal Computer (PC), but Intel did not realize the importance of this at the time. Instead, Intel was focused on their micromainframe processor, also released in 1981 as the iAPX 432, but this became "one of the great disaster stories of modern computing" as the New York Times called it. Intel then reimplemented the ideas of the ill-fated iAPX 432 on top of a RISC architecture, creating the more successful i960.

Meanwhile, things weren't going well at first for the 286 processor, the follow-on to the 8086.16 Bill Gates and others called its design "brain-damaged". IBM was unenthusiastic about the 286 for their own reasons.17 As a result, the 386 project was a low priority for Intel and the 386 team felt that it was the "stepchild"; internally, the 386 was pitched as another stopgap, not Intel's "official" 32-bit processor.

Despite the lack of corporate enthusiasm, the 386 team came up with two proposals to extend the 286 to a 32-bit architecture. The first was a minimal approach to extend the existing registers and address space to 32 bits. The more ambitious proposal would add more registers and create a 32-bit instruction set that was significantly different from the 8086's 16-bit instruction set. At the time, the IBM PC was still relatively new, so the importance of the installed base of software wasn't obvious; software compatibility was viewed as a "nice to have" feature rather than essential. After much debate, the decision was made around the end of 1982 to go with the minimal proposal, but supporting both segments and flat addressing, while keeping compatibility with the 286.

By 1984, though, the PC industry was booming and the 286 was proving to be a success. This produced enormous political benefits for the 386 team, who saw the project change from "stepchild" to "king". Intel introduced the 386 in 1985, which was otherwise "a miserable year for Intel and the rest of the semiconductor industry," as Intel's annual report put it. Due to an industry-wide business slowdown, Intel's net income "essentially disappeared." Moreover, facing heavy competition from Japan, Intel dropped out of the DRAM business, a crushing blow for a company that got its start in the memory industry. Fortunately, the 386 would change everything.

Given IBM's success with the IBM PC, Intel was puzzled that IBM wasn't interested in the 386 processor, but IBM had a strategy of their own.18 By this time, the IBM PC was being cloned by many competitors, but IBM had a plan to regain control of the PC architecture and thus the market: in 1987, IBM introduced the PS/2 line. These new computers ran the OS/2 operating system instead of Windows and used the proprietary Micro Channel architecture.19 IBM used multiple engineering and legal strategies to make cloning the PS/2 slow, expensive, and risky, so IBM expected they could take back the market from the clones.

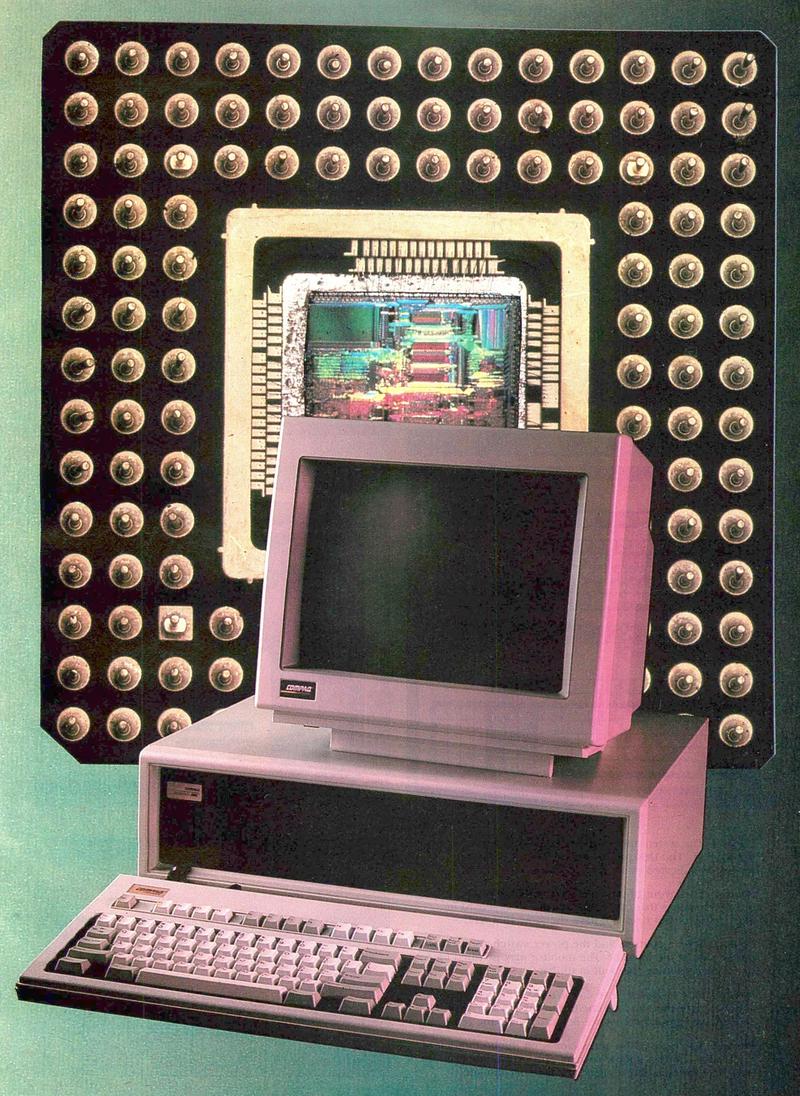

Compaq took the risky approach of ignoring IBM and following their own architectural direction.20 Compaq introduced the high-end Deskpro 386 line in September 1986, becoming the first major company to build 386-based computers. An "executive" system, the Deskpro 386 model 40 had a 40-megabyte hard drive and sold for $6449 (over $15,000 in current dollars). Compaq's gamble paid off and the Deskpro 386 was a rousing success.

As for IBM, the PS/2 line was largely unsuccessful and failed to become the standard. Rather than regaining control over the PC, "IBM lost control of the PC standard in 1987 when it introduced its PS/2 line of systems."21 IBM exited the PC market in 2004, selling the business to Lenovo. One slightly hyperbolic book title summed it up: "Compaq Ended IBM's PC Domination and Helped Invent Modern Computing". The 386 was a huge moneymaker for Intel, leading to Intel's first billion-dollar quarter in 1990. It cemented the importance of the x86 architecture, not just for Intel but for the entire computing industry, dominating the market up to the present day.

How the 386 was designed

The design process of the 386 is interesting because it illustrates Intel's migration to automated design systems and heavier use of simulation.23 At the time, Intel was behind the industry in its use of tools so the leaders of the 386 realized that more automation would be necessary to build a complex chip like the 386 on schedule. By making a large investment in automated tools, the 386 team completed the design ahead of schedule. Along with proprietary CAD tools, the team made heavy use of standard Unix tools such as sed, awk, grep, and make to manage the various design databases.

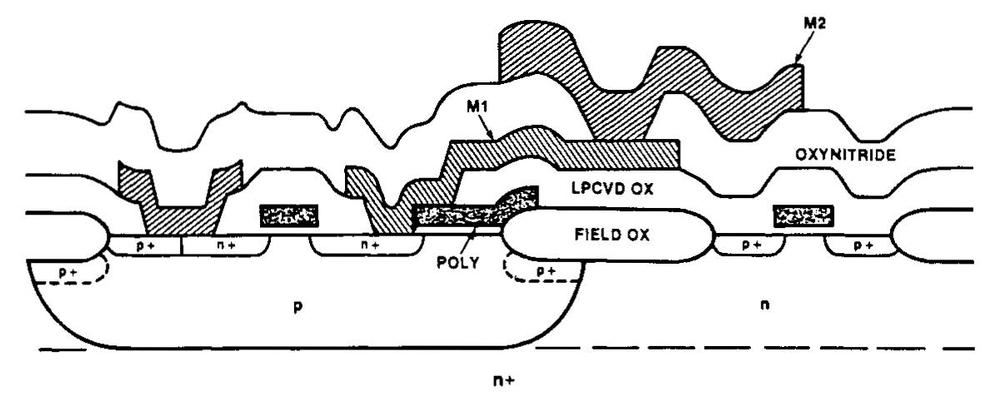

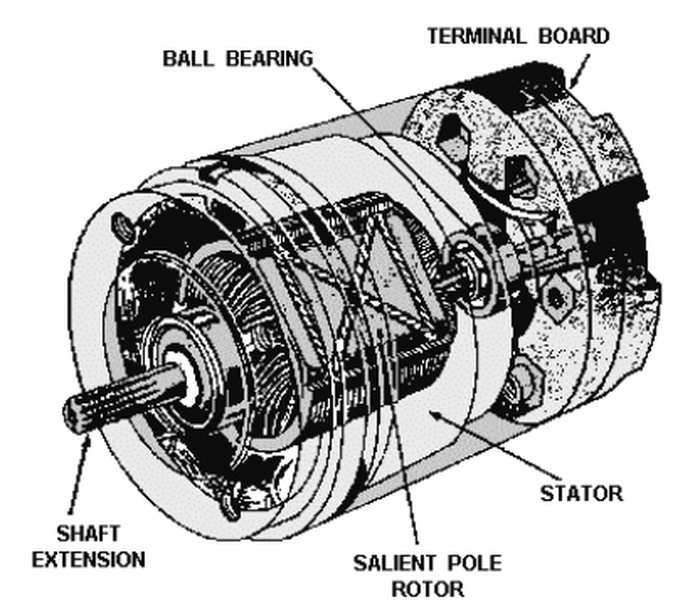

The 386 posed new design challenges compared to the previous 286 processor. The 386 was much more complex, with twice the transistors. But the 386 also used fundamentally different circuitry. While the 286 and earlier processors were built from NMOS transistors, the 386 moved to CMOS (the technology still used today). Intel's CMOS process was called CHMOS-III (complementary high-performance metal-oxide-silicon) and had a feature size of 1.5 µm. CHMOS-III was based on Intel's HMOS-III process (used for the 286), but extended to CMOS. Moreover, the CHMOS process provided two layers of metal instead of one, changing how signals were routed on the chip and requiring new design techniques.

The diagram below shows a cross-section through a CHMOS-III circuit, with an NMOS transistor on the left and a PMOS transistor on the right. Note the jagged three-dimensional topography that is formed as layers cross each other (unlike modern polished wafers). This resulted in the "forbidden gap" problem that caused difficulty for the 386 team. Specifically, the second-layer metal (M2) could be close to the first-layer metal (M1) or it could be far apart, but an in-between distance would cause problems: the forbidden gap. If the metal layers crossed in the "forbidden gap", the metal could crack and whiskers of metal would touch, causing the chip to fail. These problems reduced the yield of the 386.

The design of the 386 proceeded both top-down, starting with the architecture definition, and bottom-up, designing standard cells and other basic circuits at the transistor level. The processor's microcode, the software that controlled the chip, was a fundamental component. It was designed with two CAD tools: an assembler and microcode rule checker. The high-level design of the chip (register-level RTL) was created and refined until clock-by-clock and phase-by-phase timing were represented. The RTL was programmed in MAINSAIL, a portable Algol-like language based on SAIL (Stanford Artificial Intelligence Language). Intel used a proprietary simulator called Microsim to simulate the RTL, stating that full-chip RTL simulation was "the single most important simulation model of the 80386".

The next step was to convert this high-level design into a detailed logic design, specifying the gates and other circuitry using Eden, a proprietary schematics-capture system. Simulating the logic design required a dedicated IBM 3083 mainframe that compared it against the RTL simulations. Next, the circuit design phase created the transistor-level design. The chip layout was performed on Applicon and Eden graphics systems. The layout started with critical blocks such as the ALU and barrel shifter. To meet the performance requirements, the TLB (translation lookaside buffer) for the paging mechanism required a creative design, as did the binary adders.

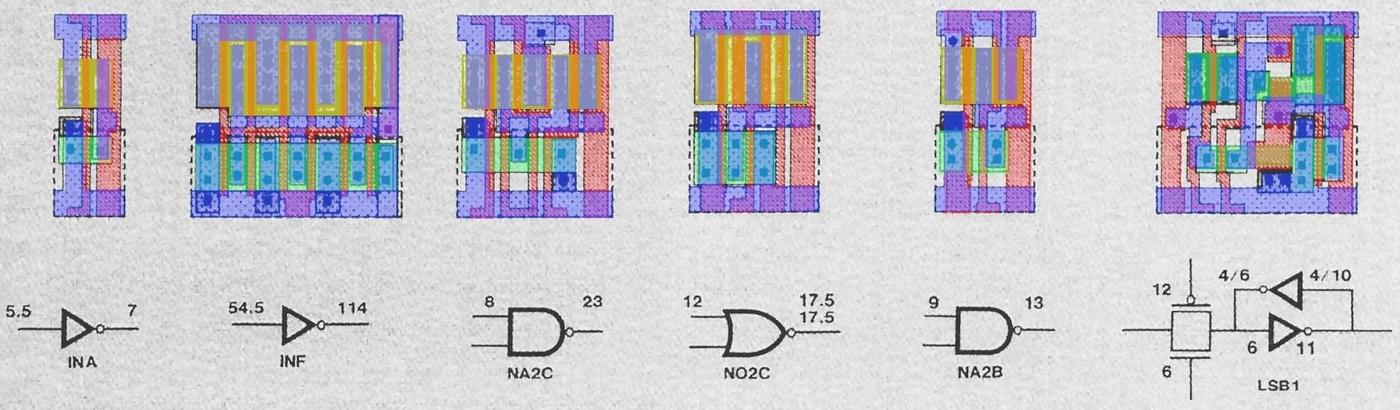

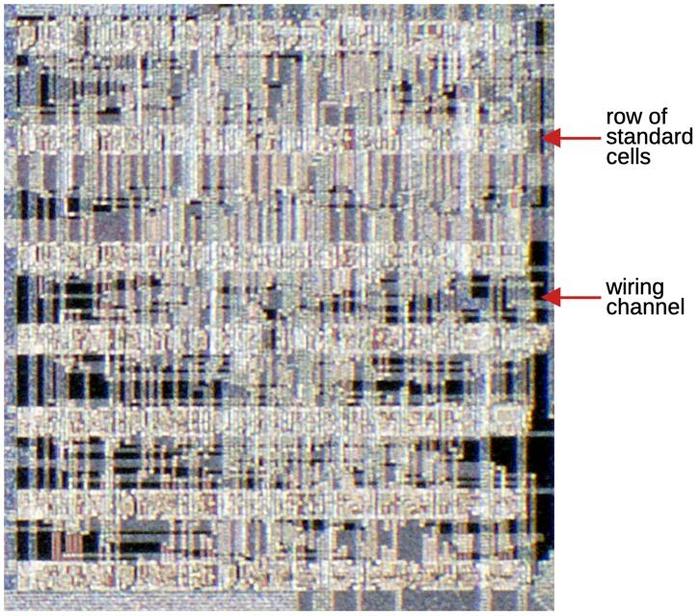

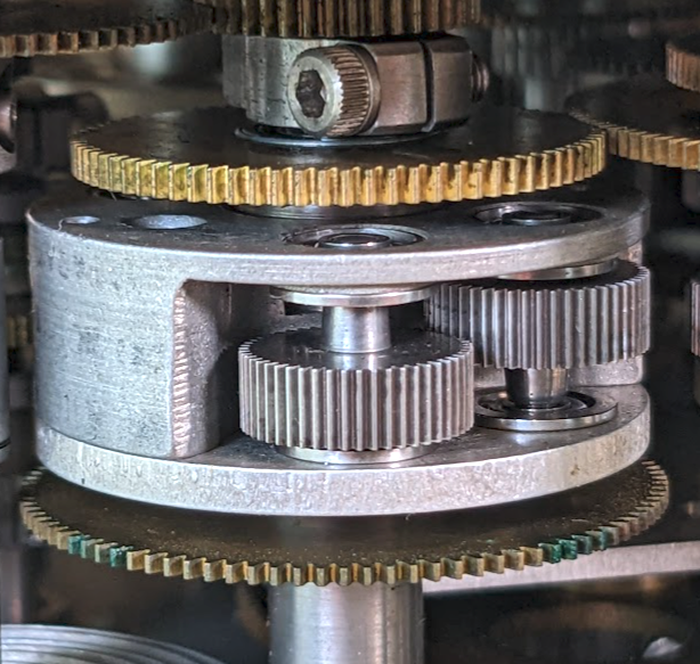

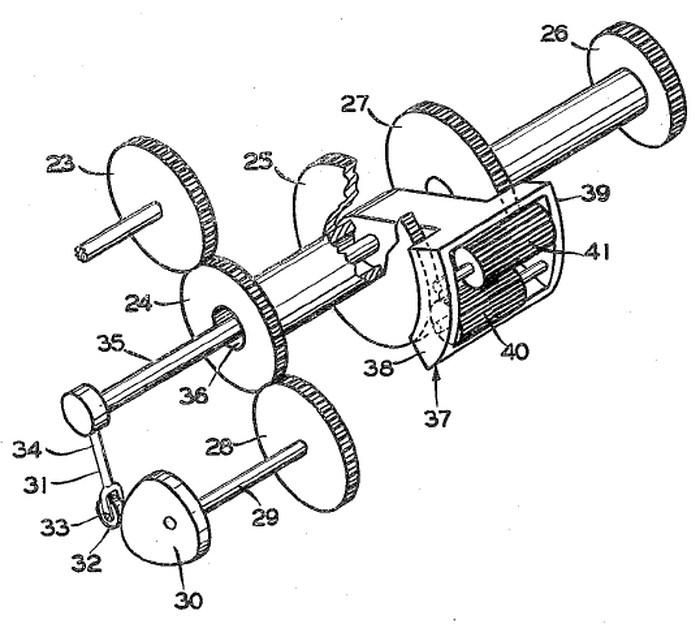

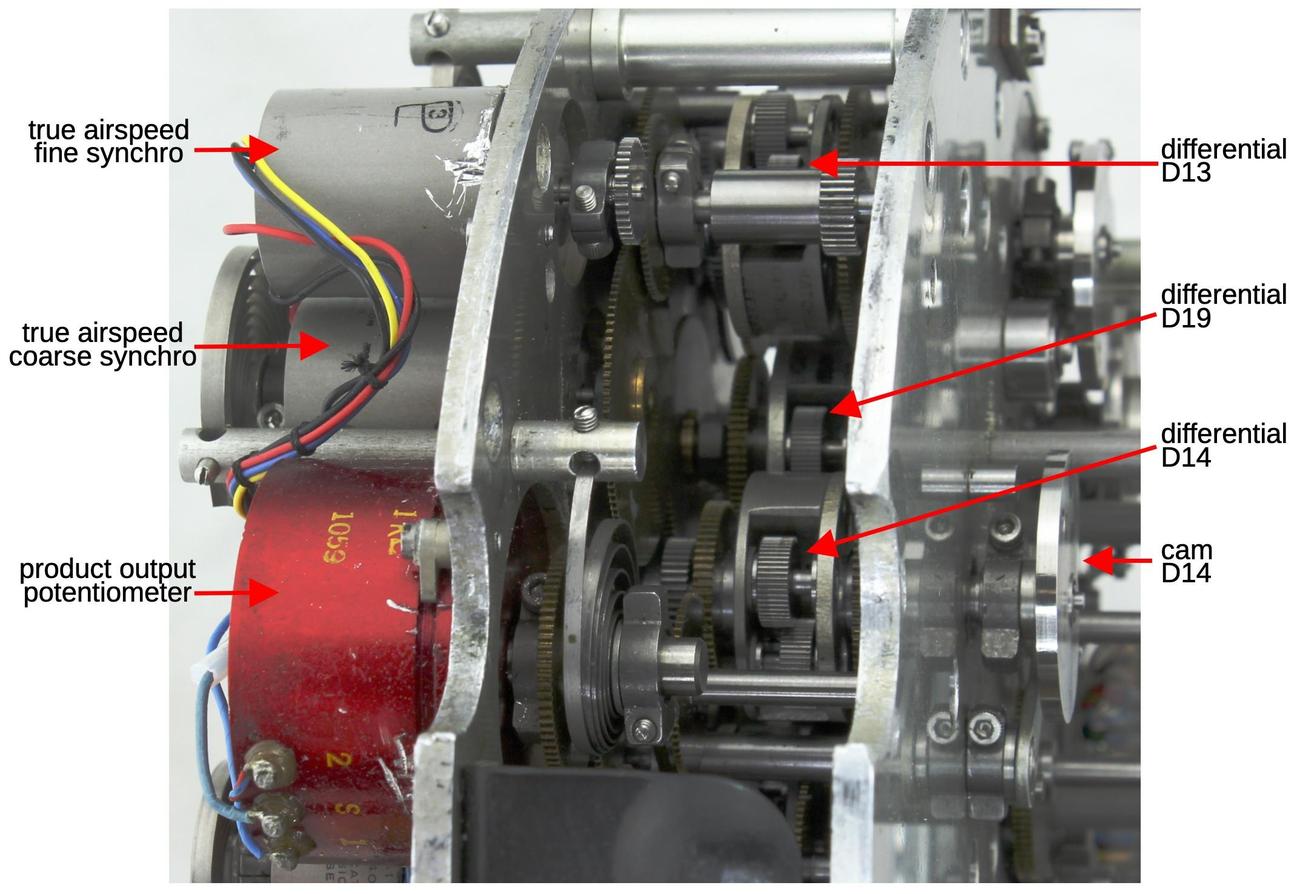

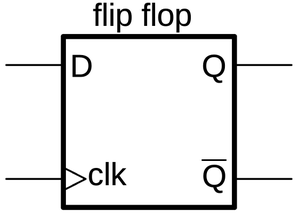

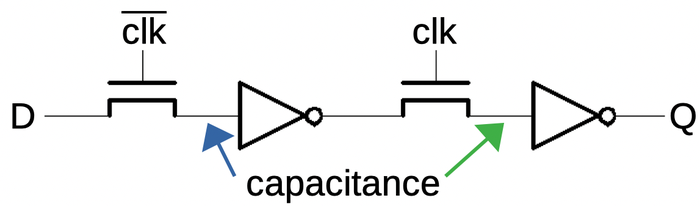

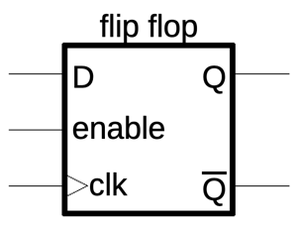

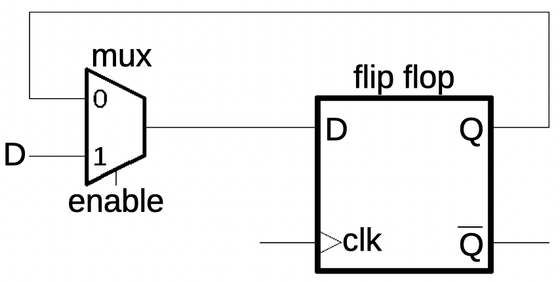

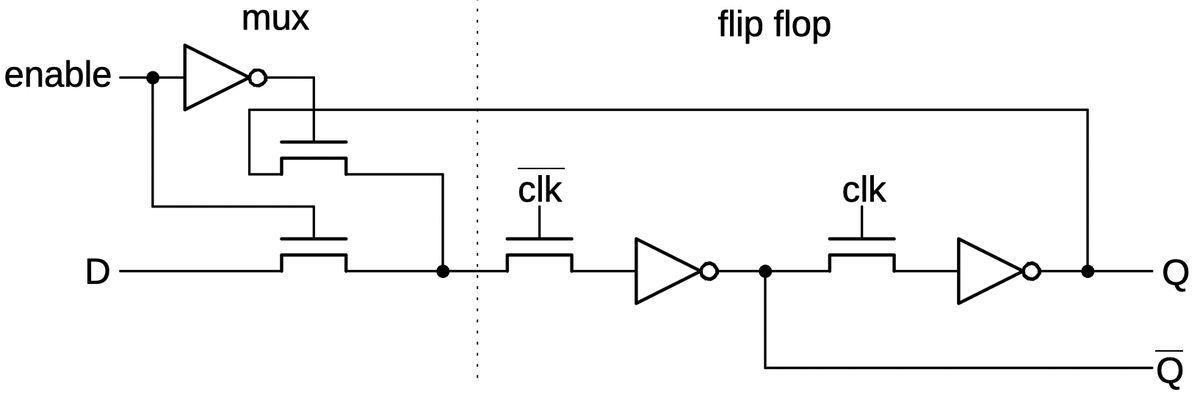

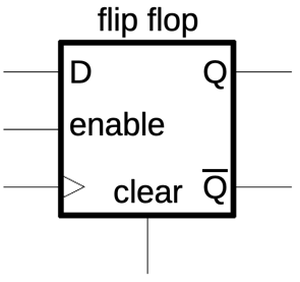

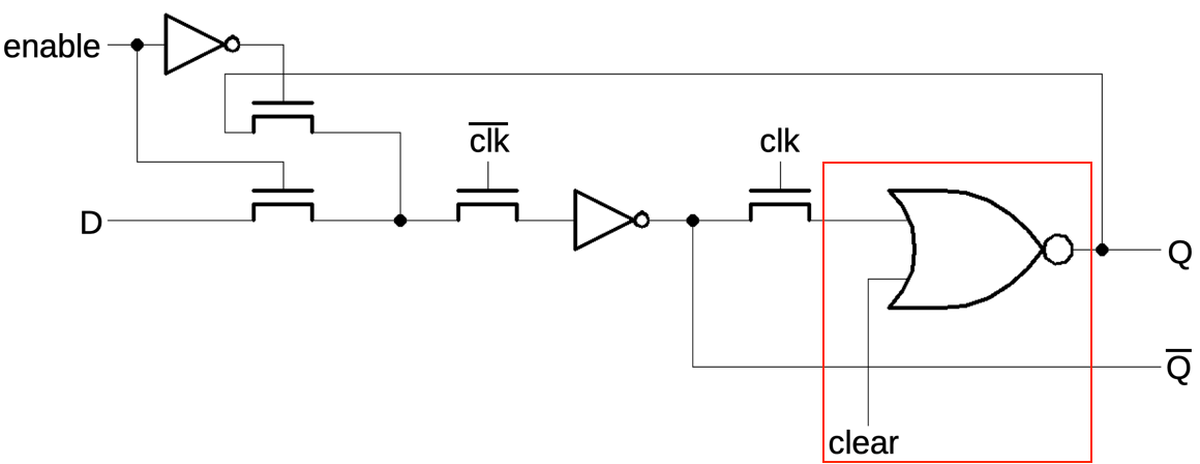

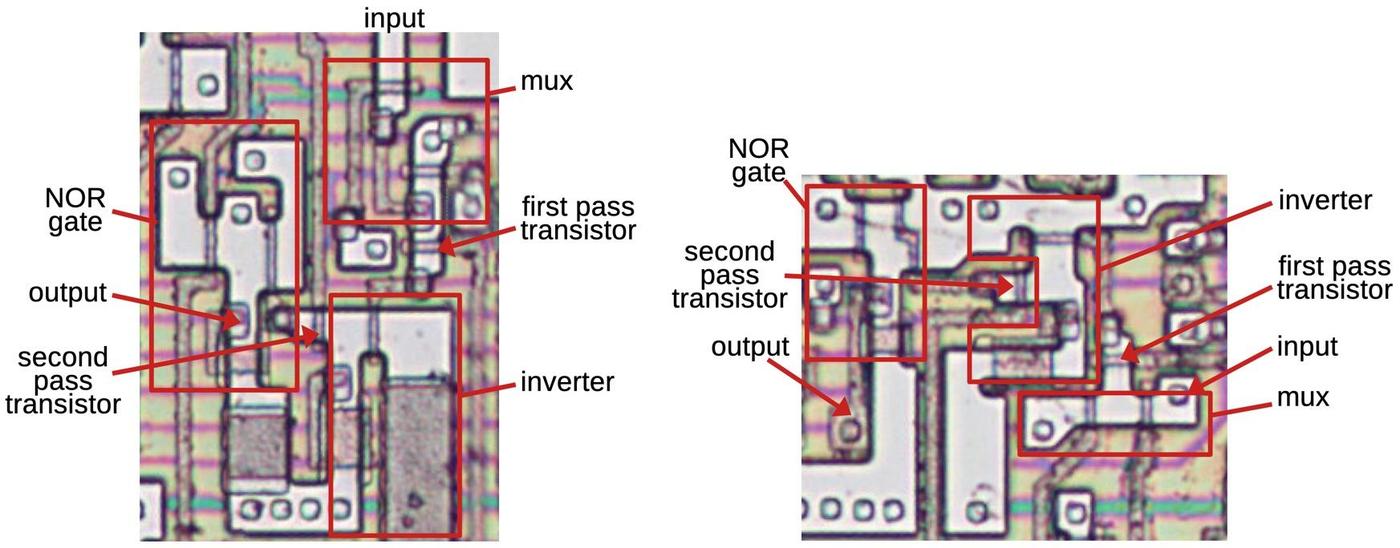

The "random" (unstructured) logic was implemented with standard cells, rather than the transistor-by-transistor design of earlier processors. The idea of standard cells is to have fixed blocks of circuitry (above) for logic gates, flip-flops, and other basic functions.24 These cells are arranged in rows by software to implement the specified logic description. The space between the rows is used as a wiring channel for connections between the cells. The disadvantage of a standard cell layout is that it generally takes up more space than an optimized hand-drawn layout, but it is much faster to create and easier to modify.

These standard cells are visible in the die as regular rows of circuitry. Intel used the TimberWolf automatic placement and routing package, which used simulated annealing to optimize the placement of cells. TimberWolf was built by a Berkeley grad student; one 386 engineer said, "If management had known that we were using a tool by some grad student as the key part of the methodology, they would never have let us use it." Automated layout was a new thing at Intel; using it improved the schedule, but the lower density raised the risk that the chip would be too large.
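To give a feel for placement by simulated annealing, here is a toy sketch. It is not TimberWolf's algorithm or cost function: it places cells in a single row, measures cost as the total span of each net, and proposes random pairwise swaps. The cell names, nets, and cooling schedule are all made up for illustration.

```python
import math
import random


def wirelength(order, nets):
    """Total span of all nets, with cells placed left to right in `order`."""
    position = {cell: i for i, cell in enumerate(order)}
    return sum(
        max(position[c] for c in net) - min(position[c] for c in net)
        for net in nets
    )


def anneal_placement(cells, nets, steps=5000, t_start=5.0, t_end=0.01, seed=0):
    """Toy simulated annealing: swap two cells, keep the swap if it helps,
    and sometimes keep it even if it hurts (more often at high temperature)."""
    rng = random.Random(seed)
    order = list(cells)
    cost = wirelength(order, nets)
    best, best_cost = order[:], cost
    for step in range(steps):
        # Geometric cooling from t_start down toward t_end (an assumed schedule).
        temperature = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]
        new_cost = wirelength(order, nets)
        delta = new_cost - cost
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = order[:], cost
        else:
            order[i], order[j] = order[j], order[i]  # reject: undo the swap
    return best, best_cost


# Hypothetical netlist: each tuple is a set of cells that must be wired together.
cells = list("ABCDEFGH")
nets = [("A", "H"), ("B", "G"), ("C", "F"), ("D", "E"), ("A", "E", "H")]
placement, cost = anneal_placement(cells, nets)
print("initial cost:", wirelength(cells, nets))
print("annealed cost:", cost, "placement:", "".join(placement))
```

Accepting some cost-increasing swaps early on is what lets the search escape poor local arrangements; a real placer also has to handle multiple rows, cell widths, and routing channel capacity.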

The data path consists of the registers, ALU (Arithmetic Logic Unit), barrel shifter, and multiply/divide unit that process the 32-bit data. Because the data path is critical to the performance of the system, it was laid out by hand using a CALMA system. The designers could optimize the layout, taking advantage of regularities in the circuitry, optimizing the shape and size of each transistor and fitting them together like puzzle pieces. The data path is visible on the left side of the die, forming orderly 32-bit-wide rectangles in contrast to the tangles of logic next to it.

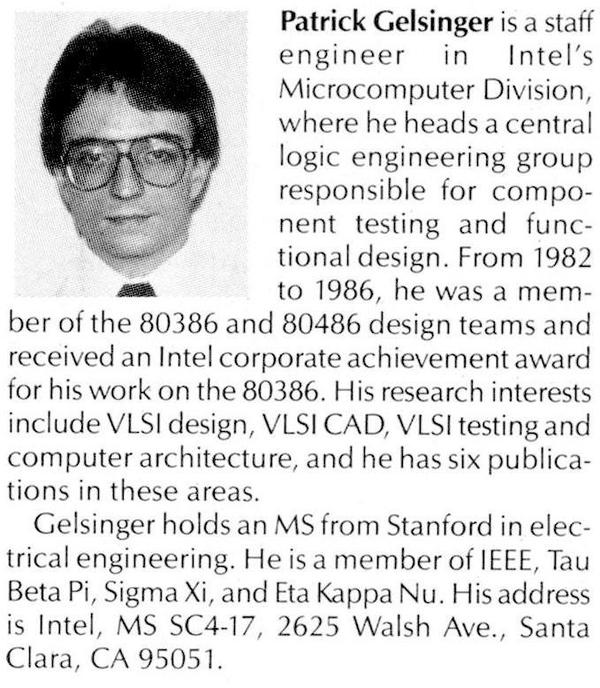

Once the transistor-level layout was complete, Intel's Hierarchical Connectivity Verification System checked that the final layout matched the schematics and adhered to the process design rules. The 386 set an Intel speed record, taking just 11 days from completing the layout to "tapeout", when the chip data is sent on magnetic tape to the mask fabrication company. (The tapeout team was led by Pat Gelsinger, who later became CEO of Intel.) After the glass masks were created using an electron-beam process, Intel's "Fab 3" in Livermore (the first to wear the bunnysuits) produced the 386 silicon wafers.

Chip designers like to claim that their chip worked the first time, but that was not the case for the 386. When the team received the first silicon for the 386, they ran a trivial do-nothing test program, "NoOp, NoOp, Halt", and it failed. Fortunately, they found a small fix to a PLA (Programmable Logic Array). Rather than create new masks, they were able to patch the existing mask with ion milling and get new wafers quickly. These wafers worked well enough that they could start the long cycles of debugging and fixing.

Once the processor was released, the problems weren't over.25 Some early 386 processors had a 32-bit multiply problem, where some arguments would unpredictably produce the wrong results under particular temperature/voltage/frequency conditions. (This is unrelated to the famous Pentium FDIV bug that cost Intel $475 million.) The root cause was a layout problem, not a logic problem; they didn't allow enough margin to handle the worst case data in combination with manufacturing process and environment factors. This tricky problem didn't show up in simulation or chip verification, but was only found in stress testing. Intel sold the faulty processors, but marked them as only valid for 16-bit software, while marking the good processors with a double sigma, as seen below.26 This led to embarrassing headlines such as Some 386 Systems Won't Run 32-Bit Software, Intel Says. The multiply bug also caused a shortage of 386 chips in 1987 and 1988 as Intel redesigned the chip to fix the bug. Overall, the 386 issues probably weren't any worse than other processors and the problems were soon forgotten.

Conclusions

The 386 processor was a key turning point for Intel. Intel's previous processors sold very well, but this was largely due to heavy marketing ("Operation Crush") and the good fortune to be selected for the IBM PC. Intel was technologically behind the competition, especially Motorola. Motorola had introduced the 68000 processor in 1979, starting a powerful line of (more-or-less) 32-bit processors. Intel, on the other hand, lagged with the "brain-damaged" 16-bit 286 processor in 1982. Intel was also slow with the transition to CMOS; Motorola had moved to CMOS in 1984 with the 68020.

The 386 provided the necessary technological boost for Intel, moving to a 32-bit architecture, transitioning to CMOS, and fixing the 286's memory model and multitasking limitations, while maintaining compatibility with the earlier x86 processors. The overwhelming success of the 386 solidified the dominance of the x86 and Intel, and put other processor manufacturers on the defensive. Compaq used the 386 to take over PC architecture leadership from IBM, leading to the success of Compaq, Dell, and other companies, while IBM eventually departed the PC market entirely. Thus, the 386 had an oversized effect on the computer industry, shaping the winners and losers for decades.

I plan to write more about the 386, so follow me on Twitter @kenshirriff or RSS for updates. I'm also on Mastodon occasionally as @[email protected]. Acknowledgements: The die photos are courtesy of Antoine Bercovici; you should follow him on Twitter as @Siliconinsid.27 Thanks to Pat Gelsinger and Roxanne Koester for providing helpful papers.

Notes and references

-

The 386 also changed the industry because Intel abandoned the standard practice of second sourcing (allowing other companies to manufacture a chip). AMD, for example, had been a second source for the 286. But Intel decided to keep production of the 386 to themselves. Intel ended up licensing the 386 to IBM, though, as the IBM 386SLC. Despite the name, this was the 386 SX, not the 386 SL. ↩

-

Intel made various keychains containing the 386 die, as shown at CPU World. If you know where to look, it is easy to distinguish the variants. In particular, look at the instruction decoders above the microcode and see if they are oriented vertically (pre-shrink 386) or horizontally (post-shrink 386). ↩

-

The naming of the 386 versions is a bit of a mess. The 386 started as the 80386 and later the i386. The 80386SX was introduced in 1988; this is the version with a 16-bit bus. The "regular" 386 was then renamed the DX to distinguish it from the SX. There are several other versions of the 386 that I won't discuss here, such as the EX, CXSB, and 80376. See Wikipedia for details.

Confusingly, the 486 also used the SX and DX names, but in a different way. The 486 DX was the original that included a floating-point unit, while floating-point was disabled in the 486 SX. Thus, in both cases "DX" was the full chip, while "SX" was the low-cost version, but the removed functionality was entirely different.

Another complication is that a 386DX chip will have a marking like "SX217", but this has nothing to do with the 386 SX. SX217 is an Intel S-Specification number, which specifies the particular stepping of the processor, indicating a manufacturing change or if a feature has been fixed or removed. ↩

-

Counting transistors isn't as straightforward as you might think. For example, a ROM may have a transistor for a 1 bit and no transistor for a 0 bit. Thus, the number of transistors depends on the data stored in the ROM. Likewise, a PLA has transistors present or absent in a grid, depending on the desired logic functions. For this reason, transistor counts are usually the number of "transistor sites", locations that could have a transistor, even if a transistor is not physically present. In the case of the 386, it has 285,000 transistor sites and 181,000 actual transistors (source), so over 100,000 reported transistors don't actually exist.

I'll also point out that most sources claim 275,000 transistors for the 386. My assumption is that 285,000 is the more accurate number (since this source distinguishes between transistor sites and physical transistors), while 275,000 is the rounded number. ↩

-

The 386's independent, pipelined functional units provide a significant performance improvement and the pipeline can be executing up to eight instructions at one time. For instance, the 386's microcode engine permits some overlap between the end of one instruction and the beginning of the next, an overlap that speeds up the processor by about 9%. But note that instructions are still executed sequentially, taking multiple clocks per instruction, so it is nothing like the superscalar execution introduced in the Pentium. ↩

-

The diagram of the 386 die shows eight functional units. It can be compared to the block diagram below, which shows how the units are interconnected.

Block diagram of the 386. From The Intel 80386—Architecture and Implementation.

-

My labeled die diagram combines information from two Intel papers: The Intel 80386—Architecture and Implementation and Design and Test of the 80386. The former paper describes the eight functional units. The latter paper provides more details, but only shows six functional units. (The Control Unit and Data Unit are combined into the Execution Unit, while the Protection Test Unit is dropped as an independent unit.) Interestingly, the second paper is by Patrick Gelsinger, who is now CEO of Intel. Pat Gelsinger also wrote "80386 Tapeout - Giving Birth to an Elephant", which says there are nine functional units. I don't know what the ninth unit is, maybe the substrate bias generator? In any case, the count of functional units is flexible.

Patrick Gelsinger's biography from his 80386 paper.

-

The 386 has a 16-byte prefetch queue, but apparently only 12 bytes are used due to a pipeline bug (details). ↩

-

Static checks for access violations are performed by the Protection Test Unit, while dynamic checks are performed by the Segment Unit and the Paging Unit. ↩

-

The 386 was originally supposed to have an on-chip cache, but there wasn't room and the cache was dropped in the middle of the project. As it was, the 386 die barely fit into the lithography machine's field of view. ↩

-

It kind of looks like the die has the initials ET next to a telephone. Could this be a reference to the movie E.T. and its catchphrase "E.T. phone home"? "SEC" must be senior mask designer Shirley Carter. "KF" is engineer Kelly Fitzpatrick. "PSR" is probably Paul S. Ries who designed the 386's paging unit. ↩

-

I think that Intel used a 6" (150mm) wafer for the 386. With a 10mm×10mm die, about 128 chips would fit on a wafer. But with a 6mm×6.5mm die, about 344 would fit on a wafer, over 2.5 times as many. (See Die per wafer estimator.) ↩

-

The 286 remained popular compared to the 386, probably due to its lower price. It wasn't until 1991 that the number of 386 units sold exceeded the 286 (source). Intel's revenue for the 386 was much, much higher than for the 286 though (source). ↩

-

The "SuperSet" consisted of the 386 SL along with the 82360SL peripheral I/O chip. The I/O chip contained various ISA bus peripherals, taking the place of multiple chips such as the 8259 that dated back to the 8080 processor. The I/O chip included DMA controllers, timers, interrupt controllers, a real time clock, serial ports, and a parallel port. It also had a hard disk interface, a floppy disk controller, and a keyboard controller. ↩

-

The 386 SL transistor count is from the Intel Microprocessor Quick Reference Guide, which contains information on most of Intel's processors. ↩

-

The 186 processor doesn't fit cleanly into the sequence of x86 processors. Specifically, the 186 is an incompatible side-branch, rather than something in the 286, 386, 486 sequence. The 186 was essentially an 8086 that included additional functionality (clock generator, interrupt controller, timers, etc.) to make it more suitable for an embedded system. The 186 was used in some personal computers, but it was incompatible with the IBM PC so it wasn't very popular. ↩

-

IBM didn't want to use the 286 because they were planning to reverse-engineer the 286 and make their own version, a 16-megahertz CMOS version. This was part of IBM's plan to regain control of the PC architecture with the PS/2. Intel told IBM that "the fastest path to a 16-megahertz CMOS 286 is the 386 because it is CMOS and 16-megahertz", but IBM continued on their own 286 path. Eventually, IBM gave up and used Intel's 286 in the PS/2. ↩

-

IBM might have been reluctant to support the 386 processor because of the risk of cutting into sales of IBM's mid-range 4300 mainframe line. An IBM 4381-2 system ran at about 3.3 MIPS and cost $500,000, about the same MIPS performance as a 386/16 system for under $10,000. The systems aren't directly comparable, of course, but many customers could use the 386 for a fraction of the price. IBM's sales of 4300 and other systems declined sharply in 1987, but the decline was blamed on DEC's VAX systems.

An IBM 4381 system. The 4381 processor is the large cabinet to the left of the terminals. The cabinets at the back are probably IBM 3380 disk drives. From an IBM 4381 brochure.

-

The most lasting influence of the PS/2 was the round purple and green keyboard and mouse ports that were used by most PCs until USB obsoleted them. The PS/2 ports are still available on some motherboards and gaming computers.

The PS/2 keyboard and mouse ports on the back of a Gateway PC.

-

When Compaq introduced their 386-based system, "they warned IBM that it has but six months to announce a similar machine or be supplanted as the market's standard setter." (source). Compaq turned out to be correct. ↩

-

The quote is from Computer Structure and Logic. ↩

-

Most of my section on the 386 design process is based on Design and Test of the 80386. The 386 oral history also provides information on the design process. The article Such a CAD! also describes Intel's CAD systems. Amusingly, I noticed that one of its figures (below) used a photo of the 386SL instead of the 386DX, with the result that the text is completely wrong. For instance, what it calls the microcode ROM is the cache tag RAM.

Erroneous description of the 386 layout. I put an X through it so nobody reuses it.

-

Intel has published a guide to their 1.5 micron CHMOS III cell library. I assume this is the same standard-cell library that was used for the logic in the 386. The library provided over 150 logic functions. It also provided cell-based versions of the Intel 80C51 microcontroller and various Intel support chips such as the 82C37A DMA controller, the 82C54 interval timer, and the 82C59 interrupt controller.

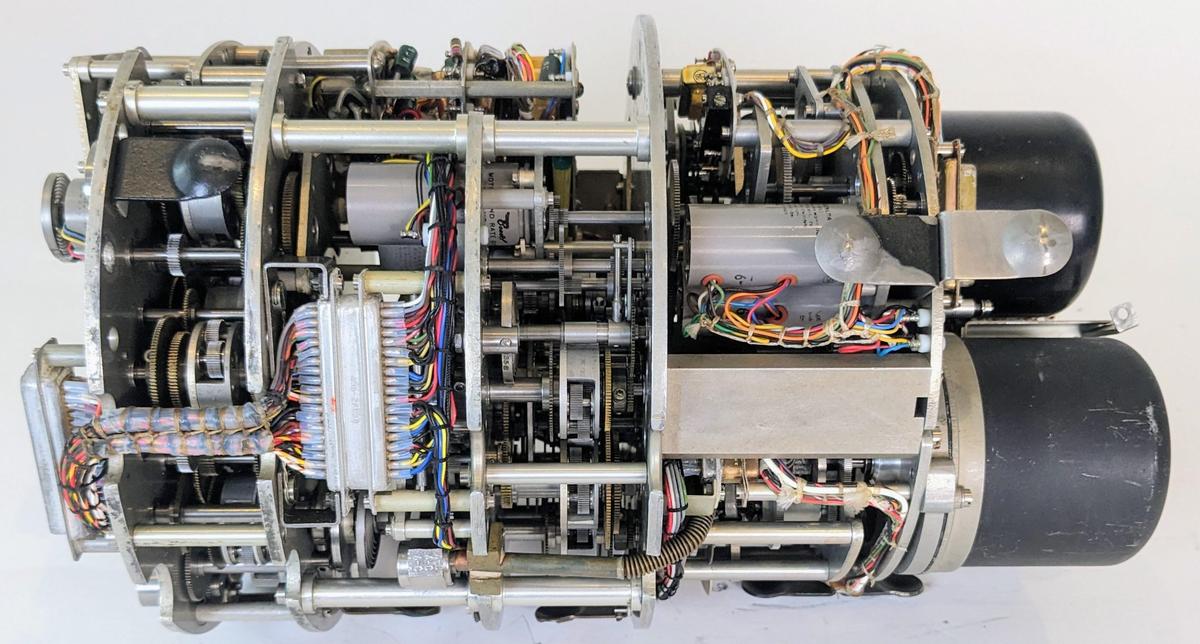

Die photo of the 82360SL ISA Peripheral I/O Chip, from the 386 SL Data Book.

Interestingly, the 386 SL's Peripheral I/O chip (the 82360SL) included the functionality of these support chips. Standard-cell construction is visible as the stripes in the die photo (above). Moreover, the layout of the die shows separated blocks, probably corresponding to each embedded chip. I expect that Intel designed standard-cell versions of the controller chips to embed in the I/O chip and then added the chips to the standard-cell library since they were available. ↩

-

For an example of the problems that could require a new stepping of the 386, see Intel backs off 80386 claims but denies chip recast needed (1986). It discusses multitasking issues with the 386, with Intel calling them "minor imperfections" that could cause "little glitches", while others suggested that the chip would need replacement. The bugs fixed in each stepping of the 386 are documented here. ↩

-

One curiosity about the 386 is the `IBTS` and `XBTS` instructions. The Insert Bit String and Extract Bit String instructions were implemented in the early 386 processors, but then removed in the B1 stepping. It's interesting that the bit string instructions were removed in the B1 stepping, the same stepping that fixed the 32-bit multiplication bug. Intel said that they were removed "in order to use the area of the chip previously occupied for other microcircuitry" (source). I wonder if Intel fixed the multiplication bug in microcode, and needed to discard the bit string operations to free up enough microcode space. Intel reused these opcodes in the 486 for the `CMPXCHG` instruction, but that caused conflicts with old 386 programs, so Intel changed the 486 opcodes in the B stepping. ↩

-

Since Antoine photographed many different 386 chips, I could correlate the S-Specs with the layout changes. I'll summarize the information here, in case anyone happens to want it. The larger DX layout is associated with SX213 and SX215. (Presumably the two are different, but nothing that I could see in the photographs.) The shrunk DX layout is associated with SX217, SX218, SX366, and SX544. The 386 SL image is SX621. ↩