Current target: 74LS189 16x4 static RAM, inverting, tri-state outputs. pic.twitter.com/rYdYvyCUxC

— Robert Baruch (@babbageboole) July 16, 2017

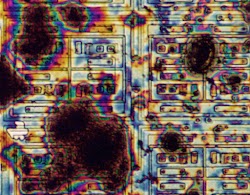

The die photo above is part of Project 54/74, an ambitious project to take die photos of every chip in the popular 7400 series of TTL chips (and the military-grade 5400 versions). The 74LS189 was an early RAM chip (1976) that held just 64 bits: sixteen 4-bit words. This photo interested me because I had recently written about Intel's first product, the 64-bit 3101 memory chip (1969). In my photo below of the 3101, you can see the 16 rows and 4 columns of memory cells forming a regular pattern that takes up most of the chip. The 74LS189 was an improved version of the 3101 RAM chip, so the two die photos should have been very similar. But the two photos were entirely different and the 74LS189 die didn't have 64 of anything. This just didn't make sense.

A closer examination of the chip brought more confusion. I usually start analyzing a chip by figuring out which of the pins are power, inputs, and outputs, and cross-referencing with the datasheet to find the function of each pin. The power and ground pins are easy to spot, since these are connected to thick metal traces that feed every part of the chip. Most 7400-series chips have the power and ground on diagonally-opposite corners of the chip.1 The die photo, however, shows the power and ground separated by just 5 positions. This immediately rules out the possibility that the chip is the advertised 74LS189, and makes it unlikely to be a 7400-series chip at all. In addition, the transistors all looked wrong. A chip in the 74LSxx series is built from bipolar transistors, which are fairly large and have a distinctive appearance. The transistors in the die photo looked like much smaller and simpler CMOS transistors.

The chip also contained a complex resistor network, not the simple resistors you'd expect on a TTL chip. The resistor network (along with the large, complex transistors next to it) led me to suspect that this chip had analog circuitry as well as digital logic. I thought it might be an analog-to-digital converter (ADC), but after looking at some ADC datasheets, I decided that wasn't the case. The chip had way too many inputs, for one thing.

The first big clue was when I studied the resistor network carefully. In the photo below, I've marked the resistors with light or dark blue lines. They are all exactly the same length, giving them the same resistance (R). Some were connected as pairs to get a resistance of exactly 2R. I noticed they were connected in a pattern of R-2R-R-2R-... which forms an R-2R resistor ladder network. This structure is used for digital-to-analog conversion (DAC): you feed bits into the network and you get out a voltage corresponding to the value. The chip had two of these ladders, forming two 4-bit digital-to-analog converters.
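To make the R-2R idea concrete, here is a minimal sketch that solves an idealized ladder by repeated Thevenin reduction. It assumes an unloaded, voltage-mode ladder with ideal switches and a normalized reference voltage; the function name and parameters are illustrative, and the real chip's driver transistors are not modeled.

```python
from fractions import Fraction

def r2r_output(bits_msb_first, vref=Fraction(1), r=Fraction(1)):
    """Unloaded output of an ideal R-2R ladder (assumed voltage-mode wiring).

    Each bit drives a 2R leg with either 0 or vref; the far end of the
    ladder is terminated with 2R to ground.
    """
    # Thevenin equivalent seen from the LSB node toward the terminator.
    v_th, r_th = Fraction(0), 2 * r
    v_node = Fraction(0)
    for bit in reversed(bits_msb_first):       # walk from LSB to MSB
        v_bit = vref * bit
        g = 1 / r_th + 1 / (2 * r)             # total conductance at the node
        v_node = (v_th / r_th + v_bit / (2 * r)) / g
        # Moving to the next node adds the series R.
        v_th, r_th = v_node, 1 / g + r
    return v_node

for code in (0, 1, 8, 15):
    bits = [(code >> i) & 1 for i in (3, 2, 1, 0)]
    print(code, r2r_output(bits))              # fraction of vref
```

Under these assumptions the output is simply the 4-bit code divided by 16, which is why the ladder works as a converter using only two resistor values.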

What values were going into the digital-to-analog converters? The middle of the die photo contained two small matrices, which I recognized as ROMs, each holding about 24 four-bit words. Perhaps the values in the ROMs were being fed to the DAC. Each row of the ROM had one section (on the right below) to decode 5 address bits, and a second section (on the left) to output the associated 4 data bits. Each data row has a transistor for 1 or no transistor for 0. The decoder is arranged in pairs with one transistor present out of each pair, either matching a 0 address or matching a 1 address. Thus, by looking at the chip, we can read the values in the ROMs.

Normally a ROM has sequential rows, so you can see the decoder counting in binary, but this decoder was different. Addresses in the ROM were arranged as 10011, 11001, 01100, ... Each address was generated by shifting the previous one to the right and adding a new bit on the left. E.g. 10011 -> 11001. This suggested the ROM addresses were generated by a linear-feedback shift register (LFSR) rather than a binary counter. The motivation is that a shift register takes up less space than a counter on the chip; if you don't need the counter to count in the normal order, this is a good tradeoff. There were a couple of strange things about the ROM: some addresses appeared to be missing and some addresses performed a sort of "wild card" match, but I'll ignore that for now. Also, the two ROMs were similar but not quite identical.
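The shift-and-insert behavior can be sketched in a few lines. The feedback taps below (XOR of bit 0 and bit 3) are an assumption, chosen only because they reproduce the two transitions quoted above; the chip's actual feedback logic was not read from the die for this sketch and may differ.

```python
def lfsr_step(state, width=5):
    """Shift right by one and insert a feedback bit on the left.

    ASSUMPTION: feedback = bit0 XOR bit3. This matches 10011 -> 11001 -> 01100
    but is not confirmed as the chip's real tap choice.
    """
    new_bit = (state ^ (state >> 3)) & 1
    return (state >> 1) | (new_bit << (width - 1))

def cycle_length(start):
    """Number of steps before the register returns to its starting state."""
    state, steps = lfsr_step(start), 1
    while state != start:
        state = lfsr_step(state)
        steps += 1
    return steps

state = 0b10011
for _ in range(3):
    print(format(state, "05b"))
    state = lfsr_step(state)
print(cycle_length(0b10011))   # length of the cycle through nonzero states
```

With these assumed taps the register visits every nonzero 5-bit state before repeating, while the all-zeros state maps to itself.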

Looking at the data in the ROM, I noticed the rightmost bit was present for a while, then absent, and finally present again, while the other bits jumped around. That suggested the rightmost bit was the high-order bit. I extracted the data, and after swapping a couple bits got the curve below, a somewhat distorted sine wave.

So, the mystery chip had two ROMs with sine-ish curves and two digital-to-analog converters. Clearly it's not a RAM chip, but what is it? I looked at function/waveform generator chips, but they didn't seem to match. Could it be a sound synthesis chip (like the 76477 or a Yamaha synthesizer chip)? They didn't seem to match the chip's characteristics either. Why would the chip have a bunch of inputs and an output with two sine wave channels? After puzzling for a long time, I thought of Touch-Tone phone dialing.

DTMF: dialing a Touch-Tone phone

Perhaps I should explain how Touch-Tone phones work. Technically known as Dual-Tone Multi-Frequency signaling (DTMF), Touch-Tone was introduced in 1963 to replace rotary-dial phones with push button dialing. Each button press generates two tones of specific frequencies, which indicate the pressed button to the telephone switching system. Specifically, there is one tone for each row on the keypad and one tone for each column, and a button generates the two corresponding tones.2
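As a small illustration of the row/column scheme, the sketch below maps a key to its two nominal tones. The frequencies are the official DTMF values; the keypad layout, including the A-D labels for the fourth column, is the conventional one rather than something taken from this chip, and the names are illustrative.

```python
ROW_HZ = (697, 770, 852, 941)          # one tone per keypad row
COL_HZ = (1209, 1336, 1477, 1633)      # one tone per keypad column
KEYPAD = ("123A", "456B", "789C", "*0#D")   # conventional layout (assumed)

def dtmf_tones(key):
    """Return the (row_hz, col_hz) pair for a single keypad character."""
    if len(key) != 1:
        raise ValueError("expected a single key")
    for row, keys in enumerate(KEYPAD):
        col = keys.find(key)
        if col >= 0:
            return ROW_HZ[row], COL_HZ[col]
    raise ValueError(f"not a DTMF key: {key!r}")

print(dtmf_tones("5"))   # second row, second column
```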

Mostek introduced the MK5085 Touch-Tone dialer chip in 1975.3 This chip revolutionized the construction of Touch-Tone phones: instead of using eight carefully-tuned, expensive oscillators, the phone could generate the tones with a cheap integrated circuit. The MK5085 was soon followed by a series of Mostek integrated dialer chips with slightly different functions4 as well as versions from other manufacturers.5

A quick web search found a Touch-Tone chip datasheet. The pinout of this chip matched the die photo with the power, input and output pins in the right places. The datasheet said the chip was metal-gate CMOS (not TTL), which matched the appearance of the die. Finally, the datasheet's block diagram matched the functional blocks I could see on the chip.

This was pretty conclusive: the mystery die was not a RAM chip but an entirely unrelated DTMF dialing chip. This 74LS189 chip was counterfeit; someone had relabeled the DTMF die as a Texas Instruments 74LS189 chip.

How the DTMF chip works

Now that I had identified the chip, I wanted to understand more about how it works. It turns out that it uses some interesting mathematics and circuitry to generate the tones. The chip needs to generate two tones of the right frequencies based on the 4 row inputs and 4 column inputs from the keypad. It generates these tones by starting with a 3.579545 MHz11 frequency and dividing it down to two lower frequency clocks. Each clock is used to step through the sine-wave lookup table in ROM, generating a sine wave of the desired frequency. Finally, the two sine waves are combined to produce the output.

By looking at the output frequencies listed in the datasheet, we can deduce what is happening internally. For instance, to generate the 1639.0 Hz tone, you can divide the 3.579545 MHz input by 2184. (Reducing a frequency by an integer factor is straightforward in hardware: count the input pulses and reset every time you reach 2184.) Similarly, the other output frequencies can be generated by dividing by integers 2408, 2688, 2968, 3808, 4200, 4648 and 5152. Dividing by numbers this large would require inconveniently large counters, but I noticed these numbers are all divisible by 56, yielding quotients 39, 43, 48, 53, 68, 75, 83 and 92. These smaller numbers are much more practical to divide by in hardware.
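The arithmetic in this step is easy to check. The sketch below takes the eight overall divisors, finds their common factor, and recomputes the tone each one produces from the crystal frequency; the variable names are illustrative.

```python
from functools import reduce
from math import gcd

CRYSTAL_HZ = 3_579_545
TOTAL_DIVISORS = [2184, 2408, 2688, 2968, 3808, 4200, 4648, 5152]

common = reduce(gcd, TOTAL_DIVISORS)                 # shared factor of all divisors
quotients = [d // common for d in TOTAL_DIVISORS]    # what is left per tone
tones_hz = [CRYSTAL_HZ / d for d in TOTAL_DIVISORS]

print(common, quotients)
for divisor, hz in zip(TOTAL_DIVISORS, tones_hz):
    print(f"{divisor}: {hz:.1f} Hz")
```

The common factor is what gets split between a fixed prescaler and the number of steps in the sine table, leaving only the small quotient to depend on the key pressed.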

This suggests a straightforward hardware implementation: divide the 3.579545 MHz clock by 2. Then divide by 68, 75, 83 or 92 (depending on the row input), using a 7-bit counter. Finally, iterate through a 28-word ROM to generate the sine wave, yielding the 28-step sine wave described in the datasheet. Similarly, the column frequencies can be generated by dividing by 39, 43, 48 or 53 (using a 6-bit counter) depending on the column input.

At this point, I had reverse-engineered how the chip operated. Or had I? A closer look at the chip revealed 5-bit and 6-bit counters, one bit too small for the necessary divisors. What was going on? How could the chip divide by 68 with a 6-bit counter?

The diagram below shows divider circuitry for the row output, showing the 6-bit shift-register counter. Also visible is the circuit to detect when the counter should be reset, based on which of the four keypad rows is selected.7 The column circuitry is similar, but with a 5-bit counter.

More investigation showed that multiple companies made pin-compatible DTMF chips, but they all generated slightly different frequencies.5 Although the chips seemed like clones, they were all implemented in different ways, dividing the input frequency differently, yielding outputs that were unique (but all within the phone system's tolerance). By repeating the mathematical analysis, I could reverse-engineer each manufacturer's implementation and figure out the divisors and ROM sizes. (Details in footnotes.10)

I found that the divisors for the MK5089 design would fit in the counters I saw on the chip. Specifically, it divides the input frequency by 4 and then divides row frequencies by 33, 36, 40 or 44 (values that fit in 6 bits) and the column frequencies by 17, 19, 21 or 23 (values that fit in 5 bits). The row output ROM has 29 values, while the column output ROM has 32 values. This nicely fit the counter sizes I saw on the die. It also explains why the two ROMs on the die are slightly different.8
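A quick way to sanity-check this design is to recompute the tones from the divisors and confirm the counter widths. The sketch below uses only the numbers given above; the names are illustrative, and `bit_length` is used as a simple stand-in for "fits in n bits".

```python
CRYSTAL_HZ = 3_579_545
PRESCALE = 4                        # fixed divide-by-4 stage
ROW_DIVISORS = (44, 40, 36, 33)
COL_DIVISORS = (23, 21, 19, 17)
ROW_POINTS, COL_POINTS = 29, 32     # sine-table entries per output cycle

def tone_hz(divisor, points):
    """Output frequency after the prescaler, the divider and the ROM walk."""
    return CRYSTAL_HZ / (PRESCALE * divisor * points)

row_hz = [tone_hz(d, ROW_POINTS) for d in ROW_DIVISORS]
col_hz = [tone_hz(d, COL_POINTS) for d in COL_DIVISORS]
row_bits = max(ROW_DIVISORS).bit_length()
col_bits = max(COL_DIVISORS).bit_length()

print([f"{hz:.1f}" for hz in row_hz], row_bits)
print([f"{hz:.1f}" for hz in col_hz], col_bits)
```

By the same measure, the largest divisors of the earlier guess (92 and 53) would need 7 and 6 bits, one more than the counters seen on the die.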

Understanding the silicon

I reverse-engineered parts of the chip by closely examining the silicon circuits, so I'll explain some of the silicon-level structures. The chip is built mostly from CMOS13, but the structures are a bit more complex than you see in textbooks. The basic idea of CMOS is it is built from MOS transistors, both PMOS and NMOS transistors connected in a Complementary way (thus the name CMOS). To oversimplify, an NMOS transistor turns on when the input is high, and can pull the output low. A PMOS transistor is opposite; it turns on when the input is low, and can pull the output high.

The diagram below shows the structure of a metal-gate MOS transistor. Electricity flows between the source and the drain, under control of the gate. The metal gate is separated from the silicon by an insulating oxide layer. (The Metal / Oxide / Silicon layers give it the name MOS.) For a PMOS transistor, the source and drain are P-type silicon while the base silicon is N-type. An NMOS transistor is opposite: the source and drain are N-type silicon while the base silicon is P-type.

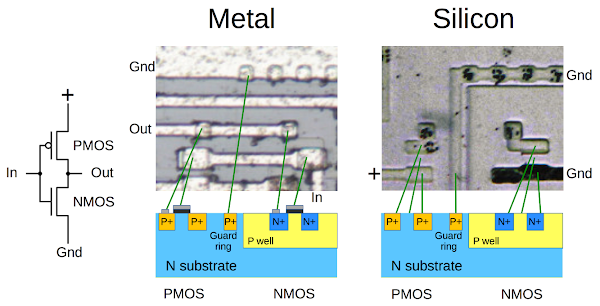

The diagram below shows a CMOS inverter on the chip, built from a PMOS transistor and an NMOS transistor. The first photo shows the metal layer. By dissolving the metal in acid, the silicon is revealed in the second photo. In combination, they reveal the inverter's structure, as shown in the cross-section diagram. You can see the metal gates for the PMOS and NMOS transistors, as well as the silicon regions for the source and drain.12 The black spots are contacts between the metal and silicon, where they are connected.

Note that the NMOS transistor must be embedded in P-type silicon. To achieve this, the transistor is placed in a "P well", a region of P-doped silicon. A grounded "guard ring" surrounds the P well to help isolate it. The chip contains multiple P wells, which typically hold multiple NMOS transistors.

Logic gates (NAND, NOR) are constructed by combining multiple transistors in a similar way (details). CMOS transistors can also be configured to pass or block a signal (details), a technique used to build the shift registers in the chip. These circuits are straightforward to recognize if you examine the chip closely, allowing the circuitry to be reverse engineered, for example the shift-register counter shown earlier.
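The oversimplified switch view of CMOS can be written down directly. This sketch treats each transistor as an ideal switch and uses the textbook arrangement (parallel pull-ups with series pull-downs for NAND, and the reverse for NOR); it is a generic model, not a transcription of the gates on this particular die.

```python
def nmos_on(gate):
    """An NMOS switch conducts when its gate is high."""
    return gate == 1

def pmos_on(gate):
    """A PMOS switch conducts when its gate is low."""
    return gate == 0

def resolve(pull_up, pull_down):
    """Output of a gate given whether each network conducts."""
    if pull_up and pull_down:
        raise ValueError("both networks conduct: short circuit")
    if not pull_up and not pull_down:
        raise ValueError("neither network conducts: floating output")
    return 1 if pull_up else 0

def inverter(a):
    return resolve(pmos_on(a), nmos_on(a))

def nand(a, b):
    # PMOS in parallel to the positive rail, NMOS in series to ground.
    return resolve(pmos_on(a) or pmos_on(b), nmos_on(a) and nmos_on(b))

def nor(a, b):
    # PMOS in series to the positive rail, NMOS in parallel to ground.
    return resolve(pmos_on(a) and pmos_on(b), nmos_on(a) or nmos_on(b))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, nand(a, b), nor(a, b))
```

Because the two networks are complementary, exactly one of them conducts for every input combination, so the error branches are never reached.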

The DTMF chip is both digital and analog. The diagram below shows the 4-bit digital-to-analog converter for the column tone. (This circuit is in the upper-left of the die; the similar row tone circuit is in the upper right.) The circuit takes 4 bits from the ROM, passes them through a buffer, and then four transistors drive the R-2R resistor ladder digital-to-analog converter that was discussed earlier. The resulting analog voltage forms the synthesized sine wave. Note that the transistors are scaled to provide the necessary current; the "8x" transistor is eight times the size of the "1x" transistor. The NMOS transistors are in a P-well, as described earlier.

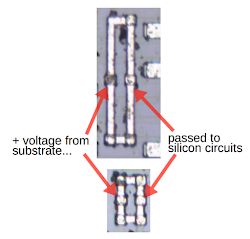

The die has some unusual structures, metal squares and larger loops that at first glance don't seem connected to anything. I've never seen these described before, so I'll explain what they are. They provide power and ground to parts of the circuit without direct wiring to the power or ground pins. Integrated circuits typically have extensive wiring in the metal layer to provide power and ground to all the circuits that need them. This chip, however, eliminates some of this wiring by using the substrate as a power connection and using the guard rings as ground connections. The photo below shows metal loops that provide a bridge between the positive substrate and a circuit that requires positive voltage.

The metal loops below provide a bridge between the negative guard ring and the circuitry that requires ground. As far as I can tell, there's no reason to make these links a loop rather than a straight connection.

Conclusion

As for Robert Baruch's purchase of the chip, he contacted the eBay seller who gave him a refund. The seller explained that the chip must have been damaged in shipping! (Clearly you should pack your chips carefully so they don't turn into something else entirely.)

Follow me on Twitter: @kenshirriff to find out about my latest blog posts. I also have an RSS feed.

Thanks to Robert Baruch for the die photos. His high-resolution photos are here and here.

Notes and references

-

A few unusual 7400-series chips (such as the 7473 flip flop) don't have the power and ground pins diagonally opposite, but in the middle. On the die, however, these pins are still symmetrically opposite. This simplifies routing of power and ground on the die. ↩

-

Touch-Tone keypads normally have four rows and three columns, but the system supports a fourth column. The fourth column is used for some special network purposes and requires a special keypad. ↩

-

The Touch-Tone chip was patented, which later led to a complex patent battle. ↩

-

Mostek later introduced a second generation of dialer chips with the MK5380. Instead of an R-2R D/A converter, it used a network of resistors with taps selected to generate the sinusoidal voltages. That is, instead of using a ROM to fit the sine curve to 16 uniform voltage steps, 16 unequal voltage levels were selected to fit the sine curve. This was described in patent 4,446,436. The datasheet for the NTE1690 chip says it uses a "resistive ladder network", which is probably the same thing. ↩

-

Many manufacturers made Touch-Tone chips that were compatible with the MK5089, often giving them similar part numbers. Some of them are TP5089, MV5089, UM95089, TCM5089, MK5089, and NTE1690 chips. While these DTMF chips seem interchangeable, surprisingly they use entirely different designs internally. Careful examination of the datasheets shows that they output slightly different frequencies. For instance, for the lowest tone the TP5089 has a frequency of 694.8 Hz, while the S2559 outputs 699.1 Hz and the NTE1690 outputs 701.3 Hz, all slightly off from the official 697 Hz. ↩

-

Touch-Tone keypads have an unusual internal structure. A standard calculator keypad has a grid of switches. In contrast, a Touch-Tone keypad has 8 switches (4 row, 4 column) and each button closes two switches (so it is known as 2-of-8). Thus, while a calculator normally scans the rows and reads the columns, the output of a Touch-Tone keypad can be read directly. Some DTMF chips include scanning circuitry so a calculator-style keypad can be used. ↩

-

Conceptually, the counter is reset when the appropriate value is reached. However, since it is implemented with a linear-feedback shift register, only the input bit can be changed, rather than resetting entirely. That is, the counter jumps ahead (by one bit flip) at the proper point so the number of counts is the desired value. Strictly speaking, this makes the counter a nonlinear-feedback shift register. ↩

-

My original readout of the ROM gave a distorted sine wave, but with further analysis I figured out the problem. I had noticed that the address patterns didn't always follow the shifted sequence from the LFSR. In addition, some addresses weren't fully decoded, in effect providing "wild card" addresses. Looking more closely, I realized that the wild card addresses would fill in the gaps in the sequence. The reason was that the ROM designers had used a shortcut to make the ROM smaller. For example, if address 00111 stored the value 13 and address 00011 also stored the value 13, these two rows in the ROM could be collapsed into one: decoding the address 00?11 to the value 13. (Strictly speaking, this makes it a PLA, not a ROM.) Essentially, the ROM could sometimes combine the same value on the ascending and descending parts of the sine wave. When I filled in the missing entries, the resulting sine waves looked much better. This also showed that the two ROMs held 29 and 32 entries (as required by the mathematics) and explained why the two ROMs were slightly different on the die. ↩
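The wild-card trick is essentially pattern matching on the address. The sketch below models only the single example row given above (00?11 decoding to 13); the function names and the one-entry table are illustrative and do not represent the chip's actual contents.

```python
def matches(pattern, address):
    """True if a decoder row pattern matches an address; '?' ignores a bit."""
    return len(pattern) == len(address) and all(
        p in ("?", a) for p, a in zip(pattern, address)
    )

# Only the example row from the text; a real table would have one entry per row.
PLA_ROWS = {"00?11": 13}

def lookup(address):
    """Return the value of the first matching row, or None if nothing matches."""
    for pattern, value in PLA_ROWS.items():
        if matches(pattern, address):
            return value
    return None

print(lookup("00111"), lookup("00011"), lookup("10011"))
```

One partially decoded row answers for both addresses, which is how two ROM rows collapse into one.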

-

You might know that a LFSR will get stuck on all-zeros, so it can only use 2^n-1 of the possible 2^n values. So how can the chip's 5-bit LFSR access all 32 entries in the ROM? The solution is that it's a non-linear feedback shift register (NLFSR), slightly more complicated than a LFSR. In particular, there is a row in the PLA that detects the all-zero entry and keeps the sequence from getting stuck there (as would happen on a LFSR). ↩

-

Each DTMF chip's datasheet lists slightly different output frequencies. By factoring these frequencies, I could reverse-engineer the internal design of the chip—the divisors it used and the ROM sizes. The table below gives these values for four different chip designs. Each output frequency is generated by dividing the crystal frequency (3.579545 MHz) by the scale factor, the appropriate divisor, and the points per cycle. Note that the output frequencies are all close to the correct frequencies, but not an exact match.

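| Chip | Row 1 | Row 2 | Row 3 | Row 4 | Column 1 | Column 2 | Column 3 | Column 4 | Points per cycle | Scale factor |
|---|---|---|---|---|---|---|---|---|---|---|
| TP5089 | 92 (694.8 Hz) | 83 (770.1 Hz) | 75 (852.3 Hz) | 68 (940.0 Hz) | 53 (1206.0 Hz) | 48 (1331.7 Hz) | 43 (1486.5 Hz) | 39 (1639.0 Hz) | 28 | 2 |
| S2559 | 80 (699.1 Hz) | 73 (766.2 Hz) | 66 (847.4 Hz) | 59 (948.0 Hz) | 46 (1215.9 Hz) | 42 (1331.7 Hz) | 38 (1471.9 Hz) | 34 (1645.0 Hz) | 32 | 2 |
| MK5089, MV5089 | 44 (701.3 Hz) | 40 (771.5 Hz) | 36 (857.2 Hz) | 33 (935.1 Hz) | 23 (1215.9 Hz) | 21 (1331.7 Hz) | 19 (1471.9 Hz) | 17 (1645.0 Hz) | 29 (row), 32 (col) | 4 |
| UM95089 | 80 (699.1 Hz) | 73 (766.2 Hz) | 66 (847.4 Hz) | 59 (948.0 Hz) | 46 (1215.9 Hz) | 42 (1331.7 Hz) | 38 (1471.9 Hz) | 34 (1645.0 Hz) | 16 | 4 |
| Correct frequency | 697 Hz | 770 Hz | 852 Hz | 941 Hz | 1209 Hz | 1336 Hz | 1477 Hz | 1633 Hz | | |

Each row and column cell gives the divisor followed by the resulting output frequency.

↩

-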

You might wonder why they picked 3.579545 MHz for the crystal, as that seems like a strange frequency. That's the NTSC colorburst frequency, used by color televisions for complex technical reasons. Since the crystals were made by the millions for color televisions, they were inexpensive and easy to obtain. ↩

-

In the die photo, the source of an NMOS transistor connected to ground is much darker. I assume this is due to a different doping level, perhaps to pull the P well to ground. ↩

-

While most of the circuitry in the chip is CMOS, other parts use NMOS or PMOS logic to simplify the circuitry. For instance, the ROMs have NMOS transistors for the address decode and PMOS for the data storage. Another example is the circuitry to detect multiple button presses. For the four row buttons, there are six double-press combinations which are detected by an AND-OR-INVERT gate with 6 AND gates. This is built as a single complex NMOS gate, with a pull-up resistor. The diagram below shows how it is structured. (A similar circuit checks the column inputs for double presses.)

The circuitry to detect multiple button presses is built from NMOS, not CMOS.

-

Two interesting articles about finding counterfeit semiconductors come from SparkFun and Bunnie Studios. For articles on counterfeiting, see this and this. ↩